Anthropic summarizes simple and effective ways to build agents

Over the past year, we've worked with teams building Large Language Model (LLM) agents across multiple industries. Consistently, we've found that the most successful implementations don't use complex frameworks or specialized libraries, but are built with simple, composable patterns.

In this post, we'll share what we've learned from working with customers and building agents ourselves, and give developers practical advice on building effective agents.

In the following text, "intelligent agent" and "agent" mean the same thing.

What is an agent?

"Agent" can be defined in several ways. Some customers define agents as fully autonomous systems that operate independently over extended periods, using various tools to accomplish complex tasks. Others use the term to describe more prescriptive implementations that follow predefined workflows. At Anthropic, we categorize all of these variations as agentic systems, but we draw an important architectural distinction between workflows and agents:

- Workflows are systems where LLMs and tools are orchestrated through predefined code paths.

- Agents are systems where the LLM dynamically directs its own processes and tool usage, maintaining control over how it accomplishes tasks.

Below we explore both types of agentic systems in more detail. In Appendix 1 ("Agents in Practice"), we describe two domains where customers have found particular value in using these systems.

When (and when not) to use agents

When building applications with LLMs, we recommend finding the simplest solution possible and adding complexity only when needed. This may mean not building an agentic system at all. Agentic systems often trade latency and cost for better task performance, and you should consider when this tradeoff makes sense.

When more complexity is warranted, workflows offer predictability and consistency for well-defined tasks, whereas agents are the better option when flexibility and model-driven decision-making are needed at scale. For many applications, however, optimizing a single LLM call with retrieval and in-context examples is usually enough.
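As a rough illustration of what a single LLM call with retrieval and in-context examples can look like, the sketch below assembles one prompt from a few retrieved passages and a couple of worked examples. Everything in it is an assumption made for illustration: `retrieve` is a toy word-overlap ranker rather than a real retrieval system, and `call_llm` stands in for whichever LLM API you use.

```python
from typing import Callable


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Toy retrieval: rank documents by the number of words shared with the query."""
    words = set(query.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, documents: list[str], examples: list[tuple[str, str]]) -> str:
    """Combine retrieved context and in-context examples into one prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    shots = "\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return f"Context:\n{context}\n\nExamples:\n{shots}\n\nQ: {question}\nA:"


def answer(question: str, documents: list[str], examples: list[tuple[str, str]],
           call_llm: Callable[[str], str]) -> str:
    # One call, no orchestration: the prompt carries everything the model needs.
    return call_llm(build_prompt(question, documents, examples))
```

If a setup like this already meets your quality bar, the extra latency and cost of a workflow or agent may not be justified.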

When and how to use frameworks

There are many frameworks that make agentic systems easier to implement, including:

- LangGraph from LangChain;

- Amazon Bedrock's AI Agent framework;

- Rivet, a drag-and-drop GUI LLM workflow builder;

- Vellum, another GUI tool for building and testing complex workflows.

These frameworks make it easy to get started by simplifying standard low-level tasks such as calling LLMs, defining and parsing tools, and chaining calls together. However, they often add extra layers of abstraction that can obscure the underlying prompts and responses, making them harder to debug. They can also make it tempting to add complexity when a simpler setup would suffice.

We recommend that developers start by using LLM APIs directly: many patterns can be implemented in a few lines of code. If you do use a framework, make sure you understand the underlying code. Incorrect assumptions about what happens under the hood are a common source of customer error.

See our cookbook for some sample implementations.

Building blocks, workflows and agents

In this section, we explore the common patterns for agentic systems that we see in production. We start with the foundational building block, the augmented LLM, and progressively increase complexity, from simple compositional workflows to autonomous agents.

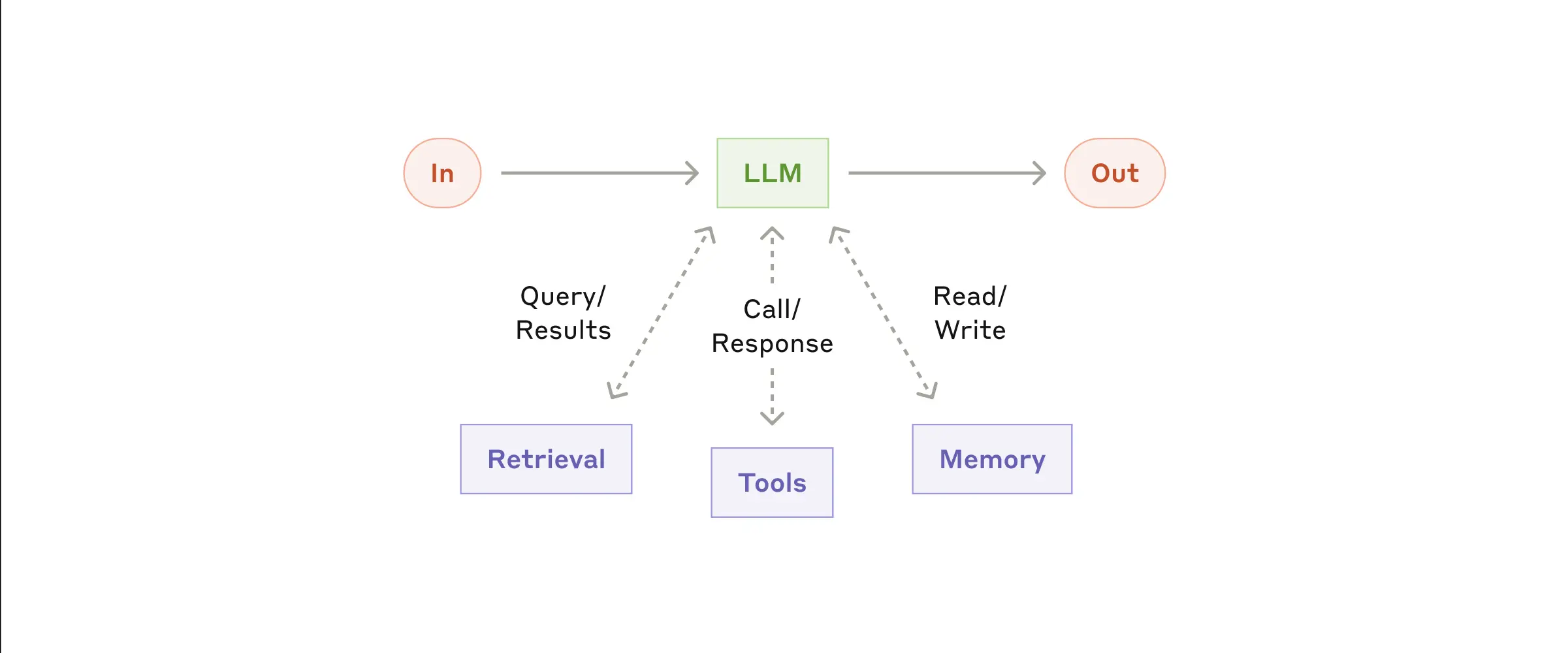

Building block: The augmented LLM

The basic building block of agentic systems is an LLM enhanced with augmentations such as retrieval, tools, and memory. Our current models can actively use these capabilities: generating their own search queries, selecting appropriate tools, and determining what information to retain.

- The augmented LLM

We recommend focusing on two key aspects of the implementation: tailoring these capabilities to your specific use case, and ensuring that they provide an easy-to-use, well-documented interface for your LLM. While there are many ways to implement these augmentations, one approach is our recently released Model Context Protocol, which allows developers to integrate with a growing ecosystem of third-party tools through a simple client implementation.

For the remainder of this post, we assume that each LLM call has access to these augmented capabilities.

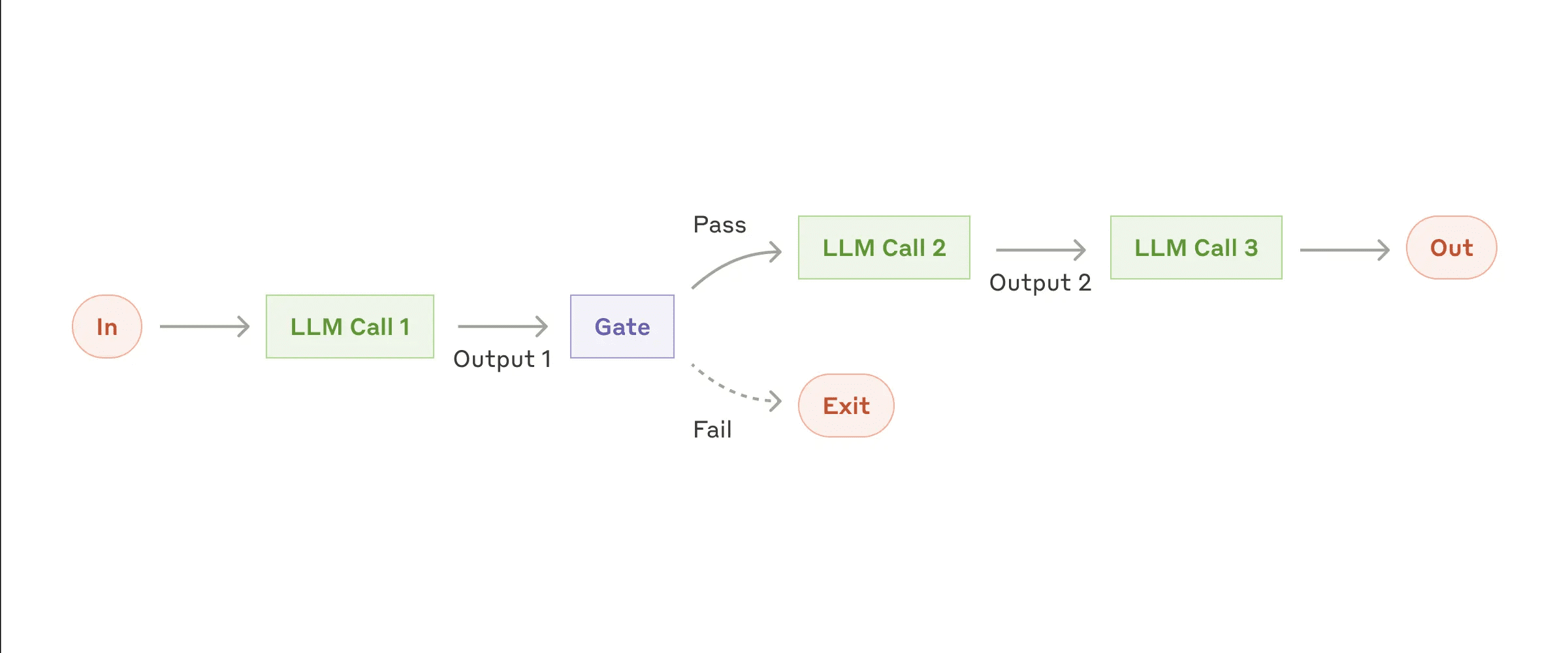

Workflow: Prompt chaining

Prompt chaining decomposes a task into a sequence of steps, where each LLM call processes the output of the previous one. You can add programmatic checks (see "gate" in the diagram) on any intermediate step to ensure that the process is still on track.

- The prompt chaining workflow

When to use this workflow:

This workflow is ideal when a task can be easily and cleanly decomposed into fixed subtasks. The main goal is to trade latency for higher accuracy by making each LLM call an easier task.

Examples where prompt chaining is useful:

- Generate marketing copy and then translate it into different languages.

- Write an outline of the document, check that the outline meets certain criteria, and then write the document based on the outline.
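The outline example can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference implementation: `call_llm` is a hypothetical function that takes a prompt and returns the model's text, and the gate shown here (at least three bullet points) is an arbitrary stand-in for whatever criteria your outline has to meet.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: takes a prompt, returns the completion text


def outline_passes_gate(outline: str, min_sections: int = 3) -> bool:
    """Programmatic check: the outline must list at least `min_sections` bullets."""
    bullets = [line for line in outline.splitlines() if line.strip().startswith("-")]
    return len(bullets) >= min_sections


def write_document(topic: str, call_llm: LLM) -> str:
    # Step 1: the first call produces an outline.
    outline = call_llm(f"Write a bulleted outline for a document about {topic}.")
    # Gate: stop early instead of building on a bad intermediate result.
    if not outline_passes_gate(outline):
        raise ValueError("Outline failed the gate check")
    # Step 2: the second call consumes the first call's output.
    return call_llm(f"Write the document following this outline:\n{outline}")
```

Because the steps are fixed in code, each call can use a narrow prompt, and a failed gate surfaces immediately rather than after the full document has been written.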

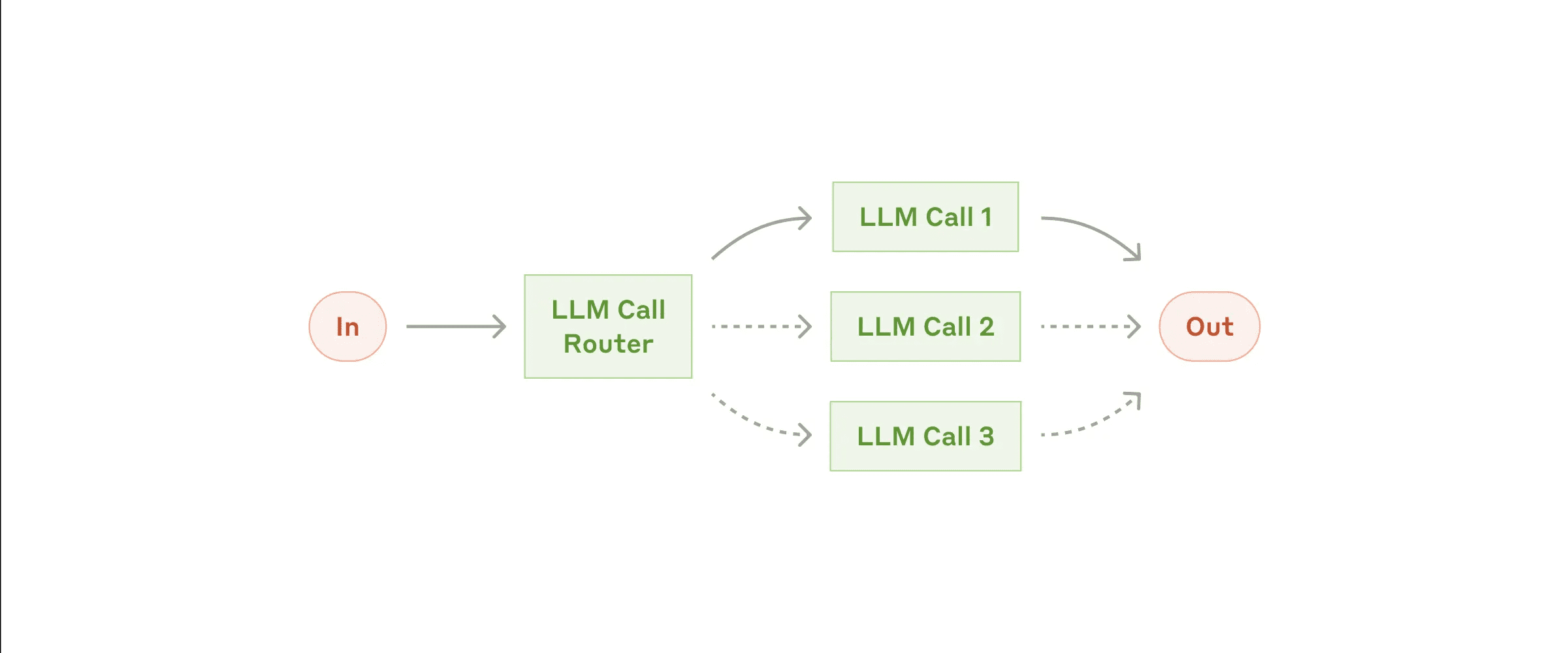

Workflow: Routing

Routing classifies an input and directs it to a specialized follow-up task. This workflow allows for separation of concerns and for building more specialized prompts. Without it, optimizing for one kind of input can hurt performance on other inputs.

- The routing workflow

When to use this workflow:

Routing works well for complex tasks with distinct categories that are better handled separately, and where classification can be handled accurately, either by an LLM or by a more traditional classification model or algorithm.

Examples where routing is useful:

- Directing different types of customer service queries (general questions, refund requests, technical support) into different downstream processes, prompts, and tools.

- Routing easy or common questions to smaller models (e.g., Claude 3.5 Haiku) and hard or unusual questions to more capable models (e.g., Claude 3.5 Sonnet) to optimize cost and speed.
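The customer service example might look like the sketch below. It assumes a hypothetical `call_llm` function (prompt in, text out); the route names and system prompts are invented for illustration. The classifier's output is checked against the known routes, with a fallback for anything unexpected.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: takes a prompt, returns the completion text

# Illustrative specialized prompts, one per category.
ROUTES = {
    "refund": "You handle refund requests. Ask for the order number first.",
    "technical": "You are a technical support specialist. Ask for error messages.",
    "general": "You answer general questions about the product.",
}


def classify(query: str, call_llm: LLM) -> str:
    """Ask the model for a label and validate it against the known routes."""
    labels = ", ".join(ROUTES)
    raw = call_llm(f"Classify this query as one of: {labels}.\nQuery: {query}\nLabel:")
    label = raw.strip().lower()
    return label if label in ROUTES else "general"  # fall back on unexpected output


def route(query: str, call_llm: LLM) -> str:
    """Send the query to the prompt specialized for its category."""
    label = classify(query, call_llm)
    return call_llm(f"{ROUTES[label]}\n\nCustomer: {query}")
```

The same structure works for routing between models: replace the per-route prompt with a per-route model choice.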

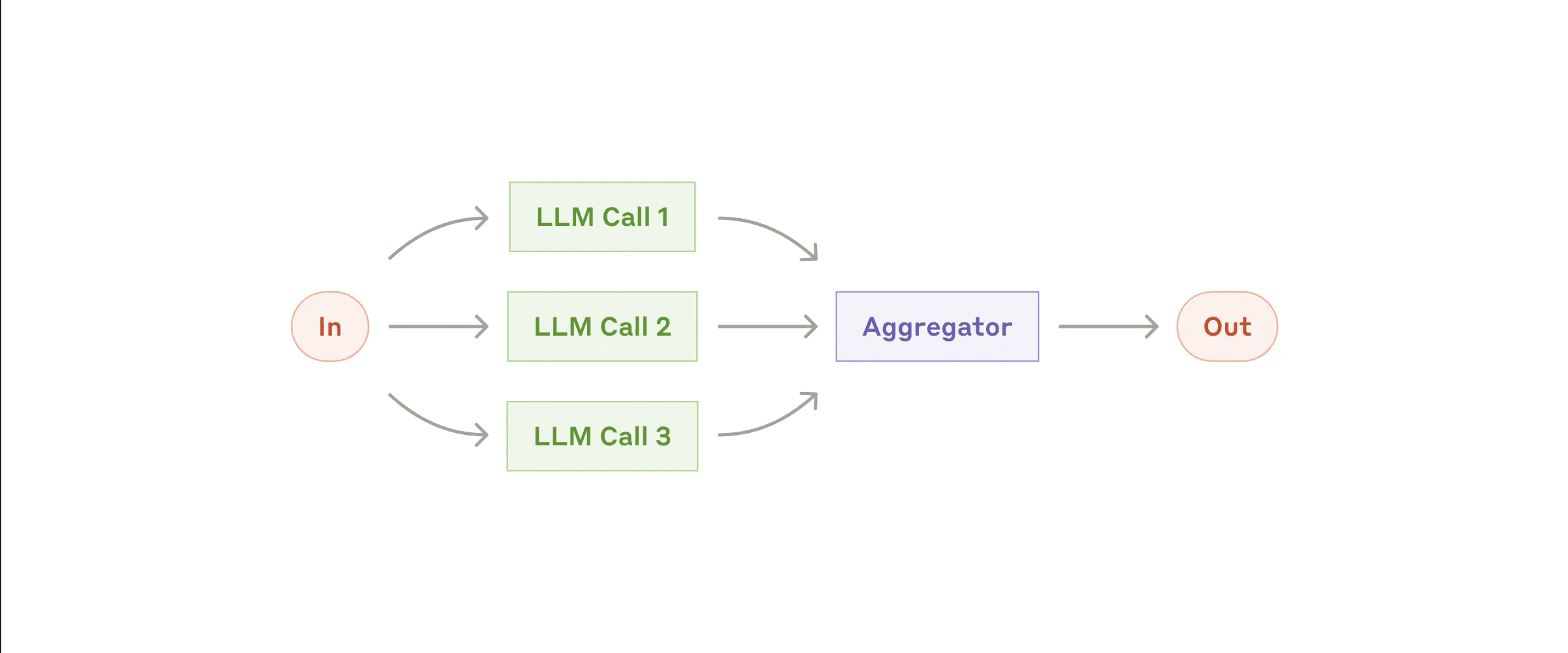

Workflow: Parallelization

LLMs can sometimes work on a task simultaneously and have their outputs aggregated programmatically. This workflow, parallelization, has two key variations:

- Sectioning: Break a task into independent subtasks that run in parallel.

- Voting: Run the same task multiple times to get diverse outputs.

- The parallelization workflow

When to use this workflow:

Parallelization is effective when the divided subtasks can be run in parallel for speed, or when multiple perspectives or attempts are needed for higher-confidence results. For complex tasks with multiple considerations, LLMs generally perform better when each consideration is handled by a separate LLM call, allowing focused attention on each specific aspect.

Examples where parallelization is useful:

- Sectioning:

  - Implementing guardrails, where one model instance processes user queries while another screens them for inappropriate content or requests. This tends to perform better than having the same LLM call handle both guardrails and the core response.

  - Automating evals for assessing LLM performance, where each LLM call evaluates a different aspect of the model's performance on a given prompt.

- Voting:

  - Reviewing a piece of code for vulnerabilities, where several different prompts review the code and flag it if they find a problem.

  - Evaluating whether a given piece of content is inappropriate, with multiple prompts evaluating different aspects or requiring different vote thresholds to balance false positives and false negatives.
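Both variations, sectioning and voting, can share one small helper that issues calls concurrently. The sketch below uses Python's standard `concurrent.futures` thread pool; `call_llm` is a hypothetical prompt-to-text function, and the prompt wording and yes/no answer format are assumptions for illustration.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: takes a prompt, returns the completion text


def run_parallel(prompts: list[str], call_llm: LLM) -> list[str]:
    """Issue the calls concurrently; results come back in prompt order."""
    with ThreadPoolExecutor(max_workers=max(1, len(prompts))) as pool:
        return list(pool.map(call_llm, prompts))


def section(text: str, aspects: list[str], call_llm: LLM) -> dict[str, str]:
    """Sectioning: one independent call per aspect, aggregated into a dict."""
    prompts = [f"Evaluate the {aspect} of this text:\n{text}" for aspect in aspects]
    return dict(zip(aspects, run_parallel(prompts, call_llm)))


def vote(code: str, reviewer_prompts: list[str], call_llm: LLM, threshold: int) -> bool:
    """Voting: flag the code if at least `threshold` reviewers answer 'yes'."""
    prompts = [f"{p}\nAnswer yes or no.\n{code}" for p in reviewer_prompts]
    answers = Counter(a.strip().lower() for a in run_parallel(prompts, call_llm))
    return answers["yes"] >= threshold
```

Raising or lowering `threshold` is one way to trade false positives against false negatives.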

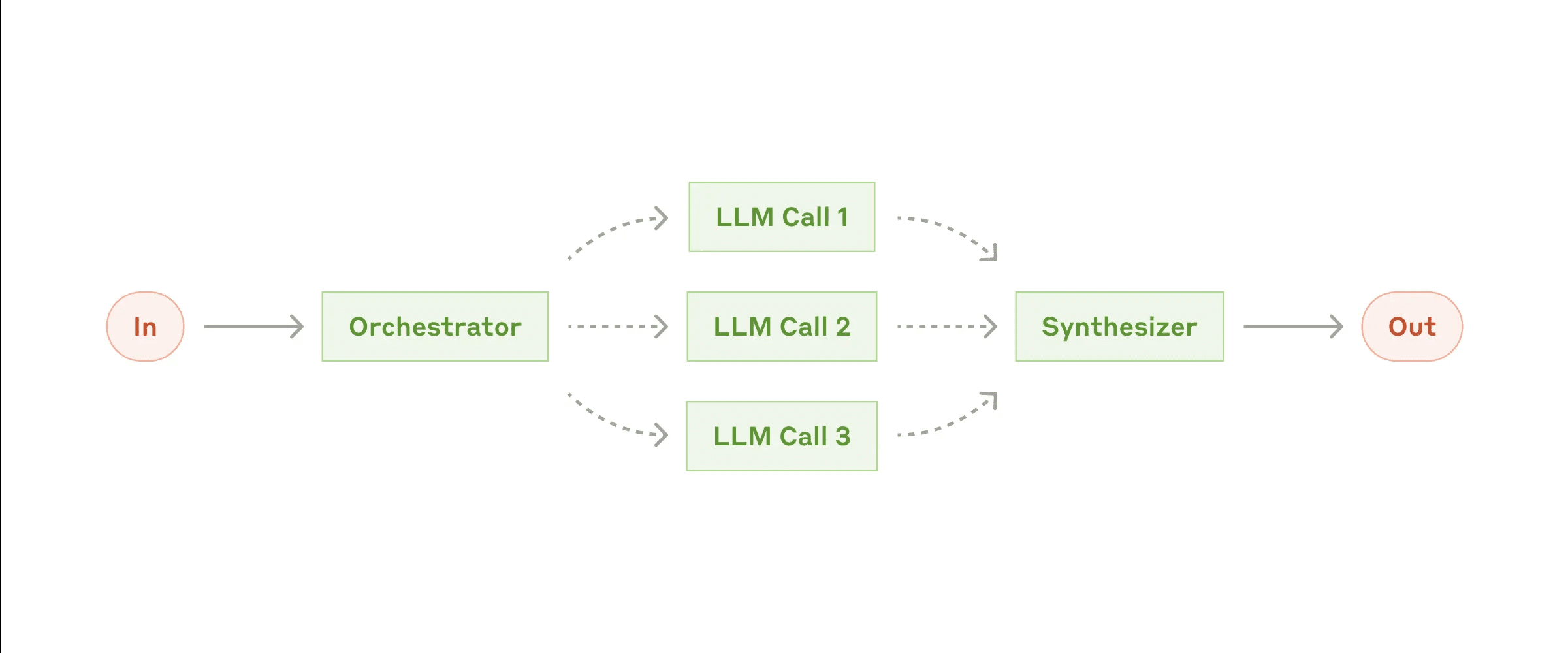

Workflow: Orchestrator-workers

In the orchestrator-workers workflow, a central LLM dynamically breaks down tasks, delegates them to worker LLMs, and synthesizes their results.

- The orchestrator-workers workflow

When to use this workflow:

This workflow is well suited to complex tasks where you cannot predict the subtasks needed (in coding, for example, the number of files that need to be changed and the nature of the change in each file likely depend on the task). It resembles parallelization, but the key difference is its flexibility: subtasks are not predefined, but determined by the orchestrator based on the specific input.

Examples where orchestrator-workers is useful:

- Coding products that make complex changes to multiple files each time.

- Search tasks that involve gathering and analyzing information from multiple sources for possibly relevant information.
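A minimal sketch of this pattern follows. It assumes a hypothetical `call_llm` function (prompt in, text out) and assumes the orchestrator can be prompted to reply with a JSON list of subtasks; a production version would need to handle malformed plans.

```python
import json
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: takes a prompt, returns the completion text


def orchestrate(task: str, call_llm: LLM) -> str:
    # The orchestrator decides the subtasks at run time; nothing is predefined.
    plan = call_llm(
        f"Break this task into subtasks. Reply with a JSON list of strings.\nTask: {task}"
    )
    subtasks = json.loads(plan)  # raises ValueError if the plan is not valid JSON
    # Each worker call handles one subtask.
    results = [call_llm(f"Complete this subtask: {subtask}") for subtask in subtasks]
    # A final call synthesizes the worker outputs.
    joined = "\n".join(f"{s}: {r}" for s, r in zip(subtasks, results))
    return call_llm(f"Combine these results into one answer for '{task}':\n{joined}")
```

The worker calls are independent of each other, so they could also be issued concurrently.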

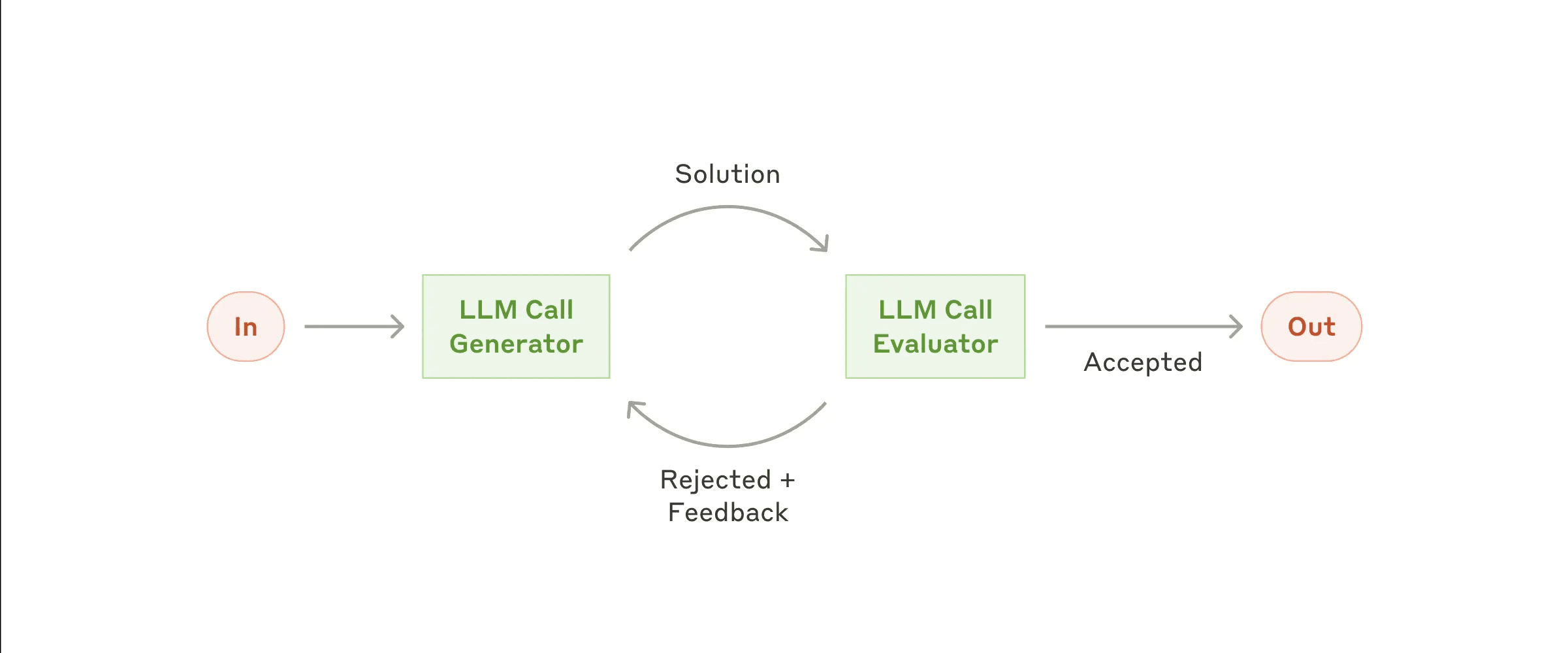

Workflow: Evaluator-Optimizer

In the evaluator-optimizer workflow, one LLM call generates a response while another provides evaluation and feedback in a loop.

- The evaluator-optimizer workflow

When to use this workflow:

This workflow is particularly effective when we have clear evaluation criteria and when iterative refinement provides measurable value. Two signs of a good fit are, first, that LLM responses can be demonstrably improved when a human articulates feedback, and second, that the LLM can provide such feedback itself. This is analogous to the iterative writing process a human writer might go through when producing a polished document.

Examples where evaluator-optimizer is useful:

- Literary translations, where the translator LLM may not initially capture all nuances, but the evaluator LLM can provide useful critiques.

- Complex search tasks that require multiple rounds of search and analysis to gather comprehensive information, with an evaluator deciding whether further searches are needed.
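The loop can be sketched as follows. `generate` and `evaluate` are hypothetical prompt-to-text functions (they may be the same model with different prompts), and the convention that the evaluator replies `PASS` when satisfied is an assumption made for this example.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: takes a prompt, returns the completion text


def refine(task: str, generate: LLM, evaluate: LLM, max_rounds: int = 3) -> str:
    """Generate a draft, then alternate evaluation and revision until accepted."""
    draft = generate(task)
    for _ in range(max_rounds):  # bound the loop so it always terminates
        feedback = evaluate(f"Task: {task}\nDraft: {draft}\nReply PASS or give feedback.")
        if feedback.strip() == "PASS":
            break
        # Feed the critique back to the generator for the next draft.
        draft = generate(f"{task}\nPrevious draft: {draft}\nFeedback: {feedback}")
    return draft
```

The round limit matters: without it, an evaluator that never accepts would keep the loop running indefinitely.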

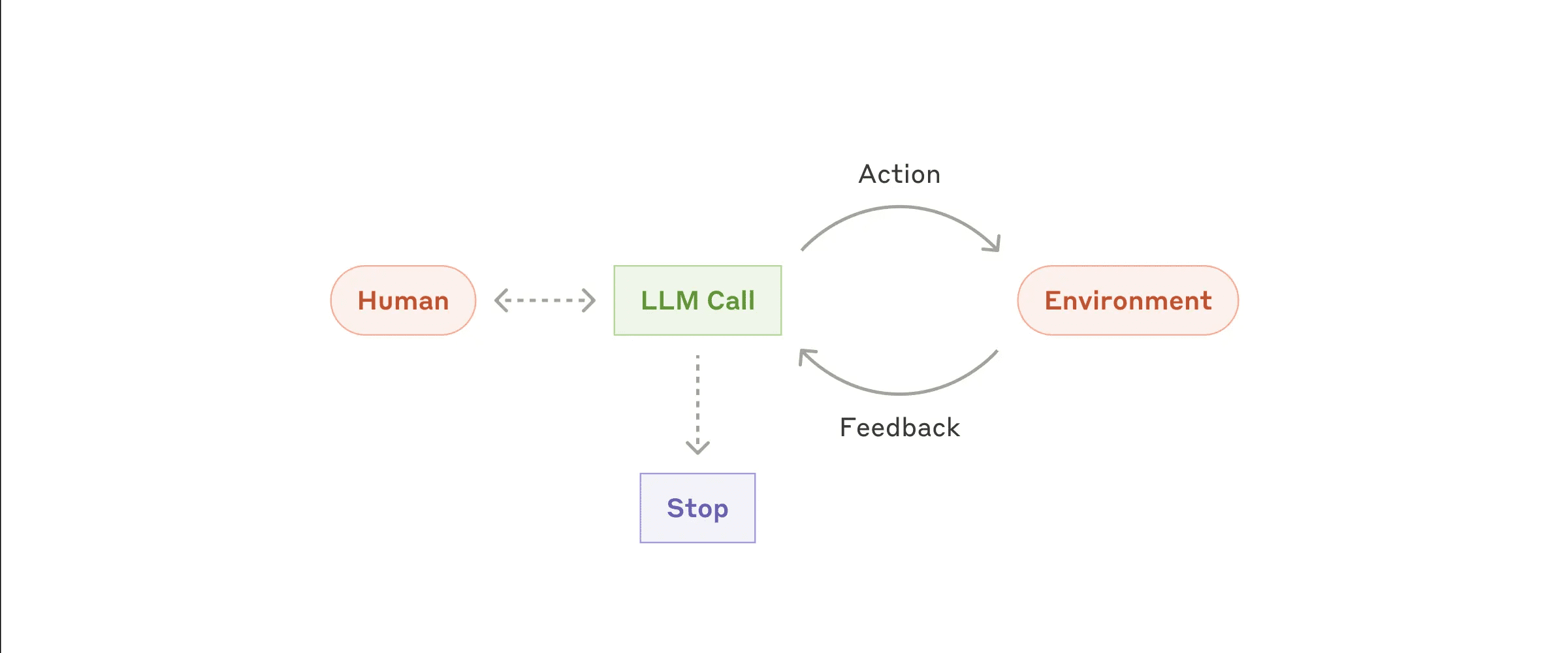

Agents

Agents are emerging in production as LLMs mature in key capabilities: understanding complex inputs, reasoning and planning, using tools reliably, and recovering from errors. Agents begin their work with either a command from, or an interactive discussion with, the human user. Once the task is clear, agents plan and operate independently, potentially returning to the human for further information or judgment. During execution, it is crucial for the agent to obtain "ground truth" from the environment at each step (such as tool call results or code execution) to assess its progress. Agents can then pause for human feedback at checkpoints or when they encounter blockers. The task often terminates upon completion, but it is also common to include stopping conditions (such as a maximum number of iterations) to maintain control.

Agents can handle sophisticated tasks, but their implementation is often straightforward. They are typically just LLMs using tools based on environmental feedback in a loop. It is therefore crucial to design toolsets and their documentation clearly and thoughtfully. We expand on best practices for tool development in Appendix 2 ("Prompt engineering your tools").

- Autonomous agent

When to use agents: Agents can be used for open-ended problems where it is difficult or impossible to predict the required number of steps and where you cannot hardcode a fixed path. The LLM will potentially operate for many turns, so you must have some level of trust in its decision-making. Agents' autonomy makes them ideal for scaling tasks in trusted environments.

The autonomous nature of agents means higher costs and the potential for compounding errors. We recommend extensive testing in sandboxed environments, along with appropriate guardrails.
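The agent loop described in this section (act, observe the environment, repeat until done or until a stopping condition is hit) can be sketched as below. The contract is an assumption made for illustration: `model` is a hypothetical function that receives the history and returns either `{"tool": name, "args": {...}}` or `{"final": text}`, and the message dictionaries are not tied to any particular API.

```python
from typing import Callable

# Hypothetical contract: the model returns either
# {"tool": name, "args": {...}} to act, or {"final": text} to finish.
Model = Callable[[list[dict]], dict]


def run_agent(task: str, model: Model, tools: dict[str, Callable[..., str]],
              max_steps: int = 10) -> str:
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):  # stopping condition keeps the loop bounded
        action = model(history)
        if "final" in action:
            return action["final"]
        tool = tools.get(action["tool"])
        try:
            observation = tool(**action["args"]) if tool else f"unknown tool {action['tool']}"
        except Exception as exc:  # feed errors back so the model can recover
            observation = f"error: {exc}"
        # Ground truth from the environment goes back into the context.
        history.append({"role": "tool", "content": observation})
    return "stopped: step limit reached"
```

In a sandboxed setup, the functions in `tools` are where guardrails such as path restrictions or rate limits would live.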

Examples where agents are useful:

The following examples are from our actual implementations:

- Coding agent for solving SWE-bench tasks that involve editing multiple files according to the task description;

- our "Computer use" reference realizationIn this case, Claude used a computer to complete the task.

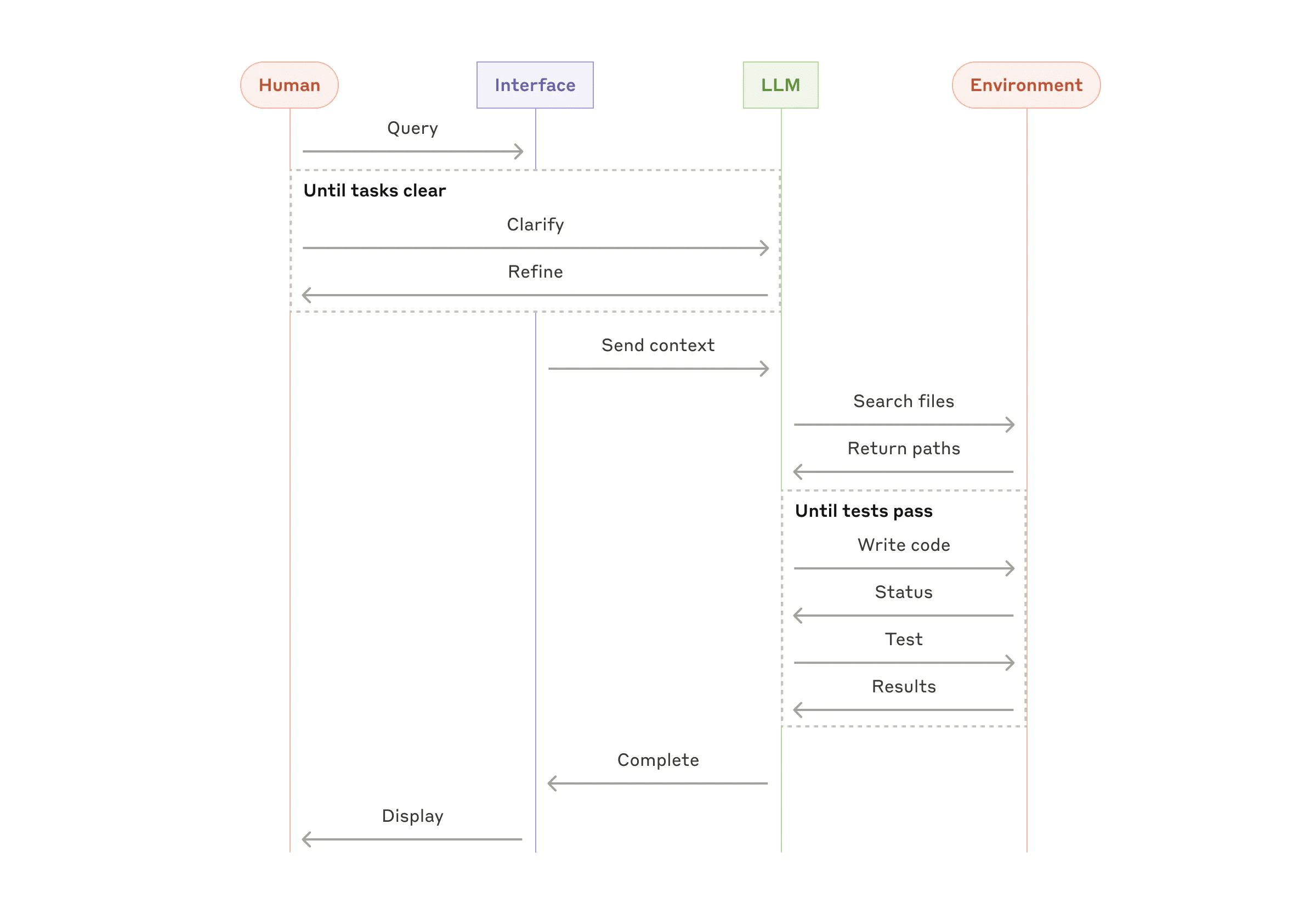

- High-level process for coding agents

Combining and customizing these patterns

These building blocks are not prescriptive. They are common patterns that developers can shape and combine to fit different use cases. As with any LLM feature, the key to success is measuring performance and iterating on implementations. To repeat: you should consider adding complexity only when it demonstrably improves outcomes.

Summary

Success in the LLM space is not about building the most sophisticated system, but about building the right system for your needs. Start with simple prompts, optimize them with comprehensive evaluation, and add multi-step agentic systems only when simpler solutions fall short.

When implementing agents, we follow three core principles:

- Maintain simplicity in your agent's design.

- Prioritize transparency by explicitly showing the agent's planning steps.

- Carefully craft your agent-computer interface (ACI) through thorough tool documentation and testing.

Frameworks can help you get started quickly, but don't hesitate to reduce the abstraction layers and build with base components when moving into production. By following these principles, you can create agents that are not only powerful but also reliable, maintainable, and trusted by your users.

Acknowledgements

This post was written by Erik Schluntz and Barry Zhang. It draws on our experience building agents at Anthropic and on the valuable insights shared by our customers, for which we are deeply grateful.

Appendix 1: Agents in practice

Our work with customers has revealed two particularly promising applications for AI agents that demonstrate the practical value of the patterns discussed above. Both applications illustrate that agents add the most value for tasks that require both conversation and action, have clear success criteria, enable feedback loops, and integrate meaningful human oversight.

A. Customer support

Customer support combines familiar chatbot interfaces with enhanced capabilities through tool integration. It is a natural fit for more open-ended agents because:

- Support interactions naturally follow a conversation flow while requiring access to external information and actions;

- Tools can be integrated to pull customer data, order history, and knowledge base articles;

- Actions such as issuing refunds or updating tickets can be handled programmatically;

- Success can be clearly measured through user-defined resolutions.

Several companies have demonstrated the viability of this approach through usage-based pricing models that charge only for successful resolutions, showing confidence in their agents' effectiveness.

B. Coding agents

The software development space has shown remarkable potential for LLM features, with capabilities evolving from code completion to autonomous problem-solving. Agents are particularly effective because:

- Code solutions are verifiable through automated tests;

- Agents can iterate on solutions using test results as feedback;

- The problem space is well-defined and structured;

- Output quality can be measured objectively.

In our own implementation, agents can now solve real GitHub issues in the SWE-bench Verified benchmark based on the pull request description alone. However, while automated testing helps verify functionality, human review remains crucial for ensuring that solutions align with broader system requirements.

Appendix 2: Prompt engineering your tools

No matter which agentic system you are building, tools will likely be an important part of your agent. Tools enable Claude to interact with external services and APIs by specifying their exact structure and definition. When Claude responds, it includes a tool use block in the API response if it plans to invoke a tool. Tool definitions and specifications should be given just as much prompt engineering attention as your overall prompts. In this brief appendix, we describe how to prompt engineer your tools.

There are often several ways to specify the same action. For instance, you can specify a file edit by writing a diff or by rewriting the entire file. For structured output, you can return code inside markdown or inside JSON. In software engineering, differences like these are cosmetic and can be converted losslessly from one to the other. However, some formats are much more difficult for an LLM to write than others. Writing a diff requires knowing how many lines are changing in the chunk header before the new code is written. Writing code inside JSON (compared to markdown) requires extra escaping of newlines and quotes.
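The escaping cost is easy to see with Python's standard `json` module. In the sketch below, the same two-line snippet is wrapped once in a markdown fence, where it appears verbatim, and once in a JSON string, where every quote, backslash, and newline must be escaped. The helper names are illustrative.

```python
import json

FENCE = "`" * 3  # built at run time so this example needs no nested literal fence


def as_markdown(code: str) -> str:
    """Wrap code in a fenced block: the code itself is emitted verbatim."""
    return f"{FENCE}python\n{code}\n{FENCE}"


def as_json(code: str) -> str:
    """Embed code in a JSON string: quotes, backslashes, and newlines get escaped."""
    return json.dumps({"code": code})


def escaping_overhead(code: str) -> int:
    """Count the extra characters JSON escaping adds to the code itself."""
    return len(json.dumps(code)) - len(code) - 2  # minus the two surrounding quotes


# Two lines of code containing quotes, a newline, and a backslash.
snippet = 'print("hi")\nprint("a\\tb")'
print(as_markdown(snippet))
print(as_json(snippet))
print(escaping_overhead(snippet))
```

The two forms carry the same information, but the JSON form requires the writer to get every escape right, which is the kind of formatting burden a model can trip over.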

Our suggestions for deciding on tool formats are the following:

- Give the model enough tokens to "think" before it writes itself into a corner.

- Keep the format close to what the model has seen naturally occurring in text on the internet.

- Make sure there is no formatting "overhead", such as having to keep an accurate count of thousands of lines of code or string-escaping any code it writes.

One rule of thumb is to think about how much effort goes into human-computer interfaces (HCI), and plan to invest just as much effort in creating good agent-computer interfaces (ACI). Here are some thoughts on how to do so:

- Put yourself in the model's shoes. Is it obvious how to use this tool, based on the description and parameters, or would you need to think carefully about it? If so, the same is probably true for the model. A good tool definition often includes example usage, edge cases, input format requirements, and clear boundaries from other tools.

- How can you change parameter names or descriptions to make things more obvious? Think of this as writing a great docstring for a junior developer on your team. This is especially important when using many similar tools.

- Test how the model uses your tools: run many example inputs in our workbench to see what mistakes the model makes, and iterate.

- Poka-yoke your tools: change the arguments so that it is harder to make mistakes.
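As an illustration of these suggestions, here is what a tool definition written like a careful docstring might look like. The tool, its fields, and its wording are hypothetical; the overall shape (a name, a description, and a JSON Schema for the inputs) is a common way to declare tools, but check your API's documentation for the exact fields it expects. The small helper is an assumed convenience for catching undocumented parameters, not part of any library.

```python
# Hypothetical tool for a support agent; the name, fields, and wording are illustrative.
GET_ORDER_STATUS = {
    "name": "get_order_status",
    "description": (
        "Look up the current status of one order. "
        "Use this only for orders that already exist; to search for orders "
        "by customer, use a separate search tool instead. "
        "Example: order_id='ORD-10293' returns 'shipped'. "
        "Returns 'not_found' if the order does not exist."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "order_id": {
                "type": "string",
                "description": "Order identifier: 'ORD-' followed by digits, e.g. 'ORD-10293'.",
            }
        },
        "required": ["order_id"],
    },
}


def undocumented_parameters(tool: dict) -> list[str]:
    """Return parameter names that lack a description, as a cheap lint before shipping."""
    properties = tool["input_schema"]["properties"]
    return [name for name, spec in properties.items() if not spec.get("description")]
```

The description covers example usage, an edge case, the input format, and the boundary with a neighboring tool, which are the elements the list above recommends.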

When building agents for SWE-bench, we actually spent more time optimizing the tools than optimizing the overall prompt. For example, we found that tools using relative file paths were prone to errors when the agent was moved out of the root directory. To fix this, we changed the tool so that it always required absolute file paths - we found that the model had no problems at all using this approach.
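The absolute-path fix is an example of poka-yoke. The sketch below shows one way such a check could look; it is not the actual SWE-bench tool, and the function name and error wording are assumptions. Rather than guessing a base directory, the tool rejects relative paths with a message the model can act on.

```python
import os


def read_file(path: str) -> str:
    """Hypothetical file tool that only accepts absolute paths."""
    if not os.path.isabs(path):
        # Return a message the model can act on instead of guessing a base directory.
        return f"error: '{path}' is relative; pass an absolute path such as /repo/src/main.py"
    with open(path, encoding="utf-8") as handle:
        return handle.read()
```

With this constraint, the result of a call no longer depends on the agent's current working directory.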

