Anthropic CEO supports U.S. AI hegemony by calling out 'China threat'

Comment:

1. Discrediting China's AI development and playing up the "China threat theory"

Writing from a U.S. standpoint, the author of the article deliberately exaggerates the so-called "threat" that the technological advances of DeepSeek and other Chinese AI companies pose to the United States, and forcibly links it to the so-called "XXX threat." The argument is replete with Cold War mentality and ideological bias.

- The author acknowledges DeepSeek's innovation and efficiency, but then turns around and attributes its success to "being subjected to a XXXX that violated XX", an unfounded accusation that completely ignores the fact that China's AI companies rely on their own efforts and market-based mechanisms to achieve success.

- The author uses the excuse of "preventing China from obtaining XX advantages" to defend the U.S. policy of chip export controls, but avoids any mention of the United States using its own technological advantages to suppress its competitors. The double standard is plain to see.

2. Upholding U.S. technological hegemony and attempting to curb China's AI development

The central purpose of the article is to preserve U.S. global dominance in AI, which it attempts to do by:

- Exaggerating the role of export controls: The author claims that export controls are "the only way to prevent China from acquiring millions of chips" and sees them as a key determinant of the future world landscape. This argument ignores the complexity of the global industrial chain and China's own technological progress.

- In fact, China is increasing its investment in chip research and development and has made significant progress. Although the U.S. export controls have had some impact on China's AI development, they cannot stop the continued progress of Chinese AI technology.

- Advocating "technological decoupling": The author implies that the United States should continue to strengthen its technological embargo against China, and even suggests that the United States should use AI technology to establish a "lasting advantage". This is undoubtedly a call for "technological decoupling" and runs counter to the trend of globalization.

- Such an approach will not only jeopardize China's interests, but also the interests of the United States itself, and impede global scientific and technological progress and economic development.

3. Ignoring the nature of AI development and misleading public perception

The article is biased in its understanding of AI development: it overemphasizes "scaling laws" and the "scale effect" while ignoring the following important factors:

- The multipolar trend in AI development: The U.S. path is not the only path for AI development. China, Europe, and other countries and regions are actively exploring their own paths and have built their own strengths.

- AI ethics and security: The ethical and security issues brought about by AI development need to be tackled together through global cooperation, not through unilateralism and technological blockades.

- The impact of AI on human society: AI technology should ultimately serve the development and progress of human society, rather than becoming a tool for great power games.

4. Calling for a Rational View of Sino-U.S. AI Competition and Promoting Global Cooperation

Facing the opportunities and challenges of AI development, China and the United States should:

- Abandon zero-sum game thinking: China and the U.S. do not have only a competitive relationship in the AI field, but should strengthen cooperation to jointly promote global AI technology progress and industrial development.

- Strengthening communication and dialogue: The two sides should conduct in-depth exchanges on key issues in AI development to enhance understanding and avoid misunderstanding and miscalculation.

- Co-developing AI governance rules: China and the United States should work with other countries to promote the establishment of a fair, just and inclusive global AI governance system to ensure that AI technology benefits all of humanity.

Speed reading

1. DeepSeek's technological advances and cost advantages

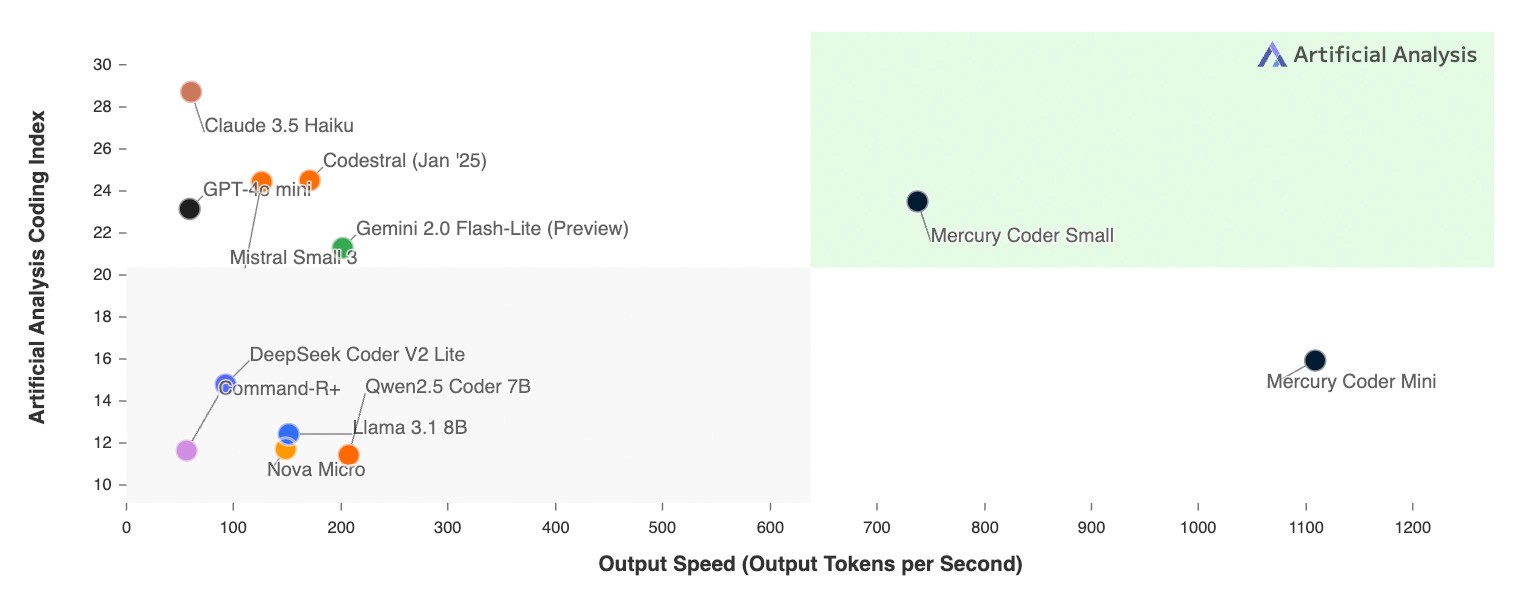

- Performance approaching that of cutting-edge AI models in the U.S.: DeepSeek's published models (especially DeepSeek-V3) approach the performance of state-of-the-art U.S. models on some important tasks, such as coding, math competitions, and reasoning tasks [Part II of the original article, "DeepSeek's Models"].

- Significant cost reductions: DeepSeek's model training costs are much lower than those of U.S. companies. For example, DeepSeek-V3 cost about $6 million to train, while Anthropic's Claude 3.5 Sonnet cost tens of millions of dollars to train [Part II of the original article, "DeepSeek's Model"].

- Not a "disruptive" breakthrough: The author argues that DeepSeek's accomplishments are not "unique breakthroughs" but fall on the expected curve of declining AI costs [Part II of the original article, "DeepSeek's Model"].

2. Three major dynamics in AI development

- Scaling laws: As the training scale of an AI system increases, performance on cognitive tasks improves smoothly. For example, increasing the training budget from $1M to $100M raises the share of tasks solved from 20% to 60% [Part 1 of the original article, "The Three Dynamics"].

- Curve shift: The cost of training can be reduced by improving the model architecture, increasing hardware efficiency, and so on. For example, the API price of Claude 3.5 Sonnet is about 10 times lower than that of GPT-4 [Part 1 of the original article, "Three major developments"].

- Paradigm shift: New training methods, such as reinforcement learning, are being introduced into the AI training process. For example, companies such as Anthropic and DeepSeek are exploring the use of reinforcement learning to train models to improve reasoning [Part 1 of the original article, "Three Big Developments"].

3. DeepSeek's resources vs. US AI companies

- Number of chips: DeepSeek reportedly has about 50,000 Hopper-generation chips (including H100, H800, and H20), which is within a factor of roughly 2-3 of the number owned by major U.S. AI companies [Part 2 of the original article, "DeepSeek's Model"].

- Capital investment: DeepSeek's total spending as a company is not very different from that of U.S. AI companies; both have invested heavily in AI research and development [Part 2 of the original article, "DeepSeek's model"].

4. U.S. Chip Export Controls on China

- Control measures: The United States has implemented several rounds of chip export control measures against China, such as banning the export of H100 chips to China and restricting the export of H800 chips [Part II of the original article, "DeepSeek's Model"].

- Control effects: The author argues that export controls are effective and that most of the chips used by DeepSeek were either not banned or shipped before the ban [Part II of the original article, "DeepSeek's Model"].

- Future outlook: The author argues that strict export controls are the key to preventing China from acquiring millions of chips and that this will determine whether the future world landscape will be unipolar or bipolar [Part II of the original article, "Export Controls"].

5. Geopolitical implications for AI development

- China-US AI competition: The author believes that the development of AI will lead to increased competition between the United States and China, and may lead to a bipolar world of "countries of geniuses in a datacenter" [Part II of the original article, "Export Controls"].

- American Advantage: The author argues that the United States should use its AI technology advantage to build a lasting advantage in order to prevent China from gaining a dominant position in the field of AI [Part II of the original article, "Export Controls"].

6. Other views on AI development

- AI cost and value: Although the cost of training AI models has declined with technological advances, the economic value of more intelligent models is higher still, so companies are willing to invest more money [Original Article, Part I, "The Three Dynamics"].

- Uncertainty in AI development: The author recognizes that there are uncertainties in AI development. For example, AI systems can help make smarter AI systems, which could turn a temporary lead into a lasting advantage [Part II of the original article, "Export Controls"].

Critical reading: full text of "On DeepSeek and Export Controls" by Anthropic's CEO

A couple of weeks ago, I wrote an article arguing that the U.S. should tighten controls on chip exports to China. Since then, a Chinese AI company called DeepSeek has, at least in some respects, approached the performance of cutting-edge AI models in the U.S., while costing less.

Here, I won't be focusing on whether DeepSeek poses a threat to U.S. AI companies such as Anthropic (although I do think claims about their threat to U.S. AI leadership are grossly exaggerated). Instead, I will focus on whether the release of DeepSeek weakens the case for chip export control policies. I don't think it does. In fact, I think they make export control policy even more vital than it was a week ago.

Export controls serve a crucial purpose: to keep democracies at the forefront of AI development. To be clear, they are not a way to hide from the competition between the United States and China. Ultimately, if we are to prevail, AI companies in the United States and other democracies must have better models than China. But we shouldn't hand China a technological advantage when we don't have to XXX.

Three major dynamics in the development of artificial intelligence

Before I present my policy argument, I will describe three basic dynamics of AI systems that are critical:

- Scaling Laws. One property of artificial intelligence (which my co-founders and I were among the first to document, back when we worked at OpenAI) is that, all else being equal, scaling up the training of AI systems leads to smoothly better results on a range of cognitive tasks. For example, a $1 million model might solve 20% of important coding tasks, a $10 million model might solve 40%, a $100 million model might solve 60%, and so on. These differences often have huge implications in practice (another order of magnitude may correspond to the difference between undergraduate and PhD skill levels), so companies invest heavily in training these models.

- Curve Shift. The field is constantly coming up with ideas, big and small, to make things more effective or efficient: it could be an improvement to the model's architecture (a tweak to the Transformer architecture used by all of today's models), or simply a way of running the model more efficiently on the underlying hardware. Newer generations of hardware have the same effect. What this usually does is shift the curve: if the innovation is a 2x "compute multiplier" (CM), then it allows you to reach 40% on a coding task for $5M instead of $10M, or 60% for $50M instead of $100M, and so on. Every cutting-edge AI company regularly finds many of these CMs: usually small (~1.2x), sometimes medium-sized (~2x), and occasionally very large (~10x). Because the value of owning a smarter system is so high, this shifting of the curve usually leads companies to spend more, not less, on training models: the cost efficiency gains end up entirely devoted to training smarter models, limited only by the company's financial resources. People are naturally attracted to the idea that "first something is expensive, then it gets cheaper", as if AI were a single thing of constant quality, and as it gets cheaper, we'll use fewer chips to train it. But what matters is the scaling curve: when it shifts, we simply traverse it faster, because the value at the end of the curve is so high. In 2020, my team published a paper suggesting that, due to algorithmic progress, the curve shifts at a rate of about 1.68x per year. This has probably accelerated significantly since then; it also doesn't take into account efficiency and hardware. I'd guess that today that number is probably about 4x per year. Another estimate is here. Shifts in the training curve also shift the inference curve, so significant price reductions at constant model quality have been occurring for years. For example, Claude 3.5 Sonnet was released 15 months after the original GPT-4 and scores better than GPT-4 on almost all benchmarks, while its API price is about 10 times lower.

- Paradigm Shift. Every so often, the underlying thing being scaled changes a bit, or a new type of scaling is added to the training process. From 2020 to 2023, the main thing being scaled was pre-trained models: models trained on an ever-increasing amount of Internet text, plus a little bit of other training. In 2024, using reinforcement learning (RL) to train models to generate chains of thought became a new focus of scaling. Anthropic, DeepSeek, and many others (perhaps most notably OpenAI, with the release of their o1-preview model in September) have found that this kind of training dramatically improves performance on certain specific, objectively measurable tasks (e.g., math, coding contests, and reasoning that resembles these tasks). This new paradigm involves starting from an ordinary pre-trained model and then using RL as a second stage to add reasoning skills. Importantly, because this type of RL is new, we are still in the very early stages of the scaling curve: spending on the second, RL stage is small across all participants. Spending $1 million instead of $100,000 is enough to reap huge benefits. Companies are now working very quickly to scale the second stage to hundreds of millions or even billions of dollars, but it is critical to understand that we are at a unique "crossover point" where a powerful new paradigm is at an early stage of the scaling curve, and can therefore make huge gains very quickly.
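The first two dynamics can be made concrete with a toy calculation. The sketch below is not from the original article: it assumes a log-linear curve fitted through the three illustrative points in the text ($1M for 20%, $10M for 40%, $100M for 60%) and treats a compute multiplier as a simple scaling of effective spend. The function and constant names are hypothetical, and the curve is only meaningful near those three points.

```python
import math

# Assumption: illustrative anchor taken from the article's example numbers.
BASE_COST_USD = 1_000_000     # a $1M training run...
BASE_SOLVED_PCT = 20.0        # ...solves 20% of the coding tasks
GAIN_PER_DECADE_PCT = 20.0    # each 10x in spend adds 20 percentage points


def solved_pct(cost_usd: float, compute_multiplier: float = 1.0) -> float:
    """Toy scaling law: task success grows linearly in log10(effective spend)."""
    effective_spend = cost_usd * compute_multiplier
    decades = math.log10(effective_spend / BASE_COST_USD)
    return BASE_SOLVED_PCT + GAIN_PER_DECADE_PCT * decades


def cost_to_reach(target_pct: float, compute_multiplier: float = 1.0) -> float:
    """Invert the toy curve: dollars needed to hit a target success rate."""
    decades = (target_pct - BASE_SOLVED_PCT) / GAIN_PER_DECADE_PCT
    return BASE_COST_USD * 10 ** decades / compute_multiplier


if __name__ == "__main__":
    for target in (40.0, 60.0):
        before = cost_to_reach(target)
        after = cost_to_reach(target, compute_multiplier=2.0)
        print(f"{target:.0f}%: ${before:,.0f} without the CM, ${after:,.0f} with a 2x CM")
```

With a 2x multiplier the same budget also buys a better model rather than a cheaper one: `solved_pct(10_000_000, 2.0)` is higher than `solved_pct(10_000_000)`, which is the article's point that efficiency gains tend to be spent on moving further along the curve.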

DeepSeek's model

The above three dynamics can help us understand DeepSeek's recent releases. About a month ago, DeepSeek released a model called "DeepSeek-V3", which is a pure pre-trained model (the first stage described in the third point above). Then last week, they released "DeepSeek-R1", which adds a second stage. It's impossible to determine all the details of these models from the outside, but here's my best understanding of the two releases.

DeepSeek-V3 was actually the real innovation, and it is what ought to have caught people's attention about a month ago (we certainly noticed). As a pre-trained model, it appears to approach the performance of state-of-the-art US models on certain important tasks, while being considerably cheaper to train (although we found that Claude 3.5 Sonnet is still much better on certain other critical tasks, such as real-world coding). The DeepSeek team achieved this with some truly impressive innovations, mainly focused on engineering efficiency. There were particularly innovative improvements in managing an aspect called the "key-value cache" and in pushing a method called "mixture of experts" further than before.

However, it is important to look closely:

- DeepSeek didn't "do for $6 million what American AI companies can do for billions of dollars". I can only speak for Anthropic, but Claude 3.5 Sonnet is a medium-sized model that cost tens of millions of dollars to train (I won't give exact numbers). Furthermore, the training of 3.5 Sonnet did not in any way involve a larger or more expensive model (contrary to some rumors). Sonnet was trained 9-12 months ago, while DeepSeek's model was trained in November/December, and Sonnet is still notably ahead in many internal and external evaluations. So I think a fair statement would be "DeepSeek produced a model close to the performance of US models 7-10 months older, at a much lower cost (but nowhere near the ratios people are implying)".

- If the historical trend in cost curve declines is about 4x per year, that means that in the ordinary course of business (in the normal historical trend of cost declines that occurred in 2023 and 2024) we would expect a model 3-4 times cheaper than 3.5 Sonnet/GPT-4o around now. Since DeepSeek-V3 is worse than those U.S. frontier models (let's say by about a factor of 2 on the scaling curve, which I think is quite generous to DeepSeek-V3), it would be perfectly normal and perfectly "on trend" if DeepSeek-V3 cost about 8 times less to train than the current US models developed a year ago. I won't give specific numbers, but it's clear from the previous point that even if you take DeepSeek's training costs at face value, they are at best on-trend, and probably not even that. For example, this is less steep than the difference in inference price (10x) from the original GPT-4 to Claude 3.5 Sonnet, and 3.5 Sonnet is a better model than GPT-4. **All of this suggests that DeepSeek-V3 is not a unique breakthrough, nor does it fundamentally change the economics of LLMs; it is an expected point on the curve of ongoing cost reduction. The difference is that this time it was a Chinese company that first demonstrated the expected cost reduction.** This has never happened before, and has significant geopolitical implications. However, US companies will soon follow, and they won't do it by copying DeepSeek, but because they too are realizing the usual cost reduction trends.

- Both DeepSeek and US AI companies have much more money and many more chips than they used to train their headline models. The extra chips are used for R&D to develop the ideas behind the models, and sometimes for training larger models that aren't ready yet (or that need multiple tries to get right). There are reports (we're not sure if they're true) that DeepSeek actually has 50,000 Hopper-generation chips, which I'd guess is within a factor of about 2-3 of the number owned by major US AI companies (e.g., it's 2-3 times fewer than xAI's "Colossus" cluster). These 50,000 Hopper chips cost about $1 billion. As a result, DeepSeek's total spending as a company (as opposed to spending on training individual models) isn't that different from that of US AI labs.

- It's worth noting that the "scaling curve" analysis is a bit of an oversimplification, as the models are somewhat differentiated and have different strengths and weaknesses; the scaling curve figures are a rough average that leaves out a lot of detail. I can only speak to Anthropic's models, but as I alluded to above, Claude is extremely good at coding and at having a well-designed style of interaction with people (many people use it for personal advice or support). On these and some additional tasks, there is simply no comparison with DeepSeek. These factors do not show up in the scaling figures.
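The "on trend" argument in the list above is simple arithmetic, and a short sketch can make it explicit. It assumes the article's guessed rate of about 4x per year compounds smoothly, takes the 9-12 month training gap and the 2x scaling-curve gap from the text, and uses hypothetical function names. The per-chip figure is only the reported $1 billion divided by 50,000 chips, not a quoted price.

```python
def expected_cost_reduction(months_elapsed: float, annual_rate: float = 4.0) -> float:
    """Cost reduction factor expected from the historical trend alone.

    Assumption: the ~4x/year rate compounds smoothly over fractions of a year.
    """
    return annual_rate ** (months_elapsed / 12.0)


def on_trend_cost_ratio(months_elapsed: float,
                        quality_gap: float = 2.0,
                        annual_rate: float = 4.0) -> float:
    """How much cheaper a later, somewhat weaker model could be while staying on trend.

    quality_gap is how far behind the model sits on the scaling curve,
    expressed as a cost factor (the article's generous guess is about 2x).
    """
    return expected_cost_reduction(months_elapsed, annual_rate) * quality_gap


if __name__ == "__main__":
    # Sonnet is described as trained 9-12 months before DeepSeek-V3.
    for months in (9, 12):
        trend = expected_cost_reduction(months)
        total = on_trend_cost_ratio(months)
        print(f"{months} months: trend alone {trend:.1f}x, with 2x quality gap {total:.1f}x")

    # Implied average price per chip from the reported fleet size and cost.
    implied_price_per_chip = 1_000_000_000 / 50_000
    print(f"Implied cost per Hopper chip: ${implied_price_per_chip:,.0f}")
```

Under these assumptions a 9-12 month gap gives roughly a 3-4x reduction from the trend alone, and about 6-8x once the 2x quality gap is included, which matches the article's "about 8 times" figure at the upper end.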

R1, the model released last week that triggered an explosion of public attention (including a drop of about 17% in NVIDIA's share price), is nowhere near as interesting as V3 from an innovation or engineering perspective. It adds a second stage of training (reinforcement learning, as described in point 3 of the previous section) and essentially replicates what OpenAI did with o1 (they seem to achieve similar results at similar scales). However, since we are in the early stages of the scaling curve, several companies are likely to produce this type of model as long as they start from a strong pre-trained model. Given V3, producing R1 was probably very cheap. So we are at an interesting "crossover point" where, for the time being, several companies can produce good reasoning models. This will quickly stop being true as all companies move further up the scaling curve on this type of model.

Export controls

All of this is just a prelude to my main topic of interest: chip export controls on China. Given these facts, my view of the situation is as follows:

- There is a continuing trend of companies spending more and more to train powerful AI models, even as the curve shifts periodically and the cost of training a given level of model intelligence declines rapidly. It's just that the economic value of training smarter models is so great that any cost gains are eaten up almost immediately: they are reinvested in making smarter models at the same enormous cost we initially planned to spend. To the extent that U.S. labs have not already discovered them, the efficiency innovations developed by DeepSeek will soon be applied by labs in the U.S. and China to train multi-billion dollar models. These models will perform better than the multi-billion dollar models they previously planned to train, but they will still cost billions of dollars. That number will continue to rise until we reach the point where AI is smarter than almost all humans at almost everything.

- Making AI that is smarter than almost all humans at almost everything will require millions of chips, cost at least tens of billions of dollars, and will most likely happen in 2026-2027. The DeepSeek releases don't change that, as they roughly fit the cost-reduction curve that has always been factored into these calculations.

- This means that in 2026-2027, we could end up in one of two very different worlds. In the US, multiple companies will certainly have the millions of chips needed (at a cost of tens of billions of dollars). The question is whether China will also have access to millions of chips.

- If they can, we will be living in a bipolar world, in which both the US and China have powerful AI models that will lead to extremely rapid advances in science and technology (what I call "countries of geniuses in a datacenter"). A bipolar world is not necessarily always balanced. Even if U.S. and Chinese AI systems are on a par, China may be able to devote more talent, capital, and attention to military applications of the technology. Combined with its large industrial base and military-strategic advantages, this could help China achieve dominance on the global stage, not just in AI, but in all aspects.

- If China can't get millions of chips, we will (at least temporarily) live in a unipolar world, in which only the United States and its allies have these models. It is unclear how long the unipolar world will last, but there is at least a possibility that, because AI systems can ultimately help make smarter AI systems, a temporary lead can translate into a lasting advantage. As a result, it is a world in which the United States and its allies are likely to take a dominant and enduring lead on the global stage.

- Strictly enforced export controls are the only thing that can prevent China from acquiring millions of chips, and are therefore the most important factor in determining whether we end up living in a unipolar or bipolar world.

- DeepSeek's performance doesn't mean that export controls failed. As I said above, DeepSeek has a moderate to large number of chips, so it's not surprising that they were able to develop and train a powerful model. They aren't any more resource constrained than US AI companies, and export controls aren't a major factor in their "innovation". They are just very talented engineers and show why China is a serious competitor to the US.

- DeepSeek also doesn't show that China will always be able to get the chips it needs through smuggling, or that there will always be loopholes in the controls. I don't believe export controls were ever designed to prevent China from obtaining tens of thousands of chips. A billion dollars of economic activity can be hidden, but it's hard to hide $100 billion or even $10 billion. A million chips might be hard to smuggle as well. Again, it's instructive to look at the chips DeepSeek is currently reported to own. According to SemiAnalysis, it's a mix of H100s, H800s, and H20s totaling 50,000. H100s have been banned by export controls since they were released, so if DeepSeek has any, they must have been obtained through smuggling (note that NVIDIA has stated that DeepSeek's progress is "fully export control compliant"). H800s were allowed in the first round of export controls in 2022, but were banned in the October 2023 update of the controls, so these were probably shipped prior to the ban. H20s are less efficient for training and more efficient for sampling, and they are still allowed, though I think they should be banned. All of this suggests that the bulk of DeepSeek's AI chip fleet consists of chips that were not banned (but should have been); chips that were shipped before the ban; and some chips that seem very likely to have been smuggled. This suggests that the export controls are actually working and adapting: the loopholes are being closed; otherwise, they might have a full fleet of top-tier H100s. If we can close them fast enough, we may be able to stop China from getting millions of chips, increasing the likelihood of a unipolar world in which the US leads.

Given my focus on export controls and US national security, I want to be clear on one thing. I don't see DeepSeek as an adversary per se, and the point isn't to target them specifically. In the interviews they've done, they seem like smart, curious researchers just trying to make useful technology.

But they are subject to a XXXX that violates XX and acts aggressively on the world stage, and if they are able to match the US in AI, they will be even more unfettered in those behaviors. Export controls are one of the most powerful tools we have to prevent that, and the idea that the technology becoming more powerful, with better price/performance, is a reason to lift our export controls makes no sense at all.

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
