Alibaba Cloud Bailian offers the QwQ-32B API for free, with 1 million free tokens every day!

Recently, Alibaba Cloud's Bailian platform announced that it has opened an API for the QwQ-32B large language model and is providing free access to 1 million tokens per day. This move significantly lowers the barrier for users to try cutting-edge AI technology. For users who want to experience QwQ-32B's strong performance but are limited by the computing power of their local hardware, calling the cloud-hosted model through the API is a very attractive option.

Recommended reading for those unfamiliar with QwQ-32B: "Small Model, Big Power: QwQ-32B Takes On the Full DeepSeek-R1 with 1/20 the Parameters"

Advantages of the API: breaking hardware limits, with powerful computing at your fingertips

We previously published "Local Deployment of the QwQ-32B Large Model: An Easy-to-Follow Guide for PCs". Running large language models such as QwQ-32B locally, however, usually requires high-performance hardware, and the requirement of 24 GB or more of video memory (VRAM) keeps many users from trying them at all. The API provided by Alibaba Cloud Bailian neatly solves this pain point.

Calling QwQ models through the API offers several advantages:

- No hardware requirements. There is no need for high-performance local hardware, which lowers the barrier to entry. Even thin-and-light laptops or smartphones can make full use of the model's capabilities in the cloud. (For local deployment, by contrast, a graphics card with 24 GB of VRAM or more is recommended for a smooth experience.)

- System compatibility. The API is OS-independent and cross-platform: whether you use Windows, macOS, or Linux, you can access it easily.

- A more powerful Plus version. Users can try the enhanced QwQ-Plus model, which performs better than the full, locally deployed QwQ-32B. QwQ-Plus is the enhanced version of Tongyi Qianwen's QwQ reasoning model, built on Qwen2.5 and trained with reinforcement learning. Compared with the base version, it shows significantly improved reasoning ability, and on core benchmarks for math and code (e.g., AIME 24/25, LiveCodeBench) as well as some general benchmarks (e.g., IFEval, LiveBench) it reaches the level of the full DeepSeek-R1 model.

- Fast responses. The API generates output at 40-50 tokens per second, giving a near-real-time interactive experience and substantially improving efficiency.

It is worth noting that, besides Alibaba Cloud Bailian, the SiliconFlow platform also provides an API for the QwQ-32B model; readers interested in SiliconFlow can refer to our previous article. This article focuses on how to use the API provided by Alibaba Cloud Bailian.

Alibaba Cloud Bailian API Access Guide: Get Started in Three Simple Steps

Alibaba Cloud Bailian gives users of the QwQ-series model APIs a free quota of 1 million tokens per day. For most users this is enough for everyday use and testing, and all that is needed to get started is a simple registration and configuration.

Below are brief steps for configuring the Alibaba Cloud Bailian QwQ-Plus API in a client:

1. Get the API Key and model name

First, visit the Alibaba Cloud Bailian platform and register or log in.

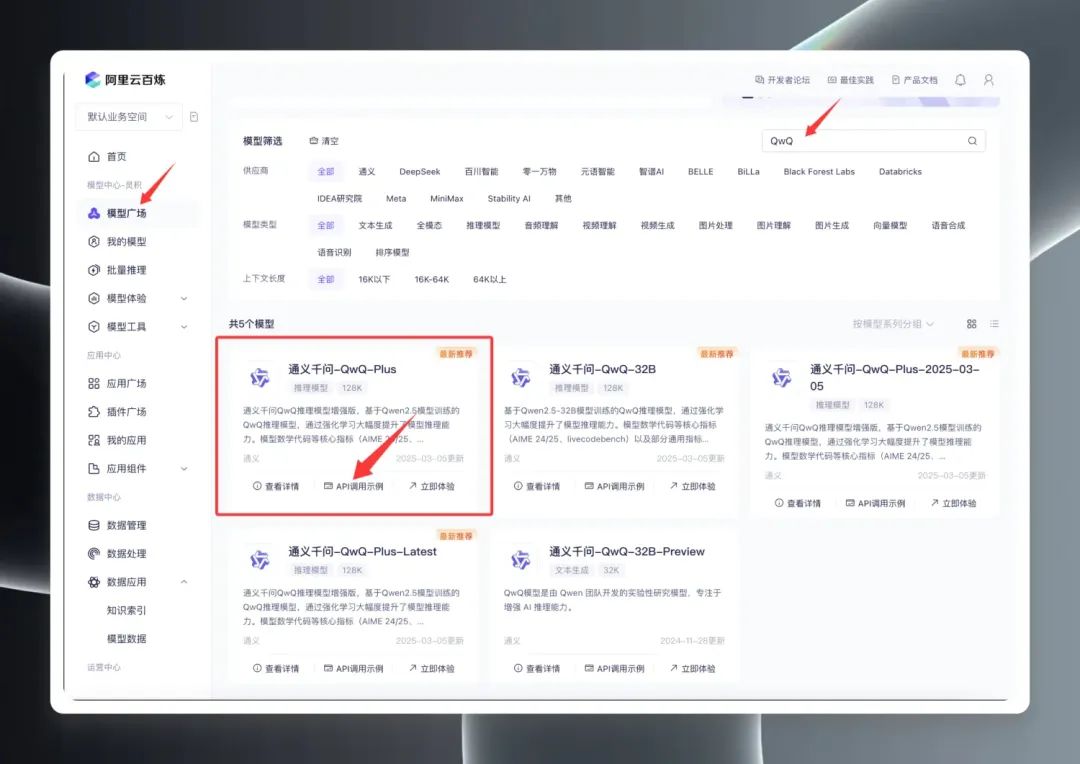

After logging in, search for "QwQ" in the Model Square to see the QwQ-series models. The Model Square lists three main versions: QwQ-32B (official release), QwQ-32B-Preview (preview), and QwQ-Plus (enhanced, also called the commercial version).

Select "QwQ-Plus (Enhanced)", click "API Call Examples", and on the page that opens, find the model name: "qwq-plus".

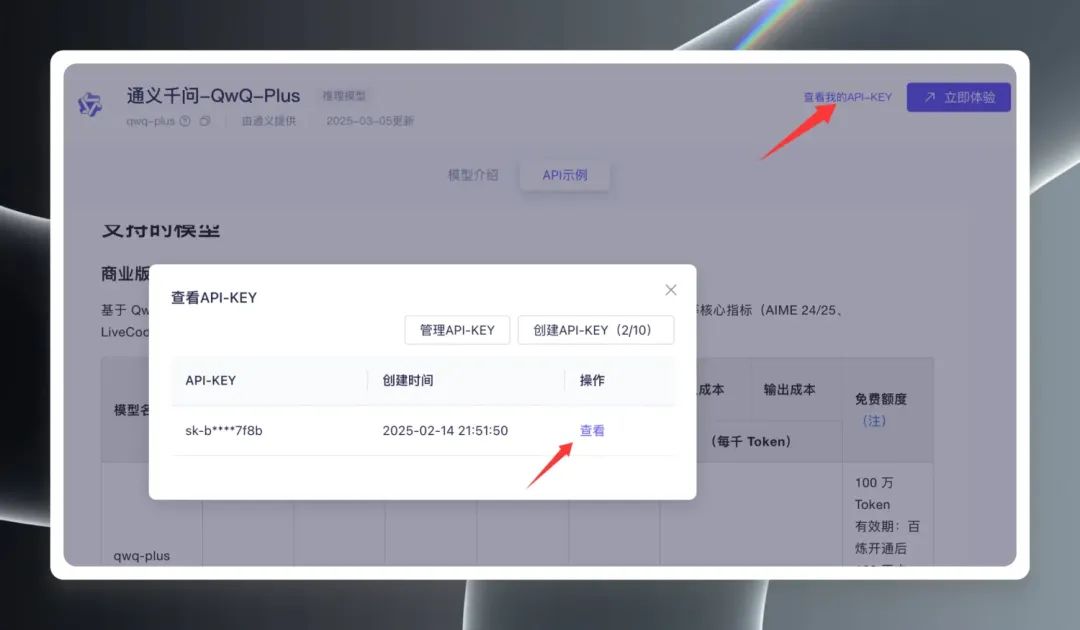

Next, click "View My API Key" in the upper-right corner of the page. If this is your first time, create an API Key; if you already have one, you can view and copy it directly.

2. Client configuration

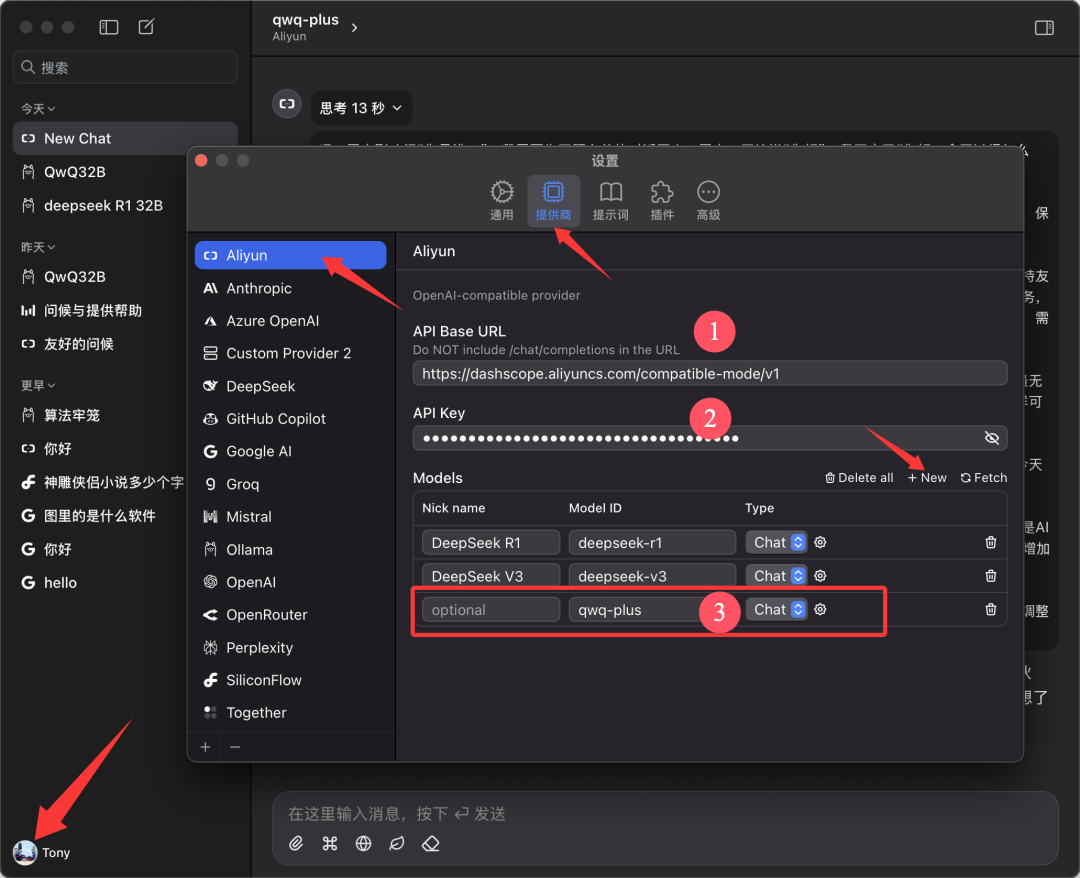

This article uses the Chatwise client as an example. Open Chatwise, click your avatar, and go to the "Settings" screen.

Find "Aliyun" in the list of providers; if it is not there, click the "➕" button at the bottom to add it.

Configure it as shown in the figure:

- API Base URL: https://bailian.aliyuncs.com (general)

- API Key: paste the API Key you copied in the previous step

- Model: add the model name qwq-plus (it must be exactly this name)

3. Starting the experience

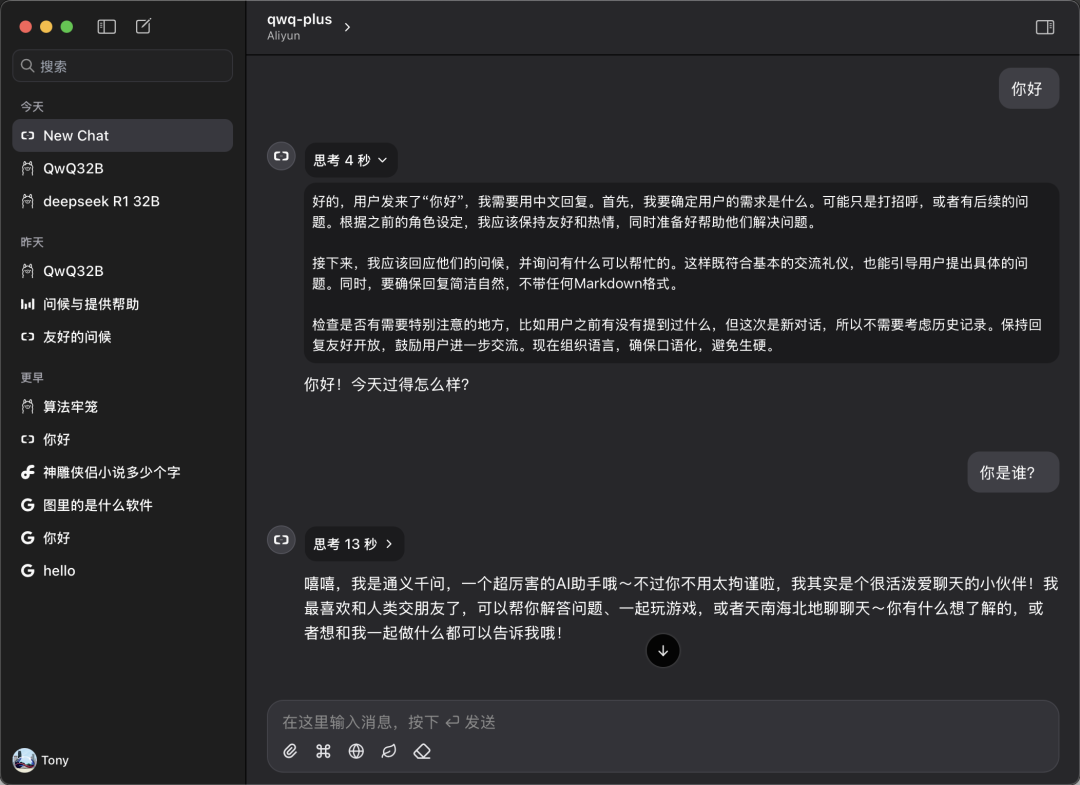

Return to the Chatwise main screen, select the "qwq-plus" model from the model drop-down menu, and start chatting.
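For readers who would rather call the API from their own scripts than through a desktop client, the sketch below sends one prompt to qwq-plus using only the Python standard library. It is a minimal sketch under several assumptions that are not from this article: the base URL https://dashscope.aliyuncs.com/compatible-mode/v1 (Alibaba Cloud's OpenAI-compatible endpoint, which may differ from the https://bailian.aliyuncs.com address entered in Chatwise), the hypothetical environment variable name DASHSCOPE_API_KEY, streaming-only output for QwQ models, and the reasoning_content field for the thinking trace. Check all of these against the current Bailian documentation.

```python
import json
import os
import urllib.request

# Assumption: Alibaba Cloud's OpenAI-compatible (DashScope) endpoint. The article
# itself lists https://bailian.aliyuncs.com for Chatwise; check the current docs.
BASE_URL = "https://dashscope.aliyuncs.com/compatible-mode/v1"
MODEL = "qwq-plus"  # model name shown on the "API Call Examples" page


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for qwq-plus."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        # Assumption: QwQ models are served in streaming mode only.
        "stream": True,
    }
    return urllib.request.Request(
        BASE_URL + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def parse_sse_lines(lines):
    """Collect (reasoning, answer) text from server-sent 'data:' lines."""
    reasoning, answer = [], []
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and comments
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            delta = choice.get("delta") or {}
            # Assumption: the thinking trace arrives in 'reasoning_content'.
            if delta.get("reasoning_content"):
                reasoning.append(delta["reasoning_content"])
            if delta.get("content"):
                answer.append(delta["content"])
    return "".join(reasoning), "".join(answer)


def ask(prompt: str):
    """Send one prompt and return (reasoning, answer)."""
    api_key = os.environ["DASHSCOPE_API_KEY"]  # hypothetical env var name
    with urllib.request.urlopen(build_request(prompt, api_key)) as resp:
        return parse_sse_lines(resp)  # HTTPResponse iterates line by line


if __name__ == "__main__" and os.environ.get("DASHSCOPE_API_KEY"):
    thinking, reply = ask("What is 17 * 23?")
    print(reply)
```

Keeping the stream parsing separate from the network call makes it easy to test against recorded response lines; pointing an OpenAI SDK client at the same base URL would be an equally reasonable design.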

Real-world performance: comparable to or better than local deployment

To check how the QwQ-Plus API performs in practice, we ran a simple comparison test.

Speed test:

In our measurements, the QwQ-Plus API was very fast, holding steady at 40-50 tokens per second. By comparison, the DeepSeek-R1 API was noticeably slower, at just over 10 tokens per second.
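The article does not say how these rates were measured. As one possible approach, the sketch below estimates a decode rate from any stream of text chunks, such as the content pieces of a streaming API response. It assumes, by default, one token per chunk, which is only an approximation; for exact figures, pass a real token counter or use the token usage the API reports. The function name decode_rate is hypothetical.

```python
import time
from typing import Callable, Iterable


def decode_rate(
    chunks: Iterable[str],
    count_tokens: Callable[[str], int] = lambda _chunk: 1,
    clock: Callable[[], float] = time.perf_counter,
) -> float:
    """Estimate tokens/second from a stream of text chunks.

    Timing starts when the first chunk arrives, so time-to-first-token
    (queueing, prompt processing) is excluded, and only tokens that arrive
    after the first chunk are counted against the elapsed interval.
    """
    first_time = last_time = None
    first_tokens = total_tokens = 0
    for chunk in chunks:
        now = clock()  # timestamp taken as each chunk is received
        n = count_tokens(chunk)
        if first_time is None:
            first_time, first_tokens = now, n
        last_time = now
        total_tokens += n
    if first_time is None or last_time == first_time:
        return 0.0  # fewer than two chunks: no interval to measure
    return (total_tokens - first_tokens) / (last_time - first_time)
```

Excluding the first chunk separates generation speed from startup latency, which matters when comparing services whose queueing delays differ.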

Compatibility testing:

The QwQ-Plus API can also be configured in other clients such as Cherry Studio, but during testing we observed a potential issue with Cherry Studio: when the model performs long, complex reasoning, Cherry Studio may consume a large amount of system resources, and on some device configurations the software restarted. Using the Chatwise client on the same hardware did not show similar problems. This may be due to differences in the development frameworks of the two clients.

Capability comparison:

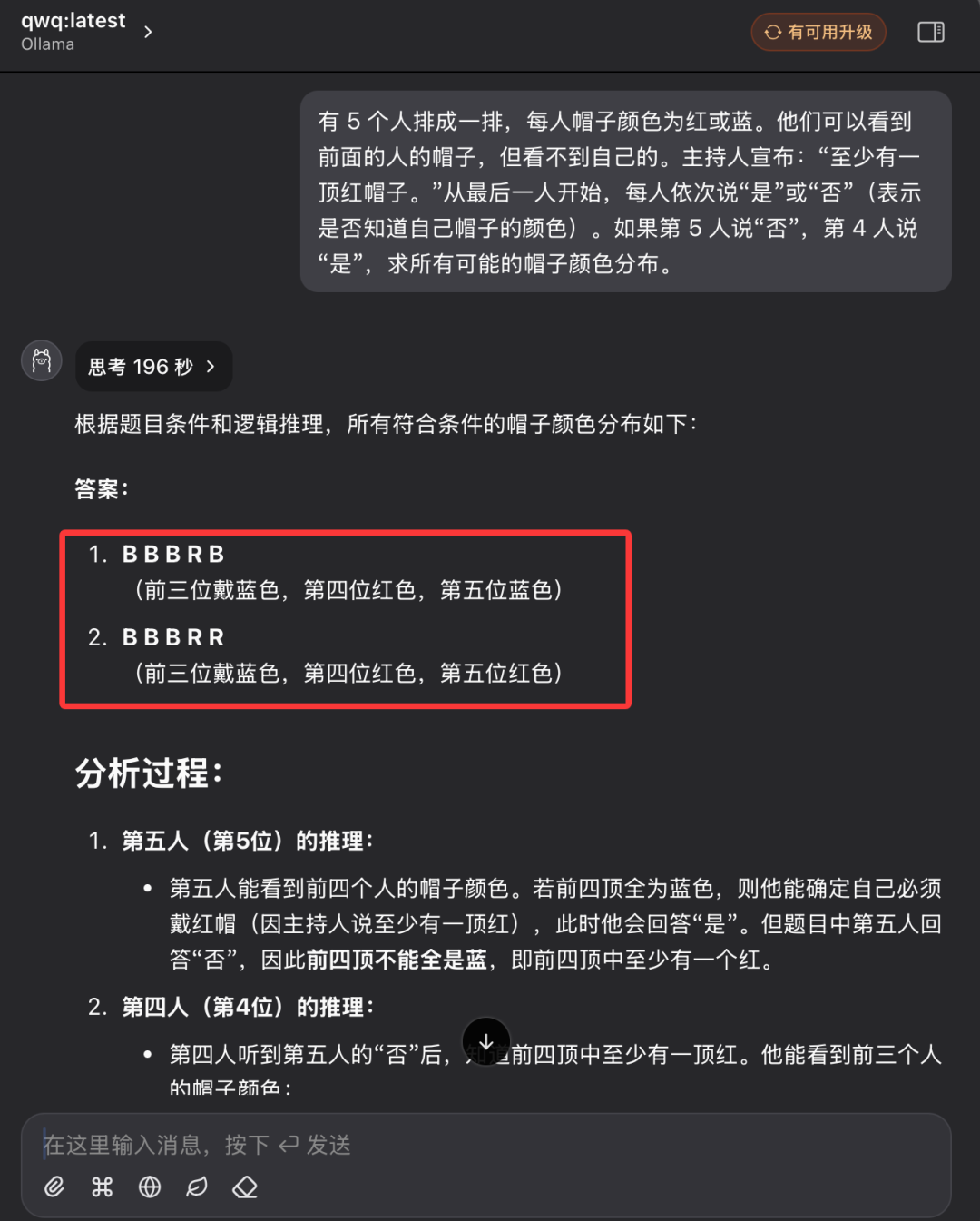

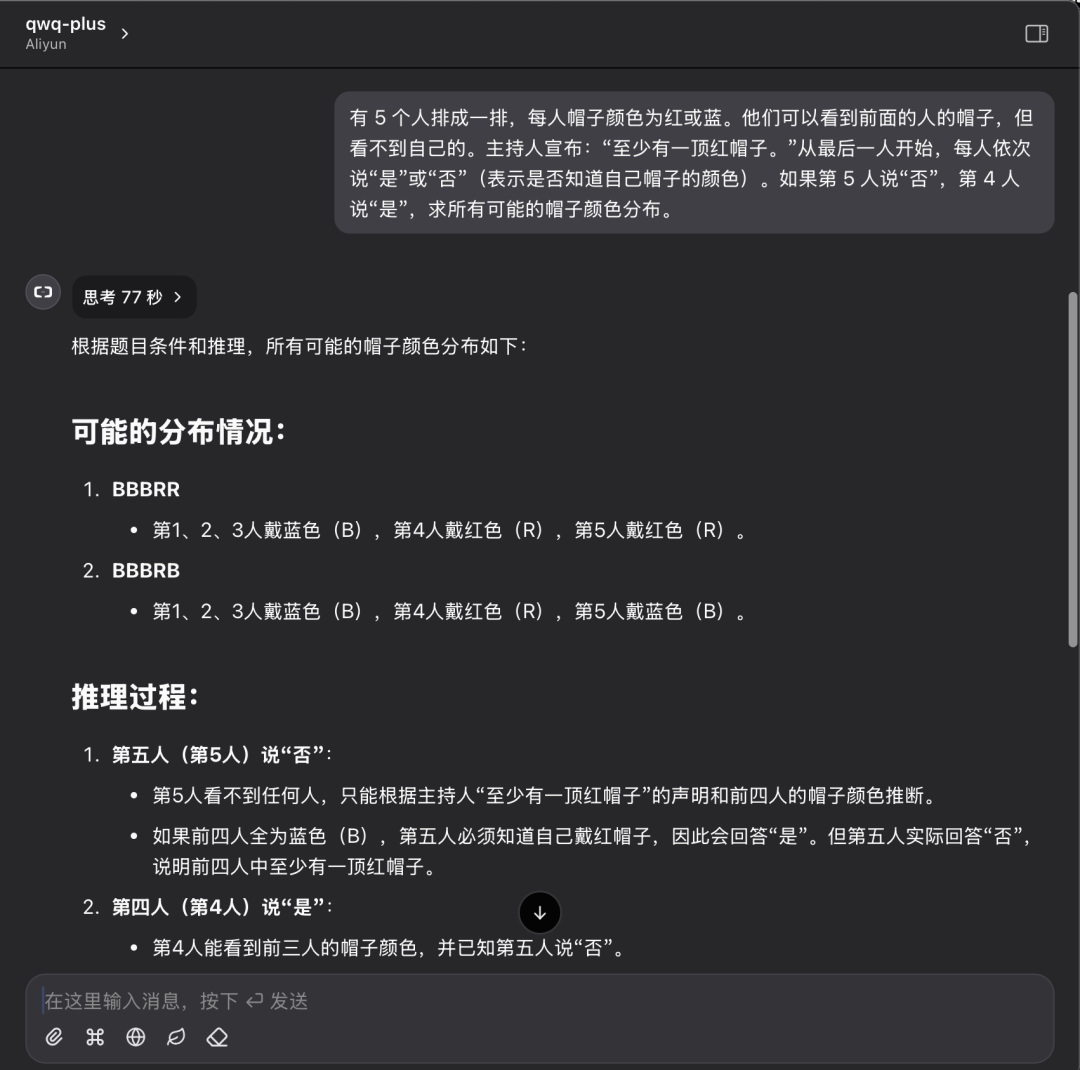

Using the hat-color logic puzzle from our previous article, we compared the local QwQ-32B model with the QwQ-Plus API.

Problem Description:

Five people stand in a line, each wearing a red or blue hat. Each person can see the hats of everyone in front of them, but not their own. The host announces: "There is at least one red hat." Starting from the person at the back, each person in turn says "yes" or "no" (indicating whether they know the color of their own hat). If the fifth person says "no" and the fourth person says "yes", find all possible distributions of hat colors.
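To make the reasoning behind this puzzle concrete, the brute-force sketch below enumerates every hat assignment and filters it by the public announcements. It rests on one reading of the problem that the article does not spell out: people are numbered from the front, so the fifth person is at the back and sees all four others; everyone reasons perfectly; and each answer is heard by everyone. This is an independent check under those assumptions, not the answer given by either model.

```python
from itertools import product

N = 5  # index 0 = front of the line, index 4 = last person (the "fifth")


def knows(i, world, candidates):
    """Can person i deduce their own hat in `world`, given the public candidates?

    Person i sees hats world[:i] (everyone in front). They know their color
    iff every still-possible world matching what they see agrees on hat i.
    """
    seen = world[:i]
    colors = {w[i] for w in candidates if w[:i] == seen}
    return len(colors) == 1


def solve(answers):
    """Filter hat assignments by a sequence of public (person, said_yes) answers."""
    # Host's announcement: at least one red hat.
    candidates = [w for w in product("RB", repeat=N) if "R" in w]
    for person, said_yes in answers:
        current = candidates
        candidates = [w for w in current if knows(person, w, current) == said_yes]
    return ["".join(w) for w in candidates]


# Fifth person (index 4) says "no", then fourth person (index 3) says "yes".
print(solve([(4, False), (3, True)]))
```

Under this reading it prints ['BBBRR', 'BBBRB']: the fifth person's "no" reveals a red hat among the first four, and the fourth person can only say "yes" if the three hats in front are all blue, so the first three wear blue, the fourth wears red, and the fifth may wear either color.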

Local QwQ-32B model performance:

The local QwQ-32B model answered correctly on its second attempt; the second attempt took 196 seconds.

QwQ-Plus API performance:

On the same question, the QwQ-Plus API answered correctly on the first attempt, in 77 seconds.

Analysis of test results:

Although a single case is not enough to fully evaluate model capability, this test gives an intuitive picture of the difference between a locally deployed model and the cloud API. Both solutions arrived at the correct answer, but the QwQ-Plus API did better in efficiency and in the clarity of its reasoning, with a shorter reasoning time and lower token consumption.

Embracing Cloud AI for Everyone

By opening the QwQ-32B API for free and providing a generous daily token quota, Alibaba Cloud Bailian has taken an important step toward making large language model technology widely accessible. Through the API, users can easily experience high-performance AI models in the cloud without investing in expensive hardware. Whether you are a developer, a researcher, or an AI enthusiast, you can now take full advantage of the free resources offered by Alibaba Cloud Bailian to start exploring AI.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
