AI College of Engineering: 1. Tip Engineering

🚀 Tip Engineering

A key skill in the era of generative AI is Prompt Engineering, the art and science of designing effective instructions to guide language models in generating desired outputs. This emerging discipline involves designing and optimizing prompts to elicit specific responses from AI models, particularly Large Language Models LLMs, shaping the way we interact with AI and harness its power, according to DataCamp.

📚 Warehouse structure

| Name of the document | descriptive |

|---|---|

| Basic Cue Design | An introduction to the concepts and structures of basic cue engineering. |

| Advanced Cue Design | Advanced tips for optimizing and structuring output. |

| Advanced Cue Design Hands-on | A practical guide to applying advanced cue design techniques. |

| Understanding the OpenAI API | An overview of prompting using the OpenAI API. |

| Function Calls in LLM | Notebook that demonstrates the function call functionality of the language model. |

| Integrated Tips Engineering Notebook | Jupyter Notebook covering a wide range of prompt design techniques. |

🎯 Cue Design Briefs

Prompting is the process of providing an AI model with specific instructions or inputs to trigger a desired output or behavior. It is an important interface between humans and AI systems, allowing the user to effectively guide the model's response. In the context of Large Language Models (LLMs), prompting can be extended from simple queries to complex sets of instructions, including context and style guides.

Key aspects of prompt design include:

- Versatility: depending on the AI model and task, prompts can be in textual, visual or auditory form

- Specificity: well-designed tips provide precise details to generate more accurate and relevant outputs

- Iterative optimization: cue design typically involves interpreting the model's response and adjusting subsequent cues for better results

- Application diversity: hints are used in various areas such as text generation, image recognition, data analytics and conversational AI

🌟 Tips on the importance of engineering

- Improve AI performance: well-designed cue words can significantly improve the quality and relevance of AI-generated output. By providing clear instructions and context, cue engineering enables models to generate more accurate, coherent and useful responses.

- Customization and Flexibility: Cue Engineering allows users to tailor AI responses to specific needs and domains without the need for extensive model retraining. This flexibility allows AI systems to better adapt to diverse applications in a variety of industries.

- Bias mitigation: bias in AI output can be reduced by crafting cue words that can guide the model to consider multiple perspectives or focus on specific unbiased sources of information.

- Improving the user experience: effective cue engineering improves the user experience by bridging the gap between human intent and machine understanding, making AI tools more accessible and user-friendly [4].

- Cost efficiency: optimizing cue words allows for more efficient use of computational resources and reduces the need for larger, more expensive models to achieve desired results.

- Rapid Prototyping and Iteration: Cue Engineering supports rapid experimentation and optimization of AI applications, accelerating development cycles and innovation.

- Ethical considerations: Thoughtful prompt design helps ensure that AI systems follow ethical guidelines and generate appropriate content for different contexts and audiences.

- Scalability: Once effective cue words are developed, they can be easily scaled across the organization for consistent and high-quality AI interactions.

- Cross-disciplinary applications: Cue Engineering connects technical and domain expertise, allowing subject matter experts to leverage AI capabilities without in-depth technical knowledge.

Tip Engineering

- Introduction to cue engineering: a foundational overview of cue engineering concepts, including basic principles, structured cues, comparative analysis, and problem-solving applications.

- Basic Prompt Structures: explores single- and multi-round prompts, showing how to create simple prompts and engage in dialog with AI models.

- Hint Templates and Variables: An introduction to the use of templates and variables in hints, with a focus on creating flexible and reusable hint structures with tools such as Jinja2.

- Zero Sample Prompting: demonstrates how AI models can be guided to complete tasks without specific examples through direct task specification and role-based prompting techniques.

- Sample Less Learning and Contextual Learning: covers techniques for improving performance on specific tasks without the need for fine-tuning by providing a small number of examples to guide the AI response.

- Chain Thinking (CoT) Tip: Encourage AI models to break down complex problems into step-by-step reasoning processes to improve problem solving.

- Self-consistency and multipath reasoning: exploring methods for generating diverse reasoning paths and aggregating results to improve the accuracy and reliability of AI outputs.

- Constrained and Guided Generation: focuses on setting constraints on model outputs and implementing rule-based generation to control and guide the AI's response.

- Role Hints: demonstrates how to assign specific roles to AI models and design effective role descriptions to elicit desired behaviors or expertise.

- Task Breakdown Prompts: Explore techniques for breaking down complex tasks into smaller, manageable subtasks in prompts.

- Cue Chains and Sequences: demonstrates how to connect multiple cues in a logical flow to handle complex multi-step tasks.

- Directive Engineering: Focuses on designing clear and efficient directives for language models, balancing specificity and generality to optimize performance.

- Cue Optimization Techniques: Covers advanced methods for improving cues, including A/B testing and iterative optimization based on performance metrics.

- Dealing with ambiguity and improving clarity: exploring techniques for recognizing and resolving ambiguous prompts, and strategies for writing clearer, more effective prompts.

- Prompt length and complexity management: explore strategies for managing long or complex prompts, including chunking and summarization techniques.

- Negative Cues and Avoiding Undesired Outputs: shows how to use negative examples and constraints to steer the model away from unwanted responses.

- Cue Formats and Structures: investigate various cue formats and structural elements that optimize AI model responses.

- Task-specific prompts: focus on designing prompts for specific tasks such as summarizing, quizzing, code generation and creative writing.

- Multilingual and Cross-Language Prompts: Explore prompt design techniques that work effectively across multiple languages, as well as prompt design for translation tasks.

- Ethical considerations in cue engineering: focusing on the ethical dimensions of avoiding bias and creating inclusive cues.

- Prompt Security and Safety Measures: Covers techniques to prevent prompt injection and implement content filtering to ensure the safety of AI applications.

- Cue validity assessment: explore methods for assessing and measuring cue validity, including manual and automated assessment techniques.

Basic Cueing Tips

Cue engineering encompasses a range of techniques designed to optimize interactions with AI models. These basic methods form the basis for more advanced strategies and are essential skills for anyone wishing to fully utilize the potential of generative AI tools.

Zero-sample hints are the simplest technique, i.e., asking instructions or questions directly to the AI without additional context or examples [1][2]. This approach is suitable for simple tasks or for getting answers quickly. For example, asking "Where is the capital of France?" is a zero-sample prompt.

The single-sample cue adds an example to the zero-sample cue to guide the AI's response [3]. This technique is particularly useful when dealing with specific formats or styles. For example, to generate a product description, an example description could be provided and then asked to generate another description for a different product.

The sample less cue extends this concept further by providing multiple examples to the AI [2]. This approach is particularly effective for complex tasks or when output consistency is required. By providing multiple examples of the desired output format or style, the AI can better understand and replicate patterns.

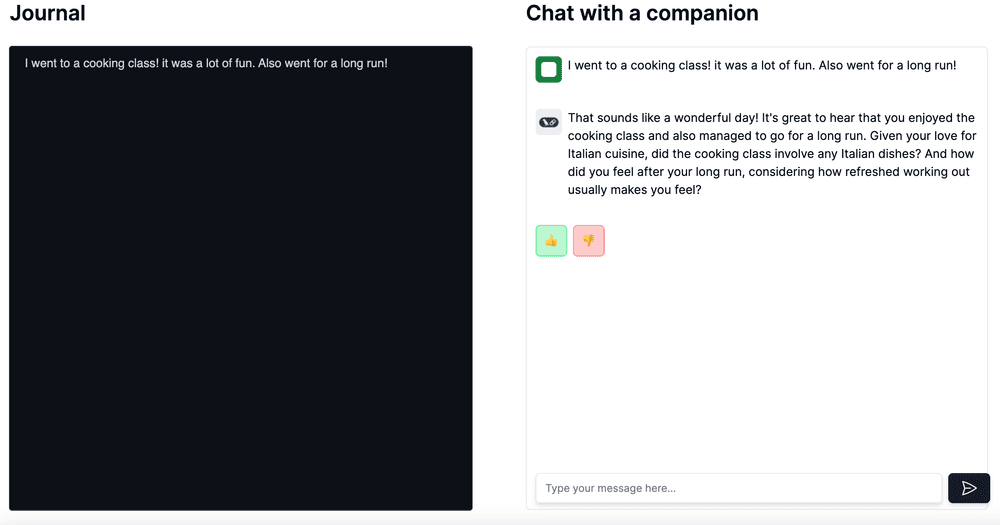

Role-based prompts significantly influence the tone and content of an AI's response by assigning it a specific role or identity [1]. For example, instructing the AI to "play technical support specialist" before asking a question can result in a more technical and supportive response.

Prompt reframing is a technique for guiding the AI to provide a different perspective or a more nuanced answer by rewording or reorganizing the prompt [3]. This approach is particularly useful when initial results are unsatisfactory or when multiple aspects of a topic need to be explored. For example, instead of asking "What are the benefits of renewable energy?" , it could be reframed as "How does renewable energy affect different sectors of the economy?"

Prompt combination combines multiple instructions or questions into one comprehensive prompt [3]. This technique is valuable for obtaining multiple responses or dealing with complex questions. For example, combining "Explain the difference between shared hosting and VPS hosting" and "Recommend which is more suitable for small e-commerce websites" into a single prompt results in a more comprehensive and customized response.

These fundamental techniques provide a solid foundation for effective cue engineering, enabling users to guide AI models to generate more accurate, relevant, and useful outputs across a wide range of applications.

Advanced Cueing Strategy

Advanced Hints engineering techniques utilize more sophisticated methods to enhance the performance and capabilities of large language models. These methods go beyond basic hints and aim to achieve more complex reasoning and task-specific outcomes.

Coherent Thinking (CoT) Prompts

This technique improves model performance in complex reasoning tasks by providing a series of intermediate reasoning stages. Coherent thinking cues enable the language model to break down complex problems into smaller, more manageable steps that produce more accurate and logical output.

self-compatibility

As an enhancement to CoT, the self-consistency approach involves sampling multiple reasoning paths and selecting the most consistent answer. This approach is particularly effective in problems where multiple valid solutions exist, as it allows the model to explore multiple reasoning strategies before determining the most reliable outcome.

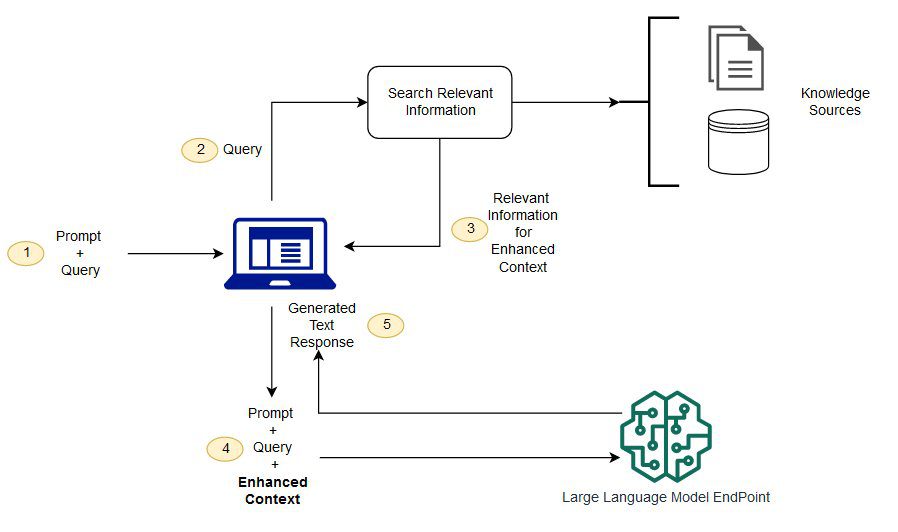

ReAct

ReAct technique combines reasoning and action, with the model generating both the reasoning process and the task-specific actions. This approach enables AI to dynamically plan, execute, and adapt its approach, and is ideally suited for complex problem-solving scenarios that require interaction with external sources of information.

Multimodal Cue Engineering

This advanced technique involves designing cues that incorporate multiple input types, such as text, images, and audio. By utilizing diverse data types, multimodal cues make AI interactions more comprehensive and contextually relevant, mimicking human perception and communication capabilities.

Real-time alert optimization

This emerging technology provides immediate feedback on the validity of prompts, assessing the clarity, potential bias, and alignment of the prompts with the desired outcome. Such real-time guidance simplifies the process of prompt authoring for both novice and experienced users.

Proactive tips

This dynamic approach allows cues to be adapted based on user interaction and feedback. Proactive cues allow the AI model to adapt its responses in real time, thereby increasing the relevance and validity of the output throughout the interaction.

Knowledge Generation Tips

This technique utilizes language models to generate relevant knowledge prior to answering a question, enhancing their common sense reasoning. By generating relevant information first and then incorporating it into the answer, the model can provide a more insightful and contextualized response.

These advanced techniques represent the cutting edge of cue engineering and provide powerful tools for optimizing AI performance across a wide range of application domains and problem areas.

logical thinking

Coherent Thinking (CoT) reasoning is an advanced prompt engineering technique designed to enhance the reasoning capabilities of large language models. This method involves generating multiple paths of reasoning for a given problem and selecting the most consistent or comprehensive solution. By performing CoT reasoning multiple times, cueing engineers can enhance a model's ability to solve problems that require logical thinking and multiple steps.

Key points of CoT reasoning

- autocomplete decoding: Select the most common conclusions in multiple reasoning.

- Complexity-based tips: Select the result with the longest inference chain.

- human intervention: Correct the inference chain with the help of manual correction when the inference results are significantly inconsistent.

- Improve Accuracy: Improving model performance in tasks involving reasoning, computation, and decision making.

By utilizing CoT reasoning, cue engineers can create more robust and reliable AI systems capable of handling complex problems in a variety of domains.

Example of a ReAct prompt

The ReAct (Reasoning and Acting) prompting method is a high-level technique that combines reasoning processes and task-specific actions to enhance the problem solving capabilities of Large Language Models (LLMs). This approach enables LLMs to alternately generate linguistic reasoning steps and perform actions, leading to more efficient and dynamic problem solving.

A typical ReAct tip

- Thought: The reasoning process of the model.

- Action: A specific task or query that needs to be performed.

- Observation: The results or information obtained from an action.

typical example

concern: What is the capital of the country where the Eiffel Tower is located?

- reflections: To answer this question, I would need to find out where the Eiffel Tower is located and then determine the capital of that country.

- act: Search for "Location of the Eiffel Tower".

- heed: The Eiffel Tower is located in Paris, France.

- reflections: Now that I know the Eiffel Tower is in France, I need to look up the French capital.

- actSearch for "capital of France".

- heed: The capital of France is Paris.

- reflections: I have all the information I need to answer this question.

- act: Provide answers.

- solution: The capital of the country where the Eiffel Tower is located is Paris, France.

Application of the ReAct prompt

- question and answer: Enhance the ability of models to decompose complex problems and obtain relevant information.

- Task automation: Guide the model through a multi-step process by combining reasoning and action.

- Interacting with external knowledge bases: Enables the model to retrieve and incorporate additional information to support its reasoning.

- Decision-making: Improve the ability of models to evaluate options and make informed choices based on available data.

By implementing the ReAct prompt, developers and researchers can create more robust and adaptable AI systems that are capable of complex reasoning tasks and realistic problem-solving scenarios.

targeted stimulus cueing

Directional Stimulus Prompting (DSP) is an innovative framework for guiding Large Language Models (LLMs) to generate specific desired outputs. The technique utilizes a small, tunable strategy model to generate auxiliary directional stimulus prompts for each input instance, which serve as nuanced cues to guide LLMs in generating desired outcomes.

Key Features of DSP

- Generation of oriented stimuli using a small, adjustable strategy model (e.g., T5).

- Optimization through supervised fine-tuning and intensive learning.

- Can be applied to a variety of tasks, including summary generation, dialog response generation, and chained reasoning.

- Significantly improve performance with limited labeled data, e.g., with only 80 conversations used, the ChatGPT The performance on the MultiWOZ dataset is improved by 41.41 TP3T.

By using the DSP, researchers and practitioners can enhance the capabilities of the LLM without directly modifying its parameters, providing a flexible and efficient approach to cue engineering.

Knowledge Generation Hints

Generated Knowledge Prompting (GKP) improves the performance of AI models by first asking the model to generate relevant facts before answering a question or completing a task. This two-step process involves knowledge generation (where the model generates relevant information) and knowledge integration (where this information is used to form more accurate and contextualized responses).

Key Benefits

- Improve the accuracy and reliability of AI-generated content.

- Enhance contextual understanding of the given topic.

- Ability to anchor responses to factual information.

- Knowledge can be further expanded by incorporating external sources such as APIs or databases.

By prompting the model to consider relevant facts first, the Knowledge Generation Prompting Method helps to create more informative and plausible outputs, especially for complex tasks or when dealing with specialized topics.

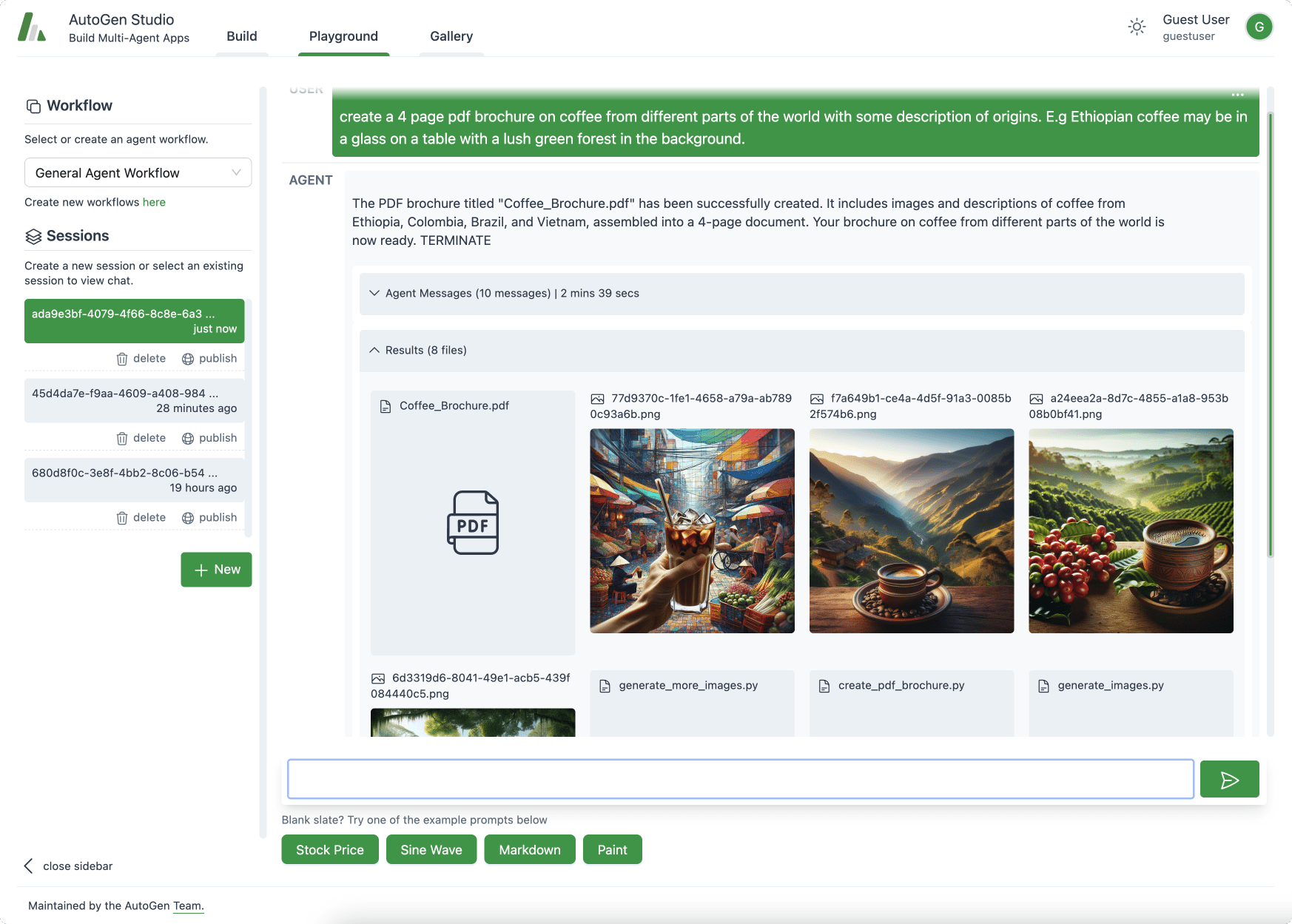

Hints for hands-on engineering skills

This Tips Engineering Technical Guide is based entirely on NirDiamant A repository of excellent practices. All content and Jupyter notebook is copyrighted to the original repository:Prompt EngineeringThe

I'm adding this here because it's a great learning resource, full copyright Prompt Engineering Warehouse.

🌱 Basic concepts

- Tip Project Introduction

Overview 🔎

A detailed introduction to the foundational concepts of AI and language modeling cue engineering.

Realization 🛠️

Combines theoretical explanations with hands-on demonstrations covering foundational concepts, structured prompts, comparative analysis, and problem-solving applications.

- Basic cueing structure

Overview 🔎

Explore two basic cueing structures: single-round cues and multiple-round cues (conversations).

Realization 🛠️

Demonstration of single- and multi-round prompts, prompt templates, and dialog chains using OpenAI's GPT model and LangChain.

- Tip Templates and Variables

Overview 🔎

Describes how to create and use prompt templates with variables using Python and the Jinja2 template engine.

Realization 🛠️

Involves template creation, variable insertion, conditional content, list processing, and integration with the OpenAI API.

🔧 Core technologies

- Zero Sample Tip

Overview 🔎

Exploring zero-sample cues to enable language models to accomplish tasks without specific examples or prior training.

Realization 🛠️

Demonstrates direct task specification, role-based prompting, format specification, and multi-step inference using OpenAI and LangChain.

- Sample less learning vs. contextual learning

Overview 🔎

Explore sample less learning and contextual learning techniques with OpenAI's GPT models and the LangChain library.

Realization 🛠️

Best practices for implementing basic and advanced less-than-sample learning, contextual learning, and example selection and evaluation.

- Chain Thinking (CoT) Tips

Overview 🔎

Introducing Chain Thinking (CoT) hints to guide AI models in breaking down complex problems into step-by-step reasoning processes.

Realization 🛠️

Covers basic and advanced CoT techniques, applies them to a variety of problem-solving scenarios, and compares them to standard prompts.

🔍 Advanced Strategy

- Self-Consistency and Multipath Reasoning

Overview 🔎

Explore techniques for generating diverse inference paths and aggregating results to improve the quality of answers generated by AI.

Realization 🛠️

Demonstrate designing diverse inference prompts, generating multiple responses, implementing aggregation methods, and applying self-consistency checks.

- Constrained and bootstrap generation

Overview 🔎

Focus on techniques for setting constraints on model outputs and implementing rule-based generation.

Realization 🛠️

Use LangChain's PromptTemplate to create structured prompts, enforce constraints and explore rule-based generation techniques.

- Character Tips

Overview 🔎

Explore techniques for assigning specific roles to AI models and designing effective role descriptions.

Realization 🛠️

Demonstrate the creation of role-based prompts, assigning roles to AI models and optimizing role descriptions for various scenarios.

🚀 Advanced Realization

- Task Breakdown Tips

Overview 🔎

Explore techniques for breaking down complex tasks and linking subtasks in prompts.

Realization 🛠️

Involves problem analysis, subtask definition, goal cue engineering, sequential execution, and synthesis of results.

- Cue Chaining and Serialization

Overview 🔎

Demonstrate how to connect multiple prompts and build logical flows for complex AI-driven tasks.

Realization 🛠️

Explore basic cue chains, sequential cues, dynamic cue generation, and error handling in cue chains.

- command engineering

Overview 🔎

Focus on designing clear and effective instructions for language models, balancing specificity and generality.

Realization 🛠️

Involves creating and optimizing instructions, trying out different structures, and making iterative improvements based on model responses.

🎨 Optimization and Improvement

- Cue optimization techniques

Overview 🔎

Explore advanced techniques for optimizing prompts with a focus on A/B testing and iterative improvement.

Realization 🛠️

Demonstrate a prompt A/B testing, iterative improvement process, and performance evaluation using relevant metrics.

- Handling ambiguity and improving clarity

Overview 🔎

Focuses on techniques for recognizing and resolving ambiguous prompts and writing clearer prompts.

Realization 🛠️

Includes analyzing ambiguous prompts, implementing strategies for resolving ambiguity, and exploring techniques for writing clearer prompts.

- Cue length and complexity management

Overview 🔎

Explore techniques for managing prompt length and complexity when using large language models.

Realization 🛠️

Demonstrate techniques for balancing detail with brevity and strategies for dealing with long contexts, including chunking, summarization, and iterative processing.

🛠️ Specialized applications

- Negative Cues and Avoiding Unexpected Output

Overview 🔎

Exploring negative cues and techniques for avoiding undesired output from large language models.

Realization 🛠️

Includes examples of base negatives, explicit exclusions, implementing constraints using LangChain, and ways to evaluate and improve negative cues.

- Cue Formatting and Structure

Overview 🔎

Explore various cue formats and structural elements and demonstrate their impact on AI model responses.

Realization 🛠️

Demonstrate creating multiple cue formats, integrating structural elements, and comparing responses to different cue structures.

- Task-specific tips

Overview 🔎

Explore the creation and use of prompts for specific tasks such as text summarization, quizzes, code generation, and creative writing.

Realization 🛠️

This includes designing task-specific prompt templates, implementing them using LangChain, executing them with sample inputs, and analyzing the output for each task type.

🌍 Advanced Applications

- Multilingual and cross-language tips

Overview 🔎

Exploring techniques for designing effective prompts for multilingual and language translation tasks.

Realization 🛠️

This includes creating multilingual prompts, implementing language detection and adaptation, designing cross-language translation prompts, and handling various writing systems and character sets.

- Ethical Considerations in Cue Engineering

Overview 🔎

Explore the ethical dimensions of prompt engineering, focusing on avoiding bias and creating inclusive and equitable prompts.

Realization 🛠️

Includes methods for identifying bias in prompts, implementing strategies for creating inclusive prompts, and assessing and improving the ethical quality of AI output.

- Tips Safety and Security

Overview 🔎

Highlights how to prevent prompt injection and implement content filtering in prompts to secure AI applications.

Realization 🛠️

Includes techniques to prevent prompt injection, content filtering implementations, and testing the effectiveness of security measures.

- Evaluating the effectiveness of prompts

Overview 🔎

Explore methods and techniques for assessing the validity of AI language modeling cues.

Understanding the Large Language Model (LLM) API

Let's discuss the Large Language Model (LLM) API.

There are a couple of ways to use the API, one is to send a direct HTTP request, and the other is to install the official OpenAI package via pip, which is always up to date with the latest features.

pip install openai

Because this API format is so popular, many other providers offer APIs in the same format, which is called "OpenAI compliant".

List of vendors offering OpenAI-compatible APIs

Below is a list of vendors that are compatible with the OpenAI API, and developers can utilize these services in a similar way to what OpenAI provides:

- Groq

- Groq Most of the APIs provided are compatible with OpenAI's client libraries. Users only need to change the

base_urland use Groq's API key to configure their applications to run on Groq.

- Groq Most of the APIs provided are compatible with OpenAI's client libraries. Users only need to change the

- Mistral AI

- Mistral Provides an API that supports OpenAI-compliant requests, allowing developers to access various models through this service.

- Hugging Face

- Hugging Face provides access to many models via an API that is configured in a similar way to OpenAI's API. Hugging Face is known for its large library of models.

- Google Vertex AI

- Google Vertex AI allows users to interact with large language models in a manner consistent with the OpenAI API.

- Microsoft Azure OpenAI

- Microsoft provides access to OpenAI models through its Azure platform for easy integration with existing Azure services.

- Anthropic

- Anthropic models can also be accessed through an API that mimics the structure of OpenAI, allowing for similar interactions.

These providers enable developers to take advantage of different AI capabilities while maintaining compatibility with the familiar OpenAI API structure for easier integration into applications and workflows.

There are also some providers that use different API architectures and types, but we can use something like the litellm Such a client library provides a unified client package for different types of large language models.

In addition, the likes of Portkey Such a gateway provides OpenAI-compatible APIs for any large language model.

In this article, you will learn about the different parameters in the API.

Key parameters

Temperature

- descriptiveThe : Temperature parameter controls the randomness of the model output, ranging from 0 to 2.

- affect (usually adversely):

- Low value (0-0.3): The output is more deterministic and focused, and usually repeats similar responses.

- Medium (0.4-0.7):: Strike a balance between creativity and coherence.

- High value (0.8-2): Generate more diverse and creative output, but may produce meaningless results.

- typical example:

response = openai.ChatCompletion.create( model="gpt-3.5-turbo", messages=[{"role": "user", "content": "完成句子:'幸福的关键是'。"}], temperature=1 )

Top_p

- descriptiveThis parameter enables nucleus sampling, where the model considers only the highest

pProbabilistic quality of the output. - realm: From 0 to 1, lower values limit the output to the more likely Token.

- typical example:

response = openai.ChatCompletion.create( model="gpt-3.5-turbo", messages=[{"role": "user", "content": "写一首诗。"}], top_p=0.9 )

Max Tokens

- descriptive: Define the maximum number of characters in the generated response Token Quantity (words or parts of words).

- default value: For most models, this is usually set to a maximum of 4096 Token.

- typical example:

response = openai.ChatCompletion.create( model="gpt-3.5-turbo", messages=[{"role": "user", "content": "讲一个故事。"}], max_tokens=150 )

Function Calling

- descriptive: This feature allows the model to interact with external APIs or services by calling predefined functions based on user input.

- typical example:

functions = [ { "name": "get_current_weather", "description": "获取某地的当前天气。", "parameters": { "type": "object", "properties": { "location": {"type": "string"}, "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]} }, "required": ["location"] } } ] response = openai.ChatCompletion.create( model="gpt-3.5-turbo", messages=[{"role": "user", "content": "纽约的天气如何?"}], functions=functions, function_call={"name": "get_current_weather"} )

Roles in API Calls

Understanding the roles involved in an API call helps structure the interaction effectively.

System Role

- goal: Provide high-level instructions or context that guide the model's behavior throughout the conversation.

- usage: Set at the beginning of the message array to establish the tone or rules of the interaction.

- typical example::

messages = [ {"role": "system", "content": "You are a helpful assistant."}, {"role": "user", "content": "What can you do?"} ]

user role

- goal: Represents input from a human user, guiding a dialog through queries or prompts.

- usage: Most commonly used in interactions to ask questions or provide statements.

- typical example::

{"role": "user", "content": "Can you explain how OpenAI works?"}

Assistant Role

- goal: represents the response generated by the model based on user inputs and system commands.

- usage: When responding to a user query, the model automatically assumes this role.

- typical example::

{"role": "assistant", "content": "OpenAI uses advanced machine learning techniques to generate text."}

Other parameters

stream (computing)

- descriptive: If set to true, allows streaming when generating partial responses, for real-time applications.

Logprobs

- descriptive: Returns the logarithmic probability of the Token's prediction, which is used to understand the model's behavior and improve the output.

summarize

The OpenAI API provides a powerful set of parameters and roles that developers can utilize to create highly interactive applications. By adjusting parameters such as temperature and effectively utilizing structured roles, users can tailor responses to specific needs while ensuring clarity and control of the conversation.

Below are examples of how to use the APIs of various providers that are compatible with OpenAI, as well as application examples of the Lightweight Large Language Model (LLM).

Example of using a compatible provider's OpenAI API

1. Groq

To use Groq's API, you need to set the base URL and provide the Groq API key.

import os

import openai

client = openai.OpenAI(

base_url="https://api.groq.com/openai/v1",

api_key=os.environ.get("GROQ_API_KEY")

)

response = client.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[{"role": "user", "content": "Tell me a joke."}]

)

print(response.choices[0].message['content'])

2. Mistral AI

Mistral AI It also provides an OpenAI-compatible API, and here's how to use it:

import requests

url = "https://api.mistral.ai/v1/chat/completions"

headers = {

"Authorization": f"Bearer {os.environ.get('MISTRAL_API_KEY')}",

"Content-Type": "application/json"

}

data = {

"model": "mistral-7b",

"messages": [{"role": "user", "content": "What is the capital of France?"}]

}

response = requests.post(url, headers=headers, json=data)

print(response.json()['choices'][0]['message']['content'])

3. Hugging Face

Access tokens are required to use the Hugging Face API. Below is the sample code:

import requests

url = "https://api-inference.huggingface.co/models/gpt2"

headers = {

"Authorization": f"Bearer {os.environ.get('HUGGINGFACE_API_KEY')}"

}

data = {

"inputs": "Once upon a time in a land far away,"

}

response = requests.post(url, headers=headers, json=data)

print(response.json()[0]['generated_text'])

4. Google Vertex AI

The following code can be used to interact with Google Vertex AI:

from google.cloud import aiplatform

aiplatform.init(project='your-project-id', location='us-central1')

response = aiplatform.gapic.PredictionServiceClient().predict(

endpoint='projects/your-project-id/locations/us-central1/endpoints/your-endpoint-id',

instances=[{"content": "Who won the World Series in 2020?"}],

)

print(response.predictions)

5. Microsoft Azure OpenAI

The following is an example of code that calls Azure's OpenAI service:

import requests

url = f"https://your-resource-name.openai.azure.com/openai/deployments/your-deployment-name/chat/completions?api-version=2023-05-15"

headers = {

"Content-Type": "application/json",

"api-key": os.environ.get("AZURE_OPENAI_API_KEY")

}

data = {

"messages": [{"role": "user", "content": "What's the weather today?"}],

"model": "gpt-35-turbo"

}

response = requests.post(url, headers=headers, json=data)

print(response.json()['choices'][0]['message']['content'])

6. Anthropic

Using Anthropic's Claude Sample code for the model and its API is shown below:

import requests

url = "https://api.anthropic.com/v1/complete"

headers = {

"Authorization": f"Bearer {os.environ.get('ANTHROPIC_API_KEY')}",

"Content-Type": "application/json"

}

data = {

"model": "claude-v1",

"prompt": "Explain quantum physics in simple terms.",

}

response = requests.post(url, headers=headers, json=data)

print(response.json()['completion'])

The example shows how to effectively utilize lightweight models while achieving meaningful output in a text generation task.

Source:

https://www.coltsteele.com/tips/understanding-openai-s-temperature-parameter

https://community.make.com/t/what-is-the-difference-between-system-user-and-assistant-roles-in-chatgpt/36160

https://arize.com/blog-course/mastering-openai-api-tips-and-tricks/

https://learn.microsoft.com/ko-kr/Azure/ai-services/openai/reference

https://community.openai.com/t/the-system-role-how-it-influences-the-chat-behavior/87353

https://community.openai.com/t/understanding-role-management-in-openais-api-two-methods-compared/253289

https://platform.openai.com/docs/advanced-usage

https://platform.openai.com/docs/api-reference

https://console.groq.com/docs/openai

https://docs.jabref.org/ai/ai-providers-and-api-keys

https://www.reddit.com/r/LocalLLaMA/comments/16csz5n/best_openai_api_compatible_application_server/

https://docs.gptscript.ai/alternative-model-providers

https://towardsdatascience.com/how-to-build-an-openai-compatible-api-87c8edea2f06?gi=5537ceb80847

https://modelfusion.dev/integration/model-provider/openaicompatible/

https://docs.jabref.org/ai/ai-providers-and-api-keys

https://docs.gptscript.ai/alternative-model-providers

https://console.groq.com/docs/openai

Tip Project Primer II (Function Calls)

The following libraries, tools, and configurations are necessary for loading.

https://colab.research.google.com/github/adithya-s-k/AI-Engineering.academy/blob/main/PromptEngineering/function_calling.ipynb

Getting Started with Hint Engineering

The following libraries, tools, and configurations are necessary for loading.

https://colab.research.google.com/github/adithya-s-k/AI-Engineering.academy/blob/main/PromptEngineering/prompt_engineering.ipynb

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related articles

No comments...