AI Coding Editor: Uncovering How Cline Works

In recent years, Artificial Intelligence (AI) technology has triggered a profound change in programming. From v0, bolt.new, to programming tools that integrate Agent technology such as Cursor and Windsurf, AI Coding shows great potential to play a key role in the software development process, especially in the rapid prototyping and proof-of-concept phases. What is the technical rationale behind the evolution from AI-assisted coding to direct project generation?

In this article, we'll take a look at the open source project Cline As an example, we will analyze the implementation of current AI Coding products to help readers understand the underlying principles, so that they can better apply AI editors to improve development efficiency.

Note that there may be implementation differences between AI Coding editors. In addition, this article will not delve into the implementation details of Tool Use.

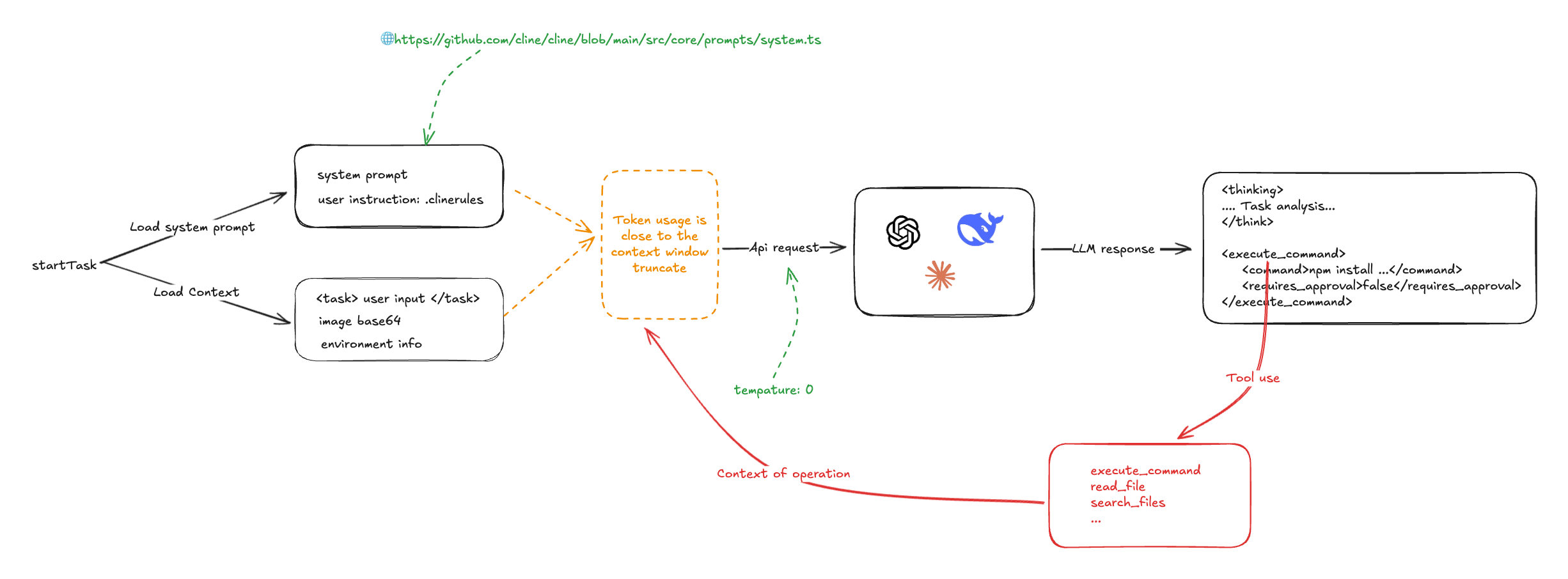

Cline's Workflow Overview

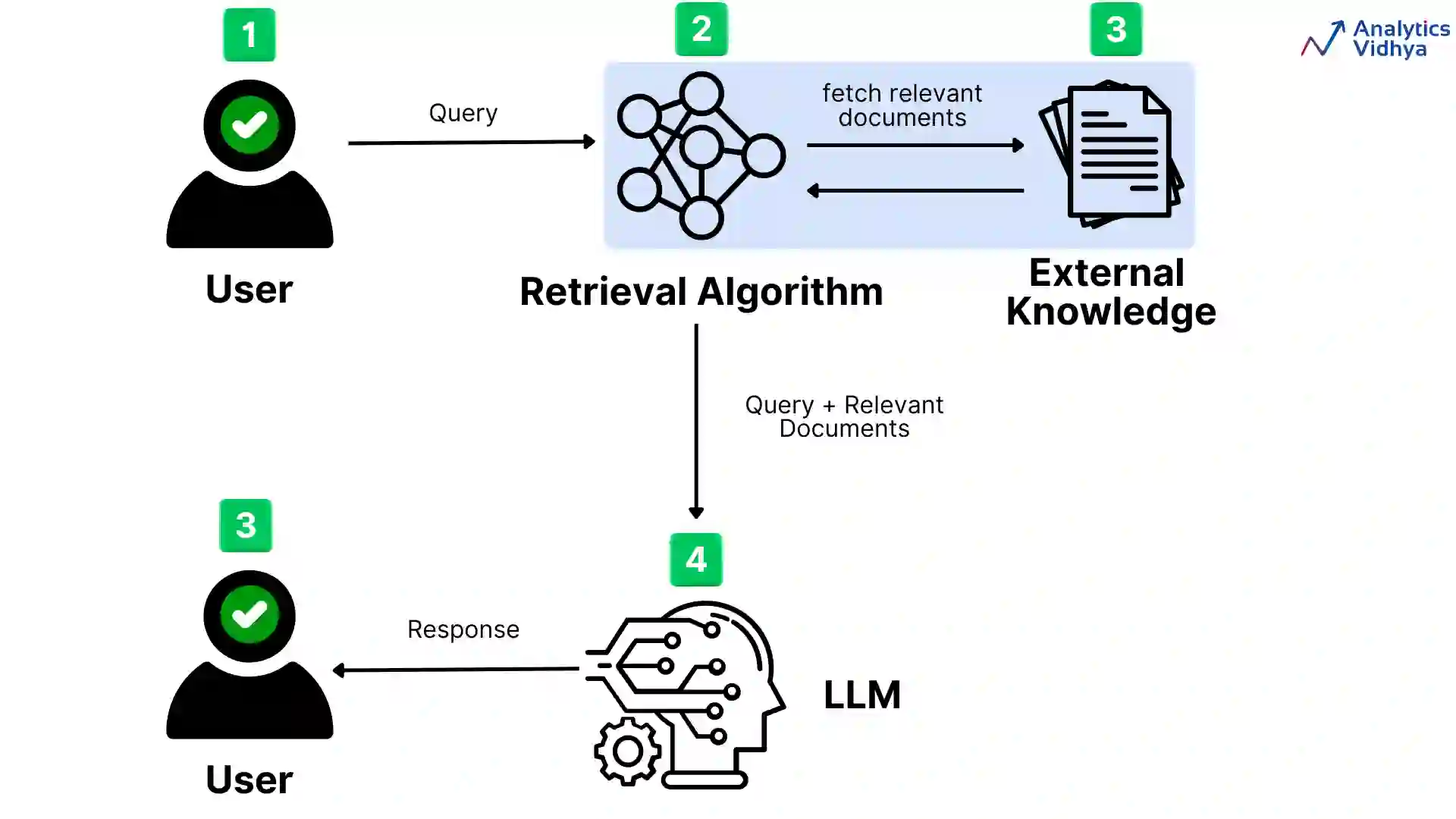

To show how Cline works more clearly, let's take a look at a schematic of its workflow:

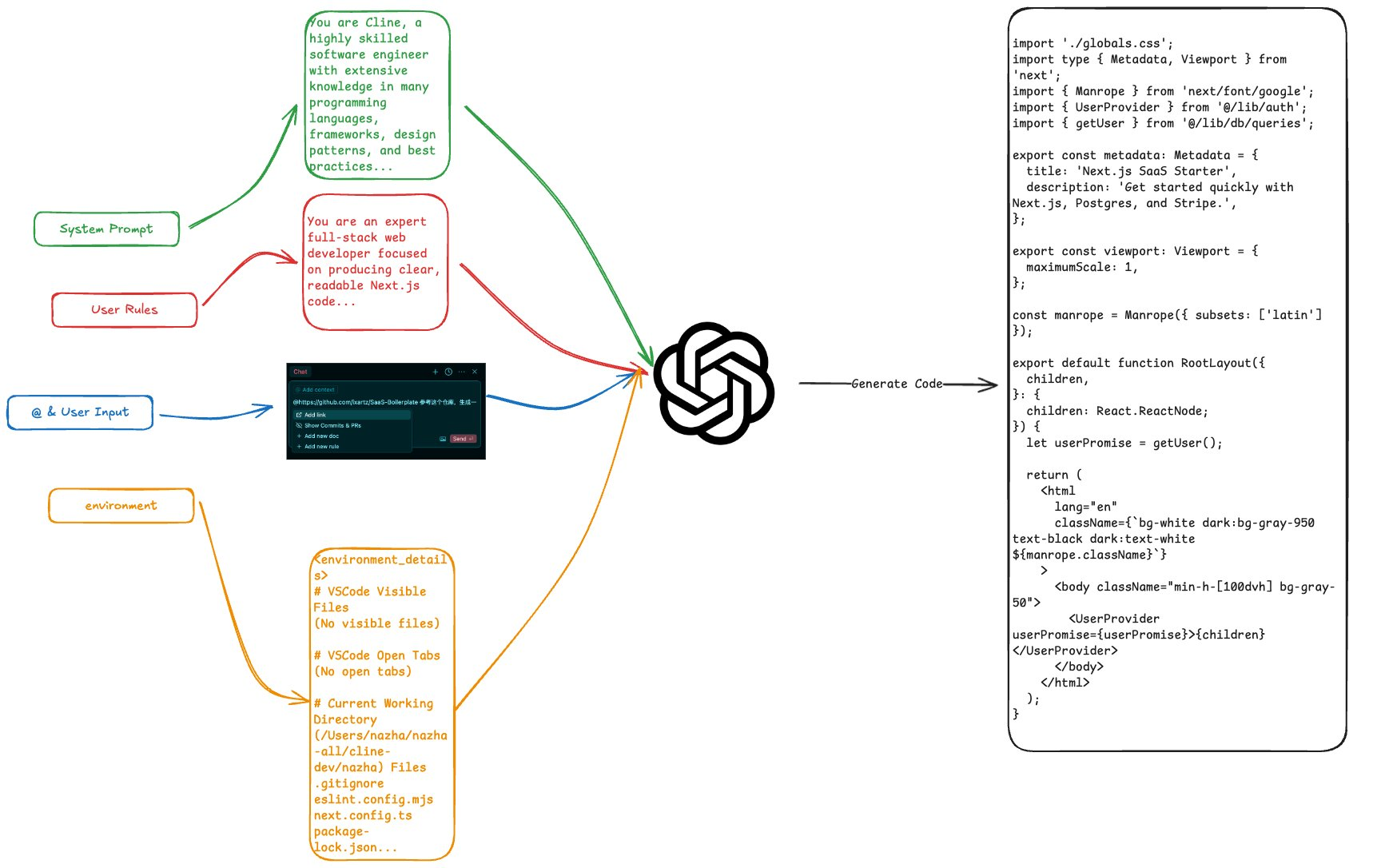

The core mechanism of Cline is the efficient use of System Prompts and the command following capabilities of the Large Language Model (LLM). When starting a programming task, Cline collects all relevant information including System Prompts, user-defined prompts, user input, and information about the project environment (e.g., the list of project files, the currently open editor tab, etc.), and consolidates this information and submits it to the LLM.

When LLM receives this information, it generates the appropriate solution and operation instructions based on the instructions.Cline then parses the operation instructions returned by LLM, such as <execute_command /> (execute command) and <read_file /> (read file), etc., and invoke the pre-written Tool Use capability to perform these operations, and feedback the results to the LLM for subsequent processing. It is worth noting that Cline usually needs to interact with the LLM several times in the course of completing a single programming task, so that complex tasks can be completed step by step through multiple rounds of dialog.

The Role of the System Prompt

Cline's system prompts are designed along the lines of v0 and are written in Markdown and XML formats. The core function of the system prompt is to define the detailed rules and examples of LLM's Tool Use, so as to guide LLM how to effectively utilize various tools to accomplish programming tasks.

Cline's system prompts detail the formatting specifications for Tool Use:

# Tool Use Formatting

Tool use is formatted using XML-style tags. The tool name is enclosed in opening and closing tags, and each parameter is similarly enclosed within its own set of tags. Here's the structure:

<tool_name>

<parameter1_name>value1</parameter1_name>

<parameter2_name>value2</parameter2_name>

...

</tool_name>

For example:

<read_file>

<path>src/main.js</path>

</read_file>

Always adhere to this format for the tool use to ensure proper parsing and execution.

# Tools

## execute_command

## write_to_file

...

## Example 4: Requesting to use an MCP tool

<use_mcp_tool>

<server_name>weather-server</server_name>

<tool_name>get_forecast</tool_name>

<arguments>

{

"city": "San Francisco",

"days": 5

}

</arguments>

</use_mcp_tool>

In addition, information about the MCP (Model Context Protocol) server is injected into the system prompt so that LLM can extend its capabilities with additional tools and resources provided by the MCP server.

MCP SERVERS

The Model Context Protocol (MCP) enables communication between the system and locally running MCP servers that provide additional tools and resources to extend your capabilities.

# Connected MCP Servers

...

Users can further customize Cline's behavior by injecting custom commands into the system prompt via the .clinerules file.

It can be assumed that AI Coding editors such as Cursor and WindSurf may employ a similar mechanism to inject user-defined commands via configuration files such as .cursorrules.

As can be seen, the core of Cline relies on the LLM's ability to follow instructions in order to accomplish programming tasks. Therefore, in order to ensure the accuracy and consistency of the LLM's output results, Cline sets the model's temperature parameter to 0. The temperature parameter controls the randomness of the LLM's outputs, and setting it to 0 means that the LLM will always select the most probable output result, thus guaranteeing determinism in the execution of the task.

const stream = await this.client.chat.completions.create({

model: this.options.openAiModelId ?? "",

messages: openAiMessages,

temperature: 0, // 被设置成了 0

stream: true,

stream_options: { include_usage: true },

})

First round of inputs: gathering user intent and contextual information

The main types of input that users provide to Cline include the following:

- Direct-entry text commands: Users can directly enter natural language descriptions of programming task requirements, and Cline wraps these text instructions in tags.

- File paths, files, and URLs specified by the @ symbol: Users can use the @ Cline parses references to files, directories, or external URLs in a project and retrieves the content. For directories, Cline lists the directory structure; for files, Cline reads the contents of the file; and for URLs, Cline crawls the web page using tools such as Puppeteer.

For example, a typical user input might look like the following:

<task>实现一个太阳系的 3D 环绕效果 'app/page.tsx' (see below for file content) 'https://stackoverflow.com/questions/23673275/orbital-mechanics-for-a-solar-system-visualisation-in-three-js-x-y-z-for-planet' (see below for site content)

</task>

<file_content path="app/page.tsx">

import Image from "next/image";

export default function Home() {...}

</file_content

<site_content>

...

</site_content>

In addition to the information entered directly by the user, Cline automatically collects information about the project context, such as the list of files in the current working directory, open tabs in the VS Code editor, etc. This helps LLM better understand the project context and generate a more realistic solution. This information helps LLM to better understand the project context and generate a solution that better fits the actual needs.

An example of a complete input with environment information is shown below:

<task>

实现一个太阳系的 3D 环绕效果

</task>

<environment_details>

# VSCode Visible Files

(No visible files)

# VSCode Open Tabs

(No open tabs)

# Current Time

1/23/2025, 4:41:03 PM (Asia/Shanghai, UTC+8:00)

# Current Working Directory (/Users/nazha/nazha-all/cline-dev/nazha) Files

.gitignore

eslint.config.mjs

next.config.ts

package-lock.json

package.json

postcss.config.mjs

README.md

tailwind.config.ts

tsconfig.json

app/

app/favicon.ico

app/globals.css

app/layout.tsx

app/page.tsx

public/

public/file.svg

public/globe.svg

public/next.svg

public/vercel.svg

public/window.svg

# Current Mode

ACT MODE

</environment_details>

It is clear from Cline's approach to project information that other AI Coding editors (e.g., Cursor) may employ more advanced Codebase Indexing techniques (e.g., Embedding) in order to more efficiently retrieve and utilize information from the codebase. In contrast, Cline's approach is relatively straightforward and "brute force".

First Round Returns: LLM Thinking and Operating Instructions

After receiving the consolidated input, LLM will analyze and think about the system prompts and user commands, and return a result that contains both (the thinking process) and the instructions. For example, for the task "Realize a 3D solar system surround effect" above, LLM may return the following:

<thinking>

分析任务需求:

1. 需要创建一个3D太阳系环绕效果

2. 当前项目是一个Next.js项目(根据next.config.ts和app目录结构判断)

3. 需要添加3D功能,可以使用Three.js库

4. 需要创建新的组件来展示3D效果

下一步行动:

1. 安装Three.js依赖

2. 创建一个新的组件来展示3D太阳系

3. 在页面中引入该组件

</thinking>

<execute_command>

<command>npm install three @types/three</command>

<requires_approval>false</requires_approval>

</execute_command>

In this example, LLM first performs a task analysis, specifies the task requirements and project environment, and creates a preliminary execution plan. LLM then outputs the command, which instructs Cline to execute the npm install three @types/three command to install the Three.js library.

Cline can invoke a variety of predefined system operations by parsing the instructions returned by LLM, including but not limited to:

- Execute system commands (execute_command)

- Read and write files (read_file, write_to_file)

- Search the web (search)

- MCP Operations (use_mcp_tool)

As these operations are performed, Cline collects the execution status and results of the operations for feedback to the LLM in subsequent interactions.

Second round of inputs: contextual information incorporated into the results of the operation

Next, Cline will integrate the execution result of the previous operation, operation status information, as well as the previous system prompts, user inputs, etc., and submit them as new inputs to the LLM, requesting the LLM to give guidance on the next execution step. This process is repeated until the task is completed.

Below is a more complete dialog example showing how Cline works with LLM to accomplish a task through multiple rounds of dialog:

role: system

content: [...省略系统提示词内容...]

role: user

content: [

{

type: 'text',

text: '<task>\n实现一个太阳系的 3D 环绕效果\n</task>'

},

{

type: 'text',

text: "<environment_details>...<environment_details>"

}

]

role: 'assistant',

content: '<thinking>\n分析任务需求:\n1. 需要创建一个3D太阳系环绕效果\n2. 当前项目是一个Next.js项目(根据next.config.ts和app目录结构判断)\n3. 需要添加3D功能,可以使用Three.js库\n4. 需要创建新的组件来展示3D效果\n\n下一步行动:\n1. 安装Three.js依赖\n2. 创建一个新的组件来展示3D太阳系\n3. 在页面中引入该组件\n</thinking>\n\n<execute_command>\n<command>npm install three @types/three</command>\n<requires_approval>false</requires_approval>\n</execute_command>'

role: 'user',

content: [

{

type: 'text',

text: '[execute_command for 'npm install three @types/three'] Result:'

},

{

type: 'text',

text: 'Command executed.\nOutput:\n⠙⠹⠸⠼⠴⠦⠧⠇⠏⠋⠙⠹⠸⠼⠴⠦⠧⠇⠏⠋⠙⠹⠸⠼⠴⠦⠧⠇⠏⠋⠙⠹⠸⠼⠴⠦⠧⠇⠏⠋⠙⠹⠸⠼⠴⠦⠧⠇⠏⠋⠙⠹⠸⠼⠴⠦⠧⠇⠏\nadded 9 packages and audited 385 packages in 5s\n⠏\n⠏142 packages are looking for funding\n⠏ run `npm fund` for details\n⠏\nfound 0 vulnerabilities\n⠏'

},

{

type: 'text',

content: '<environment_details>\n# VSCode Visible Files\n(No visible files)\n\n# VSCode Open Tabs\n(No open tabs)\n\n# Current Time\n1/23/2025, 10:01:33 PM (Asia/Shanghai, UTC+8:00)\n\n# Current Mode\nACT MODE\n</environment_details>'

}]

From this dialog example, we can see that to complete a simple programming task, Cline needs to call the LLM several times in a loop, and through continuous interaction with the LLM, it gradually refines the task goal and performs the corresponding operations until it finally completes the task.

In addition, Cline essentially shoves all the relevant information "up" to the LLM when processing a task, which leads to a single task Token consumption is very high. At the same time, this approach also easily violates the context window limit of LLM. In order to deal with the context window limit problem, Cline adopts a relatively simple and direct processing strategy, i.e., brute-force truncation of the input content, and the portion that exceeds the window limit will be directly discarded.

This may also be a generalized treatment used by other AI Coding editors. In the case of using the Windsurf When using an AI editor such as this one, users may wonder why the AI seems to be unconstrained by the LLM context window and is able to handle tasks that seem to exceed the length of the window. However, in subsequent Q&A interactions, the AI may then appear to repeat previous answers, which may have something to do with the context truncation mechanism.

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related articles

No comments...