Agent Service Toolkit: a complete toolkit for building LangGraph-based AI agents

General Introduction

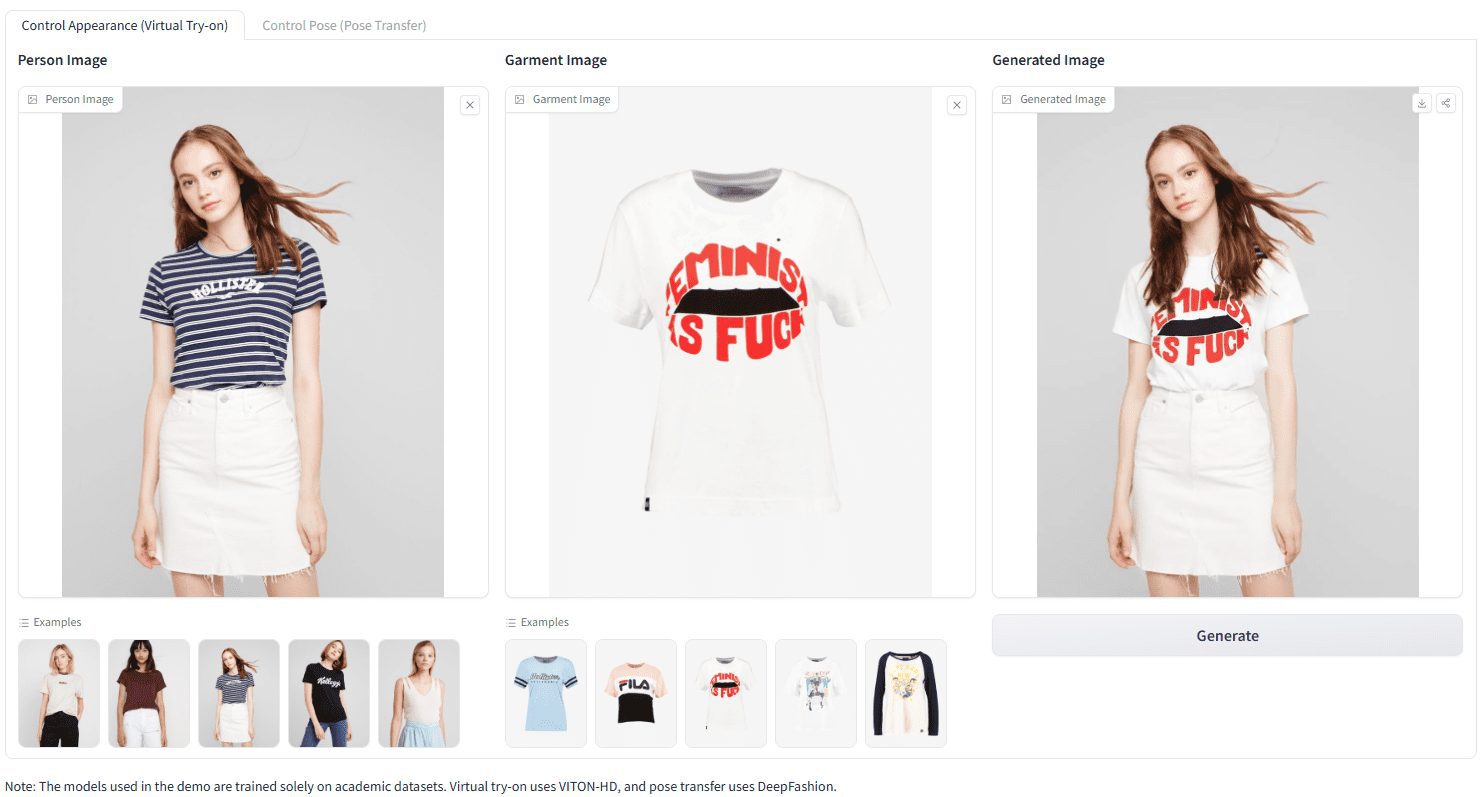

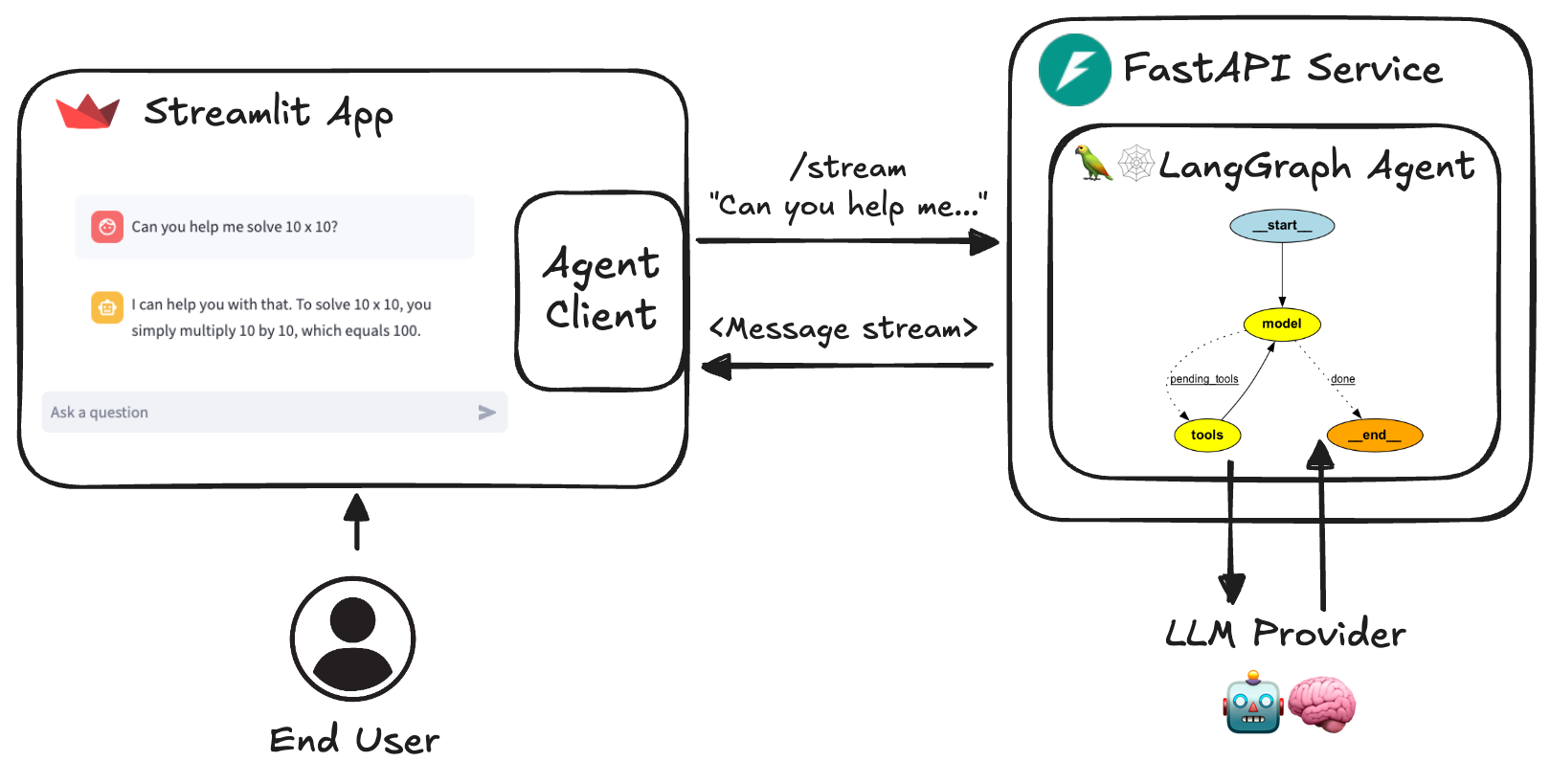

AI Agent Service Toolkit is a complete toolkit built on LangGraph, FastAPI, and Streamlit that helps developers quickly build and run AI agent services. It provides a flexible framework in which users can define their own agent behavior and interactions for a variety of application scenarios, whether chatbots, data-analysis tools, or other AI-based services. The toolkit is designed for ease of use and extensibility: the required functionality can be integrated through simple configuration and code changes.

Online demo: https://agent-service-toolkit.streamlit.app/

Agent Service Toolkit Architecture

Features

- LangGraph Agent: A customizable agent built with the LangGraph framework.

- FastAPI Service: Serves the agents over both streaming and non-streaming endpoints.

- Advanced Streaming: Supports both token-based and message-based streaming.

- Content Moderation: Implements LlamaGuard for content moderation (requires a Groq API key).

- Streamlit Interface: Provides a user-friendly chat interface for interacting with the agents.

- Multi-Agent Support: Run multiple agents in the service and invoke them via URL paths.

- Asynchronous Design: Uses async/await to handle concurrent requests efficiently.

- Feedback Mechanism: Includes a star-based feedback system integrated with LangSmith.

- Dynamic Metadata: The `/info` endpoint provides dynamically configured metadata about the service and the available agents and models.

- Docker Support: Includes Dockerfiles and a Docker Compose file for easy development and deployment.

- Testing: Includes comprehensive unit and integration tests.
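The asynchronous design in the list above relies on async/await: while one request waits on an LLM, the event loop can serve others. The sketch below illustrates that idea with only Python's standard `asyncio` module; `fake_agent_call` and `handle_concurrently` are hypothetical stand-ins, not toolkit code.

```python
import asyncio
import time
from typing import List


async def fake_agent_call(request_id: int, delay: float) -> str:
    """Hypothetical stand-in for an agent call that waits on an LLM API."""
    await asyncio.sleep(delay)  # yields to the event loop instead of blocking
    return f"response-{request_id}"


async def handle_concurrently(delays: List[float]) -> List[str]:
    """Run one simulated request per delay; gather() keeps results in input order."""
    return await asyncio.gather(*(fake_agent_call(i, d) for i, d in enumerate(delays)))


if __name__ == "__main__":
    start = time.perf_counter()
    results = asyncio.run(handle_concurrently([0.2, 0.2, 0.2]))
    elapsed = time.perf_counter() - start
    print(results)  # ['response-0', 'response-1', 'response-2']
    print(f"elapsed: {elapsed:.2f}s")  # roughly 0.2s, not the 0.6s a sequential loop would take
```

Three simulated 0.2-second calls finish in about the time of one, because each `await` hands control back to the event loop.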

Usage Guide

Installation process

- Run directly with Python:

  - Make sure you have at least one LLM API key:

    ```bash
    echo 'OPENAI_API_KEY=your_openai_api_key' >> .env
    ```

  - Install uv and sync the dependencies:

    ```bash
    pip install uv
    uv sync --frozen
    ```

  - Activate the virtual environment and run the service:

    ```bash
    source .venv/bin/activate
    python src/run_service.py
    ```

  - In another terminal, activate the virtual environment and run the Streamlit app:

    ```bash
    source .venv/bin/activate
    streamlit run src/streamlit_app.py
    ```

- Run with Docker:

  - Make sure you have at least one LLM API key:

    ```bash
    echo 'OPENAI_API_KEY=your_openai_api_key' >> .env
    ```

  - Start everything with Docker Compose:

    ```bash
    docker compose up
    ```

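Both installation paths require at least one LLM key in `.env`, so a quick check before starting the service can save a failed launch. The helper below is a hypothetical convenience script, not part of the toolkit. `OPENAI_API_KEY` comes from the steps above, while `ANTHROPIC_API_KEY` is only an illustrative second name and may not match the toolkit's actual settings.

```python
from pathlib import Path
from typing import Set

# OPENAI_API_KEY is taken from the steps above; ANTHROPIC_API_KEY is only an
# illustrative extra name and may not match the toolkit's actual settings.
LLM_KEY_NAMES = {"OPENAI_API_KEY", "ANTHROPIC_API_KEY"}


def configured_llm_keys(env_path: str = ".env") -> Set[str]:
    """Return the known LLM key names that have a non-empty value in a .env file."""
    path = Path(env_path)
    if not path.is_file():
        return set()
    found: Set[str] = set()
    for raw in path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, comments, and malformed entries
        name, value = line.split("=", 1)
        value = value.strip().strip("'\"")  # tolerate quoted values
        if name.strip() in LLM_KEY_NAMES and value:
            found.add(name.strip())
    return found


if __name__ == "__main__":
    keys = configured_llm_keys()
    if keys:
        print("LLM keys found:", ", ".join(sorted(keys)))
    else:
        print("No LLM API key found in .env; add one before starting the service.")
```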

Workflow by Feature

- LangGraph Agent:

  - Define agents: define agents with different capabilities in the `src/agents/` directory.
  - Configure agents: use the `langgraph.json` file to configure the agents' behavior and settings.

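LangGraph models an agent as a graph of nodes that read and update a shared state, with edges deciding which node runs next. The toy sketch below imitates that idea in plain Python so it runs without dependencies. `ToyGraph`, `plan`, `respond`, and `build_demo_graph` are hypothetical names; real agents in `src/agents/` would use LangGraph's own `StateGraph` (with methods such as `add_node`, `add_edge`, and `compile`) rather than this class.

```python
from typing import Callable, Dict, List, Optional

State = Dict[str, List[str]]
Node = Callable[[State], State]
END = "__end__"  # sentinel node name meaning "stop"


class ToyGraph:
    """Hypothetical, minimal state graph: named nodes connected by fixed edges."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.edges: Dict[str, str] = {}
        self.entry: Optional[str] = None

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: str) -> None:
        self.edges[src] = dst

    def set_entry(self, name: str) -> None:
        self.entry = name

    def run(self, state: State) -> State:
        current = self.entry
        while current is not None and current != END:
            update = self.nodes[current](state)
            # Merge the node's partial update by appending its messages
            state = {"messages": state["messages"] + update.get("messages", [])}
            current = self.edges.get(current, END)  # no outgoing edge: stop
        return state


def plan(state: State) -> State:
    return {"messages": ["plan: look up the answer"]}


def respond(state: State) -> State:
    return {"messages": [f"answer after {len(state['messages'])} messages"]}


def build_demo_graph() -> ToyGraph:
    graph = ToyGraph()
    graph.add_node("plan", plan)
    graph.add_node("respond", respond)
    graph.set_entry("plan")
    graph.add_edge("plan", "respond")
    graph.add_edge("respond", END)
    return graph


if __name__ == "__main__":
    print(build_demo_graph().run({"messages": ["user: hi"]})["messages"])
    # ['user: hi', 'plan: look up the answer', 'answer after 2 messages']
```

Each node returns only its own update, and the runner merges it into the shared state; LangGraph applies the same pattern with configurable reducers.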

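- FastAPI Service:

  - Start the service: the FastAPI service is defined in `src/service/service.py`; start it with `python src/run_service.py`, as in the installation steps.
  - Access the endpoints: call the agent service through the `/stream` (streaming) endpoint or the non-streaming endpoint.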

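On the client side, a streaming response has to be split into partial tokens and complete messages. The parser below is a sketch that assumes the `/stream` endpoint emits Server-Sent Events lines of the form `data: {"type": "token", "content": ...}` or `data: {"type": "message", "content": {...}}`, ending with `data: [DONE]`; check the service's schema before relying on this format. `parse_agent_stream` is a hypothetical helper name.

```python
import json
from typing import Iterable, List, Tuple


def parse_agent_stream(lines: Iterable[str]) -> Tuple[str, List[dict]]:
    """Split SSE lines into the concatenated token text and the complete messages.

    Assumed event format: 'data: {"type": "token" | "message", "content": ...}',
    with 'data: [DONE]' marking the end of the stream.
    """
    tokens: List[str] = []
    messages: List[dict] = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separators and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream marker
        event = json.loads(payload)
        if event.get("type") == "token":
            tokens.append(event["content"])  # partial text, useful for live display
        elif event.get("type") == "message":
            messages.append(event["content"])  # a complete message object
    return "".join(tokens), messages


if __name__ == "__main__":
    sample = [
        'data: {"type": "token", "content": "Hel"}',
        "",
        'data: {"type": "token", "content": "lo"}',
        'data: {"type": "message", "content": {"type": "ai", "content": "Hello"}}',
        "data: [DONE]",
    ]
    text, msgs = parse_agent_stream(sample)
    print(text)  # Hello
    print(msgs)  # [{'type': 'ai', 'content': 'Hello'}]
```

In practice the input would be the decoded text lines of an HTTP streaming response; the sample list stands in for that here.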

- Streamlit Interface:

  - Launch the interface: run `streamlit run src/streamlit_app.py` to start the Streamlit application.
  - Interact: chat with the agent through the user-friendly chat interface.


- Content Moderation:

  - Configure LlamaGuard: add a Groq API key to the `.env` file to enable content moderation.

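Once the Groq key is configured, the service can send messages to a LlamaGuard model served by Groq and act on its verdict. The sketch below covers only the parsing step and assumes the Llama Guard 3 output format (a first line reading `safe` or `unsafe`, then a line of comma-separated category codes such as `S1`). `ModerationResult` and `parse_llamaguard_output` are hypothetical names, and the toolkit's own moderation code may parse the reply differently.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ModerationResult:
    safe: bool
    categories: List[str] = field(default_factory=list)  # e.g. ["S1", "S10"]


def parse_llamaguard_output(text: str) -> ModerationResult:
    """Parse Llama Guard 3 style output: 'safe', or 'unsafe' followed by
    a line of comma-separated hazard category codes."""
    lines = [ln.strip() for ln in text.strip().splitlines() if ln.strip()]
    if not lines:
        raise ValueError("empty moderation output")
    verdict = lines[0].lower()
    if verdict == "safe":
        return ModerationResult(safe=True)
    if verdict == "unsafe":
        codes = lines[1].split(",") if len(lines) > 1 else []
        return ModerationResult(safe=False, categories=[c.strip() for c in codes if c.strip()])
    raise ValueError(f"unexpected verdict: {lines[0]!r}")


if __name__ == "__main__":
    print(parse_llamaguard_output("unsafe\nS1,S10"))
    # ModerationResult(safe=False, categories=['S1', 'S10'])
```

A caller could then block or replace the agent's reply whenever `safe` is `False`.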

- Multi-Agent Support:

  - Configure multiple agents: define several agents in the `src/agents/` directory and invoke each one through its own URL path.

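Invoking agents "via URL paths" amounts to mapping a path segment to an entry in an agent registry. The sketch below shows that routing idea in plain Python. The agent names, the `/<agent_id>/<action>` path shape, the default-agent fallback, and the action names `invoke` and `stream` are all assumptions made for illustration, and `route`/`dispatch` are hypothetical helpers rather than the toolkit's API.

```python
from typing import Callable, Dict, Tuple

# Hypothetical agents; in the toolkit each would be a LangGraph agent from src/agents/.
AGENTS: Dict[str, Callable[[str], str]] = {
    "chatbot": lambda msg: f"chatbot: {msg}",
    "research-assistant": lambda msg: f"researching: {msg}",
}
DEFAULT_AGENT = "chatbot"       # assumed fallback when the path names no agent
ACTIONS = {"invoke", "stream"}  # assumed action names, for illustration only


def route(path: str) -> Tuple[str, str]:
    """Map '/<agent_id>/<action>' or '/<action>' to (agent_id, action)."""
    parts = [p for p in path.split("/") if p]
    if len(parts) == 1:
        agent_id, action = DEFAULT_AGENT, parts[0]
    elif len(parts) == 2:
        agent_id, action = parts
    else:
        raise ValueError(f"unsupported path: {path!r}")
    if action not in ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    return agent_id, action


def dispatch(path: str, message: str) -> str:
    agent_id, _action = route(path)  # a real service would stream for "stream"
    agent = AGENTS.get(agent_id)
    if agent is None:
        raise KeyError(f"unknown agent: {agent_id}")
    return agent(message)


if __name__ == "__main__":
    print(dispatch("/research-assistant/invoke", "LangGraph"))  # researching: LangGraph
    print(dispatch("/invoke", "hi"))  # chatbot: hi
```

Keeping agents in one dictionary means adding an agent is a single registry entry, and the URL scheme never has to change.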

- Feedback Mechanism:

  - Integrated feedback system: a star-based feedback system, integrated with LangSmith, collects user feedback that can be used to improve the service.
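A star rating has to become a numeric score before it can be stored as feedback. The sketch below assumes a 1 to 5 star scale mapped linearly onto 0.0 to 1.0 and builds a record whose `run_id`, `key`, and `score` fields echo the parameters of LangSmith's `Client.create_feedback`. The key name `human-feedback-stars` and both helper functions are hypothetical, and the toolkit's own mapping may differ.

```python
import uuid
from typing import Any, Dict


def stars_to_score(stars: int, max_stars: int = 5) -> float:
    """Map a 1..max_stars rating linearly onto a 0.0..1.0 score."""
    if not 1 <= stars <= max_stars:
        raise ValueError(f"stars must be between 1 and {max_stars}")
    return (stars - 1) / (max_stars - 1)


def build_feedback(run_id: str, stars: int) -> Dict[str, Any]:
    """Build a hypothetical feedback record for one agent run."""
    uuid.UUID(run_id)  # raises ValueError if run_id is not a valid UUID
    return {"run_id": run_id, "key": "human-feedback-stars", "score": stars_to_score(stars)}


if __name__ == "__main__":
    print(build_feedback(str(uuid.uuid4()), 4)["score"])  # 0.75
```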

- Dynamic Metadata:

  - Access metadata: query the `/info` endpoint for dynamically configured metadata about the service and the available agents and models.

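A client, such as a chat UI that offers an agent picker, can read the `/info` response to discover which agents and models are available. The sketch below assumes a JSON body with an `agents` list of objects carrying a `key`, a `models` list, and an optional `default_agent`; it also assumes the service listens on `http://localhost:8080` and answers a plain GET. These field names, the URL, and the helpers `parse_info` and `fetch_info` are assumptions, so compare them with the actual response before use.

```python
import json
import urllib.request
from typing import List, NamedTuple


class ServiceInfo(NamedTuple):
    agent_keys: List[str]
    models: List[str]
    default_agent: str


def parse_info(body: str) -> ServiceInfo:
    """Extract agent keys, models, and the default agent from an /info JSON body."""
    data = json.loads(body)
    keys = [agent["key"] for agent in data.get("agents", [])]
    default = data.get("default_agent") or (keys[0] if keys else "")
    if default and default not in keys:
        raise ValueError(f"default agent {default!r} is not among {keys}")
    return ServiceInfo(keys, list(data.get("models", [])), default)


def fetch_info(base_url: str = "http://localhost:8080") -> ServiceInfo:
    """Fetch and parse /info from a running service (the URL is an assumption)."""
    with urllib.request.urlopen(f"{base_url}/info", timeout=5) as resp:
        return parse_info(resp.read().decode("utf-8"))


if __name__ == "__main__":
    sample = '{"agents": [{"key": "chatbot", "description": "..."}], "models": ["example-model"]}'
    print(parse_info(sample))
    # ServiceInfo(agent_keys=['chatbot'], models=['example-model'], default_agent='chatbot')
```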

- Testing:

  - Run the tests: run the unit and integration tests in the `tests/` directory to verify the stability and reliability of the service.

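As a hedged illustration of what a unit test in a directory like `tests/` looks like, the sketch below tests a hypothetical helper, `normalize_agent_id`, with Python's built-in `unittest`. Neither the helper nor the test comes from the toolkit, which may use a different runner such as pytest.

```python
import unittest


def normalize_agent_id(raw: str) -> str:
    """Hypothetical helper under test: trim whitespace and slashes, then lowercase."""
    cleaned = raw.strip().strip("/").lower()
    if not cleaned:
        raise ValueError("agent id must not be empty")
    return cleaned


class NormalizeAgentIdTest(unittest.TestCase):
    def test_strips_slashes_and_case(self) -> None:
        self.assertEqual(normalize_agent_id(" /Chatbot/ "), "chatbot")

    def test_rejects_empty(self) -> None:
        with self.assertRaises(ValueError):
            normalize_agent_id(" / ")
```

Saved as a test module, this runs with `python -m unittest`, and pytest also collects `unittest.TestCase` subclasses.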

© Copyright Notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
