Agent AI: Exploring the Frontier World of Multimodal Interaction [Fei-Fei Li - Classic Must Reads]

Agent AI: Surveying the Horizons of Multimodal Interaction.

Original: https://ar5iv.labs.arxiv.org/html/2401.03568

summaries

Multimodal AI systems are likely to be ubiquitous in our daily lives. One promising approach to making these systems more interactive is to implement them as intelligences in physical and virtual environments. Currently, systems utilize existing base models as the basic building blocks for creating embodied intelligences. Embedding intelligences into such environments contributes to the model's ability to process and interpret visual and contextual data, which is critical for creating more complex and context-aware AI systems. For example, a system that is able to sense user behavior, human behavior, environmental objects, audio expressions, and the collective emotion of a scene can be used to inform and guide the response of an intelligent body in a given environment. In order to accelerate the study of intelligentsia-based multimodal intelligence, we define "Agent AI" as a class of interacting systems that can perceive visual stimuli, linguistic inputs, and other environmentally-based data, and can produce meaningful embodied behaviors. In particular, we explore systems that aim to improve the prediction of intelligences based on the next embodied behavior by integrating external knowledge, multisensory inputs, and human feedback. We argue that the illusion of large base models and their tendency to produce environmentally incorrect outputs can also be mitigated by developing intelligent body AI systems in grounded environments. The emerging field of "Agent AI" encompasses the broader embodied and intelligent body aspects of multimodal interaction. In addition to intelligences acting and interacting in the physical world, we envision a future in which people can easily create any virtual reality or simulation scenario and interact with intelligences embedded in the virtual environment.

![Agent AI: 探索多模态交互的边界-1 Agent AI: 探索多模态交互的前沿世界[李飞飞-经典必读]](https://aisharenet.com/wp-content/uploads/2025/01/6dbf9ac2da09ee1.png)

Figure 1: Overview of an Agent AI system that can perceive and act in a variety of domains and applications.Agent AI is emerging as a promising pathway to General Artificial Intelligence (AGI).Agent AI training has demonstrated the ability to perform multimodal understanding in the physical world. It provides a framework for reality-independent training by utilizing generative AI and multiple independent data sources. When trained on cross-reality data, large base models trained for intelligences and action-related tasks can be applied to both physical and virtual worlds. We show a general overview of an Agent AI system that can perceive and act in many different domains and applications, potentially serving as a pathway to AGI using the intelligent body paradigm.

catalogs

- 1 introductory

- 1.1 locomotive

- 1.2 contexts

- 1.3 summarize

- 2 Agent AI Integration

- 2.1 Infinite AI Intelligence

- 2.2 Agent AI using large-scale base models

- 2.2.1 figment of one's imagination

- 2.2.2 Bias and inclusiveness

- 2.2.3 Data privacy and use

- 2.2.4 Interpretability and descriptiveness

- 2.2.5 Reasoning Enhancement

- 2.2.6 supervisory

- 2.3 Agent AI for emergent capabilities

- 3 Agent AI Paradigm

- 3.1 Large Language Modeling and Visual Language Modeling

- 3.2 Intelligent Body Transformer Definition

- 3.3 Intelligent Body Transformer Creation

- 4 Agent AI Learning

- 4.1 Strategies and mechanisms

- 4.1.1 Reinforcement Learning (RL)

- 4.1.2 Imitation Learning (IL)

- 4.1.3 Traditional RGB

- 4.1.4 Situational learning

- 4.1.5 Optimization in Intelligent Body Systems

- 4.2 Intelligent body systems (zero and few sample levels)

- 4.2.1 Intelligent Body Module

- 4.2.2 Intelligent Body Infrastructure

- 4.3 Intelligent body base model (pre-training and fine-tuning levels)

- 4.1 Strategies and mechanisms

- 5 Agent AI Classification

- 5.1 General Intelligence Domain

- 5.2 embodied intelligence

- 5.2.1 mobile intelligence

- 5.2.2 interacting intelligence

- 5.3 Simulation and Environmental Intelligence

- 5.4 generative intelligence

- 5.4.1 AR/VR/Mixed Reality Intelligent Body

- 5.5 Intellectual and Logical Reasoning Intelligence

- 5.5.1 Intellectual Intelligence Unit (KIU)

- 5.5.2 logical intelligence

- 5.5.3 Intelligent bodies for emotional reasoning

- 5.5.4 Neurosymbolic Intelligence Unit (NSI)

- 5.6 Large Language Modeling and Visual Language Modeling Intelligentsia

- 6 Agent AI application tasks

- 6.1 Intelligent bodies for gaming

- 6.1.1 NPC Behavior

- 6.1.2 Human-NPC Interaction

- 6.1.3 Intelligent body-based game analysis

- 6.1.4 For game scene compositing

- 6.1.5 Experiments and results

- 6.2 Robotics

- 6.2.1 Large language model/visual language model intelligences for robotics.

- 6.2.2 Experiments and results.

- 6.3 health care

- 6.3.1 Current health care capacity

- 6.4 multimodal intelligence

- 6.4.1 Image-Language Understanding and Generation

- 6.4.2 Video and language comprehension and generation

- 6.4.3 Experiments and results

- 6.5 Video-Language Experiment

- 6.6 Intelligentsia for Natural Language Processing

- 6.6.1 macrolanguage model intelligence

- 6.6.2 Universal Large Language Model Intelligence (ULM)

- 6.6.3 Command-Following Large Language Model Intelligentsia

- 6.6.4 Experiments and results

- 6.1 Intelligent bodies for gaming

- 7 Agent AI Across Modalities, Domains, and Realities

- 7.1 Intelligentsia for cross-modal understanding

- 7.2 Intelligentsia for cross-domain understanding

- 7.3 Interactive Intelligentsia for Cross-Modal and Cross-Reality

- 7.4 Migration from simulation to reality

- 8 Continuous and self-improvement of Agent AI

- 8.1 Data based on human interaction

- 8.2 Data generated by the base model

- 9 Smartbody datasets and leaderboards

- 9.1 The "CuisineWorld" dataset for multi-intelligence games

- 9.1.1 standard of reference

- 9.1.2 mandates

- 9.1.3 Indicators and rubrics

- 9.1.4 valuation

- 9.2 Audio-video-language pre-training dataset.

- 9.1 The "CuisineWorld" dataset for multi-intelligence games

- 10 Broader impact statement

- 11 ethical consideration

- 12 Diversity Statement

- A GPT-4V Intelligent Body Alert Details

- B GPT-4V for Bleeding Edge

- C GPT-4V for Microsoft Flight Simulator

- D GPT-4V for Assassin's Creed Odyssey

- E GPT-4V for GEARS of WAR 4

- F GPT-4V for Starfield

1 Introduction

1.1 Motivation

Historically, AI systems were defined at the Dartmouth Conference in 1956 as "artificial life forms" capable of gathering information from the environment and interacting with it in a useful way. Inspired by this definition, Minsky's group at MIT constructed a robotic system in 1970 called the "Replica Demonstration", which observed a "block world" scenario and successfully reconstructed the observed polyhedral block structure. The system included observation, planning, and manipulation modules, revealing that each subproblem was challenging and required further research. The field of AI is fragmented into specialized subfields that have made great strides in solving these and other problems, but oversimplification obscures the overall goals of AI research.

In order to move beyond the status quo, it is necessary to return to the foundations of AI driven by Aristotelian holism. Fortunately, the recent revolutions in Large Language Modeling (LLM/Large Language Model) and Visual Language Modeling (VLM/Visual Language Model) have made it possible to create new types of AI intelligences that conform to holistic ideals. Seizing this opportunity, this paper explores models that integrate linguistic abilities, visual cognition, contextual memory, intuitive reasoning, and adaptability. It explores the potential for accomplishing this holistic synthesis using large language models and visual language models. In our exploration, we also revisit the design of systems based on Aristotle's "purposive cause," the teleological "reason for the existence of a system," which may have been overlooked in previous AI developments.

The renaissance of natural language processing and computer vision has been catalyzed with the emergence of powerful pre-trained big language models and visual language models. Big language models now demonstrate an amazing ability to decipher the nuances of real-world linguistic data, often with capabilities that match or even surpass human expertise OpenAI (2023). Recently, researchers have shown that big language models can be extended to act in a variety of environments asintelligent bodythat perform complex actions and tasks when paired with domain-specific knowledge and modules Xi et al. (2023). These scenarios are characterized by complex reasoning, understanding of the intelligences' roles and their environments, and multi-step planning, testing the ability of intelligences to make highly nuanced and complex decisions within the constraints of their environment Wu et al. (2023); Meta Fundamental AI Research Diplomacy Team et al. (2022) Meta Fundamental AI Research (FAIR) Diplomacy Team, Bakhtin, Brown, Dinan, Farina, Flaherty, Fried, Goff, Gray, Hu, et al. (FAIR).

Building on these initial efforts, the AI community is on the cusp of a major paradigm shift away from the creation of AI models for passive, structured tasks, and towards models that can hypothesize the role of dynamic, intelligent bodies in diverse and complex environments. In this context, this paper investigates the great potential of using large language models and visual language models as intelligentsia, emphasizing models that combine linguistic competence, visual cognition, contextual memory, intuitive reasoning, and adaptability. Utilizing large language models and visual language models as intelligibles, especially in domains such as gaming, robotics, and healthcare, not only provides a rigorous platform for evaluating state-of-the-art AI systems, but also foreshadows the transformative impact that intelligibles-centered AI will have on society and industry. When fully utilized, intelligent body models can redefine the human experience and raise operational standards. The potential for full automation from these models heralds a dramatic shift in industry and socioeconomic dynamics. These advances will be intertwined with multifaceted leaderboards, not only technologically but also ethically, as we will elaborate in Section 11. We delve into the overlapping domains of these subfields of Intelligent Body AI and illustrate their interconnectedness in Figure 1.

1.2 Background

We will now present relevant research papers supporting the concept, theoretical background, and modern implementations of Artificial Intelligence for Intelligent Bodies.

Large-scale base models.

Large language models and visual language models have been driving efforts to develop general-purpose intelligent machines (Bubeck et al., 2023; Mirchandani et al., 2023). Although they are trained using large text corpora, their superior problem-solving capabilities are not limited to the canonical language processing domain. Large language models have the potential to handle complex tasks previously thought to be the preserve of human experts or domain-specific algorithms, from mathematical reasoning (Imani et al., 2023; Wei et al., 2022; Zhu et al., 2022) to answering specialized legal questions (Blair-Stanek et al., 2023; Choi et al., 2023; Nay, 2022). 2023; Nay, 2022). Recent research has shown that it is possible to use large language models to generate complex plans for robotics and gaming AIs (Liang et al., 2022; Wang et al., 2023a, b; Yao et al., 2023a; Huang et al., 2023a), which marks an important milestone in the use of large language models as general-purpose intelligent intelligences.

Embodied AI.

Some work has utilized large language models to perform task planning (Huang et al., 2022a; Wang et al., 2023b; Yao et al., 2023a; Li et al., 2023a), in particular the World Wide Web-scaled domain knowledge of large language models and the emergent zero-sample embodied capabilities to perform complex task planning and reasoning. Recent robotics research has also utilized large language models to perform task planning (Ahn et al., 2022a; Huang et al., 2022b; Liang et al., 2022) by decomposing natural language commands into a series of sub-tasks (either in natural language form or in Python code form), which are then executed using a low-level controller. In addition, they incorporate environmental feedback to improve task performance (Huang et al., 2022b), (Liang et al., 2022), (Wang et al., 2023a), and (Ikeuchi et al., 2023).

Interactive learning:

AI intelligences designed for interactive learning operate using a combination of machine learning techniques and user interaction. Initially, the AI intelligences are trained on a large dataset. This dataset contains various types of information, depending on the intended function of the intelligence. For example, an AI designed for a language task would be trained on a large corpus of text data. Training involves the use of machine learning algorithms, which may include deep learning models (e.g., neural networks). These training models enable the AI to recognize patterns, make predictions, and generate responses based on the data on which it was trained. AI intelligences can also learn from real-time interactions with users. This interactive learning can occur in a number of ways: 1) Feedback-based learning: the AI adjusts its responses based on direct feedback from the user Li et al. (2023b); Yu et al. (2023a); Parakh et al. (2023); Zha et al. (2023); Wake et al. (2023a, b, c). For example, if the user corrects the AI's response, the AI can use this information to improve future responses Zha et al. (2023); Liu et al. (2023a). 2) Observational Learning: the AI observes user interactions and learns implicitly. For example, if a user frequently asks similar questions or interacts with the AI in a particular way, the AI may adapt its responses to better fit these patterns. It allows AI intelligences to understand and process human language, multimodal settings, interpret cross-reality situations and generate responses from human users. Over time, the performance of the AI intelligences usually continues to improve through more user interaction and feedback. This process is usually overseen by human operators or developers who ensure that the AI is learning appropriately and is not developing biases or incorrect patterns.

1.3 Overview

Multimodal Agent AI (MAA/Multimodal Agent AI) is a set of systems that generate effective actions in a given environment based on the understanding of multimodal sensory inputs. With the emergence of the Large Language Model (LLM/Large Language Model) and the Visual Language Model (VLM/Visual Language Model), a number of multimodal agent AI systems have been proposed in a variety of fields ranging from basic research to applications. While these research areas are rapidly evolving through integration with traditional techniques in each domain (e.g., visual questioning and visual language navigation), they share common interests such as data collection, benchmarking, and ethical perspectives. In this paper, we focus on some representative research areas of AI for multimodal intelligences, namely multimodality, gaming (VR/AR/MR), robotics, and healthcare, and our goal is to provide comprehensive knowledge about the common concerns discussed in these areas. Thus, we want to learn the basics of artificial intelligence for multimodal intelligences and gain insights to further advance their research. Specific learning outcomes include:

- An overview of Artificial Intelligence for Multimodal Intelligentsia: an in-depth look at its principles and role in contemporary applications, providing researchers with a comprehensive grasp of its importance and uses.

- METHODOLOGY: Case studies from gaming, robotics, and healthcare detail how macrolanguage modeling and visual language modeling can enhance the artificial intelligence of multimodal intelligences.

- Performance evaluation: a guide to evaluating multimodal intelligences AI using relevant datasets, focusing on their effectiveness and generalization capabilities.

- Ethical Considerations: a discussion of the social implications and ethical rankings of deploying intelligent body AI, highlighting responsible development practices.

- Emerging Trends and Future Charts: categorizes the latest developments in each field and discusses future directions.

Computer-based action and generalist intelligences (GA/Generalist Agent) are useful for many tasks. In order for a Generalist Intelligent Body to be truly valuable to its users, it can interact naturally and can generalize to a wide range of contexts and modalities. Our goal is to foster a vibrant research ecosystem within the Intelligent Body AI community and create a shared sense of identity and purpose. Multimodal intelligent body AI has the potential to be applied to a wide range of contexts and modalities, including input from humans. Therefore, we believe that this field of intelligent body AI can attract a wide variety of researchers, thereby fostering a dynamic intelligent body AI community and shared goals. Led by renowned experts from academia and industry, we expect that this paper will be an interactive and enriching experience, including smart body tutorials, case studies, task sessions, and experimental discussions, ensuring a comprehensive and engaging learning experience for all researchers.

The purpose of this paper is to provide general and comprehensive knowledge about current research in the field of Artificial Intelligence for Intelligentsia. To this end, the rest of the paper is organized as follows. Section 2 outlines how intelligent body AI can benefit by integrating with relevant emerging technologies, in particular large-scale base models. Section 3 describes the new paradigm and framework we propose for training intelligent body AI. Section 4 provides an overview of widely used methods for training intelligent body AI. Section 5 categorizes and discusses various types of intelligibles. Section 6 describes applications of intelligent-body AI in gaming, robotics, and healthcare. Section 7 explores the efforts of the research community to develop a generalized intelligent body AI that can be applied to a variety of modalities, domains, and bridge the simulation-to-reality gap. Section 8 discusses the potential of an intelligent body AI that not only relies on pre-trained base models, but also continuously learns and improves itself by utilizing interactions with the environment and the user. Section 9 describes our new dataset designed for training multimodal intelligent body AI. Section 11 discusses the hot topic of AI intelligences, limitations, and ethical considerations of the social implications of our paper.

2 Intelligent Body Artificial Intelligence Integration

As suggested in previous studies, the underlying models based on macrolanguage models and visual language models still exhibit limited performance in the field of embodied AI, especially in understanding, generating, editing, and interacting in unseen environments or scenes Huang et al. (2023a); Zeng et al. (2023). As a result, these limitations lead to suboptimal output from AI intelligences. Current approaches to intelligencercentric AI modeling focus on directly accessible and well-defined data (e.g., textual or string representations of the state of the world) and typically use domain- and environment-independent patterns learned from their large-scale pre-training to predict action outputs for each environment Xi et al. (2023); Wang et al. (2023c); Gong et al. (2023a); Wu et al. (2023). In (Huang et al., 2023a), we investigate the task of knowledge-guided collaboration and interactive scene generation by combining large base models, and show promising results indicating that knowledge-based intelligences of large language models can improve the performance of 2D and 3D scene comprehension, generation, and editing, as well as other human-computer interactions Huang et al. (2023a). ). By integrating the Intelligent Body AI framework, the large base model is able to understand user inputs more deeply, resulting in complex and adaptive human-computer interaction systems. The emergent capabilities of large language models and visual language models play an unseen role in human-computer interaction for generative AI, embodied AI, knowledge augmentation for multimodal learning, mixed reality generation, text-to-vision editing, and 2D/3D simulation in games or robotics tasks. Recent advances in fundamental modeling of intelligent body AI provide an imminent catalyst for unlocking generalized intelligence in embodied intelligences. Large-scale action models or visual language models of intelligences open up new possibilities for generalized embodied systems, such as planning, problem solving, and learning in complex environments. Intelligent embodied AI takes further steps in the metaverse and points the way to early versions of generalized AI.

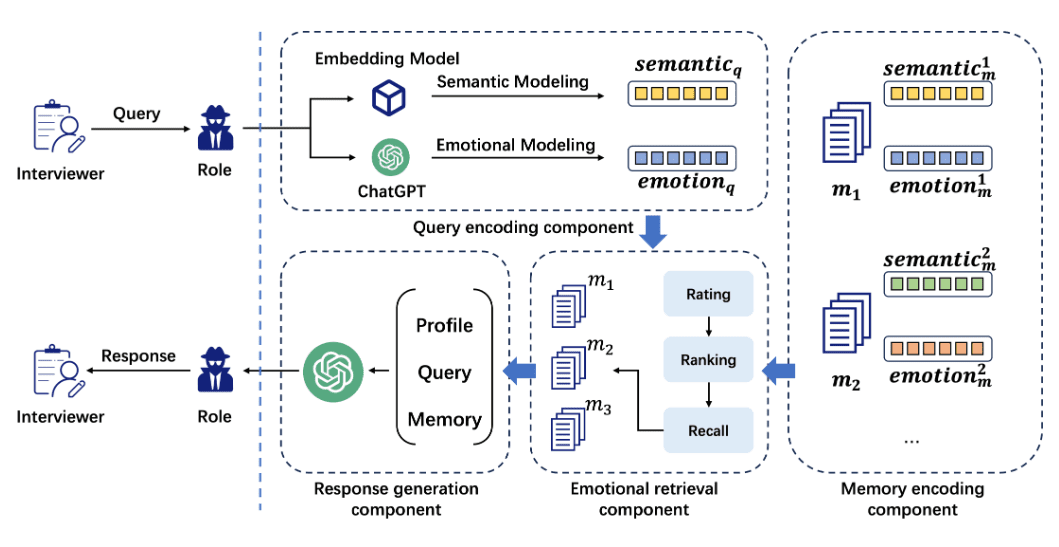

![Agent AI: 探索多模态交互的世界[李飞飞-经典必读]-1 Agent AI: 探索多模态交互的前沿世界[李飞飞-经典必读]](https://aisharenet.com/wp-content/uploads/2025/01/2dd99ca44577ecf.png)

Fig. 2: Multimodal Smartbody AI for 2D/3D embodied generation and editing interactions across reality.

2.1 Infinite Artificial Intelligence Intelligence

Artificial intelligence intelligences have the ability to interpret, predict and respond based on their training and input data. While these capabilities are advanced and improving, it is important to recognize their limitations and the impact of the underlying data on which they are trained. AI intelligences systems typically have the following capabilities:1) Predictive Modeling:AI intelligences can predict likely outcomes or suggest subsequent steps based on historical data and trends. For example, they may predict the continuation of text, the answer to a question, the next action of a robot, or the solution to a scenario.2) Decision Making: in some applications, AI intelligences can make decisions based on their inferences. Typically, the intelligences will make decisions based on what is most likely to achieve the specified goal. For AI applications such as recommender systems, intelligences can decide which products or content to recommend based on their inferences about user preferences.3) Handling Ambiguity: AI intelligences can often handle ambiguous inputs by inferring the most likely interpretation based on context and training. However, their ability to do so is limited by the scope of their training data and algorithms.4) Continuous Improvement: While some AI intelligences have the ability to learn from new data and interactions, many large language models do not continually update their knowledge base or internal representations after training. Their inferences are typically based only on the data available as of their last training update.

We show augmented interactive intelligences for multimodal and cross-reality agnostic integration with emergent mechanisms in Figure 2. Artificial intelligence intelligibles require the collection of large amounts of training data for each new task, which can be costly or impossible for many domains. In this study, we develop an infinite intelligent body that learns to transfer in-memory information from a generalized base model (e.g., GPT-X, DALL-E) to new domains or scenarios for scenario comprehension, generation, and interactive editing in a physical or virtual world.

One application of such infinite intelligences in robotics is RoboGen Wang et al. (2023d). In this study, the authors present a pipeline for autonomously running task suggestion, environment generation, and skill learning cycles.RoboGen is an effort to transfer knowledge embedded in large-scale models to robotics.

2.2 Artificial Intelligence for Intelligentsia with Large Base Models

Recent research has shown that large-scale base models play a crucial role in creating data that act as benchmarks for determining the actions of an intelligent body within the constraints imposed by the environment. Examples include the use of base models for robot manipulation Black et al. (2023); Ko et al. (2023) and navigation Shah et al. (2023a); Zhou et al. (2023a). To illustrate, Black et al. employ an image editing model as a high-level planner to generate images of future subgoals to guide the low-level strategy Black et al. (2023). For robot navigation, Shah et al. propose a system that employs a macrolanguage model to recognize landmarks from text and a visual language model to associate these landmarks with visual inputs, thereby augmenting navigation with natural language commands Shah et al. (2023a).

There is also a growing interest in generating conditioned human movements tailored to linguistic and environmental factors. Several AI systems have been proposed to generate movements and actions customized to specific linguistic commands Kim et al. (2023); Zhang et al. (2022); Tevet et al. (2022) and adapted to a variety of 3D scenes Wang et al. (2022a). This research highlights the growing capability of generative models in enhancing the adaptability and responsiveness of AI intelligences in a variety of scenarios.

2.2.1 Hallucinations

Intelligentsia that generate text are often prone to hallucinations, i.e., situations where the generated text is meaningless or does not match the provided source content Raunak et al. (2021); Maynez et al. (2020). Illusions can be categorized into two types, theinner illusioncap (a poem)external illusion Ji et al. (2023). Intrinsic illusions are those that contradict the source material, while extrinsic illusions are cases where the generated text contains additional information not initially included in the source material.

Some promising avenues for reducing the rate of illusions in language generation include the use of retrieval to enhance generation Lewis et al. (2020); Shuster et al. (2021) or other approaches that support natural language output through external knowledge retrieval Dziri et al. (2021); Peng et al. (2023). Typically, these approaches aim to enhance language generation by retrieving other source material and by providing mechanisms to check for contradictions between the generated response and the source material.

In the context of multimodal intelligent body systems, visual language models have also been shown to produce hallucinations Zhou et al. (2023b). A common cause of hallucinations in vision-based language generation is an overreliance on the co-occurrence of objects and visual cues in the training data Rohrbach et al. (2018). AI intelligences that rely exclusively on pre-trained macrolanguage or visual language models and use limited context-specific fine-tuning may be particularly prone to hallucinations because they rely on the internal knowledge base of the pre-trained model to generate actions and may not accurately understand the dynamics of the world state in which they are deployed.

2.2.2 Prejudice and inclusiveness

AI intelligences based on Large Language Models (LLMs) or Large Multimodal Models (LMMs) are biased due to multiple factors inherent in their design and training process. When designing these AI intelligences, we must be mindful of inclusivity and aware of the needs of all end-users and stakeholders. In the context of AI Intelligent Bodiesnon-exclusivity This refers to measures and principles taken to ensure that the responses and interactions of intelligentsia are inclusive, respectful, and sensitive to a wide range of users from diverse backgrounds. We will list key aspects of intelligent body bias and inclusiveness below.

- Training data: The underlying models are trained on large amounts of textual data collected from the Internet, including books, articles, websites, and other textual sources. These data often reflect biases that exist in human society, which the model may inadvertently learn and reproduce. This includes stereotypes, prejudices, and biased views related to race, gender, ethnicity, religion, and other personal attributes. In particular, by training on Internet data, and often using only English text for training, models implicitly learn the cultural norms of Western, Educated, Industrialized, Rich, and Democratic (WEIRD) societies Henrich et al. ( 2010 ), which have a disproportionate presence on the Internet. However, it is important to recognize that human-created datasets cannot be completely free of bias, as they often reflect societal biases as well as those of the individuals who originally generated and/or compiled the data.

- Historical and cultural bias: AI models are trained on large datasets from diverse content. As such, training data often includes historical texts or materials from different cultures. In particular, training data from historical sources may contain offensive or derogatory language that represents the cultural norms, attitudes, and prejudices of a particular society. This may result in models that perpetuate outdated stereotypes or fail to fully understand contemporary cultural changes and nuances.

- Language and contextual constraints: Language models may have difficulty understanding and accurately representing nuances in language, such as irony, humor, or cultural allusions. This can lead to misunderstandings or biased responses in some cases. Additionally, many aspects of spoken language are not captured by text-only data, leading to a potential disconnect between how humans understand language and how models understand it.

- Policies and guidelines: AI intelligences operate under strict policies and guidelines to ensure fairness and inclusiveness. For example, when generating images, there are rules to diversify the depiction of characters and avoid stereotypes related to race, gender, and other attributes.

- overgeneralization: These models tend to generate responses based on patterns seen in the training data. This can lead to overgeneralization, and models may generate responses that seem to stereotype certain groups or make broad assumptions.

- Continuous monitoring and updating: The AI system is continually monitored and updated to address any emerging issues of bias or inclusivity. Feedback from users and ongoing research in AI ethics play a critical role in this process.

- Amplifying the mainstream view: Since training data usually contains more content from the dominant culture or group, the model may be more biased in favor of these views and thus may underestimate or distort the views of minority groups.

- Ethical and Inclusive Design: AI tools should be designed with ethical considerations and inclusiveness as core principles. This includes respecting cultural differences, promoting diversity and ensuring that AI does not perpetuate harmful stereotypes.

- User Guide: Users are also instructed on how to interact with the AI in ways that promote inclusivity and respect. This includes avoiding requests that may lead to biased or inappropriate output. In addition, it can help mitigate situations where the model learns harmful material from user interactions.

Despite these measures, biases in AI intelligences persist. Ongoing efforts in AI research and development focus on further reducing these biases and enhancing the inclusiveness and fairness of AI systems for intelligences. Bias Reduction Efforts:

- Diverse and inclusive training data: Efforts are being made to include more diverse and inclusive sources in the training data.

- Bias detection and correction: Ongoing research focuses on detecting and correcting bias in model responses.

- Ethical guidelines and policies: Models are often bound by ethical guidelines and policies designed to mitigate bias and ensure respectful and inclusive interactions.

- Diverse representation: Ensure that the content generated or responses provided by AI intelligences represent a wide range of human experiences, cultures, ethnicities, and identities. This is particularly relevant in scenarios such as image generation or narrative construction.

- Bias mitigation: Actively work to reduce bias in AI responses. This includes bias related to race, gender, age, disability, sexual orientation, and other personal characteristics. The goal is to provide a fair and balanced response, not to perpetuate stereotypes or biases.

- Cultural sensitivity: AI's designs are culturally sensitive, recognizing and respecting the diversity of cultural norms, practices and values. This includes understanding and responding appropriately to cultural references and nuances.

- accessibility: Ensure that AI intelligences are accessible to users with different abilities, including those with disabilities. This may involve incorporating features that make it easier for people with visual, hearing, motor or cognitive impairments to interact.

- Language-based inclusiveness: Provide support for multiple languages and dialects for a global user base and be sensitive to intra-language nuances and variations Liu et al. ( 2023b ).

- Ethical and respectful interaction: The intelligence is programmed to interact ethically and respectfully with all users, avoiding responses that may be perceived as offensive, harmful, or disrespectful.

- User feedback and adaptation: Incorporate user feedback to continuously improve the inclusiveness and effectiveness of AI intelligences. This includes learning from interactions to better understand and serve a diverse user base.

- Compliance with inclusive guidelines: Adhere to established guidelines and standards for inclusivity in AI intelligences, which are typically set by industry groups, ethics committees, or regulatory agencies.

Despite these efforts, it is important to be aware of the potential for biases in responses and to think critically about interpreting them.Continuous improvements in AI intelligences technology and ethical practices aim to reduce these biases over time. Intelligent Bodies One of the overarching goals of AI inclusivity is to create an intelligent body that is respectful and accessible to all users, regardless of their background or identity.

2.2.3 Data privacy and use

A key ethical consideration for AI intelligences involves understanding how these systems process, store and potentially retrieve user data. We discuss key aspects below:

Data collection, use and purpose.

When using user data to improve model performance, model developers have access to data collected by AI intelligences in production and when interacting with users. Some systems allow users to view their data through their user account or by making a request to a service provider. It is important to recognize what data the AI intelligences are collecting during these interactions. This may include text input, user usage patterns, personal preferences, and sometimes more sensitive personal information. Users should also understand how the data collected from their interactions is used. If, for some reason, the AI holds incorrect information about a specific individual or group, there should be a mechanism for users to help correct that error once it is recognized. This is important for accuracy and respect for all users and groups. Common uses for retrieving and analyzing user data include improving user interactions, personalizing responses, and system optimization. It is important for developers to ensure that data is not used for purposes that users have not consented to (e.g., unsolicited marketing).

Storage and security.

Developers should be aware of where user interaction data is stored and the security measures in place to protect it from unauthorized access or disclosure. This includes encryption, secure servers, and data protection protocols. It is important to determine if and under what conditions smart body data is shared with third parties. This should be transparent and usually requires user consent.

Data deletion and retention.

It is also important for users to understand how long user data is stored and how users can request that their data be deleted. Many data protection laws give users the right to be forgotten, which means they can request that their data be deleted.AI Intelligentsia must comply with data protection laws such as the EU's GDPR or California's CCPA. These laws govern data processing practices and users' rights to their personal data.

Data portability and privacy policy.

Additionally, developers must create privacy policies for AI intelligences to document and explain to users how their data will be handled. This should detail data collection, use, storage and user rights. Developers should ensure they obtain user consent for data collection, especially for sensitive information. Users can often opt out or restrict the data they provide. In some jurisdictions, users may even have the right to request a copy of their data in a format that can be transferred to another service provider.

Anonymization.

For data used in broader analytics or AI training, it should ideally be anonymized to protect individual identities. Developers must understand how their AI intelligences retrieve and use historical user data during interactions. This may be for personalization or to improve the relevance of a response.

In summary, understanding data privacy for AI intelligences includes understanding how user data is collected, used, stored, and protected, and ensuring that users are aware of their rights regarding access, correction, and deletion of their data. Understanding the data retrieval mechanisms of users and AI intelligences is also critical to a full understanding of data privacy.

2.2.4 Interpretability and descriptiveness

Learning by imitation → decoupling

Intelligentsia are typically trained using continuous feedback loops in reinforcement learning (RL) or imitation learning (IL), starting with a randomly initialized strategy. However, this approach faces bottlenecks in obtaining initial rewards in unfamiliar environments, especially when rewards are sparse or only available at the end of long-step interactions. Therefore, a superior solution is to use infinite-memory intelligences trained through imitation learning, which can learn strategies from expert data, leading to improved exploration and utilization of unseen environment spaces, as well as emerging infrastructures, as shown in Fig. 3. With expert features to help the intelligent body better explore and utilize the unseen environmental space. Intelligent body AI can learn strategies and new paradigm processes directly from expert data.

Traditional imitation learning allows intelligences to learn strategies by imitating the behavior of an expert demonstrator. However, learning expert strategies directly may not always be the best approach, as the intelligent body may not generalize well to unseen situations. To address this problem, we propose to learn an intelligent body with contextual cues or implicit reward functions that capture key aspects of the expert's behavior, as shown in Figure 3. This equips the infinite-memory intelligences with physical-world behavioral data learned from expert demonstrations for task execution. It helps overcome the drawbacks of existing imitation learning, such as the need for large amounts of expert data and the potential for error in complex tasks. The key idea behind the Intelligent Body AI has two components: 1) the Infinite Intelligent Body, which collects physical-world expert demonstrations as state-action pairs, and 2) the virtual environment of the Imitation Intelligent Body Generator. The Imitation Intelligent Body generates actions that mimic the behavior of the expert, and the Intelligent Body learns a policy mapping from states to actions by a loss function that reduces the difference between the expert's actions and the actions generated by the learning policy.

Decoupling → Generalization

Instead of relying on task-specific reward functions, the intelligent body learns from expert demonstrations that provide a diverse set of state-action pairs covering various task aspects. The intelligent body then learns strategies for mapping states to actions by imitating the expert's behavior. Decoupling in imitation learning refers to separating the learning process from the task-specific reward function, thus allowing the strategy to generalize across different tasks without explicitly relying on the task-specific reward function. Through decoupling, an intelligent can learn from expert demonstrations and learn a strategy that can be adapted to various situations. Decoupling enables transfer learning, in which a strategy learned in one domain can be adapted to other domains with minimal fine-tuning. By learning a generalized strategy that is not tied to a specific reward function, an intelligent body can use the knowledge it has gained in one task to perform well in other related tasks. Since the intelligent body does not depend on a specific reward function, it can adapt to changes in the reward function or the environment without extensive retraining. This makes the learned strategies more robust and generalizable across different environments. In this context, decoupling refers to the separation of two tasks in the learning process: learning the reward function and learning the optimal policy.

![Agent AI: 探索多模态交互的边界-3 Agent AI: 探索多模态交互的前沿世界[李飞飞-经典必读]](https://aisharenet.com/wp-content/uploads/2025/01/3cc0593b0703242.png)

Figure 3: Example of an emergent interaction mechanism using intelligences to recognize image-related text from candidate text. The task involves integrating external world information using multimodal AI intelligences from the network and manually labeled knowledge interaction samples.

Generalization → emergent behavior

Generalization explains how emergent properties or behaviors emerge from simpler components or rules. The key idea is to identify the basic elements or rules that control the behavior of the system, such as individual neurons or basic algorithms. Thus, by observing how these simple components or rules interact with each other. The interactions of these components usually lead to the emergence of complex behaviors that cannot be predicted by examining only the individual components. Generalization across different levels of complexity allows the system to learn generic principles that apply at these levels, leading to emergent properties. This allows the system to adapt to new situations, demonstrating the emergence of more complex behaviors from simpler rules. Additionally, the ability to generalize across different levels of complexity facilitates the transfer of knowledge from one domain to another, which helps to emerge complex behaviors in new environments as the system adapts.

2.2.5 Reasoning Enhancement

The reasoning capabilities of AI intelligences lie in their ability to interpret, predict, and respond based on training and input data. While these capabilities are advanced and improving, it is important to recognize their limitations and the impact of the underlying data on which they are trained. In particular, in the context of Large Language Models (LLMs), it refers to their ability to draw conclusions, make predictions, and generate responses based on the data they are trained on and the inputs they receive.Reasoning augmentation in AI intelligences refers to the use of additional tools, techniques, or data to augment the natural reasoning capabilities of an AI in order to improve its performance, accuracy, and utility. This is especially important in complex decision-making scenarios or when dealing with nuanced or specialized content. We'll list particularly important sources of reasoning enhancement below:

Data richness.

Incorporating additional (often external) data sources to provide more context or background can help AI intelligences make more informed inferences, especially in areas where their training data may be limited. For example, AI intelligences can infer meaning from the context of a conversation or text. They analyze the given information and use it to understand the intent and relevant details of a user's query. These models are good at recognizing patterns in data. They use this ability to infer information about language, user behavior, or other relevant phenomena based on patterns learned during training.

Algorithmic Enhancement.

Improving the underlying algorithms of AI for better reasoning. This may involve using more advanced machine learning models, integrating different types of AI (e.g., combining natural language processing (NLP) with image recognition), or updating algorithms to better handle complex tasks. Reasoning in language modeling involves understanding and generating human language. This includes grasping tone, intent, and the nuances of different language structures.

Human in the Loop (HITL).

Involving humans to augment the AI's reasoning may be particularly useful in areas where human judgment is critical, such as ethical considerations, creative tasks, or ambiguous scenarios. Humans can provide guidance, correct errors, or offer insights that the intelligence cannot infer on its own.

Real-time feedback integration.

Using real-time feedback from the user or environment to augment reasoning is another promising approach to improving performance during inference. For example, an AI may adjust its recommendations based on real-time user responses or changing conditions in a dynamic system. Alternatively, if the AI takes an action that violates certain rules in a simulated environment, feedback may be dynamically provided to the AI to help it correct itself.

Cross-disciplinary knowledge transfer.

Leveraging knowledge or models from one domain to improve reasoning in another is particularly useful when generating output in specialized disciplines. For example, techniques developed for language translation might be applied to code generation, or insights from medical diagnostics could enhance predictive maintenance in machinery.

Customization for specific use cases.

Tailoring the reasoning capabilities of an AI for a specific application or industry may involve training the AI on specialized datasets or fine-tuning its model to better suit a particular task, such as legal analysis, medical diagnosis, or financial forecasting. Since specific language or information within a domain may contrast with language from other domains, fine-tuning the intelligences on domain-specific knowledge may be beneficial.

Ethical and bias considerations.

It is important to ensure that the enhancement process does not introduce new biases or ethical issues. This involves careful consideration of the impact on fairness and transparency of sources of additional data or new reasoning enhancement algorithms. When reasoning, especially on sensitive topics, AI intelligences must sometimes respond to ethical considerations. This includes avoiding harmful stereotypes, respecting privacy, and ensuring fairness.

Continuous learning and adaptation.

Regularly update and refine AI's capabilities to keep up with new developments, changing data landscapes, and evolving user needs.

In summary, reasoning augmentation in AI intelligences involves methods to augment their natural reasoning capabilities with additional data, improved algorithms, artificial inputs, and other techniques. Depending on the use case, such enhancements are often critical for handling complex tasks and ensuring the accuracy of the intelligences' output.

2.2.6 Regulation

Recently, there have been significant advances in intelligent body AI and its integration with embodied systems has opened up new possibilities for interacting with intelligent bodies through more immersive, dynamic, and engaging experiences. To accelerate this process and alleviate the drudgery involved in the development of intelligent body AI, we propose the development of a next-generation AI-enabled intelligent body interaction pipeline. Develop a human-machine collaboration system that enables humans and machines to communicate and interact meaningfully. The system could utilize the conversational capabilities and wide range of actions of a Large Language Model (LLM) or Visual Language Model (VLM) to talk to human players and recognize human needs. It will then perform appropriate actions to assist the human player as required.

When using Large Language Models (LLM)/Visual Language Models (VLM) for human-robot collaborative systems, it is important to note that these models operate as black boxes and produce unpredictable outputs. This uncertainty can become critical in physical settings (e.g., operating an actual robot). One way to address this challenge is to limit the focus of the Large Language Model (LLM)/Visual Language Model (VLM) through cue engineering. For example, when performing robotic task planning based on instructions, providing environmental information in cues has been reported to produce more stable output than relying only on text Gramopadhye and Szafir (2022). This report is supported by Minsky's AI framework theory Minsky (1975), which suggests that the problem space to be solved by a Large Language Model (LLM)/Visual Language Model (VLM) is defined by a given prompt. Another approach is to design prompts such that the Large Language Model (LLM)/Visual Language Model (VLM) contains explanatory text to enable the user to understand what the model is focusing on or recognizing. In addition, implementing a higher level that allows for pre-execution validation and modification under human guidance can facilitate the operation of systems that work under such guidance (Figure 4).

![Agent AI: 探索多模态交互的边界-4 Agent AI: 探索多模态交互的前沿世界[李飞飞-经典必读]](https://aisharenet.com/wp-content/uploads/2025/01/6ffe2854665853e.png)

Figure 4: Robotics teaching system developed in Wake et al. (2023c). (Left) System workflow. The process consists of three steps: task planning, which ChatGPT Planning of robot tasks based on commands and environmental information; Demonstration, where the user demonstrates action sequences visually. All steps are reviewed by the user, and if any step fails or shows defects, previous steps can be revisited as needed. (right) A web application that allows uploading of demo data and interaction between the user and ChatGPT.

2.3 Intelligentsia AI for emergent capabilities

Despite the increasing adoption of interactive intelligent body AI systems, most of the proposed approaches still face challenges in terms of generalization performance in unseen environments or scenarios. Current modeling practices require developers to prepare large datasets for each domain to fine-tune/pre-train the model; however, this process is costly or even impossible if the domain is new. To address this problem, we constructed interactive intelligences that utilize the knowledge memory of generalized base models (ChatGPT, Dall-E, GPT-4, etc.) for new scenarios, in particular for generating collaborative spaces between humans and intelligences. We identify an emergent mechanism - which we call mixed reality with knowledge-reasoning interactions - that facilitates collaboration with humans to solve challenging tasks in complex real-world environments, and the ability to explore unseen environments to adapt to virtual reality. For this mechanism, intelligences learn i) micro-responses across modalities: collecting relevant individual knowledge for each interaction task (e.g., understanding unseen scenarios) from explicit network sources and implicitly inferring it through outputs from pre-trained models; ii) macro-behavior in a reality-independent manner: refining the dimensions and patterns of interactions in the linguistic and multimodal domains and reasoning about them according to the represented roles, certain goal variables, mixed reality and influence diversity of collaborative information in the Large Language Model (LLM) to make changes. We investigate the task of knowledge-guided interaction synergies for scenario generation in collaboration with various OpenAI models and show how the Interactive Intelligentsia system can further enhance promising results for large-scale base models in our setup. It integrates and improves the generalization depth, awareness and interpretability of complex adaptive AI systems.

3 Intelligent Body AI Paradigm

In this section, we discuss a new paradigm and framework for training AI for intelligences. We hope to achieve several goals with the proposed framework:

- -

Utilizing existing pre-training models and pre-training strategies to efficiently guide our intelligences to understand important modalities, such as textual or visual inputs. - -

Support adequate long-term mission planning capacity. - -

Introducing a mnemonic framework that allows learning to be encoded and retrieved at a later date. - -

Allows the use of environmental feedback to effectively train the intelligences to learn which actions to take.

We show a high-level graph of new intelligences in Figure 5, outlining the important sub-modules of such a system.

![Agent AI: 探索多模态交互的边界-5 Agent AI: 探索多模态交互的前沿世界[李飞飞-经典必读]](https://aisharenet.com/wp-content/uploads/2025/01/893a5d2140b345e.png)

Fig. 5: Our proposed new intelligent body paradigm for multimodal generalized intelligences. As shown, there are 5 main modules: 1) environment and perception, including task planning and skill observation; 2) intelligent body learning; 3) memory; 4) intelligent body action; and 5) cognition.

3.1 Large Language Modeling and Visual Language Modeling

We can use either a Large Language Model (LLM) or a Visual Language Model (VLM) to bootstrap the components of an intelligent body, as shown in Figure 5. In particular, Large Language Models have been shown to perform well in task planning Gong et al. (2023a), contain a large amount of world knowledge Yu et al. (2023b), and exhibit impressive logical reasoning Creswell et al. (2022). In addition, visual language models like CLIP Radford et al. (2021) provide a generalized visual coder aligned to language as well as providing zero-sample visual recognition capabilities. For example, state-of-the-art open-source multimodal models such as LLaVA Liu et al. (2023c) and InstructBLIP Dai et al. (2023) rely on the frozen CLIP model as a visual encoder.

![Agent AI: 探索多模态交互的边界-4 Agent AI: 探索多模态交互的前沿世界[李飞飞-经典必读]](https://aisharenet.com/wp-content/uploads/2025/01/bbe6faec7c0ecfb.png)

Figure 6: We show the current paradigm for creating multimodal AI intelligences by combining large language models (LLMs) with large visual models (LVMs). Typically, these models receive visual or verbal input and use pre-trained and frozen visual and verbal models to learn to connect and bridge smaller sub-networks of modalities. Examples include Flamingo Alayrac et al. (2022), BLIP-2 Li et al. (2023c), InstructBLIP Dai et al. (2023), and LLaVA Liu et al.

3.2 Definition of Intelligent Body Transformer

In addition to using frozen macrolanguage models and visual language models as AI intelligences, a single intelligence can be used Transformer model, which combines the visual Token and linguistic Token as inputs, similar to Gato Reed et al. (2022). In addition to visual and linguistic, we add a third generic type of input, which we denote asintelligent body Token: Conceptually, an Intelligent Body Token is used to reserve a specific subspace for Intelligent Body behaviors in the input and output space of a model. For robots or games, this can be represented as the input action space of the controller. Intelligent Body Token can also be used when training an intelligent body to use a specific tool, such as an image generation or image editing model, or for other API calls, as shown in Figure 7, where we can combine Intelligent Body Token with Visual and Linguistic Token to generate a unified interface for training multimodal intelligent body AI. Using the Intelligent Body Transformer has several advantages over using a large proprietary large language model as an intelligent body. First, the model can be easily customized to very specific intelligent body tasks that may be difficult to represent in natural language (e.g., controller inputs or other specific actions). Thus, intelligences can learn from environmental interactions and domain-specific data to improve performance. Second, by accessing the probabilities of an intelligent body's Token, it can be easier to understand why a model takes or does not take a particular action. Third, certain domains (e.g., healthcare and law) have stringent data privacy requirements. Finally, a relatively small Intelligent Body Transformer may be much cheaper than a larger proprietary language model.

![Agent AI: 探索多模态交互的边界-5 Agent AI: 探索多模态交互的前沿世界[李飞飞-经典必读]](https://aisharenet.com/wp-content/uploads/2025/01/b415bfaf197bc30.png)

Fig. 7: Unified multimodal Transformer model for intelligentsia. Instead of connecting frozen submodules and using existing base models as building blocks, we propose a unified end-to-end training paradigm for intelligent body systems. We can still initialize the submodules using the large language model and the large vision model in Figure 6, but we can also use SmartBody Tokens, which are specialized tokens used to train models to perform SmartBody behaviors in specific domains (e.g., robotics.) For more details on SmartBody Tokens, see Section 3.2.

3.3 Creating an Intelligent Body Transformer

As shown in Figure 5 above, we can use the new Intelligent Body Paradigm with Intelligent Bodies guided by Large Language Models and Visual Language Models, and use the data generated by the large base model to train the Intelligent Body Transformer model to learn to perform specific goals. In the process, the Intelligent Body model is trained to be customized specifically for a particular task and domain. This approach allows you to leverage features and knowledge learned from pre-existing base models. We show a simplified overview of the process in two steps below:

Define goals within the domain.

In order to train an Intelligent Body Transformer, the goal and action space of the Intelligent Body for each particular environment needs to be explicitly defined. This includes determining which specific tasks or actions the intelligences need to perform and assigning unique intelligences Token to each task or action.In addition, any automated rules or procedures that can be used to recognize successful task completion can significantly increase the amount of data available for training. Otherwise, data generated by the base model or manually annotated data will be needed to train the model. Once data is collected and the performance of the intelligences can be evaluated, the process of continuous improvement can begin.

Continuous improvement.

Continuously monitoring the performance of the model and collecting feedback are fundamental steps in the process. Feedback should be used for further fine-tuning and updating. It is also critical to ensure that the model does not perpetuate biased or unethical results. This requires scrutinizing the training data, periodically checking for bias in the output, and, if needed, training the model to identify and avoid bias. Once the model reaches satisfactory performance, it can be deployed into the intended application. Continuous monitoring remains critical to ensure that the model is performing as expected and to facilitate necessary adjustments. See Section 8 for more details on this process, sources of training data, and more details on continuous learning of AI for intelligences.

4 Intelligence Body AI Learning

4.1 Strategies and Mechanisms

Strategies for interactive AI in different domains extend the paradigm of using trained intelligences that actively seek to collect user feedback, action information, useful knowledge for generation and interaction to invoke large base models. Sometimes, there is no need to re-train the large language model/visual language model, and we improve the performance of the intelligences by providing them with improved contextual cues at test time. On the other hand, it always involves modeling knowledge/reasoning/common sense/reasoning interactions through a combination of ternary systems - one system performs knowledge retrieval from multi-model queries, the second performs interaction generation from relevant intelligences, and the last trains new, information-rich self-supervised training or pre-training in an improved way, including reinforcement learning or imitation learning.

4.1.1 Reinforcement of learning (RL)

There is a long history of using reinforcement learning (RL) to train interactive intelligences that exhibit intelligent behavior. Reinforcement learning is a method for learning the optimal relationship between states and actions based on the rewards (or punishments) received for their actions. Reinforcement learning is a highly scalable framework that has been applied to numerous applications including robotics, however, it typically faces several leaderboard problems and large language modeling/visual language modeling has shown its potential to mitigate or overcome some of these difficulties:

- Reward designThe efficiency of strategy learning depends heavily on the design of the reward function. Designing a reward function requires not only an understanding of the reinforcement learning algorithm but also an in-depth knowledge of the nature of the task, and thus usually requires designing the function based on expert experience. Several studies have explored the use of large language models/visual language models to design reward functions Yu et al. (2023a); Katara et al. (2023); Ma et al.

- Data collection and efficiency Given its exploratory nature, strategy learning based on reinforcement learning requires large amounts of data Padalkar et al. (2023). The need for large amounts of data becomes particularly evident when the strategy involves managing long sequences or integrating complex operations. This is because these scenarios require more nuanced decision making and learning from a wider range of situations. In recent studies, efforts have been made to enhance data generation to support strategy learning Kumar et al. (2023); Du et al. Furthermore, in some studies, these models have been integrated into reward functions to improve strategy learning Sontakke et al. (2023). Parallel to these developments, another study focused on achieving parameter efficiency in the learning process using visual language models Tang et al. (2023); Li et al. (2023d) and a large language model Shi et al. (2023).

- longitudinal step Regarding data efficiency, reinforcement learning becomes more challenging as the length of action sequences increases. This is due to the unclear relationship between actions and rewards (known as the credit allocation problem), as well as the increase in the number of states to be explored, which requires a large amount of time and data. A typical approach for long and complex tasks is to decompose them into a series of subgoals and apply pre-trained strategies to solve each subgoal (e.g., Takamatsu et al. (2022)). This idea belongs to the Task and Motion Planning (TAMP) framework Garrett et al. (2021). Task and motion planning consists of two main components: task planning, which entails identifying high-level sequences of operations, and motion planning, which entails finding physically consistent, collision-free trajectories to achieve the goals of the task plan. Large language models are well suited for task and motion planning, and recent research has typically taken the approach that high-level task planning is performed using large language models, while low-level control is solved by reinforcement learning-based strategies Xu et al. (2023); Sun et al. (2023a); Li et al. (2023b); Parakh et al. The advanced features of large language models allow them to efficiently decompose abstract instructions into subgoals Wake et al. (2023c), thus contributing to enhanced language comprehension in robotic systems.

4.1.2 Imitation Learning (IL)

While reinforcement learning aims to train strategies based on exploring behaviors and maximizing the rewards of interacting with the environment, imitation learning (IL) aims to use expert data to mimic the behavior of experienced intelligences or experts. For example, one of the main frameworks for imitation-based learning in robotics is behavioral cloning (BC). Behavioral cloning is an approach to train a robot to imitate an expert's actions through direct copying. In this approach, the actions of an expert in performing a specific task are recorded and the robot is trained to replicate these actions in similar situations. Recent approaches based on behavioral cloning typically combine techniques from large language modeling/visual language modeling, resulting in more advanced end-to-end models. For example, Brohan et al. present RT-1 Brohan et al. (2022) and RT-2 Brohan et al. (2023), Transformer-based models that take a series of images and language as inputs and output sequences of base and arm actions. These models are reported to show high generalization performance due to being trained on a large amount of training data.

4.1.3 Traditional RGB

Learning intelligent body behavior using image inputs has been of interest for many years Mnih et al. (2015). The inherent challenge of using RGB input is dimensionality catastrophe. To address this problem, researchers have either used more data Jang et al. (2022); Ha et al. (2023) or introduced an inductive bias in model design to improve sample efficiency. In particular, the authors integrate 3D structures into the model architecture used for manipulation Zeng et al. (2021); Shridhar et al. (2023); Goyal et al. (2023); James and Davison (2022). For robot navigation, the authors Chaplot et al. (2020a, b) utilize maps as a representation. Maps can be learned by neural networks aggregating all previous RGB inputs, as well as by 3D reconstruction methods (e.g., neural radiation fields) Rosinol et al. (2022).

To obtain more data, researchers have used graph simulators to synthesize synthetic data Mu et al. (2021); Gong et al. (2023b) and have attempted to close the sim2real gap Tobin et al. (2017); Sadeghi and Levine (2016); Peng et al. (2018). Recently, concerted efforts have been made to curate large-scale datasets aiming at solving the data scarcity problem Padalkar et al. (2023); Brohan et al. On the other hand, data enhancement techniques have also been extensively investigated in order to increase sample complexity Zeng et al. (2021); Rao et al. (2020); Haarnoja et al. (2023); Lifshitz et al.

4.1.4 Context learning

Context learning has been shown to be an effective method for solving natural language processing tasks using large language models like GPT-3 Brown et al. (2020); Min et al. (2022). By providing task examples in the context of the big language model prompts, it can be seen that sample less prompts are an effective way to contextualize the model output in a variety of tasks in natural language processing. Factors such as the variety of examples and the quality of the examples presented in the context may improve the quality of the model output An et al. (2023); Dong et al. (2022). In the context of multimodal base models, when only a small number of examples are given, models like Flamingo and BLIP-2 Alayrac et al. (2022); Li et al. (2023c) have been shown to be effective in a wide variety of visual comprehension tasks. Contextual learning of intelligences in the environment can be further improved by integrating context-specific feedback when certain actions are taken Gong et al. (2023a).

4.1.5 Optimization in Intelligent Body Systems

Optimization of intelligent body systems can be divided into spatial and temporal aspects. Spatial optimization considers how intelligent bodies operate in physical space to perform tasks. This includes coordination between robots, resource allocation, and maintaining an organized space.

To efficiently optimize intelligent-body AI systems, especially those in which a large number of intelligences run in parallel, previous work has focused on using high-volume reinforcement learning Shacklett et al. (2023). Since task-specific multi-intelligent body interaction datasets are rare, self-gaming reinforcement learning enables teams of intelligent bodies to improve over time. However, this can also lead to very fragile intelligences that can only work under self-gaming and not with humans or other independent intelligences because they overfit the self-gaming training paradigm. To address this problem, we can instead discover a set of different conventions Cui et al. (2023); Sarkar et al. (2023) and train an intelligent that understands the various conventions. The base model can further help to establish conventions with humans or other independent intelligences, thus enabling smooth coordination with new intelligences.

On the other hand, temporal optimization focuses on how intelligences perform tasks over time. This includes task scheduling, sequencing, and timeline efficiency. For example, optimizing the trajectory of a robot arm is an example of efficiently optimizing movement between successive tasks Zhou et al. (2023c). At the task-scheduling level, algorithms like the LLM-DP Dagan et al. (2023) and the ReAct Approaches such as Yao et al. (2023a) address effective task planning by interactively integrating environmental factors.

4.2 Agent systems (zero and few sample levels)

4.2.1 Agent Module

Our initial exploration of the Agent paradigm involved the development of Agent AI "modules" for interactive multimodal Agents using either the Large Language Model (LLM) or the Visual Language Model (VLM). Our initial Agent modules contribute to training or contextual learning and employ a minimalist design designed to demonstrate the ability of an Agent to schedule and coordinate effectively. We also explored initial cue-based memory techniques that help with better planning and inform future methods of action in the field. To illustrate this, our "MindAgent" infrastructure consists of 5 main modules: 1) Environment Awareness with Task Planning, 2) Agent Learning, 3) Memory, 4) Generalized Agent Action Prediction, and 5) Cognition, as shown in Figure 5.

4.2.2 Agent infrastructure

Agent-based AI is a large and rapidly growing community in entertainment, research, and industry. The development of large-scale base models has significantly improved the performance of Agent AI systems. However, creating agents in this way is limited by the increasing amount of work and overall cost required to create high-quality datasets. At Microsoft, building a high-quality Agent infrastructure has had a significant impact on multimodal Agent co-piloting through the use of advanced hardware, diverse data sources, and robust software libraries. As Microsoft continues to push the boundaries of Agent technology, the AI Agent platform is expected to continue to be a dominant force in multimodal intelligence for years to come. Nonetheless, Agent AI interaction currently remains a complex process that requires a combination of skills. Recent advances in the field of large-scale generative AI modeling have the potential to significantly reduce the high cost and time currently required for interactive content, both for large studios and for smaller, independent content creators to design high-quality experiences beyond their current capabilities. Multimodal Agents Inside Current HCI systems are largely rule-based. They do have intelligent behaviors that respond to human/user actions and have some degree of network knowledge. However, these interactions are usually limited by the cost of software development, which prevents specific behaviors from being implemented in the system. In addition, current models are not designed to help users achieve goals in situations where they are unable to accomplish specific tasks. Therefore, an Agent AI system infrastructure is needed to analyze user behavior and provide appropriate support when needed.

4.3 Agent-based base models (pre-training and fine-tuning levels)

The use of pre-trained base models provides significant advantages in terms of broad applicability across a variety of use cases. The integration of these models enables the development of customized solutions for a variety of applications, thus avoiding the need to prepare large labeled datasets for each specific task.

A notable example in the field of navigation is the LM-Nav system Shah et al. (2023a), which combines GPT-3 and CLIP in a new approach. it effectively utilizes textual landmarks generated by a language model to navigate by anchoring them in images acquired by the robot. This approach demonstrates the seamless fusion of textual and visual data, significantly enhancing the robot's ability to navigate while maintaining broad applicability.

In robot manipulation, several studies have proposed the use of off-the-shelf LLMs (e.g., ChatGPT) along with an open vocabulary object detector.The combination of an LLM and an advanced object detector (e.g., Detic Zhou et al. (2022)) can help to comprehend human commands while situating the textual information within the scene information Parakh et al. (2023 ). Furthermore, recent advances demonstrate the potential of using cue engineering in conjunction with advanced multimodal models such as GPT-4V(ision) Wake et al. (2023b). This technique opens the way to multimodal task planning, highlighting the versatility and adaptability of pre-trained models in a variety of environments.

5 Agent AI Classification

5.1 Generic Agent Domain

Computer-based actions and Generalized Agents (GA) are useful for many tasks. Recent advances in the field of large-scale base models and interactive AI have enabled new capabilities for GA. However, for GA to be truly valuable to its users, it must be easy to interact with and generalize to a wide range of environments and modalities. We expand on the main chapters on Agent-based AI in Section 6 with high quality, especially in areas related to these topics in general:

Multimodal Agent AI (MMA) is an upcoming forum ^1^^^1^ Current URL: https://multimodalagentai.github.io/ for our research and industry communities to interact with each other and with the broader Agent AI research and technology community. Recent advances in the field of large-scale fundamental models and interactive AI have enabled new capabilities for General Purpose Agents (GA), such as predicting user behavior and task planning in constrained environments (e.g., MindAgent Gong et al. (2023a), fine-grained multimodal video understanding Luo et al. (2022), robotics Ahn et al. ( (2022b); Brohan et al. (2023)), or providing users with chat companions that contain knowledge feedback (e.g., web-based customer support for healthcare systems Peng et al. (2023)). More detailed information on representative and recent representative work is shown below. We hope to discuss our vision for the future of MAA and inspire future researchers to work in the field. This paper and our forum cover the following major topics, but are not limited to them:

- Main Theme: Multimodal Agent AI, Generalized Agent AI

- Secondary theme: Embodied Agents, Motion Agents, Language-based Agents, Visual and Linguistic Agents, Knowledge and Reasoning Agents, Agents for Gaming, Robotics, Healthcare, and more.

- Extended Theme: Visual navigation, simulated environments, rearrangement, Agent-based modeling, VR/AR/MR, embodied vision, and language.

Next, we list the following representative Agent categories:

5.2 Possessive Agent

Our biological brain exists in our bodies, and our bodies move through an ever-changing world. The goal of embodied AI is to create Agents, such as robots, that learn to creatively solve challenging tasks that require interaction with the environment. While this is a huge challenge, major advances in deep learning and the growing popularity of large datasets such as ImageNet have enabled superhuman performance on a wide range of AI tasks that were previously considered difficult to handle. Computer vision, speech recognition, and natural language processing have undergone transformative revolutions in passive input-output tasks such as language translation and image categorization, while reinforcement learning has achieved world-class performance in interactive tasks such as gaming. These advances provide a powerful impetus for embodied AI, enabling more and more users to move quickly toward intelligent agents that can interact with machines.

5.2.1 Action Agent

Action Agents are agents that need to perform physical actions in a simulated physical environment or in the real world; in particular, they need to be actively involved in activities with the environment. We broadly categorize Action Agents into two different classes based on their application areas: game AI and robotics.

In Game AI, the Agent will interact with the game environment and other independent entities. In these settings, natural language enables smooth communication between Agent and humans. Depending on the game, there may be a specific task to be accomplished that provides a real reward signal. For example, in competitive diplomacy games, human-level gaming can be achieved by using human dialog data and training language models with reinforcement-learning action strategies Meta Fundamental AI Research Diplomacy Team et al. (2022) Meta Fundamental AI Research (FAIR ) Diplomacy Team, Bakhtin, Brown, Dinan, Farina, Flaherty, Fried, Goff, Gray, Hu, et al. (FAIR).

In some cases, Agents will act like ordinary residents of a town Park et al. (2023a) without trying to optimize for specific goals. Base models are useful in these settings because they can simulate more natural-looking interactions by mimicking human behavior. When augmented with external memory, they produce convincing Agents that can carry on conversations, organize daily activities, build relationships, and have virtual lives.

5.2.2 Interactive Agent