3FS: A Parallel File System to Improve Data Access Efficiency (DeepSeek Open Source Week Day 5)

General Introduction

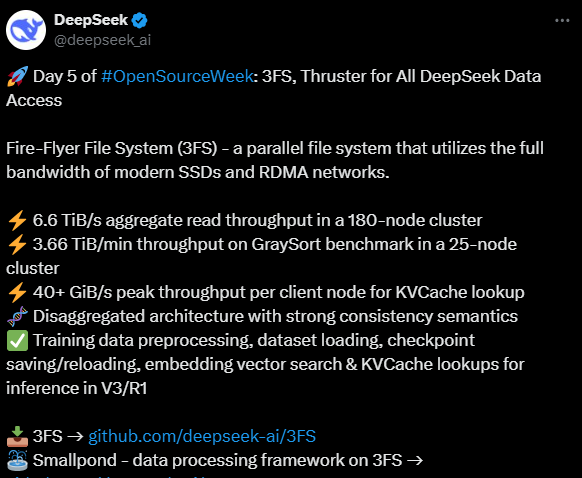

3FS (Fire-Flyer File System) is an open-source parallel file system developed by DeepSeek. It is designed around modern SSDs and RDMA networks to make data access far more efficient. In DeepSeek's read stress test on a cluster of 180 storage nodes, it reached an aggregate read throughput of about 6.6 TiB/s. On the GraySort benchmark it sorted data at 3.66 TiB/min. 3FS was released on Day 5 of DeepSeek Open Source Week, and it reflects both the team's engineering work and its commitment to sharing with the community. Traditional distributed file systems are designed around disks; 3FS is instead built around the characteristics of modern hardware. This makes it a good fit for large-scale, data-intensive workloads such as AI training and big data analytics. Developers and enterprise users can get the source code from GitHub and deploy and customize it freely.
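As a rough sanity check on the headline read number, we can divide it across the storage nodes to get the average share each node delivered. The NIC figure is an assumption taken from DeepSeek's benchmark description, which lists two 200 Gb/s InfiniBand NICs per storage node. The result is an average, not a per-node measurement.

```latex
\frac{6.6\ \text{TiB/s}}{180\ \text{nodes}}
  = \frac{6.6 \times 1024\ \text{GiB/s}}{180}
  \approx 37.5\ \text{GiB/s per storage node}

2 \times 200\ \text{Gb/s} = 400\ \text{Gb/s} = 50 \times 10^{9}\ \text{B/s}
  \approx 46.6\ \text{GiB/s of NIC line rate per node}

\frac{37.5}{46.6} \approx 0.80
```

Under that assumption, each storage node was serving reads at roughly 80% of its network line rate on average.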

Features

- High-throughput data access: about 6.6 TiB/s aggregate read throughput on a 180-node storage cluster and 3.66 TiB/min on the GraySort sorting benchmark, making full use of SSD and RDMA network bandwidth.

- Parallel processing: many nodes work together to speed up data processing in large-scale clusters.

- Modern hardware optimization: designed around SSDs and RDMA networks to avoid the performance bottlenecks of traditional file systems.

- Open source and customizable: the full source code is available, so users can modify and extend it as needed.

- Production-ready: validated in production by the DeepSeek team and suitable for direct deployment in real-world workloads.

Usage Guide

Obtaining and Installing 3FS

Deploying 3FS requires some systems background. The guide below walks through installation and basic use. Commands change between releases, so treat the README.md and deployment guide in the repository as the authoritative reference.

1. Get the source code

- Open your browser and visit https://github.com/deepseek-ai/3FS.

- Click the "Code" button on the repository page and choose "Download ZIP", or clone the repository with Git:

git clone https://github.com/deepseek-ai/3FS.git

- If you downloaded the ZIP file, unzip it to a local directory, for example /home/user/3FS.

2. Prepare the environment

3FS relies on modern hardware (SSDs and RDMA networks) and a Linux environment to run. Make sure your system meets the following conditions:

- Operating system: Ubuntu 20.04 or a later Linux distribution is recommended.

- Hardware: a server cluster with NVMe SSDs and an RDMA network such as InfiniBand or RoCE.

- Software: basic build tools such as Git, a C/C++ compiler, Make and CMake. README.md lists the further libraries and components 3FS needs, including FoundationDB, libfuse and a Rust toolchain; install those as documented there. You can install the basic tools with:

sudo apt update && sudo apt install git gcc g++ make cmake
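Before building, it can help to confirm that each node actually has the tools and hardware listed above. The sketch below is not part of 3FS; it only inspects generic Linux locations. RDMA devices (InfiniBand or RoCE) register under /sys/class/infiniband, and NVMe controllers appear under /sys/class/nvme. The function names are hypothetical.

```shell
#!/bin/sh
# Pre-flight check for a prospective 3FS node (a sketch, not part of 3FS).

# Print the name of every command in the argument list that is not on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Print how many entries a directory has, or 0 if it does not exist.
count_devices() {
  if [ -d "$1" ]; then
    ls -A "$1" | wc -l | tr -d ' '
  else
    echo 0
  fi
}

# Summarize the build tools and hardware visible on this machine.
preflight() {
  missing=$(missing_tools git gcc g++ make cmake | tr '\n' ' ')
  if [ -n "$missing" ]; then
    echo "missing tools: $missing"
  fi
  # RDMA devices (InfiniBand or RoCE) register under this sysfs class.
  echo "RDMA devices: $(count_devices /sys/class/infiniband)"
  # NVMe controllers register under this sysfs class.
  echo "NVMe controllers: $(count_devices /sys/class/nvme)"
}
```

To use it, source the file on each node and call preflight. A count of 0 for RDMA devices or NVMe controllers means the node does not meet the hardware requirements above.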

3. Build and install

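- Go to the 3FS directory:

cd /home/user/3FS

- Install any additional dependencies listed in README.md.

- Compile the source code. 3FS is built with CMake rather than a plain Makefile. README.md first initializes the Git submodules (git submodule update --init --recursive). It then configures and builds into a build directory with cmake -S . -B build ... followed by cmake --build build. Copy the full commands, including the compiler options, from README.md for the version you checked out.

- After a successful build, the executables are in the build output directory. The deployment guide in the repository describes how to copy them and the configuration files to each node.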

4. Configure the cluster

- Edit the configuration files in the configs directory to describe the cluster:

- Specify each node's IP address, for example node1=192.168.1.10.

- Configure the SSD storage path, for example storage_path=/dev/nvme0n1.

- Set the RDMA network parameters so that nodes can communicate with each other.

The key=value lines above only illustrate what has to be configured. In the upstream repository, each 3FS service (cluster manager, metadata service, storage service and client) has its own TOML file under configs, with its own option names.

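- After saving the configuration, start the services on each node. Schematically:

3fs --config /path/to/3fs.conf

The upstream project starts each service separately, each with its own configuration file. See the deployment guide in the repository for the exact commands.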
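If node addresses are kept as a simple list in the nodeN=IP form shown above, a small helper can extract them and run the same start command on every node over SSH. This is a sketch under loud assumptions. The nodeN=IP format is this article's illustrative one, not 3FS's real TOML configuration. The helper names are hypothetical, and the command to run is supplied by the caller rather than invented here.

```shell
#!/bin/sh
# Helpers for an illustrative "nodeN=IP" node list (hypothetical format
# from this article's example, NOT 3FS's real TOML configuration).

# Print the IP address from every line of the form "nodeN=IP" in file $1.
# Comment lines and other keys (e.g. storage_path=...) are ignored.
node_ips() {
  sed -n 's/^[[:space:]]*node[0-9][0-9]*[[:space:]]*=[[:space:]]*\([0-9.][0-9.]*\)[[:space:]]*$/\1/p' "$1"
}

# Run the given command on every listed node over SSH, one node at a time.
# Usage: start_all NODE_LIST_FILE COMMAND [ARGS...]
start_all() {
  conf=$1
  shift
  for ip in $(node_ips "$conf"); do
    echo "==> $ip"
    ssh "$ip" "$@" || echo "failed on $ip" >&2
  done
}
```

Starting nodes one at a time keeps the output readable and makes failures easy to attribute. For large clusters, a parallel tool such as pdsh or an orchestration system is the more common choice.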

5. Verify the installation

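- Check the status of the services, schematically:

3fs --status

- If the output reports every node as running (for example, "3FS running on 180 nodes"), the deployment succeeded. The deployment guide in the repository describes the actual administration tool and its commands for listing cluster nodes.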
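On a client machine, you can also confirm from the shell that the mount point used in this guide (/mnt/3fs) is really mounted. The sketch below reads /proc/mounts, so it works on Linux only; the helper names are hypothetical. For a FUSE-based client such as 3FS's, the reported filesystem type starts with "fuse".

```shell
#!/bin/sh
# Check whether a directory is currently a mount point, using /proc/mounts.
# Mount points containing spaces appear escaped (\040) in that file and are
# not handled by this sketch.

# Succeed if $1 appears as a mount point.
is_mounted() {
  awk -v p="$1" '$2 == p { found = 1 } END { exit !found }' /proc/mounts
}

# Print the filesystem type of mount point $1 (the most recent entry wins;
# empty if it is not mounted).
mount_fstype() {
  awk -v p="$1" '$2 == p { t = $3 } END { print t }' /proc/mounts
}
```

For example, is_mounted /mnt/3fs && mount_fstype /mnt/3fs prints the filesystem type once the client is up. If nothing is mounted there, it prints nothing and returns a non-zero status.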

Main Workflows

High-throughput data access

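- Mount the file system: 3FS provides a FUSE client. On each client machine, start the 3FS FUSE client with its configuration file, as described in the deployment guide in the repository. The client mounts 3FS at a local directory such as /mnt/3fs.

- Read data: use ordinary commands such as cat or cp, for example:

cat /mnt/3fs/large_file.txt

3FS serves the file's data from multiple storage nodes in parallel. The 6.6 TiB/s figure is the aggregate read throughput of a large cluster serving hundreds of clients at once, not what a single cat achieves.

- Write data: copy a file into the mount directory:

cp large_file.txt /mnt/3fs/

Written data is likewise spread across storage nodes. The 3.66 TiB/min figure often quoted for 3FS is a GraySort sort throughput, not a single-client write speed.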
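To see what a single client actually gets, you can time one sequential read through the mount point. The sketch below uses hypothetical helper names and needs GNU date for nanosecond timestamps (%N). It measures one client stream, not the cluster's aggregate throughput.

```shell
#!/bin/sh
# Time one sequential read of a file and report MiB/s (single stream only).
# Requires GNU date (for %N nanoseconds).

# Convert a byte count ($1) and an elapsed time in nanoseconds ($2) to MiB/s.
mib_per_s() {
  awk -v b="$1" -v ns="$2" \
    'BEGIN { if (ns <= 0) ns = 1; printf "%.1f\n", (b / 1048576) / (ns / 1e9) }'
}

# Read file $1 to /dev/null and print the observed rate.
read_rate() {
  size=$(wc -c < "$1")
  start=$(date +%s%N)
  cat "$1" > /dev/null
  end=$(date +%s%N)
  echo "$(mib_per_s "$size" $((end - start))) MiB/s"
}
```

For example, read_rate /mnt/3fs/large_file.txt prints a single rate. A repeated read of the same file may be served from the client's page cache, so use a file larger than the client's memory, or a fresh file, for a meaningful number.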

Parallel processing

- In a multi-node environment, 3FS automatically spreads file data across storage nodes. Users do not need to intervene manually; they only need applications that issue I/O in parallel (e.g. Hadoop or MPI programs).

- Example parallel performance test:

dd if=/dev/zero of=/mnt/3fs/testfile bs=1G count=10

Check whether the measured throughput is close to what you expect. A single dd is one sequential stream from one client, so it shows per-client bandwidth. Approaching cluster-level throughput requires many concurrent writers on many clients.
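To load the file system with more than one stream, the dd test can be run as several concurrent writers, each writing its own file. The sketch below does this on one client and reports the combined rate; the function name is hypothetical. It requires GNU dd (status=none, conv=fsync) and GNU date (%N). To approach cluster-scale numbers, run it on many clients at once.

```shell
#!/bin/sh
# Aggregate write test: start N concurrent dd writers, each writing its own
# file of SIZE_MIB MiB into DIR, and report the combined rate.
# Usage: parallel_write DIR N SIZE_MIB

parallel_write() {
  dir=$1 n=$2 size_mib=$3
  start=$(date +%s%N)
  i=0
  while [ "$i" -lt "$n" ]; do
    # conv=fsync makes dd flush its data before exiting, so buffered
    # writes are included in the measured time.
    dd if=/dev/zero of="$dir/testfile.$i" bs=1M count="$size_mib" \
      conv=fsync status=none &
    i=$((i + 1))
  done
  wait  # block until every background writer has finished
  end=$(date +%s%N)
  total=$((n * size_mib * 1048576))
  awk -v b="$total" -v ns="$((end - start))" 'BEGIN {
    if (ns <= 0) ns = 1
    printf "%.0f bytes in %.3f s: %.1f MiB/s\n", b, ns / 1e9, b / 1048576 / (ns / 1e9)
  }'
}
```

For example, parallel_write /mnt/3fs 8 10240 writes eight 10 GiB files, the same amount per writer as the single dd above (bs=1G count=10). Delete the testfile.* files afterwards.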

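Custom development

- Modify the source code: edit the C++ sources in the src directory, for example to adjust buffer sizes for a specific workload.

- Rebuild: rerun the build from README.md. For the upstream CMake build with an already configured build directory, this is:

cmake --build build

- Deploy the modified version: copy the rebuilt binaries to your nodes and restart the affected services, following the deployment guide.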

Caveats

- Hardware compatibility: make sure every node supports RDMA and NVMe; otherwise performance may degrade.

- Network configuration: if traffic between nodes passes through a firewall, open the ports the RDMA fabric uses (for RoCEv2, UDP port 4791) to avoid communication failures.

- Documentation: if you run into problems during installation, consult README.md on the GitHub page or open an Issue for help.

With these steps, users can build and start using 3FS and its high-throughput data access. For both AI training and big data processing, 3FS provides a strong storage foundation.

Looking back at this week's releases, DeepSeek has open-sourced a complete infrastructure stack for large models:

- Day 1 - FlashMLA: an efficient MLA decoding kernel optimized for variable-length sequences

- Day 2 - DeepEP: the first open-source expert-parallel communication library for MoE model training and inference

- Day 3 - DeepGEMM: an FP8 GEMM library supporting both dense and MoE computations

- Day 4 - Optimized parallelism strategies: the DualPipe bidirectional pipeline-parallel algorithm and the EPLB expert-parallel load balancer

- Day 5 - 3FS and Smallpond: high-performance data storage and processing infrastructure

© Copyright notice

Article copyright AI Sharing Circle. Please do not reproduce without permission.
