ByteDance and collaborators release 1.58-bit FLUX, the first FLUX model with 99.5% of its parameters quantized to 1.58 bits, performing comparably to full-precision FLUX

Highlight Analysis

- 1.58-bit FLUX is the first quantized model to reduce 99.5% of FLUX's vision Transformer parameters (11.9 billion in total) to 1.58 bits. It does so without relying on image data and dramatically reduces storage requirements.

- Developed an efficient linear kernel optimized for 1.58-bit computation, achieving significant memory reduction and inference acceleration.

- Demonstrated that 1.58-bit FLUX performs comparably to the full-precision FLUX model on challenging T2I benchmarks.

Quick summary

Problems solved

- Current text-to-image (T2I) models, such as DALLE 3 and Stable Diffusion 3, have very large parameter counts and high inference memory requirements, which makes them difficult to deploy on resource-limited devices (e.g., mobile devices).

- This paper focuses on the feasibility of very low-bit quantization (1.58-bit) in T2I models to reduce storage and memory requirements while improving inference efficiency.

Proposed approach

- The FLUX.1-dev model was chosen as the quantization target. Its weights were compressed to 1.58 bits (with values restricted to {-1, 0, +1}) by a post-training quantization method that requires no access to image data.

- A dedicated kernel optimized for low-bit operations was developed to further improve inference efficiency.
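The idea of restricting weights to {-1, 0, +1} can be sketched in a few lines. The source only says the method is "similar to BitNet b1.58", so this minimal sketch assumes the absmean scheme from BitNet b1.58 (divide by the mean absolute weight, then round and clip). It is not the authors' actual procedure, and the function names `ternarize` and `dequantize` are hypothetical. The name "1.58-bit" comes from log2(3) ≈ 1.585, the information content of a three-valued weight.

```python
import math
import numpy as np

def ternarize(w: np.ndarray, eps: float = 1e-8):
    """Quantize a weight matrix to {-1, 0, +1} plus one per-tensor scale.

    ASSUMPTION: absmean scaling as in BitNet b1.58; the exact quantizer
    used for 1.58-bit FLUX is not described in this article.
    """
    scale = float(np.abs(w).mean()) + eps
    w_t = np.clip(np.rint(w / scale), -1, 1).astype(np.int8)
    return w_t, scale

def dequantize(w_t: np.ndarray, scale: float) -> np.ndarray:
    """Approximate reconstruction of the original weights."""
    return w_t.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.normal(size=(4, 8)).astype(np.float32)
w_t, scale = ternarize(w)

print(np.unique(w_t))   # only values from {-1, 0, 1} can appear
print(math.log2(3))     # bits of information per ternary weight
```

A single per-tensor scale keeps the sketch short; finer-grained scales (per channel or per group) are a common alternative and would be a design choice, not something the source specifies.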

Techniques applied

- 1.58-bit weight quantization: The model's linear-layer weights are compressed to 1.58 bits using a method similar to BitNet b1.58, and the quantized weights are stored as 2-bit signed integers.

- Self-supervised quantization: The method relies entirely on self-supervision from the FLUX.1-dev model itself and needs neither mixed-precision schemes nor additional training data.

- Custom kernel: An inference kernel optimized for low-bit operations reduces memory usage and inference latency.
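Storing ternary weights as 2-bit signed integers means four weights fit in one byte. The sketch below shows one possible packing layout using 2-bit two's-complement codes. The bit order within each byte and the helper names `pack_ternary` and `unpack_ternary` are assumptions for illustration, not the layout used by the released kernel.

```python
import numpy as np

SHIFTS = np.array([0, 2, 4, 6], dtype=np.uint8)  # bit offset of each weight in a byte

def pack_ternary(w_t: np.ndarray) -> np.ndarray:
    """Pack int8 weights in {-1, 0, +1} into bytes, 4 weights per byte.

    Each weight becomes a 2-bit two's-complement code:
    0 -> 0b00, +1 -> 0b01, -1 -> 0b11.
    """
    flat = w_t.astype(np.int8).ravel()
    assert flat.size % 4 == 0, "pad to a multiple of 4 before packing"
    codes = (flat & 0b11).astype(np.uint8).reshape(-1, 4)
    return np.bitwise_or.reduce(codes << SHIFTS, axis=1).astype(np.uint8)

def unpack_ternary(packed: np.ndarray) -> np.ndarray:
    """Inverse of pack_ternary: recover a flat int8 array of {-1, 0, +1}."""
    codes = (packed[:, None] >> SHIFTS) & 0b11
    # Sign-extend the 2-bit code: (c XOR 2) - 2 maps 0, 1, 3 to 0, +1, -1.
    return ((codes ^ 0b10).astype(np.int8) - 2).ravel()

w_t = np.array([-1, 0, 1, 1, 0, 0, -1, 1], dtype=np.int8)
packed = pack_ternary(w_t)
restored = unpack_ternary(packed)

print(packed.nbytes, "bytes for", w_t.size, "weights")
print(np.array_equal(restored, w_t))
```

The code 0b10 is unused in this layout, which is the gap between the 2 bits actually stored and the 1.58 bits of information each weight carries.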

Impact

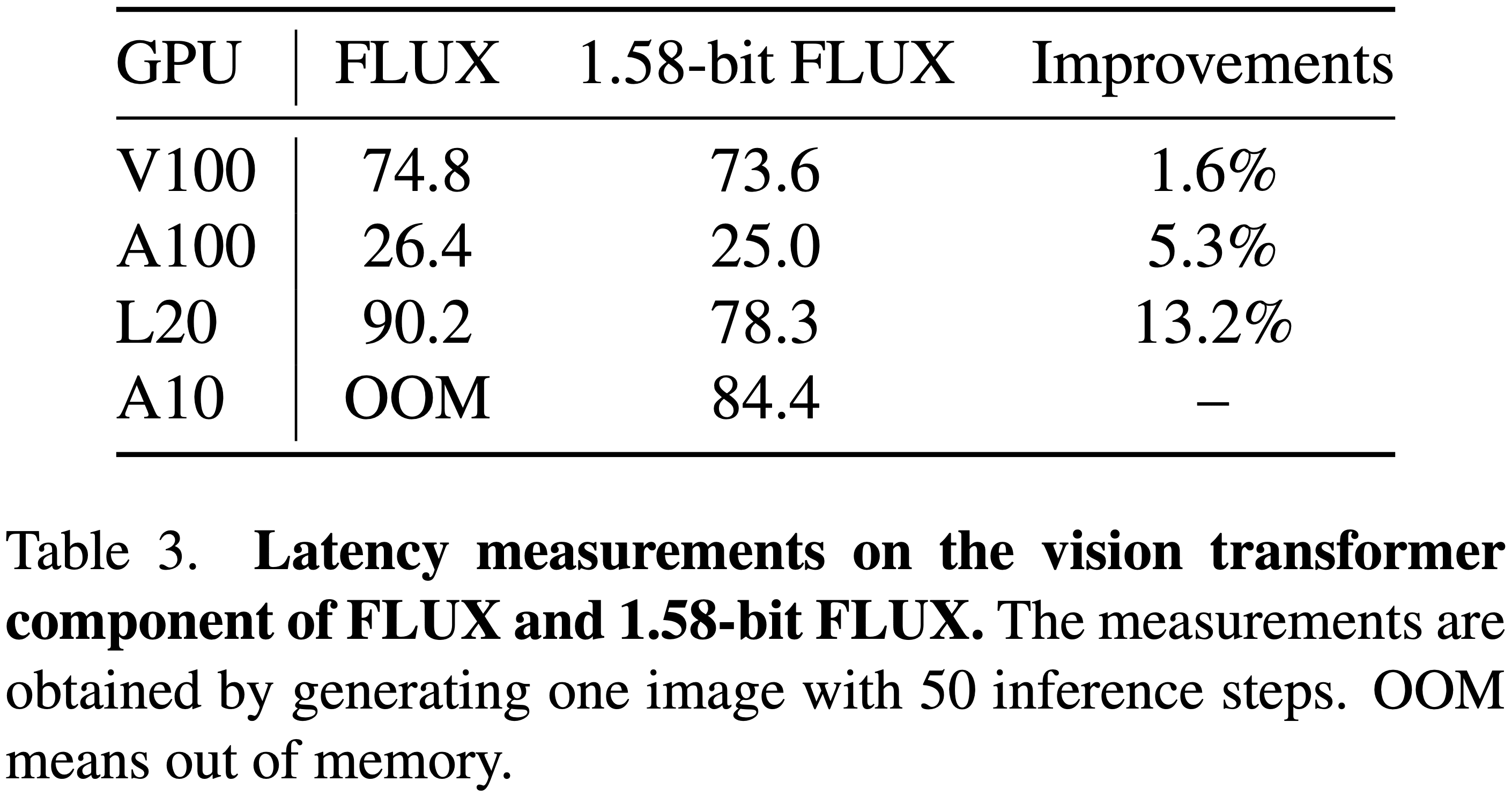

- Storage efficiency: Model storage requirements reduced by 7.7×, compressed from 16-bit to 2-bit.

- Inference efficiency: Memory usage during inference is reduced by 5.1×, and inference latency also improves.

- Generation quality: On the GenEval and T2I CompBench benchmarks, generation quality is essentially on par with full-precision FLUX, which validates the effectiveness and practicality of the approach.
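As a back-of-the-envelope check on the 7.7× storage figure: going from 16 bits to 2 bits would give 8× if every parameter were quantized. If 99.5% of the parameters are stored at 2 bits and the remaining 0.5% are assumed to stay at 16 bits (an assumption; the article does not state the precision of the unquantized layers), the ratio lands close to the reported value. The sketch ignores scale factors and other metadata.

```python
total_params = 11.9e9        # vision Transformer parameters (from the article)
quantized_fraction = 0.995   # share of parameters quantized (from the article)
full_bits = 16               # original storage width per parameter
packed_bits = 2              # storage width of each ternary weight
residual_bits = 16           # ASSUMPTION: the unquantized 0.5% stay at 16-bit

avg_bits = (quantized_fraction * packed_bits
            + (1 - quantized_fraction) * residual_bits)
ratio = full_bits / avg_bits

full_gb = total_params * full_bits / 8 / 1e9
packed_gb = total_params * avg_bits / 8 / 1e9

print(f"average bits per parameter: {avg_bits:.2f}")
print(f"compression ratio: {ratio:.2f}x")
print(f"estimated size: {full_gb:.1f} GB -> {packed_gb:.2f} GB")
```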

Results

Setup

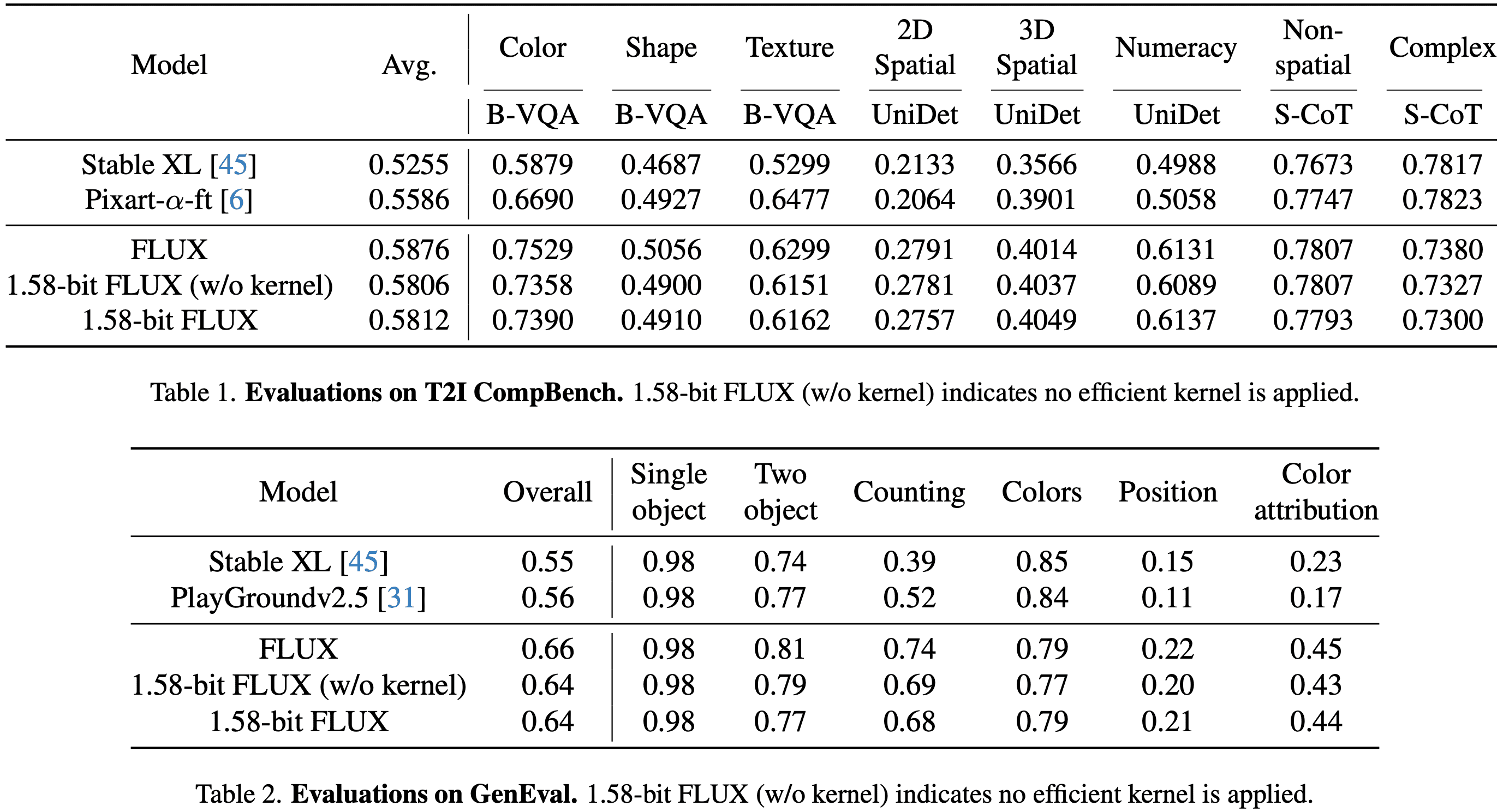

Quantization: Quantization used a calibration dataset made up of prompts from the Parti-1k dataset and the T2I CompBench training set, 7,232 prompts in total. The entire process is independent of image data, and no additional datasets are required. All linear-layer weights of FluxTransformerBlock and FluxSingleTransformerBlock in FLUX are compressed to 1.58 bits; these weights account for 99.5% of the model's total parameters.

Evaluation: FLUX and 1.58-bit FLUX were evaluated on the GenEval dataset and the T2I CompBench validation set, following the official image generation pipeline.

- GenEval dataset: 553 prompts, with 4 images generated per prompt.

- T2I CompBench validation set: 8 categories with 300 prompts per category and 10 images generated per prompt, for a total of 24,000 images.

- All images are generated at 1024 × 1024 resolution for FLUX and 1.58-bit FLUX.
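The evaluation workload implied by these settings is simple arithmetic. The snippet below only restates the counts from the list above; the variable names are illustrative.

```python
# GenEval: 553 prompts, 4 images per prompt
geneval_prompts, geneval_images_per_prompt = 553, 4

# T2I CompBench validation set: 8 categories x 300 prompts, 10 images per prompt
categories, prompts_per_category, images_per_prompt = 8, 300, 10

geneval_images = geneval_prompts * geneval_images_per_prompt
compbench_images = categories * prompts_per_category * images_per_prompt

print(geneval_images)    # 2212
print(compbench_images)  # 24000
```

Each model (FLUX and 1.58-bit FLUX) generates this many images, all at 1024 × 1024.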

Findings

Performance: 1.58-bit FLUX performs comparably to full-precision FLUX on the T2I CompBench and GenEval benchmarks, as shown in Tables 1 and 2. Performance changes only minimally when the custom linear kernel is applied, which further supports the correctness of the implementation.

Efficiency: As shown in Figure 2, 1.58-bit FLUX achieves significant reductions in model storage and inference memory. As for inference latency (Table 3), the improvement is most pronounced on lower-performance but deployment-friendly GPUs such as the L20 and A10.

Conclusion and discussion

This paper presents 1.58-bit FLUX, in which 99.5% of the Transformer parameters are quantized to 1.58 bits. Combined with a custom computation kernel, this yields the following improvements:

- Reduced storage requirements: Model storage shrinks by a factor of 7.7.

- Reduced inference memory: Inference memory usage drops by more than 5.1×.

Despite this level of compression, 1.58-bit FLUX performs comparably to the full-precision model on T2I benchmarks while maintaining high visual quality. The authors hope that 1.58-bit FLUX will encourage the community to develop models better suited to mobile devices.

Current limitations

Limitations on speed improvements

- Although 1.58-bit FLUX reduces model size and memory consumption, its latency improvement is limited because activations are not quantized and more advanced kernel optimizations are still missing.

- Given the results so far, the authors hope the community will be encouraged to develop custom kernel implementations for 1.58-bit models.
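To see why weight-only quantization can save memory without cutting latency by a similar factor, consider a reference linear layer with ternary weights. In this minimal NumPy sketch (not the authors' kernel; `ternary_linear` and the per-tensor `scale` are assumptions), the weights are ternary but the activations `x` remain floating point, so the work per output is still a floating-point accumulation over all input features.

```python
import numpy as np

def ternary_linear(x: np.ndarray, w_t: np.ndarray, scale: float) -> np.ndarray:
    """Reference computation of x @ (scale * w_t).T with w_t in {-1, 0, +1}.

    x:   (batch, in_features) float32 activations (NOT quantized)
    w_t: (out_features, in_features) int8 ternary weights
    Each output is the sum of activations where the weight is +1, minus
    the sum where it is -1, multiplied once by the scale.
    """
    plus = (w_t == 1).astype(x.dtype)    # selects activations added
    minus = (w_t == -1).astype(x.dtype)  # selects activations subtracted
    return scale * (x @ plus.T - x @ minus.T)

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 8)).astype(np.float32)
w_t = rng.integers(-1, 2, size=(4, 8)).astype(np.int8)
scale = 0.05  # hypothetical per-tensor scale

y = ternary_linear(x, w_t, scale)
y_ref = x @ (scale * w_t.astype(np.float32)).T  # dense dequantized reference

print(np.allclose(y, y_ref, atol=1e-5))
```

A production kernel would operate directly on packed 2-bit weights on the GPU; this sketch only illustrates the arithmetic, not the performance characteristics.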

Limitations on visual quality

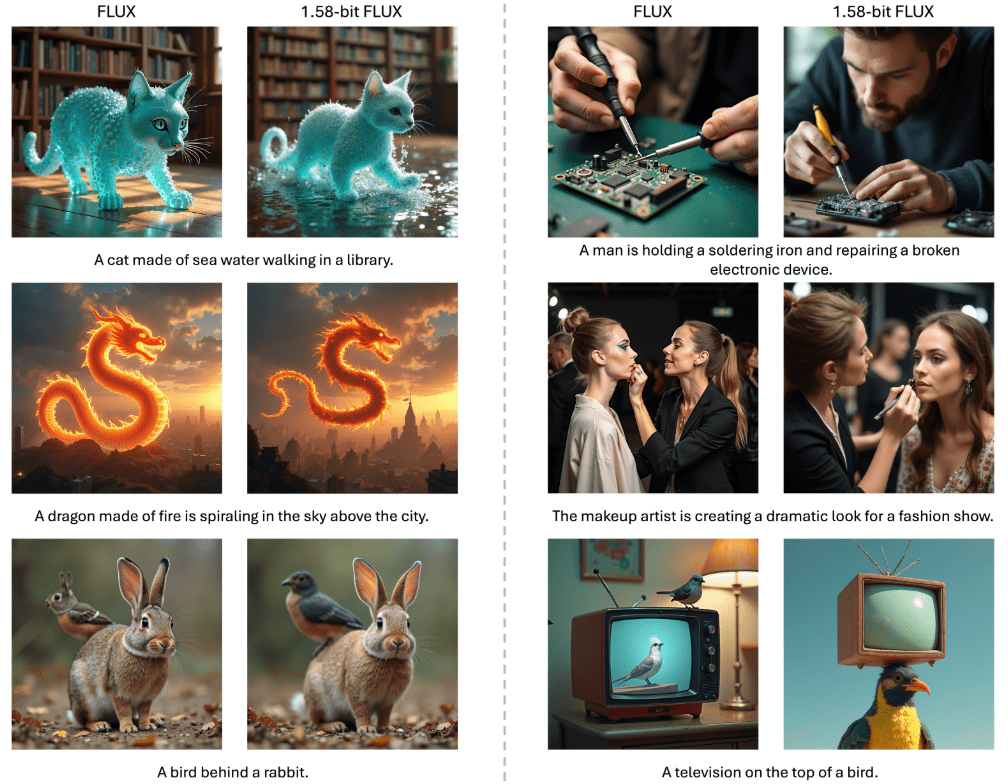

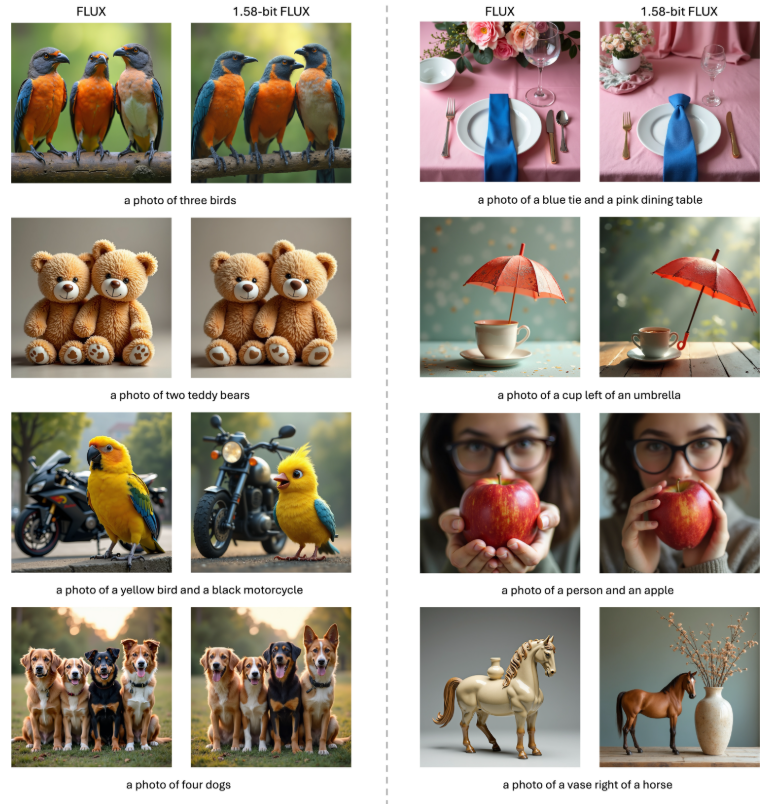

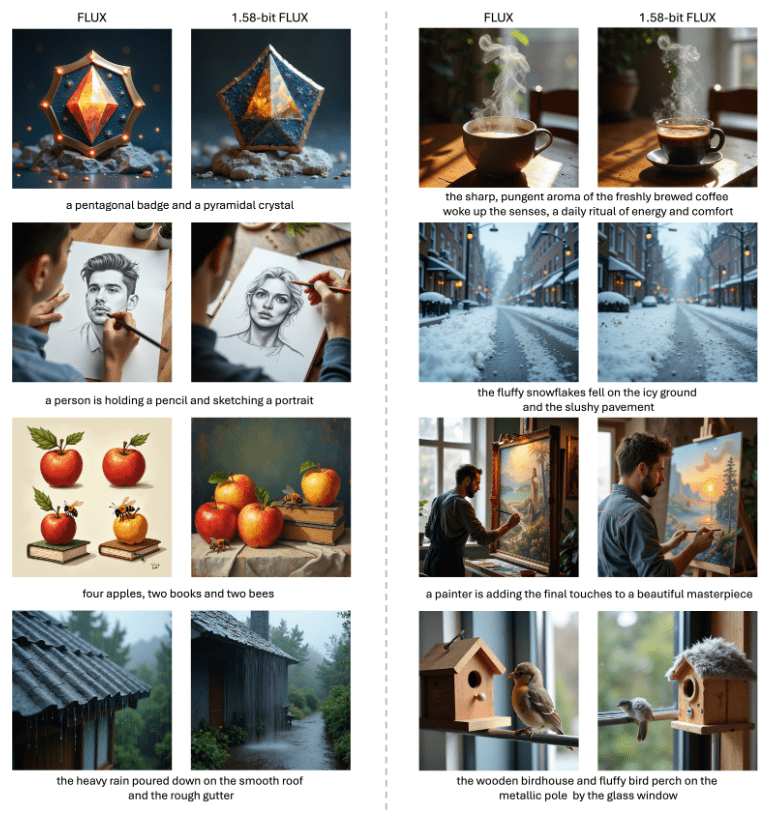

- As shown in Figures 1, 3, and 4, 1.58-bit FLUX produces vivid, realistic images that closely follow the text prompts, but it still lags behind the original FLUX model when rendering details at ultra-high resolution.

- The authors plan to close this gap in future work.

- Visual comparison of FLUX with 1.58-bit FLUX: 1.58-bit FLUX demonstrates generation quality comparable to FLUX under 1.58-bit quantization, where 99.5% of the vision Transformer's 11.9B parameters are constrained to +1, -1, or 0. For consistency, all images in each comparison are generated from the same latent noise input. 1.58-bit FLUX uses a custom 1.58-bit kernel.

Inference code and weights are being released at: https://github.com/Chenglin-Yang/1.58bit.flux

