[Repost] Disassembling a popular browser automation agent from scratch: learn to design an autonomous decision-making Agent in 4 steps

Until now, most of the agents we built followed a fixed workflow. Very few followed the framework below, which produces agents capable of autonomous decision-making and self-directed tool use.

![[Repost] Disassembling a popular browser automation agent from scratch: learn to design an autonomous decision-making Agent in 4 steps](https://aisharenet.com/wp-content/uploads/2025/01/e0a98a1365d61a3.png)

Two days ago, I shared browser-use, an open-source Agent that uses a browser to automate simple tasks.

![[Repost] Disassembling a popular browser automation agent from scratch: learn to design an autonomous decision-making Agent in 4 steps](https://aisharenet.com/wp-content/uploads/2025/01/02d659050056867.gif)

The demo above shows it automatically searching for '渡码' and opening my blog. browser-use is an open-source Agent with 15k stars on GitHub, and it can be installed locally with a single command, so the barrier to entry is very low.

![[Repost] Disassembling a popular browser automation agent from scratch: learn to design an autonomous decision-making Agent in 4 steps](https://aisharenet.com/wp-content/uploads/2025/01/dab531e141537b6.png)

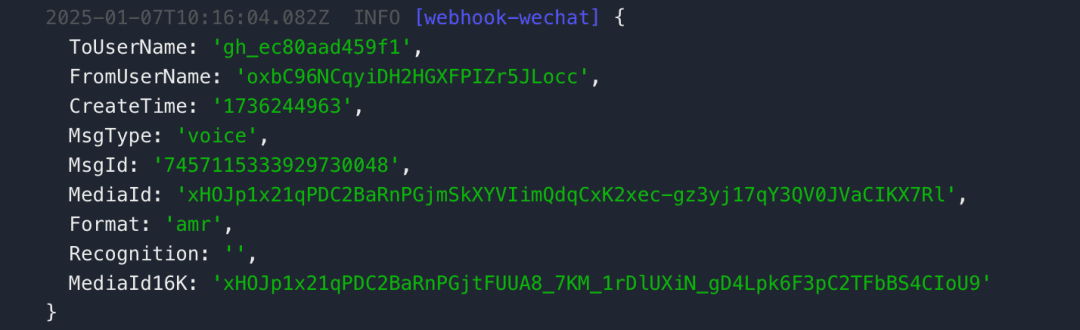

Since sharing it last time, occupational habit has had me itching to take it apart and see how it works, hence today's post. browser-use implements all four Agent modules from the first diagram above (Memory, Planning, Tools, and Action) with a single prompt, which you can find at line 130 of the source file prompts.py.

![[Repost] Disassembling a popular browser automation agent from scratch: learn to design an autonomous decision-making Agent in 4 steps](https://aisharenet.com/wp-content/uploads/2025/01/4579dd0a3fb310b.png)

The prompt is a bit long, but don't worry: once it is broken down, you will find that it maps clearly onto the four modules above.

![[Repost] Disassembling a popular browser automation agent from scratch: learn to design an autonomous decision-making Agent in 4 steps](https://aisharenet.com/wp-content/uploads/2025/01/2b12d4eb06e20ae.png)

The prompt above defines the four modules, so let's talk about them one by one.

Memory - Records the tasks that have been completed and those to be performed next.

"memory": "Description of what has been done and what you need to remember until the end of the task",

I logged the execution of the 'open blog' case above; here is an actual Memory example:

'memory': "Baidu is open, ready to search for '渡码'."

Planning - Judges, based on the current page, whether the previous step succeeded, and generates the task to execute next.

"evaluation_previous_goal": "Success|Failed|Unknown - Analyze the current elements and the image to check if the previous goals/actions are successful like intended by the task. Ignore the action result. The website is the ground truth. Also mention if something unexpected happened like new suggestions in an input field. Shortly state why/why not",
"next_goal": "What needs to be done with the next actions"

There are two parts here. The first, evaluation_previous_goal, determines whether the previous task succeeded. What that previous task was is recorded in Memory, which is why the first diagram has a dotted line from Memory to Planning.

The status of the previous task drives the plan for the next one: if the previous task failed, it is retried; if it succeeded, a new task is planned.
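In browser-use the model makes this retry-or-advance decision itself when it writes next_goal. As an illustration only, the rule can be spelled out in code: read the leading Success/Failed/Unknown word of evaluation_previous_goal and pick the next goal accordingly. The names `Status`, `parse_status` and `choose_next_goal` are hypothetical, and treating Unknown like Success is an assumption of this sketch.

```python
import re
from enum import Enum


class Status(Enum):
    SUCCESS = "success"
    FAILED = "failed"
    UNKNOWN = "unknown"


def parse_status(evaluation: str) -> Status:
    """Read the leading Success|Failed|Unknown word of evaluation_previous_goal."""
    match = re.match(r"\s*([A-Za-z]+)", evaluation)
    word = match.group(1).lower() if match else ""
    for status in Status:
        if word == status.value:
            return status
    # Anything unrecognised is treated as Unknown.
    return Status.UNKNOWN


def choose_next_goal(evaluation: str, previous_goal: str, proposed_goal: str) -> str:
    """Retry the previous goal after a failure; otherwise follow the newly proposed goal."""
    if parse_status(evaluation) is Status.FAILED:
        return previous_goal
    # Success and Unknown both move on to the goal the model proposed (sketch assumption).
    return proposed_goal


print(choose_next_goal("Success - Baidu was successfully opened in a new tab.",
                       "Open Baidu in a new tab.",
                       "Input '渡码' into the search box and submit the search."))
```

A stricter design could also re-check the page on Unknown before moving on; the sketch keeps the simplest rule.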

Actual example:

'evaluation_previous_goal': 'Success - Baidu was successfully opened in a new tab.',

'next_goal': "Input '渡码' into the search box and submit the search."
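To make the three fields concrete, here is a minimal sketch that parses a model reply into a small dataclass. `AgentState` and `parse_state` are hypothetical names, and the reply layout (a `current_state` object holding the three fields, next to an `action` list) is an assumption modelled on the examples above, not a copy of browser-use's own classes.

```python
import json
from dataclasses import dataclass


@dataclass
class AgentState:
    """Hypothetical container for the three fields the prompt asks the model to fill."""
    evaluation_previous_goal: str
    memory: str
    next_goal: str


def parse_state(reply: str) -> AgentState:
    """Parse the model's JSON reply and extract the current_state object."""
    state = json.loads(reply)["current_state"]
    return AgentState(
        evaluation_previous_goal=state["evaluation_previous_goal"],
        memory=state["memory"],
        next_goal=state["next_goal"],
    )


# A reply assembled from the example fields quoted in this post.
reply = json.dumps({
    "current_state": {
        "evaluation_previous_goal": "Success - Baidu was successfully opened in a new tab.",
        "memory": "Baidu is open, ready to search for '渡码'.",
        "next_goal": "Input '渡码' into the search box and submit the search.",
    },
    "action": [],
})

state = parse_state(reply)
print(state.next_goal)
```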

Tools - browser-use defines 15 tools that can be used to manipulate pages.

![[Repost] Disassembling a popular browser automation agent from scratch: learn to design an autonomous decision-making Agent in 4 steps](https://aisharenet.com/wp-content/uploads/2025/01/04911fccff996cc.png)

The tool definitions are placed in the prompt so the LLM can choose among them. Each tool is backed by code that performs one specific task.
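One way to keep "tool code" and "tool definitions in the prompt" in sync is a registry: each tool function is registered together with a description, and the prompt section is generated from that registry. browser-use has its own registry; the sketch below is a simplified, hypothetical stand-in, not its API. The tool names `input_text` and `click_element` are taken from the action example later in this post; their bodies are placeholders.

```python
import inspect
from typing import Callable, Dict, Tuple

# Hypothetical registry: tool name -> (description, handler function).
TOOLS: Dict[str, Tuple[str, Callable[..., str]]] = {}


def tool(description: str) -> Callable[[Callable[..., str]], Callable[..., str]]:
    """Decorator that registers a function as a tool and keeps its description."""
    def decorator(func: Callable[..., str]) -> Callable[..., str]:
        TOOLS[func.__name__] = (description, func)
        return func
    return decorator


@tool("Type text into the element with the given index")
def input_text(index: int, text: str) -> str:
    # Placeholder: a real agent would drive the browser here.
    return f"typed {text!r} into element {index}"


@tool("Click the element with the given index")
def click_element(index: int) -> str:
    # Placeholder: a real agent would drive the browser here.
    return f"clicked element {index}"


def render_tool_section() -> str:
    """Build the prompt section that tells the model which tools exist."""
    lines = []
    for name, (description, func) in TOOLS.items():
        # Show parameters only; the return annotation is noise for the model.
        sig = inspect.signature(func).replace(return_annotation=inspect.Signature.empty)
        lines.append(f"- {name}{sig}: {description}")
    return "Available actions:\n" + "\n".join(lines)


print(render_tool_section())
```

Because the description lives next to the function, adding a tool automatically updates what the model sees.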

![[Repost] Disassembling a popular browser automation agent from scratch: learn to design an autonomous decision-making Agent in 4 steps](https://aisharenet.com/wp-content/uploads/2025/01/aa2d28b7fb4acea.png)

Action - Generates a series of concrete actions based on Planning. Here is a direct example:

'action': [{'input_text': {'index': 12, 'text': '渡码'}}, {'click_element': {'index': 13}}]

This example contains two actions: first, enter '渡码' into the page element labeled 12 (the search box); second, click the page element labeled 13 (the search button) to run the search.

In other words, every action in browser-use is carried out by one of the Tools.
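Executing such an action list amounts to a dispatch loop: each entry maps one tool name to its keyword arguments, and the agent looks up the matching handler and calls it. This is a hypothetical sketch; the handler bodies are placeholders that only return a description instead of touching a browser.

```python
from typing import Any, Callable, Dict, List


def input_text(index: int, text: str) -> str:
    # Placeholder: a real agent would type into the browser element here.
    return f"typed {text!r} into element {index}"


def click_element(index: int) -> str:
    # Placeholder: a real agent would click the browser element here.
    return f"clicked element {index}"


HANDLERS: Dict[str, Callable[..., str]] = {
    "input_text": input_text,
    "click_element": click_element,
}


def run_actions(actions: List[Dict[str, Dict[str, Any]]]) -> List[str]:
    """Execute each {tool_name: params} entry in order and collect the results."""
    results = []
    for action in actions:
        # Each entry holds exactly one tool name mapped to its keyword arguments.
        [(name, params)] = action.items()
        results.append(HANDLERS[name](**params))
    return results


actions = [{'input_text': {'index': 12, 'text': '渡码'}}, {'click_element': {'index': 13}}]
for line in run_actions(actions):
    print(line)
```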

Some of you may be wondering where these index labels come from.

browser-use analyzes the page's HTML, identifies its components (elements), and assigns an index label to each one. ![[Repost] Disassembling a popular browser automation agent from scratch: learn to design an autonomous decision-making Agent in 4 steps](https://aisharenet.com/wp-content/uploads/2025/01/675833e9da90882.png)
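The core idea of assigning index labels can be sketched with Python's standard-library `html.parser`. Note the assumptions: browser-use does this on the live, rendered page inside the browser, whereas this sketch only parses static HTML, and the set of "interactive" tags below is our own simplified choice.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional

# Assumption: which tags count as "interactive" is a simplified choice for this sketch.
INTERACTIVE_TAGS = {"a", "button", "input", "select", "textarea"}


class ElementIndexer(HTMLParser):
    """Assign a sequential index to every interactive element in static HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.elements: List[Dict] = []
        self._open: Optional[Dict] = None  # element whose text is being collected

    def handle_starttag(self, tag, attrs):
        if tag in INTERACTIVE_TAGS:
            element = {"index": len(self.elements), "tag": tag,
                       "attrs": dict(attrs), "text": ""}
            self.elements.append(element)
            # <input> has no closing tag, so only collect text for container tags.
            self._open = element if tag != "input" else None

    def handle_data(self, data):
        if self._open is not None:
            self._open["text"] += data.strip()

    def handle_endtag(self, tag):
        if self._open is not None and tag == self._open["tag"]:
            self._open = None


html = ('<div><a name="tj_settingicon">set up</a>'
        '<input type="text"><button>Search</button></div>')
indexer = ElementIndexer()
indexer.feed(html)
indexer.close()
for el in indexer.elements:
    print(el["index"], el["tag"], el["text"])
```

Nested interactive elements are not handled here; a real implementation also has to consider visibility and layout, which static HTML cannot tell you.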

On this page you can see colored boxes with labels on them; these are the elements browser-use has recognized.

Eventually this information is converted into text in the format `1[:]<a name="tj_settingicon">set up</a>`, appended to the prompt, and fed into the LLM.
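Turning indexed elements into prompt text is then a simple string-formatting step. The sketch below reproduces the shape of the example line; the exact layout browser-use emits may differ between versions, and the second element's attributes are made up for illustration.

```python
from html import escape
from typing import Dict, List


def format_element(index: int, tag: str, attrs: Dict[str, str], text: str) -> str:
    """Render one element as an index[:]<tag attr="...">text</tag> line."""
    attr_str = "".join(f' {k}="{escape(v, quote=True)}"' for k, v in attrs.items())
    return f"{index}[:]<{tag}{attr_str}>{text}</{tag}>"


def format_page(elements: List[Dict]) -> str:
    """Join all element lines into the block that is appended to the prompt."""
    return "\n".join(
        format_element(e["index"], e["tag"], e["attrs"], e["text"]) for e in elements
    )


elements = [
    {"index": 1, "tag": "a", "attrs": {"name": "tj_settingicon"}, "text": "set up"},
    {"index": 12, "tag": "input", "attrs": {"type": "text"}, "text": ""},  # illustrative
]
print(format_page(elements))
```

One short line per element is what lets a whole page fit into a few lines of prompt text.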

![[Repost] Disassembling a popular browser automation agent from scratch: learn to design an autonomous decision-making Agent in 4 steps](https://aisharenet.com/wp-content/uploads/2025/01/ab3a29c23668d93.png)

In this way the LLM knows what the page looks like and can plan the task.

I think this idea is well worth learning from. Precisely because LLMs understand text so well, a complex page can be replaced with just a few lines of text, which greatly simplifies something that looks complicated.
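Putting the modules together gives an observe, plan, act loop. The sketch below is hypothetical throughout: `fake_llm` returns canned replies instead of calling a real model, the page snapshot is hard-coded rather than re-read after each step, and the `done` tool that ends the loop is an assumption of this sketch.

```python
import json
from typing import Callable, Dict, List

# Hypothetical page snapshot in the index[:]<tag>text</tag> style described above.
PAGE = "12[:]<input></input>\n13[:]<button>Search</button>"


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call that returns canned JSON replies."""
    if "clicked element 13" not in prompt:
        reply = {
            "current_state": {
                "evaluation_previous_goal": "Unknown - this is the first step",
                "memory": "Baidu is open, ready to search for '渡码'.",
                "next_goal": "Input '渡码' into the search box and submit the search.",
            },
            "action": [{"input_text": {"index": 12, "text": "渡码"}},
                       {"click_element": {"index": 13}}],
        }
    else:
        reply = {
            "current_state": {
                "evaluation_previous_goal": "Success - the search was submitted",
                "memory": "Searched for '渡码'.",
                "next_goal": "Report that the task is finished.",
            },
            "action": [{"done": {"text": "Search submitted"}}],
        }
    return json.dumps(reply)


def build_prompt(task: str, page: str, memory: str, history: List[str]) -> str:
    """Observe: combine the task, page elements, memory and previous results."""
    return (f"Task: {task}\nPage elements:\n{page}\nMemory: {memory}\n"
            "Previous results:\n" + "\n".join(history))


TOOLS: Dict[str, Callable[..., str]] = {
    "input_text": lambda index, text: f"typed {text!r} into element {index}",
    "click_element": lambda index: f"clicked element {index}",
    "done": lambda text: f"done: {text}",
}


def run_agent(task: str, llm: Callable[[str], str], max_steps: int = 5) -> List[str]:
    """Loop observe -> plan -> act until the model calls done or steps run out."""
    memory: str = ""
    history: List[str] = []
    for _ in range(max_steps):
        reply = json.loads(llm(build_prompt(task, PAGE, memory, history)))  # plan
        memory = reply["current_state"]["memory"]  # carried into the next prompt
        for action in reply["action"]:  # act
            [(name, params)] = action.items()
            history.append(TOOLS[name](**params))
            if name == "done":
                return history
    return history


print(run_agent("Search for '渡码' on Baidu", fake_llm))
```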

browser-use has a few other implementation details worth studying. For example, some Actions change the page when executed; when that happens, the remaining actions are interrupted and a new Action is generated.
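The interruption rule can be sketched as follows: run the planned actions one by one, and as soon as the page changes, stop and hand control back to the model, because the remaining actions were planned against the old page. How browser-use actually detects a change is in its source; here `FakePage` and its version counter are hypothetical stand-ins.

```python
from typing import List, Tuple


class FakePage:
    """Toy page with a version counter that increases whenever the page changes."""

    def __init__(self) -> None:
        self.version = 0

    def click(self, index: int) -> None:
        # Hypothetical rule: element 13 is a search button that loads a new page.
        if index == 13:
            self.version += 1

    def type_text(self, index: int, text: str) -> None:
        # Typing does not change the page in this toy model.
        pass


def execute_until_page_changes(page: FakePage, actions: List[Tuple]) -> Tuple[List[str], bool]:
    """Run actions in order; stop early and report True if the page changed."""
    executed: List[str] = []
    for name, *args in actions:
        before = page.version
        if name == "click_element":
            page.click(*args)
        elif name == "input_text":
            page.type_text(*args)
        executed.append(name)
        if page.version != before:
            # Later actions were planned for the old page, so hand back to the model.
            return executed, True
    return executed, False


actions = [("input_text", 12, "渡码"), ("click_element", 13), ("click_element", 20)]
print(execute_until_page_changes(FakePage(), actions))
```

Here the third action is never executed: after the click on element 13 the model would re-plan against the new page.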

Another is support for vision models: sending a screenshot of the entire web page helps the model understand the page better and therefore plan the task better. If you're interested, download the source code and dig further.
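Sending a screenshot alongside the text usually means embedding the image in the chat message. This sketch assumes the OpenAI Chat Completions message format (a `content` list mixing `text` and `image_url` parts, with the image as a base64 data URL). browser-use builds its messages through its own LLM integration, so this is an illustration, not its code; `build_vision_message` is a hypothetical name.

```python
import base64
from typing import Any, Dict


def build_vision_message(text: str, png_bytes: bytes) -> Dict[str, Any]:
    """Pack a text prompt and a PNG screenshot into one OpenAI-style user message."""
    encoded = base64.b64encode(png_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": text},
            # The screenshot travels inline as a base64 data URL.
            {"type": "image_url", "image_url": {"url": f"data:image/png;base64,{encoded}"}},
        ],
    }


message = build_vision_message("Current page elements: ...", b"\x89PNG fake bytes")
print(message["content"][0])
```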

In addition, a new trend in agents has emerged recently: active learning. Relying on this capability, the Genius agent outperformed top human players and other AI models in the classic game Pong using only 10% of the data and 2 hours of training.

I have felt this keenly while building agents at work. Work is a private scenario, and the agent does not understand your business, so it cannot produce the right Planning. That is when an agent's ability to learn on its own becomes essential.

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
