Zhipu AI (Wisdom Spectrum) makes GLM-4-Flash, a large model with 128K context and 4K output, completely free and open to everyone!

Since August 27, Wisdom Spectrum (Zhipu AI) has made the GLM-4-Flash API available to the public for free. In a 3-day English-to-Chinese translation test, GLM-4-Flash was no less effective and no slower than the GLM-4-9b provided by SiliconFlow or the many free model APIs offered through OpenRouter.
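For readers who want to repeat a translation test like this, here is a minimal sketch of a request. It assumes the API follows the common OpenAI-style chat completions format with bearer-token authentication. The endpoint URL, the model identifier `glm-4-flash`, and the response shape are assumptions to check against the official documentation, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumed values: verify both against the provider's current API documentation.
API_URL = "https://open.bigmodel.cn/api/paas/v4/chat/completions"
MODEL_NAME = "glm-4-flash"


def build_translation_request(text: str, max_tokens: int = 1024) -> dict:
    """Build a chat-style request body asking for an English-to-Chinese translation."""
    return {
        "model": MODEL_NAME,
        "messages": [
            {"role": "system", "content": "Translate the user's English text into Chinese."},
            {"role": "user", "content": text},
        ],
        "max_tokens": max_tokens,
    }


def translate(text: str, api_key: str) -> str:
    """Send the request and return the translated text (needs network access and a key)."""
    body = json.dumps(build_translation_request(text)).encode("utf-8")
    request = urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    # Assumes an OpenAI-style response: choices -> message -> content.
    return payload["choices"][0]["message"]["content"]
```

Keeping the request builder separate from the network call makes it easy to reuse the same prompt against other free APIs when comparing quality and speed.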

GLM-4-9b was previously released as a free API on SiliconFlow. GLM-4-Flash and GLM-4-9b differ in model size, parameter count, and application scenarios. Since both are free APIs, how should we choose between them?

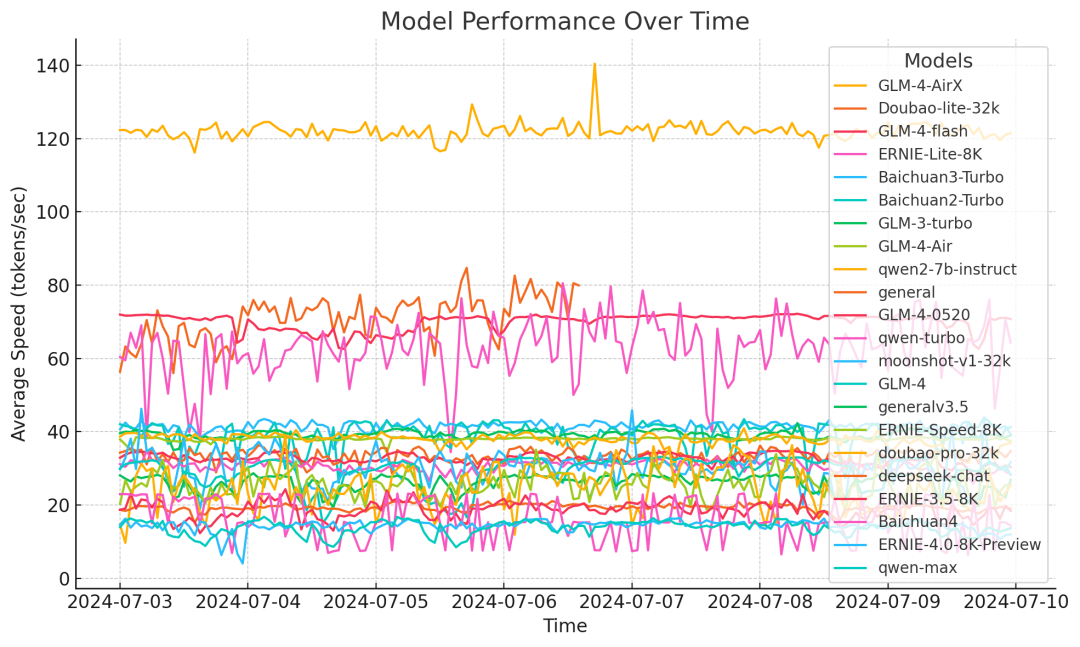

In short, the GLM-4-Flash model has a clear advantage in both "speed" and "performance".

To improve inference speed, GLM-4-Flash adopts several optimizations, including adaptive weight quantization, multiple parallel processing techniques, batching strategies, and speculative sampling. According to third-party speed test results, the inference speed of GLM-4-Flash held steady at around 72.14 tokens/s over a one-week test cycle, which is significantly faster than other models.
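To put the reported speed in perspective, the short sketch below shows how a tokens-per-second figure is computed and how long a maximum-length response would take at that rate. It treats "4K" as 4096 tokens, which is an assumption, and the function names are hypothetical.

```python
REPORTED_SPEED = 72.14      # tokens/s, the figure quoted from the third-party test
MAX_OUTPUT_TOKENS = 4096    # assumption: "4K" output is taken as 4096 tokens


def tokens_per_second(token_count: int, elapsed_seconds: float) -> float:
    """Throughput of one response: generated tokens divided by wall-clock time."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return token_count / elapsed_seconds


def seconds_to_generate(token_count: int, speed: float = REPORTED_SPEED) -> float:
    """Estimated generation time for token_count tokens at a steady speed."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    return token_count / speed


if __name__ == "__main__":
    # At about 72 tokens/s, a full 4096-token answer takes a little under a minute.
    print(f"{seconds_to_generate(MAX_OUTPUT_TOKENS):.1f} s")
```

The estimate ignores network latency and time to first token, so real end-to-end times will be somewhat longer.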

In terms of performance, GLM-4-Flash was pre-trained on up to 10T tokens of high-quality multilingual data, which gives the model capabilities such as multi-turn conversation, web search, tool calling, and long-text reasoning (context length up to 128K, output length up to 4K). It supports 26 languages, including Chinese, English, Japanese, Korean, and German.
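The 128K context and 4K output limits matter in practice when sending long documents. The sketch below is a simple budget check under two assumptions: that "128K" and "4K" mean 128 × 1024 and 4 × 1024 tokens, and that the prompt and the generated output share the same context window. Token counts must come from a real tokenizer, and the helper names are hypothetical.

```python
CONTEXT_LIMIT = 128 * 1024  # assumption: "128K" context taken as 131072 tokens
OUTPUT_LIMIT = 4 * 1024     # assumption: "4K" output taken as 4096 tokens


def max_prompt_tokens(reserved_output: int = OUTPUT_LIMIT) -> int:
    """Largest prompt that still leaves room for the reserved output tokens."""
    if not 0 <= reserved_output <= OUTPUT_LIMIT:
        raise ValueError("reserved_output must be between 0 and OUTPUT_LIMIT")
    return CONTEXT_LIMIT - reserved_output


def fits_in_context(prompt_tokens: int, requested_output: int) -> bool:
    """Check a request against both the output cap and the shared context window."""
    if prompt_tokens < 0 or requested_output < 0:
        return False
    if requested_output > OUTPUT_LIMIT:
        return False
    return prompt_tokens + requested_output <= CONTEXT_LIMIT
```

For example, a 120,000-token document with a 4096-token answer fits under these assumptions, while a 130,000-token document does not and would need to be split first.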

In addition to making the GLM-4-Flash API completely free and open, we provide a model fine-tuning feature so that users can better adapt the model to their specific application scenarios. You are welcome to try it!

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
