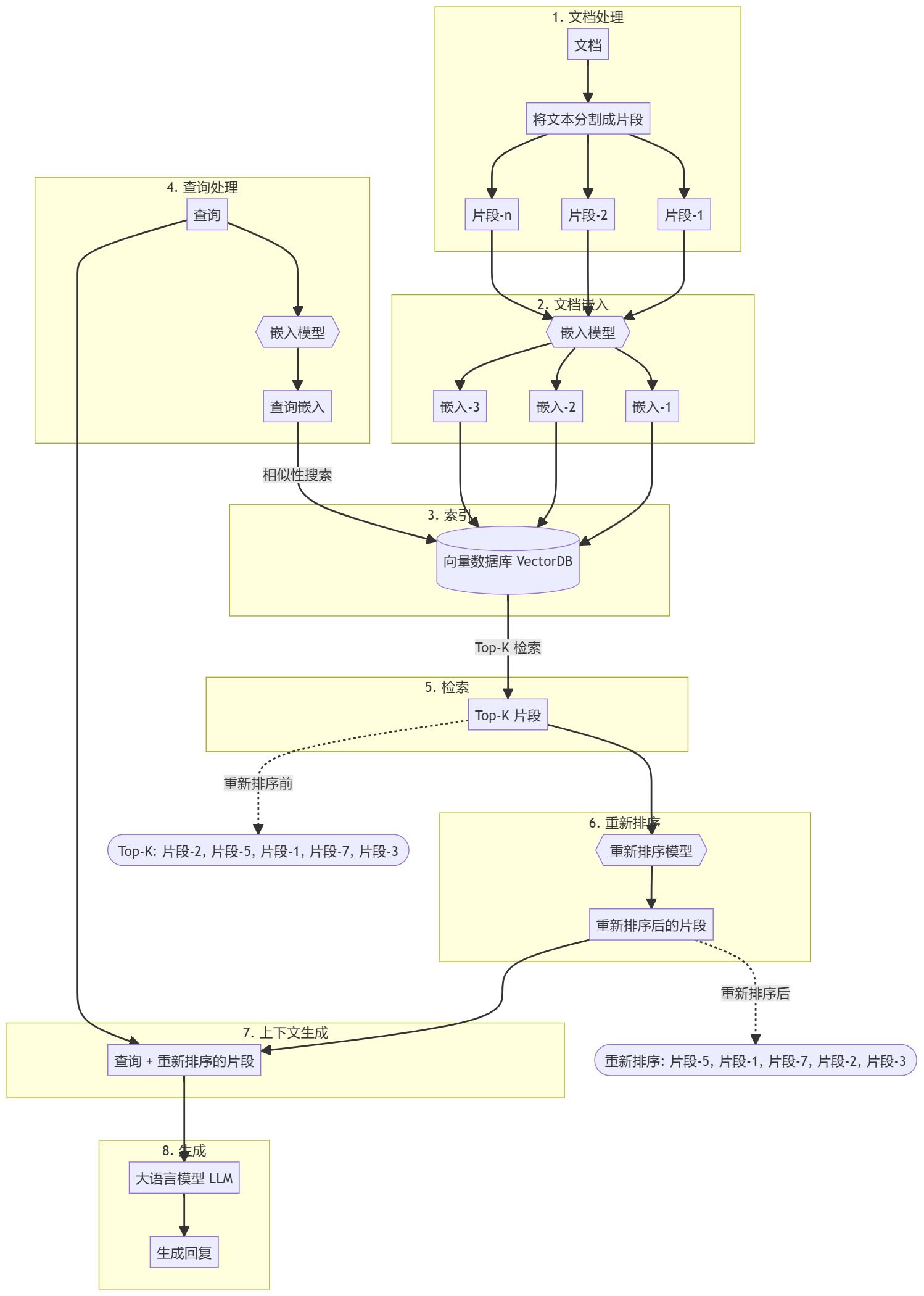

One diagram explains the whole picture of building a RAG system.

This diagram lays out the architectural blueprint of a modern Question Answering (QA) or Retrieval-Augmented Generation (RAG) system. It traces the flow from the user's question to the final generated answer and details the key steps and technology choices in between. The whole process can be decomposed into the following core stages:

1. Query Construction

This is the first step in the user's interaction with the system and the point where the system begins to interpret the user's intent. The figure shows how queries are constructed for different types of databases:

a. Relational DBs: For relational databases, the common approach is Text-to-SQL: the system translates the user's natural-language question into a structured SQL statement. This requires natural language understanding (NLU) and the ability to map natural-language semantics onto SQL syntax and the database schema. The figure also mentions SQL w/ PGVector. PGVector (the pgvector extension for PostgreSQL) adds vector similarity search to PostgreSQL, so a single SQL query can combine ordinary relational filters with semantic similarity search over embeddings, which helps with fuzzy or loosely worded questions.
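The Text-to-SQL flow above can be sketched with Python's standard `sqlite3` module. This is a minimal sketch under stated assumptions: `fake_llm` is a hypothetical stand-in for a real LLM call that always returns the same query, the `employees` table is invented for illustration, and SQLite replaces PostgreSQL/PGVector so the example runs anywhere.

```python
import sqlite3


def schema_ddl(conn: sqlite3.Connection) -> str:
    """Collect the CREATE TABLE statements so the model can ground table and column names."""
    rows = conn.execute("SELECT sql FROM sqlite_master WHERE type = 'table'").fetchall()
    return "\n".join(row[0] for row in rows)


def build_prompt(question: str, ddl: str) -> str:
    return (
        "Given the database schema below, write one SQLite SELECT statement "
        "that answers the question. Return only SQL.\n\n"
        f"Schema:\n{ddl}\n\nQuestion: {question}\nSQL:"
    )


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for an LLM call; always returns the same query.
    return "SELECT name FROM employees WHERE salary > 100000 ORDER BY name;"


def text_to_sql(conn: sqlite3.Connection, question: str, llm=fake_llm) -> list:
    sql = llm(build_prompt(question, schema_ddl(conn))).strip().rstrip(";")
    # Minimal guardrail: only execute statements that start with SELECT.
    if not sql.lower().startswith("select"):
        raise ValueError(f"refusing to run non-SELECT SQL: {sql!r}")
    return conn.execute(sql).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [("Ada", 120000), ("Bob", 90000), ("Cy", 150000)],
)
print(text_to_sql(conn, "Who earns more than 100k?"))  # [('Ada',), ('Cy',)]
```

Passing the schema into the prompt is what lets the model map the question onto real column names. The SELECT-only check is deliberately crude; connecting with a read-only database role is the more robust safeguard.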

b. Graph DBs: For graph databases, the corresponding method is Text-to-Cypher. Cypher is the query language of the Neo4j graph database; it plays a role similar to SQL but is designed for querying graph structures. Text-to-Cypher translates natural-language questions into Cypher statements, which requires an understanding of the graph's structure (node labels, relationship types, properties) and of the query language itself.
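To make the target of Text-to-Cypher concrete, the sketch below maps a few question shapes onto parameterized Cypher queries. It is a rule-based stand-in for the LLM that would normally perform the translation, and the `Person`/`Movie` schema with `DIRECTED` and `ACTED_IN` relationships is a hypothetical example. Sending the query to Neo4j through a driver is not shown.

```python
import re

# Hypothetical graph schema: (:Person {name})-[:DIRECTED|ACTED_IN]->(:Movie {title})
TEMPLATES = [
    (re.compile(r"who directed (?P<title>.+?)\??$", re.IGNORECASE),
     "MATCH (p:Person)-[:DIRECTED]->(m:Movie {title: $title}) RETURN p.name"),
    (re.compile(r"who acted in (?P<title>.+?)\??$", re.IGNORECASE),
     "MATCH (p:Person)-[:ACTED_IN]->(m:Movie {title: $title}) RETURN p.name"),
]


def text_to_cypher(question: str) -> tuple[str, dict]:
    """Return a Cypher query plus parameters; values are passed as parameters, not spliced in."""
    for pattern, cypher in TEMPLATES:
        match = pattern.search(question.strip())
        if match:
            return cypher, match.groupdict()
    raise ValueError(f"no Cypher template for {question!r}")


query, params = text_to_cypher("Who directed The Matrix?")
print(query)   # MATCH (p:Person)-[:DIRECTED]->(m:Movie {title: $title}) RETURN p.name
print(params)  # {'title': 'The Matrix'}
```

Keeping user-supplied values in `params` rather than formatting them into the query string avoids Cypher injection, and the same design applies when an LLM writes the query.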

c. Vector DBs: For vector databases, the figure shows a Self-query retriever: the system automatically derives metadata filters from the user's question and queries the vector database directly. Vector databases store vector representations (embeddings) of text or other data and retrieve them by similarity search. The key to a Self-query retriever is extracting structured filter conditions from the natural-language question (for example, a year or an author) and combining them with vector similarity search for more precise retrieval.
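A self-query retriever splits a question into a semantic part and a metadata filter, applies the filter, then ranks by similarity. The sketch below assumes a toy bag-of-words `embed` in place of a real embedding model and a regex-based `parse_self_query` in place of the LLM that would normally extract the filter; both are hypothetical, as is the small corpus.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would call an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def parse_self_query(question: str) -> tuple[str, dict]:
    """Hypothetical stand-in for the LLM step: split the question into a
    semantic query and a structured metadata filter (here, only a year)."""
    match = re.search(r"\b(?:in|from)\s+(\d{4})\b", question)
    if not match:
        return question, {}
    query = " ".join((question[:match.start()] + question[match.end():]).split())
    return query, {"year": int(match.group(1))}


def self_query_retrieve(question: str, docs: list[dict], k: int = 1) -> list[dict]:
    query, filters = parse_self_query(question)
    # 1) Apply the metadata filter, 2) rank the survivors by vector similarity.
    candidates = [d for d in docs if all(d.get(key) == value for key, value in filters.items())]
    qv = embed(query)
    candidates.sort(key=lambda d: cosine(qv, embed(d["text"])), reverse=True)
    return candidates[:k]


DOCS = [  # toy corpus with made-up metadata
    {"text": "how to tune a vector index for recall", "year": 2022},
    {"text": "how to tune a vector index for latency", "year": 2024},
    {"text": "a history of relational databases", "year": 2024},
]
print(self_query_retrieve("articles about tuning a vector index from 2024", DOCS))
# [{'text': 'how to tune a vector index for latency', 'year': 2024}]
```

The first two documents are equally similar to the semantic query, so here the extracted `year` filter is what picks the right one.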

Summarizing the query construction phase: its core goal is to turn a natural-language question into a query the system can execute, using different query languages and techniques for different types of data stores (relational, graph, vector). It shows that the system can work across several kinds of data stores and query languages.

2. Query Translation

After query construction, the user's original query often needs further processing so that the system captures the user's intent and retrieves more effectively. The figure illustrates two main query translation strategies:

a. Query Decomposition: Complex questions can be decomposed or re-phrased into smaller, more manageable sub-questions or step-back questions (Sub/Step-back question(s)), using techniques such as Multi-query, Step-back, and RAG-Fusion.

- Multi-query: generate several rephrasings of the question, retrieve for each, and combine the results, so the question is explored from different angles.

- Step-back: generate a more general, higher-level "step-back" question, retrieve background knowledge for it, and use that context when answering the original, more specific question.

- RAG-Fusion: generate multiple queries, retrieve for each, and merge the ranked result lists with Reciprocal Rank Fusion (RRF).

- The core idea, in the figure's words, is to "Decompose or re-phrase the input question", which lowers the difficulty of handling complex questions.
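RAG-Fusion's merge step is Reciprocal Rank Fusion: each document scores the sum of 1 / (k + rank) over every ranked list it appears in, with k = 60 as the commonly used default. The sketch below assumes hypothetical stubs, `fake_generate_queries` for the LLM that writes query variants and `fake_results` for the retriever.

```python
from collections import defaultdict
from typing import Callable


def reciprocal_rank_fusion(ranked_lists: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists: each document scores sum(1 / (k + rank)) over the lists it appears in."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


def rag_fusion(question: str,
               generate_queries: Callable[[str], list[str]],
               retrieve: Callable[[str], list[str]]) -> list[str]:
    # Multi-query step: one retrieval per generated query, then fuse the rankings.
    return reciprocal_rank_fusion([retrieve(q) for q in generate_queries(question)])


def fake_generate_queries(question: str) -> list[str]:
    # Hypothetical stand-in for an LLM asked to rephrase the question.
    return [question, f"{question} (rephrased)", f"{question} (step-back)"]


fake_results = {  # hypothetical retriever output per query
    "q": ["doc_a", "doc_b", "doc_c"],
    "q (rephrased)": ["doc_b", "doc_a", "doc_d"],
    "q (step-back)": ["doc_b", "doc_c", "doc_a"],
}
print(rag_fusion("q", fake_generate_queries, fake_results.__getitem__))
# ['doc_b', 'doc_a', 'doc_c', 'doc_d']
```

RRF only uses ranks, so it can merge results from retrievers whose raw scores are not on comparable scales.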

b. Pseudo-documents: HyDE (Hypothetical Document Embeddings) is a typical method for generating pseudo-documents. The model first writes a "hypothetical document" that answers the question. This pseudo-document need not be factually correct, but it captures the model's initial understanding of what an answer looks like. The pseudo-document, rather than the raw question, is then embedded and used as the query vector for a similarity search over the embeddings of the real documents. HyDE thus uses the model's prior knowledge to produce an answer-shaped query that tends to land closer to relevant real documents in embedding space.
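The HyDE step can be sketched as "generate, then embed the generation instead of the question". This minimal sketch assumes a toy bag-of-words `embed` and a hypothetical `fake_hypothetical_doc` that returns a canned passage where a real system would call an LLM.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would call an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def fake_hypothetical_doc(question: str) -> str:
    # Hypothetical stand-in for an LLM prompted with "Write a passage that answers: {question}".
    return "To reduce latency, add a cache in front of the database and reuse connections."


def hyde_search(question: str, corpus: list[str], generate=fake_hypothetical_doc, k: int = 1) -> list[str]:
    pseudo_doc = generate(question)  # answer-shaped text; it may contain errors
    qv = embed(pseudo_doc)           # embed the pseudo-document instead of the question
    return sorted(corpus, key=lambda doc: cosine(qv, embed(doc)), reverse=True)[:k]


corpus = [
    "Adding a cache in front of the database reduces read latency.",
    "Why is my app slow? Common questions answered.",
]
question = "Why is my app slow?"
print(hyde_search(question, corpus))
# ['Adding a cache in front of the database reduces read latency.']
```

Embedding the raw question would instead favor the second passage, which merely repeats the question's wording.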

Summarizing the query translation phase: this phase aims to optimize the user query to make it more suitable for the subsequent retrieval process. Complexity can be handled through query decomposition, and the accuracy of vector retrieval can be improved through pseudo-document generation, reflecting the flexibility and intelligence of the system in understanding and processing user intent.

3. Routing

When the system receives a translated query, it must decide which data source or sources to route it to for retrieval. The figure illustrates two routing strategies:

a. Logical routing: "Let LLM choose DB based on the question", i.e., the LLM decides which database to query based on the content and characteristics of the question. For example, a question about entities and their relationships would be routed to a graph database, a question requiring structured data queries to a relational database, and a question that calls for semantic search to a vector database.
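Logical routing boils down to asking a model to pick one label from a closed set and validating the answer. In the sketch below the datasource names are hypothetical and `fake_llm` is a keyword heuristic standing in for the LLM; in practice the prompt, or a structured-output feature, would constrain the model's reply.

```python
from typing import Literal, get_args

Datasource = Literal["relational_db", "graph_db", "vectorstore"]

ROUTER_PROMPT = (
    "Route the user question to exactly one datasource: relational_db for counts or "
    "aggregates over structured records, graph_db for relationships between entities, "
    "vectorstore for open-ended semantic search. Reply with the datasource name only.\n"
    "Question: {question}"
)


def fake_llm(prompt: str) -> str:
    # Hypothetical keyword heuristic standing in for an LLM; it reads only the question part.
    question = prompt.rsplit("Question:", 1)[1].lower()
    if any(w in question for w in ("how many", "average", "total")):
        return "relational_db"
    if any(w in question for w in ("connected to", "related to", "friends of")):
        return "graph_db"
    return "vectorstore"


def route(question: str, llm=fake_llm) -> Datasource:
    choice = llm(ROUTER_PROMPT.format(question=question)).strip()
    # Guard against free-form model output by falling back to semantic search.
    return choice if choice in get_args(Datasource) else "vectorstore"


for q in ("How many orders shipped in March?",
          "Which suppliers are connected to Acme?",
          "Explain our refund policy"):
    print(q, "->", route(q))
```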

b. Semantic routing: "Embed question and choose prompt based on similarity". The question is embedded, and the system chooses among preset prompts (Prompt #1, Prompt #2) according to the similarity between the question embedding and each prompt's embedding. For different types of questions or intents, the system keeps different prompt strategies and automatically selects the most suitable one to guide subsequent retrieval or generation.
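Semantic routing can be sketched as a nearest-neighbor lookup over prompt embeddings. This sketch assumes a toy bag-of-words `embed`, two hypothetical prompts, and hand-written domain descriptions that are embedded in place of the prompts themselves (a real embedding model could embed the prompt text directly).

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would call an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


PROMPTS = {  # hypothetical Prompt #1 and Prompt #2
    "physics": "You are a physics professor. Answer concisely.\n\nQuestion: {query}",
    "math": "You are a mathematician. Break the problem into steps.\n\nQuestion: {query}",
}
# Hypothetical short descriptions of each prompt's domain, embedded once up front.
PROMPT_EMBEDDINGS = {
    "physics": embed("physics force energy mass motion gravity light black holes"),
    "math": embed("math equation algebra integral derivative proof numbers"),
}


def semantic_route(question: str) -> str:
    """Pick the prompt whose embedding is most similar to the question's embedding."""
    qv = embed(question)
    best = max(PROMPT_EMBEDDINGS, key=lambda name: cosine(qv, PROMPT_EMBEDDINGS[name]))
    return PROMPTS[best].format(query=question)


print(semantic_route("What is a black hole made of?").splitlines()[0])
# You are a physics professor. Answer concisely.
```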

Summarizing the routing phase: routing is a key decision step in which the system selects the most suitable data source and processing strategy based on the content and characteristics of the question, reflecting its intelligence in resource management and task scheduling.

4. Indexing

For efficient retrieval, the data needs to be pre-indexed. The blue area of the image demonstrates multiple strategies for indexing optimization:

a. Chunk Optimization: Long documents usually need to be split into chunks, and the chunks are then indexed. Chunk optimization is about choosing how to chunk effectively.

- Split by Characters, Sections, Semantic Delimiters: Different chunking strategies, e.g. by number of characters, sections, or semantic delimiters.

- Semantic Splitter: splits text at semantic boundaries and tunes the chunk size used for embedding, so that each chunk is semantically complete and self-contained, which improves embedding quality and retrieval results.
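Both chunking styles above fit in a few lines. The sketch below shows fixed-size character windows with overlap, and a simple semantic splitter that starts a new chunk wherever adjacent sentences fall below a similarity threshold. The bag-of-words `embed` and the `threshold` value are assumptions for illustration; production semantic splitters use a real embedding model and usually pick breakpoints statistically.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would call an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def split_by_characters(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Fixed-size character windows; neighboring chunks share `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    return [text[i:i + chunk_size] for i in range(0, max(len(text) - overlap, 1), step)]


def semantic_split(text: str, threshold: float = 0.1) -> list[str]:
    """Start a new chunk wherever two adjacent sentences are less similar than `threshold`."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    if not sentences:
        return []
    chunks, current = [], [sentences[0]]
    for prev, sent in zip(sentences, sentences[1:]):
        if cosine(embed(prev), embed(sent)) < threshold:
            chunks.append(" ".join(current))
            current = []
        current.append(sent)
    chunks.append(" ".join(current))
    return chunks


text = ("Cats purr when content. Cats also purr when stressed. "
        "Rust has no garbage collector. Rust uses ownership instead.")
print(semantic_split(text))
# ['Cats purr when content. Cats also purr when stressed.', 'Rust has no garbage collector. Rust uses ownership instead.']
```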

b. Multi-representation indexing: Summary -> {} -> Relational DB / Vectorstore. A document can be indexed under several representations: in addition to its original text chunks, the system can generate a summary of the document and index that summary as well, so that different representations serve different query needs. The figure implies that summaries can be stored in a relational database or a vector database.

- Parent Document, Dense X: Parent Document indexing searches over small chunks but returns the larger parent document they came from; Dense X refers to indexing documents as fine-grained propositions (short, self-contained factual statements) rather than fixed-size passages.

- Convert documents into compact retrieval units (e.g., a summary): Emphasizes the conversion of documents into more compact retrieval units, such as summaries, to improve retrieval efficiency.
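The common thread of this subsection is "search one representation, return another". The sketch below indexes a summary per document and returns the full document from a separate docstore. The class name is hypothetical, `fake_summarize` (first sentence only) stands in for an LLM-written summary, and `embed` is a toy bag-of-words model.

```python
import math
import re
import uuid
from collections import Counter


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would call an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def fake_summarize(doc: str) -> str:
    # Hypothetical stand-in for an LLM-written summary: just the first sentence.
    return re.split(r"(?<=[.!?])\s+", doc.strip())[0]


class MultiRepresentationIndex:
    """Search over compact representations (summaries), but return the full parent document."""

    def __init__(self, summarize=fake_summarize):
        self.summarize = summarize
        self.docstore: dict[str, str] = {}          # doc_id -> full document
        self.index: list[tuple[str, Counter]] = []  # (doc_id, summary embedding)

    def add(self, doc: str) -> str:
        doc_id = str(uuid.uuid4())
        self.docstore[doc_id] = doc
        self.index.append((doc_id, embed(self.summarize(doc))))
        return doc_id

    def search(self, query: str, k: int = 1) -> list[str]:
        qv = embed(query)
        ranked = sorted(self.index, key=lambda item: cosine(qv, item[1]), reverse=True)
        return [self.docstore[doc_id] for doc_id, _ in ranked[:k]]


index = MultiRepresentationIndex()
index.add("Billing FAQ for customers. Refunds are issued within five business days.")
index.add("Shipping guide for warehouse staff. Pallets must be wrapped before loading.")
print(index.search("customer billing questions"))
# ['Billing FAQ for customers. Refunds are issued within five business days.']
```

Swapping `fake_summarize` for a function that emits several small child chunks per document, all pointing at the same `doc_id`, gives the Parent Document variant of the same idea.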

c. Specialized Embeddings: Fine-tuning, ColBERT, [0, 1, ... ] -> Vectorstore. Fine-tuned or specially designed embedding models, such as ColBERT, generate the document vectors that are stored in the vector database. ColBERT keeps one vector per token and scores a query against a document by late interaction (MaxSim) instead of comparing two single pooled vectors.

- Domain-specific and / or advanced embedding models: Emphasizes that domain-specific or more advanced Embedding models can be used to obtain more accurate semantic representations and enhance retrieval results.
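ColBERT's late-interaction score is easy to state: for each query token vector, take its maximum dot product with any document token vector, then sum over the query tokens. The sketch uses hand-made 2-D token vectors as an assumption; a real ColBERT model produces one normalized, higher-dimensional vector per token.

```python
def maxsim(query_vecs: list[list[float]], doc_vecs: list[list[float]]) -> float:
    """ColBERT-style late interaction: for each query token vector, take its best
    dot product with any document token vector, then sum over query tokens."""
    def dot(u: list[float], v: list[float]) -> float:
        return sum(a * b for a, b in zip(u, v))
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)


# Toy 2-D token vectors, chosen by hand for illustration.
query = [[1.0, 0.0], [0.0, 1.0]]
docs = {
    "doc_a": [[1.0, 0.0], [0.0, 1.0]],  # scores 1.0 + 1.0
    "doc_b": [[0.8, 0.6]],              # scores 0.8 + 0.6
    "doc_c": [[0.6, 0.8], [0.0, 1.0]],  # scores 0.6 + 1.0
}
ranking = sorted(docs, key=lambda name: maxsim(query, docs[name]), reverse=True)
print(ranking)  # ['doc_a', 'doc_c', 'doc_b']
```

Because each query token is matched independently, a document can score well by covering every query term somewhere, which a single pooled vector can blur.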

d. Hierarchical Indexing Summaries: Splits -> cluster -> cluster -> ... -> RAPTOR -> Graph DB. RAPTOR (Recursive Abstractive Processing for Tree-Organized Retrieval) recursively clusters text chunks and summarizes each cluster, building a hierarchy of document summaries through multiple layers of clustering.

- Tree of document summarization at various abstraction levels: Emphasizes that RAPTOR constructs a tree of document summarization at multiple abstraction levels.

- Stored in a graph database (Graph DB): the graph database stores and manages this hierarchical index structure, supporting search and navigation across levels.
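The recursive "cluster, summarize, repeat" idea behind RAPTOR can be sketched as follows. This is a simplified stand-in, not RAPTOR's own procedure: the clustering is a greedy nearest-neighbor grouping over a toy bag-of-words `embed` (RAPTOR uses a more sophisticated soft clustering of embeddings), `fake_summarize` simply joins texts where an LLM would write a summary, and storing the tree in a graph database is not shown.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would call an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def fake_summarize(texts: list[str]) -> str:
    # Hypothetical stand-in for an LLM summary of one cluster.
    return " / ".join(texts)


def cluster(texts: list[str], max_size: int) -> list[list[int]]:
    """Greedy grouping: seed with the first unassigned text, add its most similar neighbors."""
    vecs = [embed(t) for t in texts]
    remaining = list(range(len(texts)))
    clusters = []
    while remaining:
        seed = remaining.pop(0)
        remaining.sort(key=lambda i: cosine(vecs[seed], vecs[i]), reverse=True)
        clusters.append([seed] + remaining[:max_size - 1])
        remaining = remaining[max_size - 1:]
    return clusters


def build_tree(chunks: list[str], max_size: int = 2, summarize=fake_summarize) -> list[list[str]]:
    """Return levels from the leaf chunks (level 0) up to a single root summary."""
    if max_size < 2:
        raise ValueError("max_size must be at least 2 so each level shrinks")
    levels = [chunks]
    while len(levels[-1]) > 1:
        current = levels[-1]
        groups = cluster(current, max_size)
        levels.append([summarize([current[i] for i in group]) for group in groups])
    return levels


tree = build_tree(["cats purr", "dogs bark", "cats meow", "dogs growl"])
print([len(level) for level in tree])  # [4, 2, 1]
print(tree[1])  # ['cats purr / cats meow', 'dogs bark / dogs growl']
```

Retrieval can then search every level at once, so a broad question can match a high-level summary while a narrow one still matches a leaf chunk.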

Summarizing the indexing phase: indexing is about organizing and representing data so that retrieval is fast and accurate. The strategies range from chunk optimization and multiple representations to specialized embeddings and hierarchical summary indexes, showing how varied and sophisticated indexing can be.

5. Retrieval

Based on the routed data sources and indexes, the system performs the actual retrieval process. The green areas of the image demonstrate the two main aspects of the retrieval:

a. Ranking: Question -> {} -> Relevance -> Filter. Retrieved documents are ranked by their relevance to the query.

- Re-Rank, RankGPT, RAG-Fusion: advanced ranking techniques. Re-Rank reorders the initial retrieval results with a finer-grained relevance model; RankGPT uses a large language model such as GPT to rank passages; RAG-Fusion merges the ranked lists retrieved for several generated queries into a single ranking.

- Rank or filter / compress documents based on relevance: ranking can be used to return the most relevant documents directly, or to filter out or compress documents according to their relevance before further processing.

- CRAG (Corrective Retrieval Augmented Generation) also appears in the ranking section, since it grades retrieved documents for relevance before they are used.
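The three operations named above (re-rank, filter, compress) can be chained in one small function. The `relevance` scorer here, the share of query terms found in a document, is a hypothetical stand-in for a cross-encoder or an LLM grader, and `min_score` and `top_k` are illustrative defaults.

```python
import re


def terms(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def relevance(query: str, doc: str) -> float:
    """Hypothetical stand-in for a cross-encoder or LLM grader: share of query terms found in doc."""
    q = terms(query)
    return len(q & terms(doc)) / len(q) if q else 0.0


def rerank_filter_compress(query: str, docs: list[str],
                           min_score: float = 0.5, top_k: int = 3) -> list[str]:
    # 1) Re-rank by relevance, 2) drop weak matches, 3) keep only sentences that mention the query.
    ranked = sorted(docs, key=lambda d: relevance(query, d), reverse=True)
    kept = [d for d in ranked if relevance(query, d) >= min_score][:top_k]
    q = terms(query)
    compressed = []
    for doc in kept:
        sentences = re.split(r"(?<=[.!?])\s+", doc.strip())
        compressed.append(" ".join(s for s in sentences if terms(s) & q))
    return compressed


docs = [
    "Shipping takes two days.",
    "Refund requests need a receipt.",
    "Our refund policy lasts 30 days. The office is in Berlin.",
]
print(rerank_filter_compress("refund policy", docs))
# ['Our refund policy lasts 30 days.', 'Refund requests need a receipt.']
```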

b. Active retrieval: {} -> CRAG -> Answer. "Re-retrieve and / or retrieve from new data sources (e.g., web) if retrieved documents are not relevant": when the initial retrieval results are not satisfactory, the system actively re-retrieves or turns to new data sources such as the web.

- CRAG also appears under active retrieval: a retrieval evaluator grades the retrieved documents, and when they are judged irrelevant the system corrects course, for example by falling back to web search.

- Techniques such as Self-RAG and RRR (Rewrite-Retrieve-Read) are also related to active retrieval; they aim to improve retrieval results and answer quality through iterative retrieval and generation.
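The corrective control flow described above can be sketched as: grade what was retrieved, then decide whether to use it, replace it with web results, or combine both. This is loosely modeled on CRAG's correct / incorrect / ambiguous cases (CRAG itself uses a trained evaluator with confidence thresholds), and the retriever, grader, and web search below are hypothetical stubs.

```python
from typing import Callable


def corrective_retrieve(question: str,
                        retrieve: Callable[[str], list[str]],
                        is_relevant: Callable[[str, str], bool],
                        web_search: Callable[[str], list[str]]) -> tuple[list[str], str]:
    """Grade retrieved docs, then choose an action loosely modeled on CRAG."""
    docs = retrieve(question)
    relevant = [d for d in docs if is_relevant(question, d)]
    if docs and len(relevant) == len(docs):
        return relevant, "correct"                       # everything passed: use as-is
    if not relevant:
        return web_search(question), "incorrect"         # nothing usable: go to the web
    return relevant + web_search(question), "ambiguous"  # partial: keep good docs, add web results


# Hypothetical stubs for the retriever, the relevance grader, and web search.
def fake_retrieve(question: str) -> list[str]:
    return ["notes on cat nutrition", "notes on tax law"]


def fake_grader(question: str, doc: str) -> bool:
    return any(word in doc for word in question.lower().split())


def fake_web_search(question: str) -> list[str]:
    return [f"web result for {question!r}"]


print(corrective_retrieve("cat diet", fake_retrieve, fake_grader, fake_web_search))
# (['notes on cat nutrition', "web result for 'cat diet'"], 'ambiguous')
```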

Summarizing the retrieval phase: its core objective is to find the documents or information most relevant to the user's query. From ranking to active retrieval, the system refines its retrieval strategy to deliver high-quality results.

6. Generation

Ultimately, the system needs to generate answers based on the retrieved documents and present them to the user. The purple area of the image shows the core technology in the generation phase:

a. Active retrieval (reappearing here): {} -> Answer -> Self-RAG, RRR -> Question re-writing and/or re-retrieval of documents. Active retrieval also plays an important role in the generation phase.

- Self-RAG (Self-Reflective Retrieval-Augmented Generation) lets the model decide on demand whether to retrieve while generating, and critique both the retrieved passages and its own output, adjusting generation based on that feedback.

- RRR (Rewrite-Retrieve-Read) first rewrites the question into a better search query, then retrieves, then reads the retrieved documents to produce the answer; within the closed loop shown in the figure, these steps can be repeated to improve answer quality.

- Use generation quality to inform question re-writing and / or re-retrieval of documents: Emphasizes that the quality of generated answers can be used to guide question re-writing and document re-retrieval, forming a closed-loop optimization process.
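The closed loop described above can be sketched as: retrieve, generate, check whether the answer is supported by the documents, and if not, rewrite the query and try again. This is a generic feedback loop in the spirit of the figure, not Self-RAG (which trains the model to emit reflection tokens) or the RRR pipeline itself; all four callables below are hypothetical stubs.

```python
def generate_with_feedback(question, retrieve, generate, is_grounded, rewrite, max_rounds=3):
    """Retrieve, generate, and check the answer against the documents; if the answer
    is not supported, rewrite the query and retrieve again (closed loop)."""
    query, answer = question, None
    for round_no in range(1, max_rounds + 1):
        docs = retrieve(query)
        answer = generate(question, docs)
        if is_grounded(answer, docs):
            return answer, round_no
        query = rewrite(query)
    return answer, max_rounds  # give up after max_rounds and return the last attempt


# Hypothetical stubs for the retriever, generator, grounding check, and query rewriter.
def fake_retrieve(query):
    return ["Paris is the capital of France."] if "capital" in query else ["Unrelated text."]


def fake_generate(question, docs):
    return "Paris" if "Paris" in docs[0] else "I am not sure"


def fake_is_grounded(answer, docs):
    return any(answer in doc for doc in docs)


def fake_rewrite(query):
    return query + " capital city"


print(generate_with_feedback("Where is France's seat of government?",
                             fake_retrieve, fake_generate, fake_is_grounded, fake_rewrite))
# ('Paris', 2)
```

Capping the loop with `max_rounds` keeps latency and cost bounded when rewriting never produces a grounded answer.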

Summarizing the generation phase: generation produces the final answer. Active retrieval and self-reflective techniques such as Self-RAG and RRR make generation more controllable and help produce more accurate, user-friendly answers.

Overall Summary: This diagram clearly demonstrates the complexity and granularity of a modern RAG system. It covers the complete process from query understanding, data routing, indexing optimization, efficient retrieval, to final answer generation, and shows the many advanced techniques and strategies that can be employed at each step.

Key highlights and trends:

- Multi-database support: The system supports relational database, graph database and vector database, which can handle different types of data and query requirements.

- Query Optimization and Translation: Enhance the system's ability to handle complex and semantic queries through techniques such as query decomposition and pseudo-document generation.

- Intelligent Routing: Utilizes LLM and semantic similarity for routing decisions, enabling intelligent selection of data sources and task scheduling.

- Indexing Optimization Diversity: From chunking and multiple representations to specialized embeddings and hierarchical summary indexes, indexing strategies are both diverse and deeply optimized.

- Retrieval refinement and active retrieval: From ranking algorithms to active retrieval, the system strives to provide high-quality, relevant results.

- Deep integration of generation and retrieval: Self-RAG, RRR and similar techniques show that generation is no longer a simple concatenation of retrieved information but is tightly integrated with retrieval, forming a closed loop of iterative optimization.

This figure reflects an important trend in current RAG systems: a greater focus on intelligence, modularity, and scalability. Future RAG systems will go beyond simple "retrieval + generation" toward systems that better understand user intent, make more effective use of multimodal data, retrieve and generate more accurately, and ultimately provide a better, more personalized user experience. The diagram is a valuable reference framework for understanding and building the next generation of RAG systems.


© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
