Turn Cursor and Windsurf into a $500/month Devin in an hour!

In our last post, we discussed Devin, an Agentic AI that enables fully automated programming. Compared with other Agentic AI tools such as Cursor and Windsurf, it offers significant advantages in process planning, self-evolution, tool extension, and fully automated operation. These advantages set Devin apart as a new generation of tool, distinct from existing Agentic AI tools.

However, after using Devin for a while, my "builder's mentality" kicked in again and drove me to implement 90% of Devin's functionality in Windsurf and Cursor. I have also open-sourced these modifications, so you can turn Cursor or Windsurf into Devin in less than a minute. This article focuses on the specifics of these modifications and uses them as an example of how efficiently tools can be built and extended in the age of Agentic AI. To simplify the discussion, we'll use Cursor as the representative tool and note the small tweaks you'll need to make if you want to use Windsurf.

| Tool | Process planning | Self-evolution | Tool extension | Automated execution | Price |
|---|---|---|---|---|---|
| Devin | Yes (automatic, complete) | Yes (self-learning) | Many tools | Supported | $500/month |
| Cursor (before modification) | Limited | No | Limited toolset | Manual confirmation required | $20/month |
| Cursor (modified) | Close to Devin | Yes | Close to Devin, extensible | Confirmation or a workaround still required | $20/month |
| Windsurf (modified) | Close to Devin | Yes, but indirectly | Close to Devin, extensible | Full automation supported in Docker containers | $15/month |

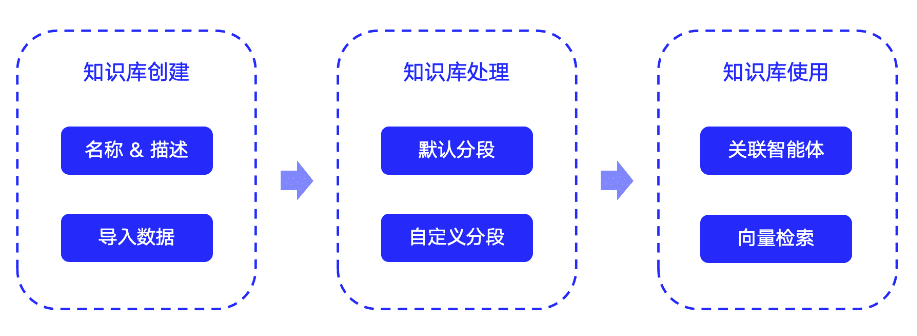

Process planning and self-evolution

As mentioned earlier, an interesting aspect of Devin is that it behaves like a well-organized intern. It creates a plan before executing a task and continuously updates the plan's progress during execution. This makes it easier for the people managing the AI to track progress, and it keeps the AI from drifting away from the original plan, which leads to deeper thinking and higher-quality task completion.

While this functionality may seem impressive, it is actually very simple to implement in Cursor.

In Cursor, create a file named .cursorrules in the root directory of your project folder. This is a special file: it lets you modify the prompt that Cursor passes to the back-end large language model, such as GPT or Claude. In other words, everything in this file becomes part of the prompt sent to the back-end AI, which provides great flexibility for customization.

For example, we can put the plan itself into this file, so that every time we interact with Cursor, it receives the latest version of the plan. We can also add more detailed instructions to this file, such as asking Cursor to think through and write a plan at the start of a task and to update the plan after completing each step. Because Cursor's Agent can modify files, and .cursorrules is itself a file, this creates a closed loop: on every interaction Cursor automatically reads the file, picks up the latest progress, and, after thinking, writes the updated progress and next steps back into it, so the plan is always current.
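To make the closed loop concrete, here is a minimal Python sketch of the read-and-update step the agent performs on its own rules file. It assumes, purely for illustration, that the plan is kept as a checklist of `[X]` (done) and `[ ]` (pending) lines inside .cursorrules; `read_plan`, `next_step`, `mark_done`, and `complete_step` are hypothetical names, not part of Cursor or of the author's reference implementation. In practice the LLM edits the file itself; the code only shows the shape of the loop.

```python
import re
from pathlib import Path
from typing import List, Optional, Tuple

# Hypothetical plan format: optional "- " bullet, then "[X]" (done) or "[ ]" (pending).
CHECKBOX = re.compile(r"^\s*(?:[-*]\s+)?\[([ xX])\]\s+(.*)$")


def read_plan(text: str) -> List[Tuple[bool, str]]:
    """Return (done, step) pairs for every checklist line in the rules text."""
    plan = []
    for line in text.splitlines():
        match = CHECKBOX.match(line)
        if match:
            plan.append((match.group(1) in "xX", match.group(2).strip()))
    return plan


def next_step(text: str) -> Optional[str]:
    """Return the first pending step, or None when the plan is complete."""
    for done, step in read_plan(text):
        if not done:
            return step
    return None


def mark_done(text: str, step: str) -> str:
    """Tick the checkbox of the first pending line whose text equals `step`."""
    lines = text.splitlines()
    for i, line in enumerate(lines):
        match = CHECKBOX.match(line)
        if match and match.group(1) == " " and match.group(2).strip() == step:
            lines[i] = line.replace("[ ]", "[X]", 1)
            break
    return "\n".join(lines) + "\n"


def complete_step(rules_path: Path, step: str) -> Optional[str]:
    """Persist progress to the rules file and return what to work on next."""
    updated = mark_done(rules_path.read_text(encoding="utf-8"), step)
    rules_path.write_text(updated, encoding="utf-8")
    return next_step(updated)
```

Keeping the plan as plain checklist lines means both the model and a human reviewer can see progress at a glance on every turn.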

The self-evolution feature can be implemented the same way. In the .cursorrules file, we add instructions telling Cursor, whenever the user corrects a mistake, to reflect on whether there is a reusable lesson worth recording. If there is, it updates the relevant part of .cursorrules, gradually building up project-specific knowledge.

A typical example: because many models have an early knowledge cutoff, current large language models are often unaware that GPT-4o exists. If you tell the model, "This model does exist, you just don't know about it," it records this lesson in .cursorrules so that it does not make the same mistake again, which is how it learns and improves. However, this still depends on the prompt being effective: sometimes it misses the point and fails to record knowledge we think it should keep. In that case, we can simply tell it in natural language to record the lesson. This more direct approach also lets the AI accumulate experience and grow.
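Mechanically, the self-evolution step boils down to appending a lesson to the rules file without recording it twice. The Python sketch below is illustrative only: the `# Lessons` heading and the `record_lesson` helper are hypothetical, and in Cursor the model performs this edit itself through the Agent.

```python
LESSONS_HEADING = "# Lessons"  # hypothetical section name inside .cursorrules


def record_lesson(text: str, lesson: str) -> str:
    """Append `lesson` as a bullet under the Lessons heading, creating it if needed."""
    bullet = f"- {lesson.strip()}"
    lines = text.rstrip("\n").splitlines()
    if bullet in lines:
        return text  # already recorded: leave the file unchanged
    if LESSONS_HEADING not in lines:
        # No lessons yet: start a new section at the end of the file.
        lines += ["", LESSONS_HEADING]
        insert_at = len(lines)
    else:
        # Insert after the last bullet of the existing Lessons section.
        insert_at = lines.index(LESSONS_HEADING) + 1
        while insert_at < len(lines) and lines[insert_at].startswith("- "):
            insert_at += 1
    lines.insert(insert_at, bullet)
    return "\n".join(lines) + "\n"
```

Making the update idempotent matters because the model may try to record the same correction more than once.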

As a result, with nothing more than a .cursorrules file and a few prompting tricks, we can add Devin's process planning and self-evolution capabilities to our existing Agentic AI programming tools.

If you use Windsurf, there is one difference: probably for security reasons, it does not allow the AI to modify the .windsurfrules file directly. We therefore split the setup into two parts and keep the plan in a separate file (such as scratchpad.md). The .windsurfrules file instructs the AI to check the scratchpad before each thought process and to update the plan there. This indirect approach may not work as well as putting the plan directly in .cursorrules, because the AI still has to invoke the Agent to read the file and reason based on what it returns, but in practice it works.

Tool extension

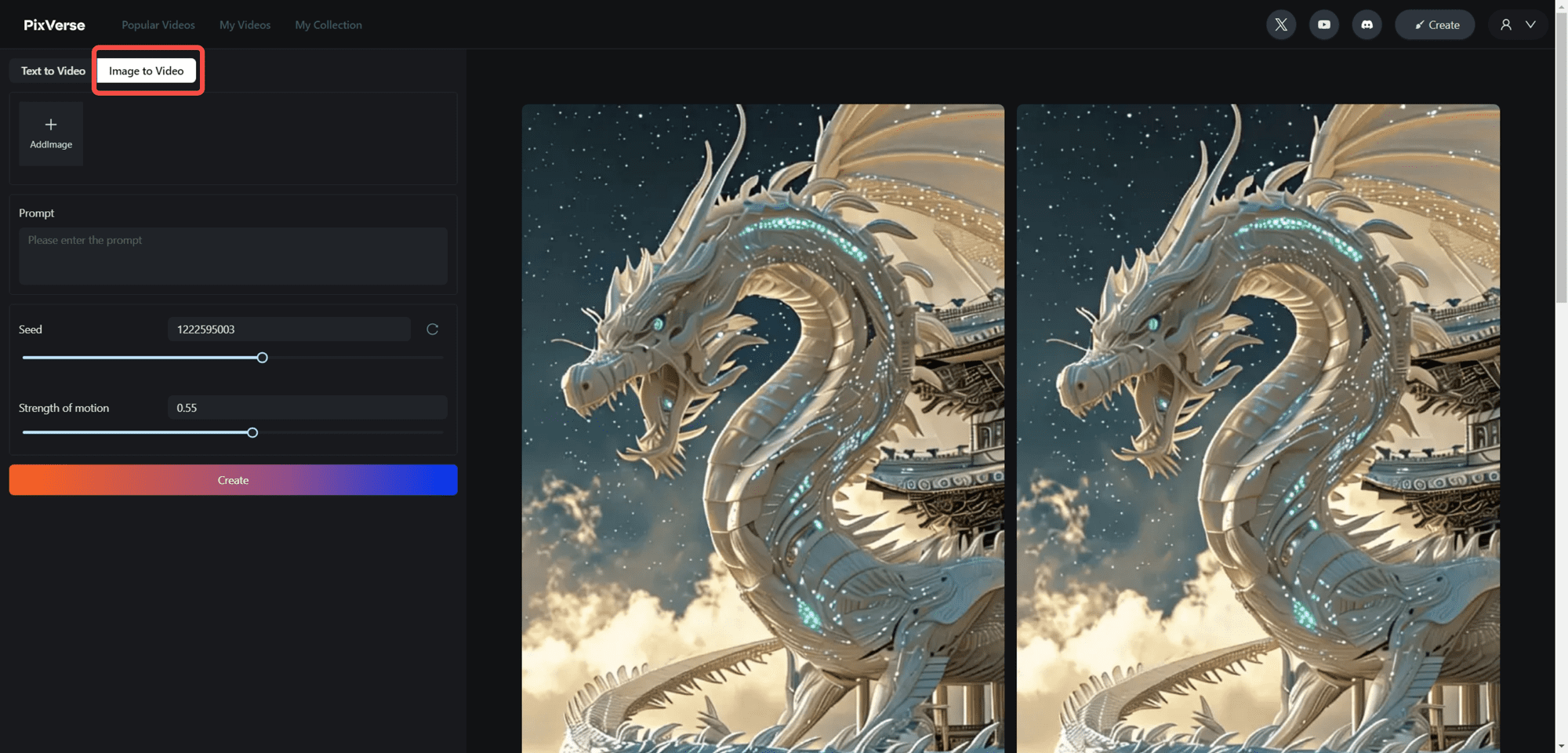

One of Devin's main advantages over Cursor is its ability to use more tools. For example, it can open a browser to search and browse the web, and even analyze the content using LLM intelligence. Cursor doesn't support these features by default, but fortunately there is another closed loop: we control Cursor's prompt directly through .cursorrules, and Cursor can execute commands. We can therefore prepare programs in advance (such as Python libraries or command-line tools) and introduce them to Cursor in .cursorrules, so that Cursor can learn them on the spot and use them to accomplish its tasks.

In fact, the tools themselves can be written in minutes using Cursor. For web browsing, for example, a reference implementation is provided in the Devin.cursorrules project. There are a few technical decisions worth noting, such as using a browser automation tool (e.g., Playwright) rather than Python's requests library, so that JavaScript-heavy websites render correctly. In addition, to communicate better with the LLM and help it understand the page and crawl follow-up content, we don't simply extract the page's text with Beautiful Soup; instead, we convert the page to Markdown following certain rules. This preserves more of the underlying structure (such as class names and hyperlinks) and gives the LLM a better foundation for writing subsequent crawlers.
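A minimal Python sketch of the two pieces described above, under stated assumptions: `fetch_rendered_html` uses Playwright's synchronous API (it needs `pip install playwright` and `playwright install chromium`), and `html_to_markdown` is a hypothetical, deliberately small converter built on the standard library's `html.parser` rather than Beautiful Soup. It keeps headings, list items, and hyperlinks but, unlike the rules the article describes, drops class names for brevity. It is not the Devin.cursorrules reference implementation.

```python
import re
from html.parser import HTMLParser
from typing import List, Optional

HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}
BLOCKS = {"p", "div", "section", "article", "ul", "ol", "tr", "br"}
SKIPPED = {"script", "style", "noscript"}


class MarkdownConverter(HTMLParser):
    """Turn HTML into compact Markdown: headings, list items, paragraphs, links."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: List[str] = []
        self.skip_depth = 0
        self.in_link = False
        self.href: Optional[str] = None
        self.link_text: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIPPED:
            self.skip_depth += 1
        elif tag == "a":
            self.in_link, self.href, self.link_text = True, dict(attrs).get("href"), []
        elif tag in HEADINGS:
            self.parts.append("\n" + "#" * int(tag[1]) + " ")
        elif tag == "li":
            self.parts.append("\n- ")
        elif tag in BLOCKS:
            self.parts.append("\n")

    def handle_endtag(self, tag):
        if tag in SKIPPED:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif tag == "a" and self.in_link:
            text = " ".join("".join(self.link_text).split())
            # Keep the target URL so the LLM can follow or crawl it later.
            self.parts.append(f"[{text}]({self.href})" if self.href else text)
            self.in_link = False
        elif tag in HEADINGS or tag in BLOCKS:
            self.parts.append("\n")

    def handle_data(self, data):
        if self.skip_depth:
            return  # ignore script/style contents
        data = re.sub(r"\s+", " ", data)  # newlines come only from block tags
        (self.link_text if self.in_link else self.parts).append(data)


def html_to_markdown(html: str) -> str:
    converter = MarkdownConverter()
    converter.feed(html)
    converter.close()
    lines = (" ".join(line.split()) for line in "".join(converter.parts).split("\n"))
    return "\n".join(line for line in lines if line)


def fetch_rendered_html(url: str) -> str:
    """Render a JavaScript-heavy page in headless Chromium and return its HTML."""
    from playwright.sync_api import sync_playwright  # imported lazily: optional dependency

    with sync_playwright() as p:
        browser = p.chromium.launch()
        try:
            page = browser.new_page()
            page.goto(url, wait_until="networkidle")
            return page.content()
        finally:
            browser.close()
```

A tool script could chain them as `print(html_to_markdown(fetch_rendered_html(url)))`, which puts the Markdown on stdout where Cursor picks it up. The output is line-oriented rather than fully rendered Markdown, which is easier for an LLM to scan than raw HTML.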

Similarly, there is a small detail for the search tool: the search APIs of both Bing and Google return much lower-quality results than their web interfaces, largely because, for historical reasons, the APIs and the web interfaces are maintained by different teams. DuckDuckGo does not have this problem, so our reference implementation uses DuckDuckGo's free API.
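A search tool along these lines might look like the Python sketch below. It assumes the third-party `duckduckgo_search` package and its `DDGS().text(...)` method, whose results are dicts with `href`, `title`, and `body` keys; the article only says DuckDuckGo's free API is used, and recent releases of that package may ship under the name `ddgs`, so check the version you install. `format_results` is a hypothetical helper that prints results as plain text for Cursor to read from stdout.

```python
from typing import Dict, List


def search(query: str, max_results: int = 10) -> List[Dict[str, str]]:
    """Query DuckDuckGo through the third-party duckduckgo_search package."""
    # Assumption: pip install duckduckgo-search; imported lazily so the
    # formatter below works without the dependency.
    from duckduckgo_search import DDGS

    return list(DDGS().text(query, max_results=max_results))


def format_results(results: List[Dict[str, str]]) -> str:
    """Render results as plain text that Cursor can read from stdout."""
    blocks = []
    for i, result in enumerate(results, start=1):
        blocks.append(
            f"=== Result {i} ===\n"
            f"URL: {result.get('href', '')}\n"
            f"Title: {result.get('title', '')}\n"
            f"Snippet: {result.get('body', '')}"
        )
    return "\n\n".join(blocks)
```

A command-line wrapper would simply call `print(format_results(search(query)))`; Cursor can then pick promising URLs from the output and pass them to the browsing tool.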

Using Cursor's own intelligence for deep analysis is more complicated. On the one hand, Cursor already has some of this capability: with both tools above, when we print a web page's content to stdout, it becomes part of Cursor's prompt to the LLM, so the LLM can analyze that text intelligently. On the other hand, Devin can use the LLM to batch-process relatively large amounts of text, which Cursor cannot. To close this gap, we implemented one more tool. It is very simple: preset an API key on your system, and the tool calls the GPT, Claude, or a local LLM API, giving Cursor the ability to batch-process text with an LLM. In my reference implementation, I used my own local vLLM cluster, but it is easy to change: just remove the base_url line.
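The batch-processing tool can be sketched in Python as follows. Assumptions: the official `openai` package (v1 client) is installed, `OPENAI_API_KEY` is set (a placeholder value is typically enough for an unauthenticated local vLLM server), and the default model name `gpt-4o` is a stand-in. Passing `base_url` points the client at an OpenAI-compatible server such as vLLM; leaving it out corresponds to removing the base_url line. Calling Claude would need Anthropic's own SDK instead. `chunk_text`, `ask_llm`, and `process_in_batches` are hypothetical helpers, not the author's code.

```python
from typing import List, Optional


def chunk_text(text: str, max_chars: int = 8000) -> List[str]:
    """Split text on blank lines into chunks of at most max_chars characters."""
    chunks: List[str] = []
    current = ""
    for para in text.split("\n\n"):
        # A single paragraph longer than the budget is hard-split.
        while len(para) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(para[:max_chars])
            para = para[max_chars:]
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = para
    if current:
        chunks.append(current)
    return chunks


def ask_llm(prompt: str, model: str = "gpt-4o", base_url: Optional[str] = None) -> str:
    """Send one prompt to an OpenAI-compatible chat endpoint."""
    from openai import OpenAI  # pip install openai; the client reads OPENAI_API_KEY

    # base_url targets a self-hosted OpenAI-compatible server (e.g. vLLM);
    # leave it as None to talk to OpenAI directly.
    client = OpenAI(base_url=base_url) if base_url else OpenAI()
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content or ""


def process_in_batches(text: str, instruction: str, max_chars: int = 8000, **llm_kwargs) -> List[str]:
    """Apply the same instruction to every chunk and collect the answers."""
    return [
        ask_llm(f"{instruction}\n\n{chunk}", **llm_kwargs)
        for chunk in chunk_text(text, max_chars)
    ]
```

Chunking on paragraph boundaries keeps each request within the model's context window while avoiding cuts mid-sentence where possible.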

Despite these modifications, there are still two tools that cannot be implemented because of Cursor's limitations:

- Devin appears to have image understanding capabilities, which let it perform front-end interactions and testing. Because of Cursor's limitations, however, we can't pass images as input to the back-end AI; that would require changes to Cursor's implementation.

- Devin is not flagged as a bot by anti-crawling systems when collecting data, whereas our web search tools often run into CAPTCHAs or get blocked. This may be fixable, and I'm still exploring it, but for now it is clearly one of Devin's unique strengths.

Fully automated execution

The last interesting feature is fully automated execution. Because Devin runs in a fully virtualized cloud environment, we can confidently let it execute all kinds of commands without worrying about attacks on the LLM or accidentally running dangerous commands. Even if the entire system is wiped, it can be recovered by simply starting a new container. Cursor, however, runs on the local host system, so security is a serious concern. That's why, in Cursor's Agent mode, we have to manually confirm each command before it runs. This is acceptable for relatively simple tasks, but with sophisticated process planning and the ability to evolve itself, Cursor can now handle long, complex tasks, and this style of interaction no longer fits those capabilities.

I haven't found a Cursor-based solution to this problem yet (Update, December 17, 2024: Cursor has since added this feature, called YOLO mode, but it still doesn't support development in Docker). Windsurf, however, takes this into account. Its design suggests it is aiming for a Devin-style product, with the current code editor as just an intermediate form. Specifically, Windsurf can connect directly to a Docker container and run there, or, given a configuration file, start a new Docker container for us, perform some initialization, and map a local folder into it. As a result, all the commands it executes (apart from changes to the mapped local folder) take place inside the container and have no impact on the host system, which greatly improves security. [Sample configuration]
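Windsurf's Docker support is configured in Windsurf itself, so the following is not its implementation. As a rough illustration of why container execution protects the host, here is a hypothetical Python helper that runs an agent's command with the plain `docker run` CLI, mapping only the project folder into the container. The image name `python:3.12-slim` and the `/workspace` mount point are arbitrary choices.

```python
import subprocess
from pathlib import Path
from typing import List


def build_docker_command(project_dir: str, command: str, image: str = "python:3.12-slim") -> List[str]:
    """Build a `docker run` invocation that only exposes project_dir to the container."""
    host_path = str(Path(project_dir).resolve())
    return [
        "docker", "run",
        "--rm",                           # discard the container afterwards
        "-v", f"{host_path}:/workspace",  # the only host folder the command can touch
        "-w", "/workspace",               # run from inside the mapped folder
        image,
        "sh", "-c", command,
    ]


def run_in_container(project_dir: str, command: str, image: str = "python:3.12-slim") -> subprocess.CompletedProcess:
    """Execute command in a fresh container and capture its output."""
    return subprocess.run(
        build_docker_command(project_dir, command, image),
        capture_output=True,
        text=True,
        check=False,
    )
```

With `--rm`, each command starts from a clean container, so a destructive command can at worst damage the mapped project folder, matching the caveat about local-folder changes.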

On top of that, Windsurf introduces a blacklist/whitelist mechanism: commands on the blacklist are rejected automatically, and commands on the whitelist are allowed. For commands on neither list, the LLM judges whether they pose a risk to the host system. For example, if it wants to delete a file in a folder, it asks the user for confirmation, whereas ordinary commands such as pip install are simply allowed. Note that this feature seems to be enabled only when running in a Docker container. If commands run on the host system, the experience is still similar to Cursor and requires frequent confirmation. Automated command execution also needs to be enabled in the settings.
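The three-way decision can be sketched in Python as follows. The `DENY` and `ALLOW` lists, the `classify` function, and the shell-operator check are hypothetical illustrations, not Windsurf's configuration format; where Windsurf lets the LLM judge commands on neither list, this sketch conservatively falls back to asking the user.

```python
import shlex
from typing import Iterable, List, Tuple

# Hypothetical lists; Windsurf's real lists are configured in its own settings.
DENY: List[Tuple[str, ...]] = [("rm", "-rf"), ("sudo",), ("mkfs",), ("shutdown",)]
ALLOW: List[Tuple[str, ...]] = [("pip", "install"), ("ls",), ("cat",), ("git", "status")]
SHELL_OPERATORS = (";", "&", "|", "`", "$(", ">", "<")


def _starts_with_any(tokens: List[str], prefixes: Iterable[Tuple[str, ...]]) -> bool:
    return any(tuple(tokens[: len(p)]) == p for p in prefixes)


def classify(command: str) -> str:
    """Return 'deny', 'allow', or 'ask' for a shell command."""
    try:
        tokens = shlex.split(command)
    except ValueError:  # unbalanced quotes: don't guess
        return "ask"
    if not tokens:
        return "ask"
    if _starts_with_any(tokens, DENY):
        return "deny"
    # Chained or redirected commands could hide something dangerous,
    # so they are never auto-allowed.
    if any(op in command for op in SHELL_OPERATORS):
        return "ask"
    if _starts_with_any(tokens, ALLOW):
        return "allow"
    # Windsurf hands this case to the LLM; the sketch simply asks the user.
    return "ask"
```

Prefix matching is deliberately naive: `rm -fr build` does not match the `rm -rf` deny entry and falls through to `ask`, which is the safe default. Commands containing shell operators are never auto-allowed, because `pip install x && rm -rf ~` would otherwise pass on its `pip install` prefix.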

Summary

Thus, while Devin's product form and design concepts are indeed very advanced, from a technical standpoint the gap between it and existing Agentic AI tools is not as large as we might think. Using popular tools like Cursor and Windsurf (only $15-20 per month), we can implement 90% of Devin's functionality in less than an hour and use it to accomplish complex tasks that were out of reach before the modifications. For example, I gave Cursor the task of performing an in-depth data analysis of the returns of popular tech stocks over the past five years, and it produced a very detailed and comprehensive report. I also had Windsurf crawl the publication times of the 100 most popular posts on my blog and visualize the data in the style of a GitHub contribution graph, a task it completed fully automatically. Unmodified Cursor and Windsurf cannot handle tasks like these, and previously only Devin could; with a simple modification, we get the results of the $500/month tool from the $20/month tool. I even ran a more ambitious experiment: as a developer completely unfamiliar with front-end development, I built a job board, front end and back end, in an hour and a half. That efficiency is comparable to Devin's, if not higher.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
