The paper shows that RL outperforms SFT at learning generalizable knowledge, especially in multimodal tasks, and yields stronger reasoning and visual recognition abilities.

Overview

In artificial intelligence, foundation models (e.g., large language models and vision-language models) have become a central force driving technological progress. However, effectively enhancing these models' generalization capability, so that they can adapt to varied, complex, and changing real-world scenarios, remains a major challenge. Supervised fine-tuning (SFT) and reinforcement learning (RL) are currently two widely adopted post-training methods, but their specific roles and effects in improving model generalization remain unclear.

This paper presents an in-depth comparative study that systematically explores how SFT and RL affect the generalization ability of foundation models. We focus on two key aspects:

- Textual rule-based generalization: We designed GeneralPoints, an arithmetic reasoning card game, to assess model performance under different rule variants.

- Visual generalization: We adopted V-IRL, a navigation environment based on real-world visual inputs, to test how well the model adapts to changes in visual input.
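Because GeneralPoints checks whether an arithmetic answer is correct, it lends itself to a rule-based outcome reward. The sketch below is a hypothetical verifier for a 24-point style game, not the paper's implementation: the card encoding, the `face_as_10` / `face_as_rank` rule variants, the target of 24, and all function names are illustrative assumptions. It shows how the same equation can be rewarded under one rule variant and rejected under another, which is why rule variants test generalization rather than memorization.

```python
import ast
import operator
from collections import Counter
from fractions import Fraction

# Hypothetical rule variants: how face cards map to numbers.
RULES = {
    "face_as_10": {"J": 10, "Q": 10, "K": 10},
    "face_as_rank": {"J": 11, "Q": 12, "K": 13},
}

# Only the four basic arithmetic operators are allowed.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def card_value(card: str, rule: str) -> int:
    """Map a card label ("A", "2".."10", "J", "Q", "K") to a number under a rule variant."""
    if card in RULES[rule]:
        return RULES[rule][card]
    return 1 if card == "A" else int(card)


def _evaluate(node: ast.AST, used: list) -> Fraction:
    """Exactly evaluate +, -, *, / over integer literals, recording each literal used."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body, used)
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        left = _evaluate(node.left, used)
        right = _evaluate(node.right, used)
        return _OPS[type(node.op)](left, right)
    if isinstance(node, ast.Constant) and type(node.value) is int:
        used.append(node.value)
        return Fraction(node.value)
    raise ValueError("unsupported expression")


def outcome_reward(cards, formula: str, rule: str, target: int = 24) -> float:
    """Return 1.0 if `formula` uses each card's value exactly once and equals `target`, else 0.0."""
    used: list = []
    try:
        value = _evaluate(ast.parse(formula, mode="eval"), used)
    except (SyntaxError, ValueError, ZeroDivisionError):
        return 0.0
    if Counter(used) != Counter(card_value(c, rule) for c in cards):
        return 0.0
    return 1.0 if value == target else 0.0


cards = ["J", "3", "2", "A"]
print(outcome_reward(cards, "10*2+3+1", "face_as_10"))    # 1.0
print(outcome_reward(cards, "10*2+3+1", "face_as_rank"))  # 0.0 (J must count as 11)
print(outcome_reward(cards, "11*2+3-1", "face_as_rank"))  # 1.0
```

A model that has only memorized "J means 10" keeps producing the first equation and scores zero once the rule changes.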

Through a series of rigorous experiments and analyses, we reached the following conclusions:

- RL outperforms SFT in both rule and visual generalization: RL learns and applies new rules effectively while maintaining good performance under visual input variations. In contrast, SFT tends to memorize training data and struggles to adapt to unseen variants.

- RL improves visual recognition: In vision-language models (VLMs), RL not only improves reasoning but also enhances visual recognition, whereas SFT degrades visual recognition.

- SFT is critical to RL training: When the backbone model lacks strong instruction-following capability, SFT is a key factor in the success of RL training. It stabilizes the model's output format so that RL can then deliver its performance gains.

- Scaling up the number of verification iterations improves RL generalization: In RL training, increasing the number of verification iterations further improves the model's generalization ability.

These findings provide valuable insights for future AI research and applications, showing that RL has greater potential in complex multimodal tasks. Our study not only reveals the distinct roles of SFT and RL but also offers new ideas on how to combine the two approaches more effectively to build more capable and reliable foundation models.

Whether you are an AI researcher, an engineer, or simply interested in the future of artificial intelligence, this paper offers insights and practical guidance. Let's take a closer look at SFT and RL and uncover the critical path to generalization in foundation models.

Original paper: https://tianzhechu.com/SFTvsRL/assets/sftvsrl_paper.pdf

Speed reading

1. RL outperforms SFT in rule generalization

Conclusion:

RL is able to efficiently learn and generalize text-based rules, whereas SFT tends to memorize training data and has difficulty adapting to unseen rule variants.

Example:

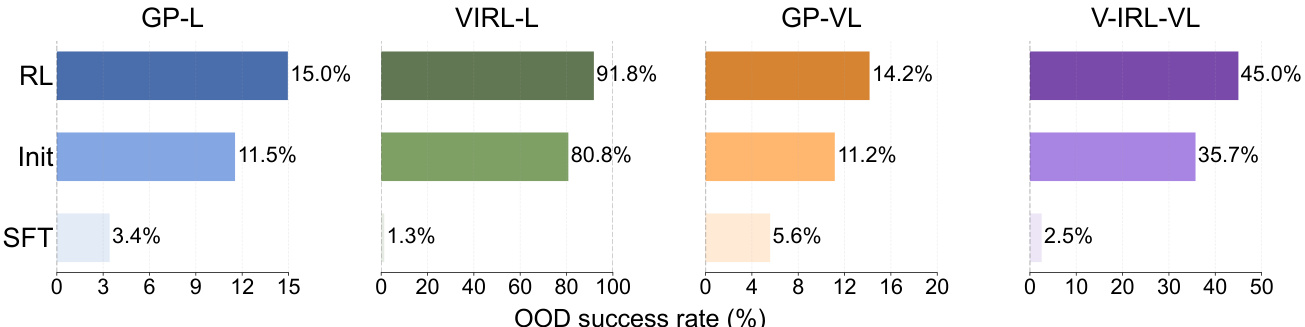

RL outperforms SFT in out-of-distribution (OOD) performance in both GeneralPoints and V-IRL tasks.

- GeneralPoints (GP-L):

  - RL: success rate of 15.0%, up from 11.5% at the initial checkpoint (+3.5%).

  - SFT: success rate of 3.4%, down from 11.5% at the initial checkpoint (-8.1%).

- V-IRL (V-IRL-L):

  - RL: per-step accuracy of 91.8%, up from 80.8% at the initial checkpoint (+11.0%).

  - SFT: per-step accuracy of 1.3%, down from 80.8% at the initial checkpoint (-79.5%).
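The changes in parentheses are absolute differences in percentage points between the trained model and the shared initial checkpoint, not relative changes. A short check using the numbers reported in this post (the helper names are illustrative):

```python
import math

# (initial checkpoint %, after post-training %) as reported above.
RESULTS = {
    ("GP-L", "RL"): (11.5, 15.0),
    ("GP-L", "SFT"): (11.5, 3.4),
    ("V-IRL-L", "RL"): (80.8, 91.8),
    ("V-IRL-L", "SFT"): (80.8, 1.3),
}


def point_change(init: float, final: float) -> float:
    """Absolute change in percentage points (what the +/- figures above report)."""
    return round(final - init, 1)


def relative_change(init: float, final: float) -> float:
    """Relative change as a percent of the initial value, for comparison."""
    return (final - init) / init * 100


for (task, method), (init, final) in RESULTS.items():
    print(f"{task:8} {method:4} {point_change(init, final):+6.1f} pp "
          f"{relative_change(init, final):+7.1f}% relative")
```

For GP-L, RL's gain of 3.5 points corresponds to roughly a 30% relative improvement over the 11.5% baseline, which is why the two readings should not be mixed.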

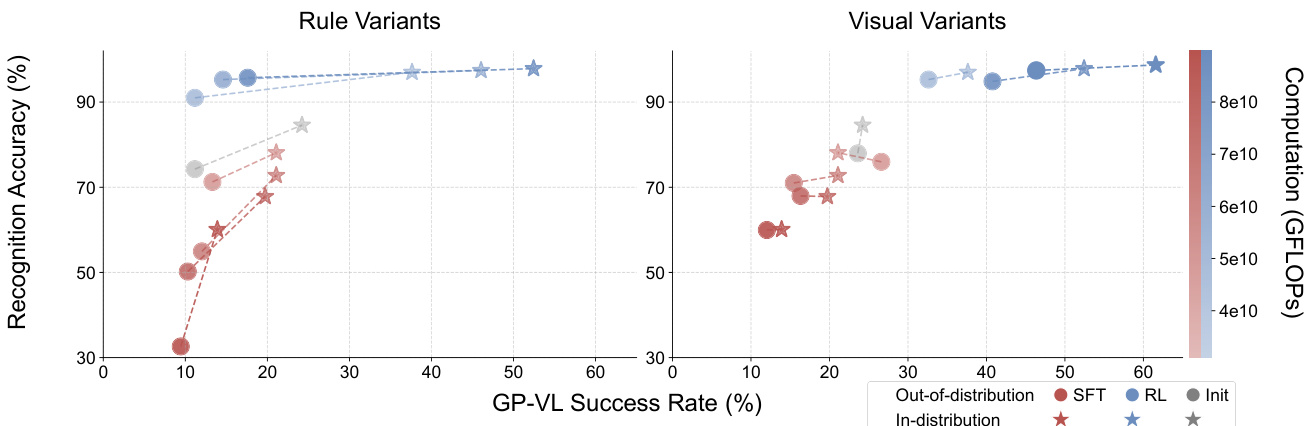

Figure 6: In each subplot, RL and SFT are trained with the same amount of compute, and their shared initial checkpoint (labeled Init) serves as the baseline. See Appendix C.3 for detailed settings.

2. RL also generalizes in visual OOD tasks, while SFT performs poorly

Conclusion:

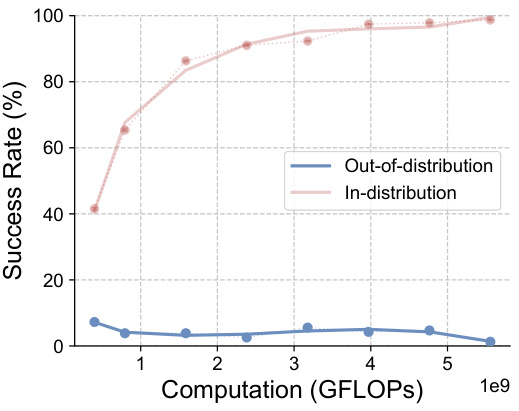

Even in tasks containing visual modalities, RL is still able to generalize to unseen visual variants, whereas SFT suffers performance degradation.

Example:

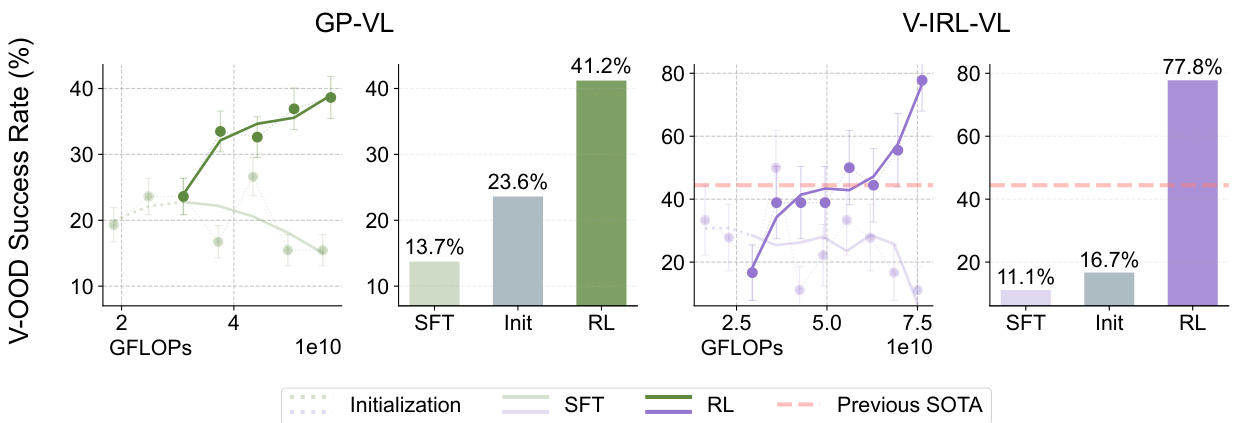

In the GeneralPoints-VL and V-IRL-VL tasks:

- GeneralPoints-VL (GP-VL):

  - RL: success rate of 41.2%, up from 23.6% at the initial checkpoint (+17.6%).

  - SFT: success rate of 13.7%, down from 23.6% at the initial checkpoint (-9.9%).

- V-IRL (V-IRL-VL):

  - RL: per-step accuracy of 77.8%, up from 16.7% at the initial checkpoint (+61.1%).

  - SFT: per-step accuracy of 11.1%, down from 16.7% at the initial checkpoint (-5.6%).

Figure 7: Similar to Figures 5 and 6, we show the performance dynamics (lines) and final performance (bars) evaluated out of distribution on visual variants. The previous state of the art on the small V-IRL VLN benchmark (Yang et al., 2024a) is marked in orange. See Appendix C.3 for the detailed evaluation setup (and curve smoothing).

3. RL improves visual recognition of VLMs

Conclusion:

RL not only improves the model's reasoning but also enhances its visual recognition, whereas SFT degrades visual recognition.

Example:

In the GeneralPoints-VL task:

- RL: As training compute increases, both visual recognition accuracy and overall success rate improve.

- SFT: As training compute increases, both visual recognition accuracy and overall success rate decrease.
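Figure 8 plots two metrics against each other. A minimal sketch of how they could be computed from evaluation episodes, assuming each episode records the ground-truth cards, the cards the model reports seeing, and whether the verifier accepted its answer; the `Episode` fields and the exact-match definition of recognition are assumptions, and the paper's precise definitions may differ:

```python
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass
class Episode:
    true_cards: List[str]       # cards actually shown in the image
    predicted_cards: List[str]  # cards the model says it recognized (hypothetical field)
    solved: bool                # whether the verifier accepted the final equation


def recognition_rate(episodes: List[Episode]) -> float:
    """Fraction of episodes in which every card was recognized (order-insensitive)."""
    hits = sum(Counter(e.true_cards) == Counter(e.predicted_cards) for e in episodes)
    return hits / len(episodes)


def success_rate(episodes: List[Episode]) -> float:
    """Fraction of episodes the verifier marked as solved."""
    return sum(e.solved for e in episodes) / len(episodes)


episodes = [
    Episode(["J", "3", "2", "A"], ["J", "3", "2", "A"], True),
    Episode(["K", "5", "5", "2"], ["K", "5", "6", "2"], False),  # misread one card
    Episode(["Q", "4", "2", "A"], ["Q", "4", "2", "A"], False),  # read cards, wrong math
    Episode(["7", "7", "3", "3"], ["7", "7", "3", "3"], True),
]
print(recognition_rate(episodes), success_rate(episodes))  # 0.75 0.5
```

Tracking both numbers per checkpoint separates a policy that reads the cards but miscalculates from one that never reads them correctly.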

Figure 8: Recognition rate vs. success rate for reinforcement learning (RL) and supervised fine-tuning (SFT) on different variants of GP-VL. The plots show recognition rate (y-axis) against episode success rate (x-axis) for in-distribution data (red) and out-of-distribution data (blue). The transparency of each data point (see color bar) indicates the amount of training compute. Each (⋆-◦) pair connected by a line is evaluated with the same checkpoint. As post-training compute increases, RL improves in both recognition rate and overall accuracy, while SFT shows the opposite trend.

4. SFT is necessary for RL training

Conclusion:

SFT is necessary for RL training when the backbone model does not follow instructions well.

Example:

Every experiment that applied end-to-end RL directly to post-train Llama-3.2, without SFT initialization, failed.

- Failure case study:

  - The model generates lengthy, digressive, and unstructured responses from which the information and rewards needed for RL training cannot be retrieved.

  - For example, the model tries to solve the 24-point game by writing code but never finishes generating it, so verification fails.
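This failure mode is a formatting problem before it is a reasoning problem: a rule-based verifier can only score a response it can parse. A minimal sketch, assuming (hypothetically) that the prompt asks for a JSON object with a `formula` field; a response that starts writing code instead never yields a formula to check, so it earns no reward signal:

```python
import json
from typing import Optional


def extract_formula(response: str) -> Optional[str]:
    """Return the `formula` string from a JSON-object response, or None if the format is wrong."""
    try:
        obj = json.loads(response)
    except json.JSONDecodeError:
        return None  # free-form text, truncated code, etc.
    if not isinstance(obj, dict):
        return None
    formula = obj.get("formula")
    return formula if isinstance(formula, str) else None


well_formatted = '{"cards": ["J", "3", "2", "A"], "formula": "10*2+3+1"}'
rambling = "To solve this, let's write a Python program:\ndef solve(cards):\n    for"
print(extract_formula(well_formatted))  # 10*2+3+1
print(extract_formula(rambling))        # None
```

SFT on well-formatted examples makes the first kind of response the norm, which is consistent with the stabilizing role the post attributes to SFT.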

Figure 20: We recorded model responses using a prompt similar to the one shown in Figure 11. The results show that Llama-3.2-Vision-11B fails to follow instructions correctly. We omit the lengthy response, which attempted to solve the puzzle with code but could not finish within the limited context length.

5. Scaling up the number of verification iterations improves the generalization ability of RL

Conclusion:

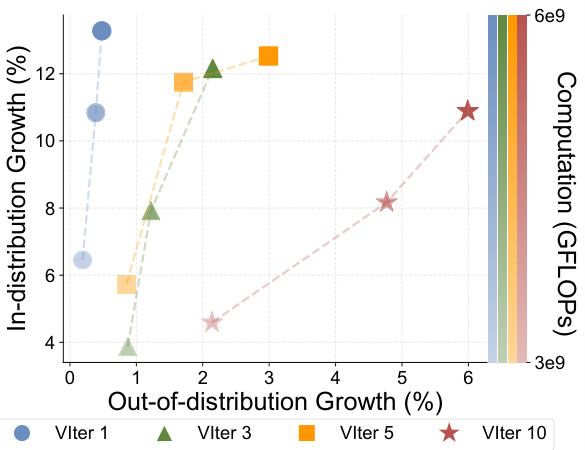

In RL training, increasing the number of verification iterations improves the model's generalization.

Example:

In the GeneralPoints-Language (GP-L) task:

- 1 verification iteration: OOD performance improves by only 0.48%.

- 3 verification iterations: OOD performance improves by 2.15%.

- 5 verification iterations: OOD performance improves by 2.99%.

- 10 verification iterations: OOD performance improves by 5.99%.
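A verification iteration can be read as one round in which the model proposes an answer, a verifier checks it, and the verifier's feedback is appended to the context before the next attempt. The sketch below is a simplified illustration of that loop under stated assumptions: `policy` and `verifier` are hypothetical callables, the toy policy is scripted rather than learned, and the actual training setup (how feedback is phrased, how rewards are assigned across attempts) may differ.

```python
from typing import Callable, List, Tuple

Policy = Callable[[str], str]
Verifier = Callable[[str], Tuple[float, str]]


def rollout(policy: Policy, verifier: Verifier, prompt: str,
            max_verify_iters: int) -> Tuple[float, int]:
    """Run up to `max_verify_iters` answer/verify rounds; return (final reward, attempts used)."""
    if max_verify_iters < 1:
        raise ValueError("need at least one verification iteration")
    context: List[str] = [prompt]
    for attempt in range(1, max_verify_iters + 1):
        answer = policy("\n".join(context))
        reward, feedback = verifier(answer)
        if reward > 0:
            return reward, attempt
        context += [answer, feedback]  # the model sees its mistake on the next try
    return reward, max_verify_iters


def toy_verifier(answer: str) -> Tuple[float, str]:
    """Hypothetical verifier: reward 1 only for the correct target value."""
    return (1.0, "Correct.") if answer == "24" else (0.0, "Incorrect, try again.")


def toy_policy(context: str) -> str:
    """Scripted stand-in for a model that only succeeds after three rounds of feedback."""
    return "24" if context.count("Incorrect") >= 3 else "23"


prompt = "Use the cards J, 3, 2, A to make 24."
for n in (1, 3, 5):
    print(n, rollout(toy_policy, toy_verifier, prompt, n))
# 1 (0.0, 1)
# 3 (0.0, 3)
# 5 (1.0, 4)
```

With a larger budget, a rollout has more chances to turn feedback into a rewarded answer, giving RL more learning signal per prompt; this is one intuition for the trend above, not a mechanism the post spells out.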

Figure 10: We ran RL experiments with different numbers of verification iterations (VIter) as a way to scale up training compute (shown by color transparency).

6. SFT overfits to reasoning tokens and neglects recognition tokens

Conclusion:

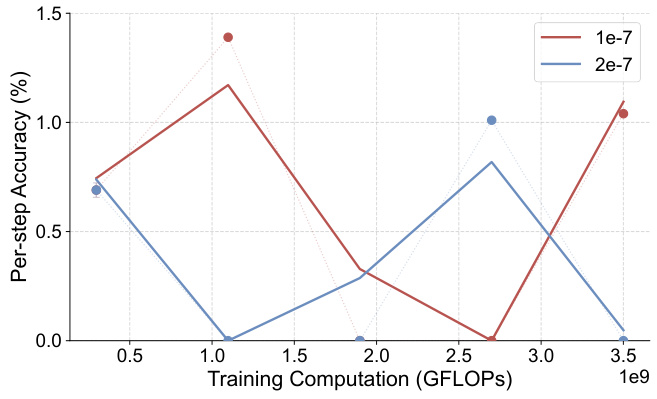

SFT tends to overfit to reasoning tokens and pay too little attention to recognition tokens, possibly because reasoning tokens occur far more frequently.
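One way to see why token frequency could matter: standard SFT minimizes cross-entropy averaged over all target tokens, so each token carries weight 1/N and a segment's total weight in the objective grows with its length. The token counts below are hypothetical, chosen only to illustrate a target response whose short card-recognition part is followed by a much longer reasoning chain:

```python
from typing import Dict


def objective_weight(segment_tokens: Dict[str, int]) -> Dict[str, float]:
    """Share of the token-averaged SFT objective attributable to each segment of the target.

    With loss = (1/N) * sum of per-token losses, each token has weight 1/N, so a
    segment with n tokens accounts for n/N of the total weight.
    """
    total = sum(segment_tokens.values())
    return {name: n / total for name, n in segment_tokens.items()}


# Hypothetical token counts for one GeneralPoints-VL target response.
weights = objective_weight({"recognition": 20, "reasoning": 180})
print(weights)  # {'recognition': 0.1, 'reasoning': 0.9}
```

Under these assumed counts, most of the objective's weight sits on reasoning tokens, leaving comparatively little pressure on the recognition tokens.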

Example:

In the GeneralPoints-VL task, SFT could not reach in-distribution performance comparable to RL, even after hyperparameter tuning.

- SFT ablation study:

  - After tuning the learning rate and other adjustable components, no SFT run exceeded a 30% success rate, and none showed an increasing trend.

Figure 16: Ablation study of GeneralPoints-VL SFT. We ablate the learning rate and report the in-distribution episode success rate (%) for all experiments. No experiment exceeded a 30% success rate, and none showed an increasing trend.

7. RL cannot recover OOD performance from overfitted checkpoints

Conclusion:

When initialized from an overfitted checkpoint, RL was unable to recover the model's OOD performance.

Example:

In the V-IRL-VL task:

- RL initialized from an overfitted checkpoint:

  - The initial per-step accuracy is below 1%, and RL cannot improve OOD performance.

Figure 19: Out-of-distribution per-step accuracy (%) vs. GFLOPs: V-IRL-VL under rule variants (using an overfitted initial checkpoint). See Appendix C.3 for details of the evaluation metrics.

Summary

Through a series of experiments and analyses, the paper demonstrates RL's advantage in learning generalizable knowledge and SFT's tendency to memorize training data. It also highlights the importance of SFT for RL training and the positive effect of scaling up verification iterations on RL generalization. These findings provide valuable insights for building more robust and reliable foundation models.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
