Private Deployment of DeepSeek-R1 32B without a Local GPU

For everyday office use, the official DeepSeek-R1 website is the best choice. If you have other concerns or special requirements and must run the model locally, see Local deployment of DeepSeek-R1 (with one-click installer), a good tutorial for beginners.

If your computer is underpowered but you still want a private DeepSeek-R1 deployment, consider using a free GPU. An earlier tutorial, Deploying DeepSeek-R1 Open Source Models Online with Free GPU Computing Power, covered this. That approach has one serious drawback: the free GPU can only comfortably run the 14B model, and 32B runs very sluggishly on it. In testing, however, only DeepSeek-R1 32B or larger produced output good enough for daily work.

So the plan here is to install a quantized version of DeepSeek-R1 32B that runs efficiently on the free GPU. Here it comes!

On the free GPU this setup outputs about 2 to 6 words per second (the speed fluctuates with the complexity of the question). The drawback of this method is that you need to restart the service from time to time.

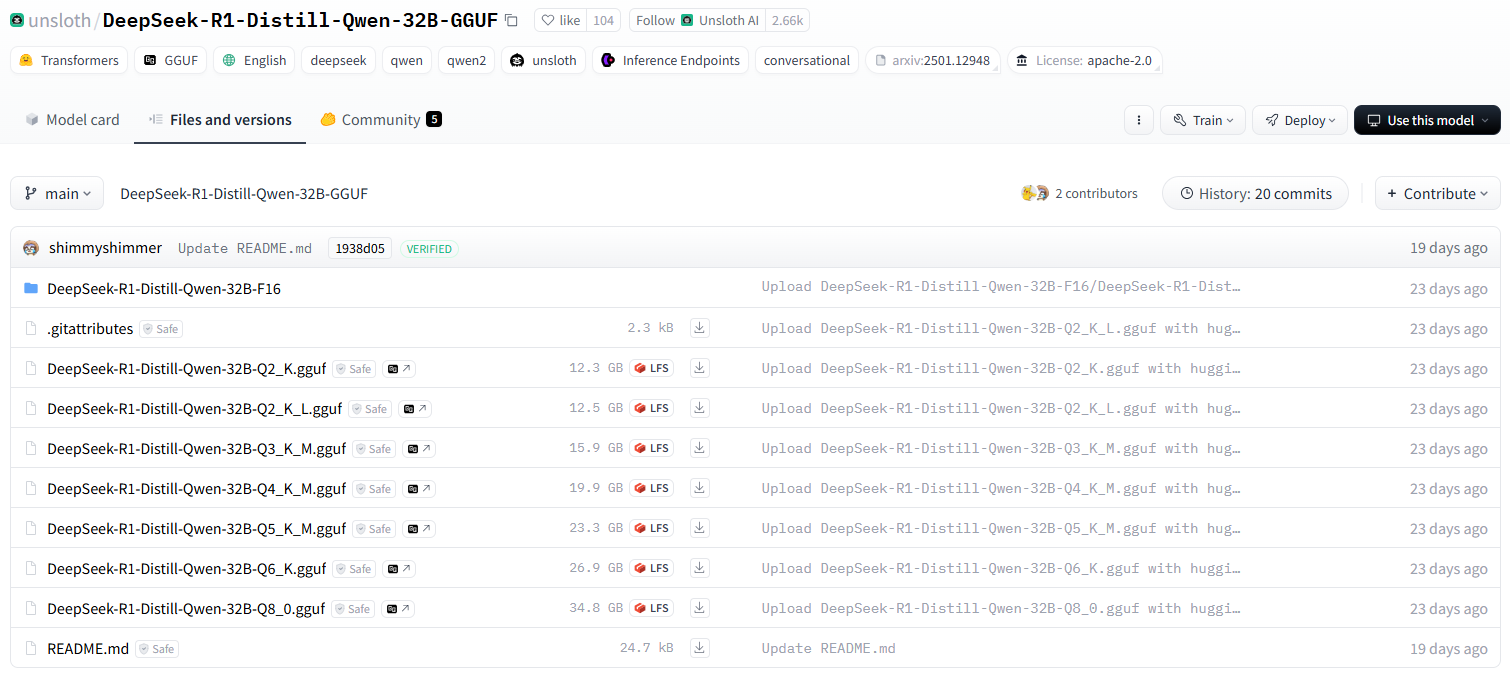

The unsloth team's quantized DeepSeek-R1

The Qwen-32B-Q4_K_M version supplied by unsloth is compressed down to 20GB, which is small enough to run on a single consumer-grade card.

A brief summary of the hardware requirements for the quantized versions

DeepSeek-R1-Distill-Qwen-32B-GGUF: description of the different quantized versions of the model

The suffix of each file (e.g. Q2_K_L, Q4_K_M, etc.) represents a different quantization. Here are their main differences:

Q2_K_L, Q3_K_M, Q4_K_M, Q5_K_M, Q6_K, Q8_0

Q2, Q3, Q4, Q5, Q6, and Q8 indicate the number of bits used for quantization (e.g. Q4 indicates 4-bit quantization). The K and M parts denote the quantization strategy and precision level: K marks llama.cpp's k-quant family, and a trailing S, M, or L marks a small, medium, or large variant. Q8_0 is 8-bit quantization, close to FP16 precision, with the highest computational requirements among the quantized versions but better inference quality.

DeepSeek-R1-Distill-Qwen-32B-F16

F16 indicates 16-bit floating point (FP16). It is the unquantized model, which has the highest accuracy but uses the most video memory.

Learn more about the concept of quantization here: What is Model Quantization: FP32, FP16, INT8, INT4 Data Types Explained
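As a rough sanity check on these sizes, weight storage can be approximated as parameter count × bits per weight ÷ 8. The sketch below is an estimate under stated assumptions, not a measurement: it assumes about 32 billion parameters and uses the nominal bit width from each suffix. Real K-quant files mix precisions and carry metadata, so they come out somewhat larger (the Q4_K_M file is about 20GB rather than the nominal 16GB). The function and table names are made up for this illustration.

```python
# Rough estimate of weight storage: parameters x bits per weight / 8.
# Assumptions (not taken from the model card): ~32e9 parameters, and the
# nominal bit width from the file suffix. Real GGUF files are somewhat larger.

PARAMS_BILLION = 32  # assumed parameter count, in billions

NOMINAL_BITS = {
    "Q2_K_L": 2,
    "Q3_K_M": 3,
    "Q4_K_M": 4,
    "Q5_K_M": 5,
    "Q6_K": 6,
    "Q8_0": 8,
    "F16": 16,
}


def estimate_size_gb(quant: str, params_billion: float = PARAMS_BILLION) -> float:
    """Return the nominal weight size in GB (1 GB = 1e9 bytes)."""
    bits = NOMINAL_BITS[quant]
    # billions of parameters * bits / 8 bits-per-byte = billions of bytes = GB
    return params_billion * bits / 8


for name in NOMINAL_BITS:
    print(f"{name:8s} ~{estimate_size_gb(name):.0f} GB (nominal)")
```

The F16 figure from this estimate (64GB) lines up with the "~60GB+" storage requirement mentioned for the unquantized model.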

How do I choose the right version?

- Low video memory devices (e.g. consumer GPUs) → select Q4 or Q5 quantization, e.g. Q4_K_M or Q5_K_M, balancing performance and accuracy.
- Extremely low video memory devices (e.g. CPU-only operation) → select Q2 or Q3 quantization, e.g. Q2_K_L or Q3_K_M, reducing the memory footprint.
- High-performance GPU servers → select Q6 or Q8 quantization, e.g. Q6_K or Q8_0, for better inference quality.
- Best quality → select the F16 version, which requires a large amount of storage (~60GB+).
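The selection guidance above can be written down as a simple lookup table. This is only a sketch: the device-class keys and the `recommend` helper are hypothetical labels chosen for this example, and the values are just the versions named in the list.

```python
# Lookup table mirroring the selection guidance above.
# The device-class keys are hypothetical labels invented for this sketch.
RECOMMENDED = {
    "cpu_or_very_low_vram": ["Q2_K_L", "Q3_K_M"],
    "consumer_gpu": ["Q4_K_M", "Q5_K_M"],
    "gpu_server": ["Q6_K", "Q8_0"],
    "best_quality": ["F16"],
}


def recommend(device_class: str) -> list[str]:
    """Return the quantized versions suggested for a device class."""
    try:
        return RECOMMENDED[device_class]
    except KeyError:
        raise ValueError(
            f"unknown device class {device_class!r}; "
            f"expected one of {sorted(RECOMMENDED)}"
        ) from None


print(recommend("consumer_gpu"))
```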

Recommended version to install on the free GPU

Q2_K_L

Start installing DeepSeek-R1 32B

The steps from obtaining a free GPU through installing Ollama are skipped here; for those, read Deploying DeepSeek-R1 Open Source Models Online with Free GPU Computing Power. The only difference from that earlier tutorial is a slight change in the install command.

We go straight to installing a specific quantized version in Ollama. Thankfully, Ollama has simplified the whole process so that there is only one install command to learn.

1. install huggingface quantitative versioning model base command format

Remember the following installation command format

ollama run hf.co/{username}:{reponame}

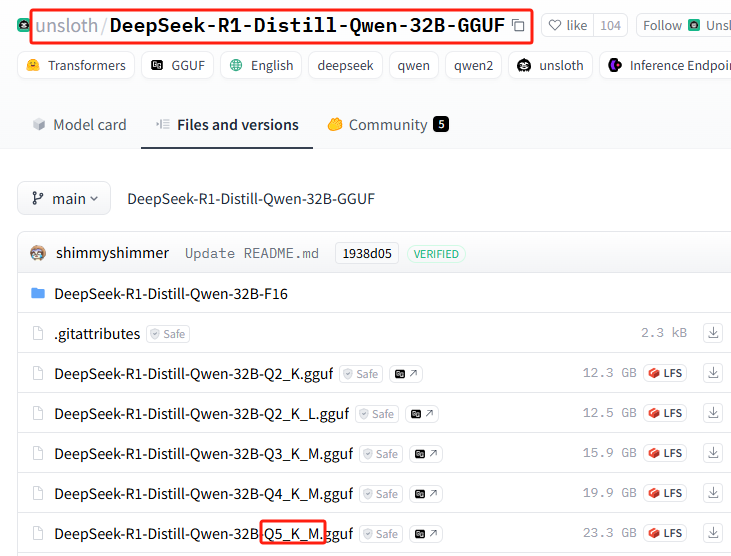

2. Selecting the quantization version

List of all quantized versions: https://huggingface.co/unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF/tree/main

This installation uses: Q5_K_M (for demo only, theAgain, please install the free GPU recommended version!(Q5 requires 23G of hard disk space for actual installation)

3. Splice Installation Command

{username}=unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF

{reponame}=Q5_K_M

Splice to get the full install command:ollama run hf.co/unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF:Q5_K_M
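The assembly step can be sketched as a small helper, which is handy if you want to try several quantized versions. The function name and the parameter names `repo` and `quant` are made up for this example; the output is simply the command string shown above.

```python
def build_install_command(repo: str, quant: str, host: str = "hf.co") -> str:
    """Assemble the `ollama run` command for a Hugging Face GGUF repository.

    repo  -- "owner/repository", e.g. "unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF"
    quant -- quantization tag, e.g. "Q5_K_M"
    """
    if "/" not in repo:
        raise ValueError("repo must look like 'owner/repository'")
    if not quant:
        raise ValueError("quant must not be empty")
    return f"ollama run {host}/{repo}:{quant}"


command = build_install_command("unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF", "Q5_K_M")
print(command)
```

Swapping `"Q5_K_M"` for `"Q2_K_L"` gives the command for the version recommended for the free GPU.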

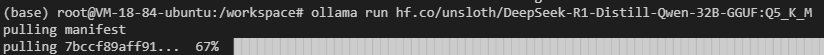

4. Execute the installation in Ollama

Execute the installation command

You may hit network failures (it comes down to luck); if so, repeat the install command a few more times.

Still not working? Try the following command, which switches to the mirror address hosted in China:

ollama run https://hf-mirror.com/unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF:Q5_K_M
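The "retry a few times, then switch to the mirror" routine can be automated. The following is a minimal sketch, not a tested installer: the helper names, the retry count, and the delay are assumptions made for this example, and the two model addresses are the ones used in this article. Note that `ollama run` drops into an interactive chat once the download succeeds, so the helper only returns after you leave that session.

```python
import subprocess
import time
from typing import Callable, Optional, Sequence

# Addresses taken from the commands in this article.
OFFICIAL = "hf.co/unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF:Q5_K_M"
MIRROR = "https://hf-mirror.com/unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF:Q5_K_M"


def run_ollama(model: str) -> bool:
    """Run `ollama run <model>` and report whether it exited successfully."""
    try:
        return subprocess.run(["ollama", "run", model]).returncode == 0
    except FileNotFoundError:
        # ollama is not on PATH; treat this as a failed attempt.
        return False


def install_with_fallback(
    models: Sequence[str],
    attempts: int = 3,        # assumed retry count per address
    delay_s: float = 5.0,     # assumed pause after a failed attempt
    runner: Callable[[str], bool] = run_ollama,
) -> Optional[str]:
    """Try each address in order, retrying each one; return the one that worked."""
    for model in models:
        for _ in range(attempts):
            if runner(model):
                return model
            time.sleep(delay_s)
    return None
```

Calling `install_with_fallback([OFFICIAL, MIRROR])` tries the official address three times before moving on to the mirror, and returns `None` if both fail.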

Why not use the mirror address from the start, instead of trying the official address first and then the mirror?

Because the official, integrated installation is faster!

Of course, you may not need this quantized version. Here is a recently popular uncensored version:

ollama run huihui_ai/deepseek-r1-abliterated:14b

5. Make Ollama accessible externally

Confirm the Ollama port by entering the following command in the terminal:

ollama serve

The port will be 11414 or 6399.

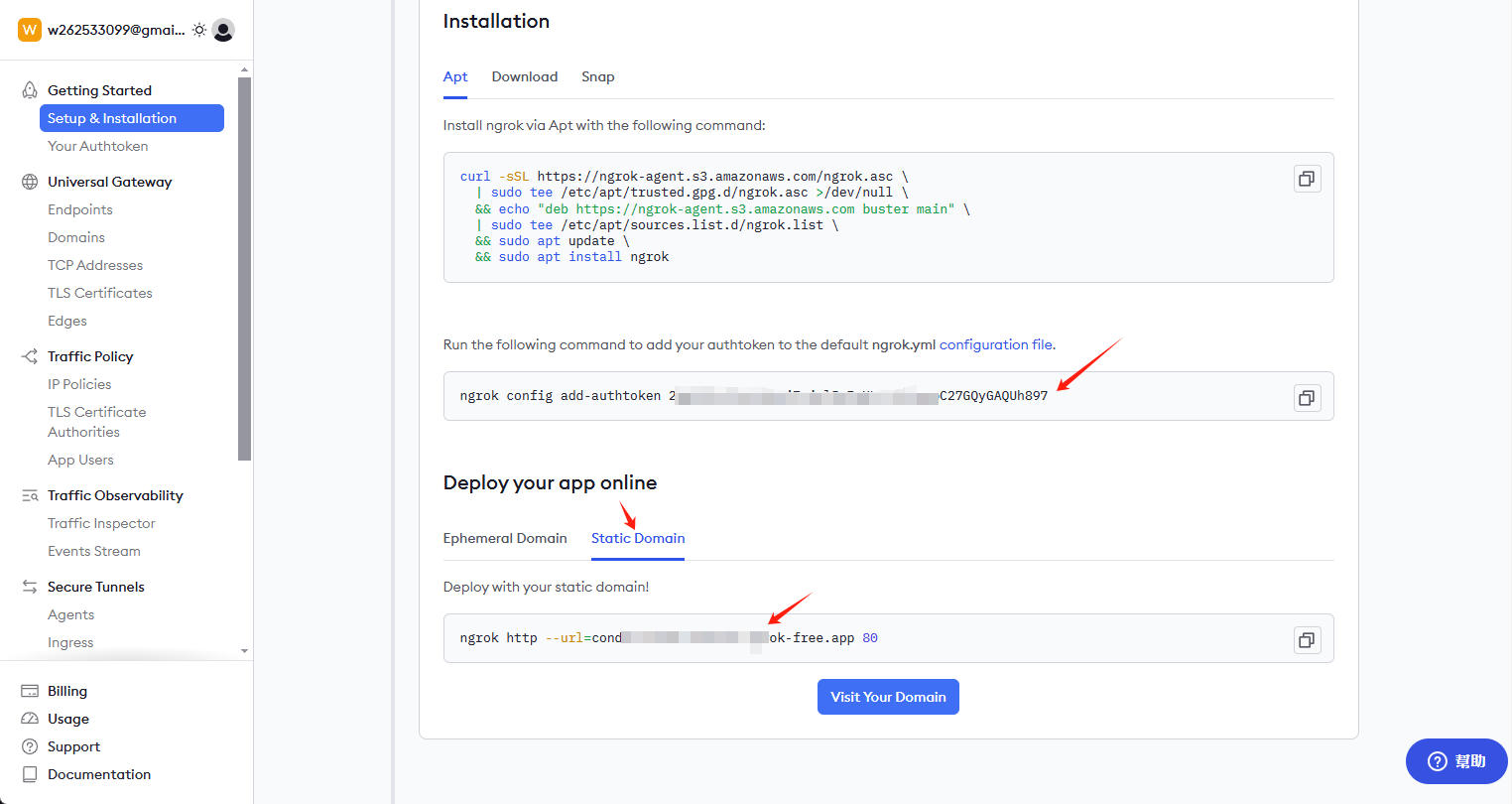

Install ngrok

curl -sSL https://ngrok-agent.s3.amazonaws.com/ngrok.asc \
  | tee /etc/apt/trusted.gpg.d/ngrok.asc >/dev/null \
  && echo "deb https://ngrok-agent.s3.amazonaws.com buster main" \
  | tee /etc/apt/sources.list.d/ngrok.list \
  && apt update \
  && apt install ngrok

Get the key and permanent link

Visit ngrok.com to sign up for an account, then go to the homepage to get the key and permanent link.

Install the key and enable the external access address

Enter the following command in the terminal:

ngrok config add-authtoken YOUR_OWN_KEY

Then enter the following command to open external access. The port 6399 may differ for each user, so check yours and change it as needed:

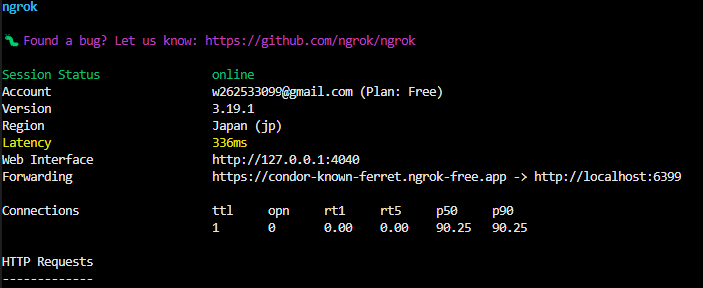

ngrok http --url=condor-known-ferret.ngrok-free.app 6399

On success, the access address is shown in your terminal.

https://condor-known-ferret.ngrok-free.app is the access address of the model interface. When you open it, you will see the following content.

utilization

Obtained from https://condor-known-ferret.ngrok-free.app如何使用?

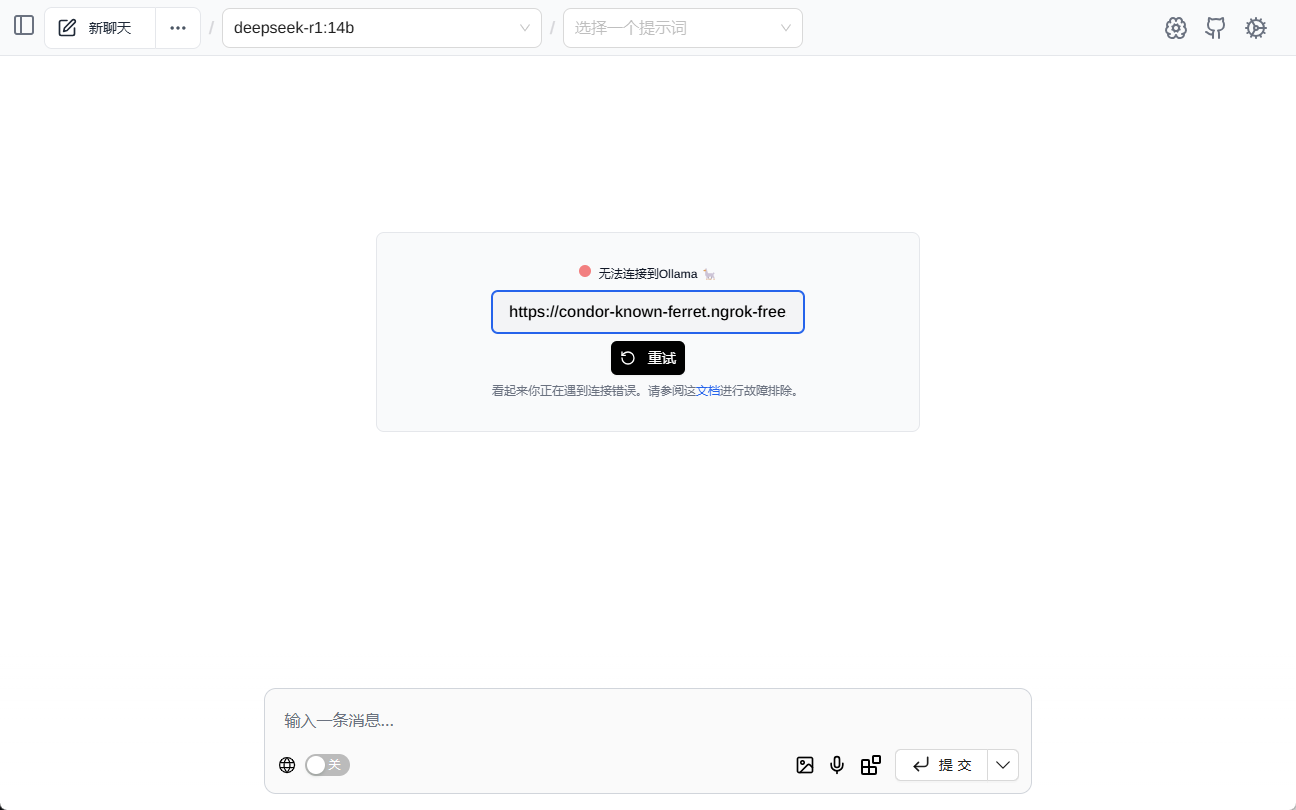

The easiest way to do this is to install Page Assist Used together, the tool is a browser plugin that installs itself.

configure

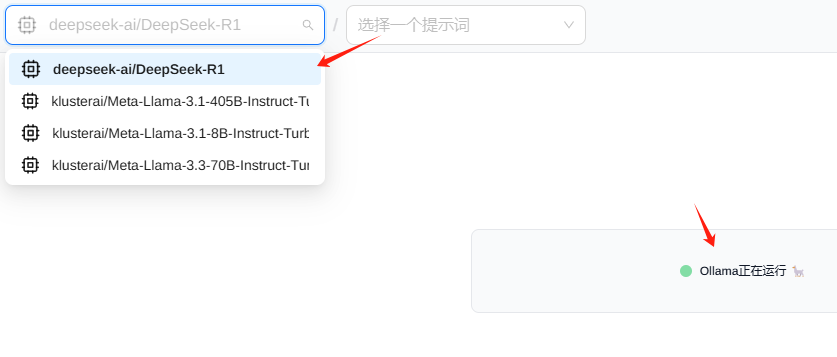

show (a ticket) Page Assist After that, you will see the following interface, please fill in the interface address

Normally you will see the model loaded out automatically

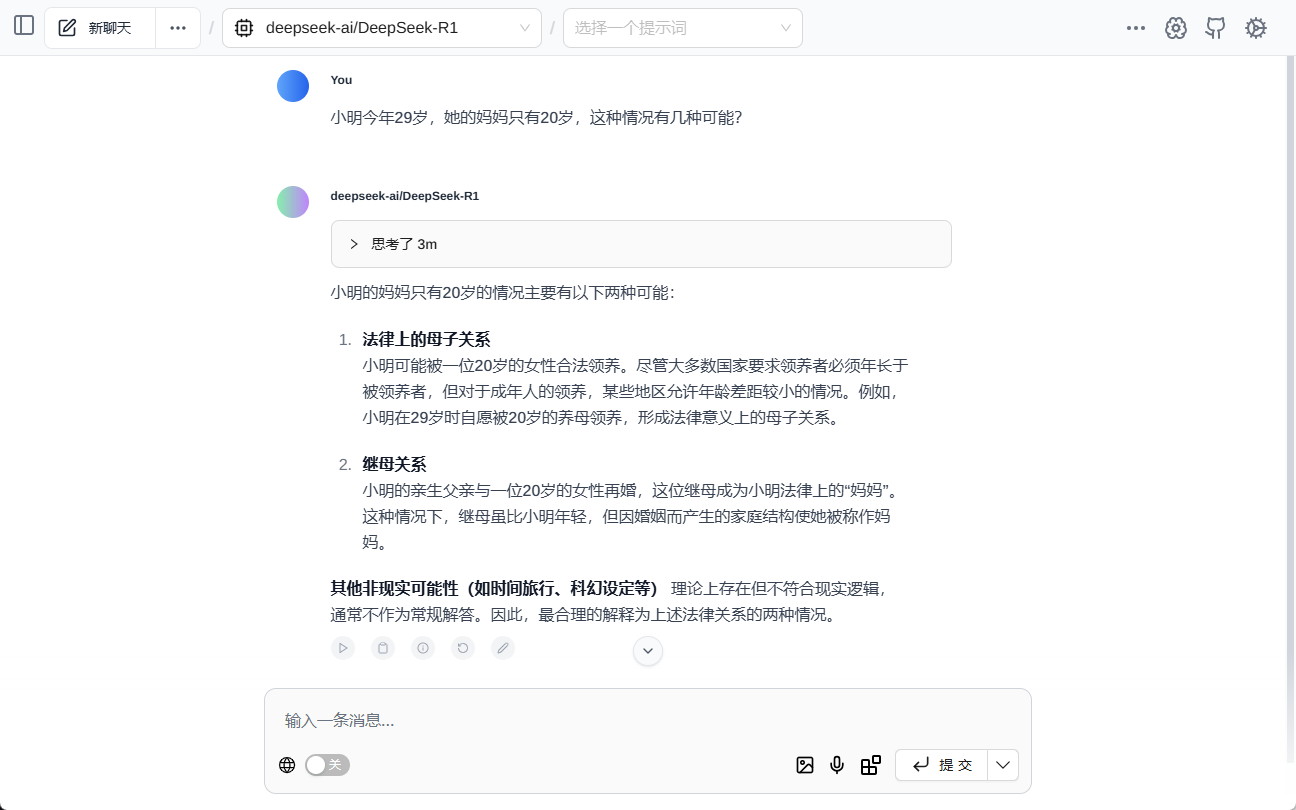
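If the model list stays empty, it can help to ask the interface address directly which models it exposes. The sketch below uses Ollama's `GET /api/tags` endpoint, which lists the installed models as JSON. The helper names are made up for this example, and the address is the example address from this article, so replace it with your own.

```python
import json
from urllib.request import urlopen


def api_url(base_url: str, path: str) -> str:
    """Join the interface address and an API path with exactly one slash."""
    return base_url.rstrip("/") + "/" + path.lstrip("/")


def parse_model_names(tags_json: str) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [m["name"] for m in json.loads(tags_json).get("models", [])]


def list_remote_models(base_url: str, timeout_s: float = 10.0) -> list[str]:
    """Fetch /api/tags from the interface address and return the model names."""
    with urlopen(api_url(base_url, "/api/tags"), timeout=timeout_s) as resp:
        return parse_model_names(resp.read().decode("utf-8"))


# Offline demonstration with a reduced sample response (real responses
# carry more fields per model):
sample = '{"models": [{"name": "hf.co/unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF:Q5_K_M"}]}'
print(parse_model_names(sample))
```

With the tunnel running, `list_remote_models("https://condor-known-ferret.ngrok-free.app")` should return the names of the models you installed; an empty list means Ollama is reachable but has no models installed.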

Test

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
