What Is Transfer Learning? One Article to Read and Understand It

Definition of Transfer Learning

Transfer Learning (TL) is an important branch of machine learning whose core idea is to apply knowledge learned in one task or domain to a different but related task or domain. It lets a model draw on existing experience to learn a new task more efficiently. In traditional machine learning, each model is trained from scratch, which requires large amounts of labeled data and computational resources. In reality, however, many tasks share underlying features or patterns, and by capturing these shared elements transfer learning reduces reliance on data from the new domain.

For example, a model pre-trained on a general image recognition task can be adapted to detect anomalies in medical images without being trained from scratch. This saves time and cost, and it also improves performance in data-scarce scenarios. The theoretical basis of transfer learning involves domain adaptation, knowledge representation, and generalization, and its applications span disciplines such as computer vision and natural language processing. Transfer learning mirrors the analogy and reasoning found in human learning, bringing AI systems closer to human flexibility and adaptability.
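
The definition above can be stated formally in the notation popularized by Pan and Yang's survey of transfer learning. The block below is a summary of that framework in our own words, not a quotation: a domain is a feature space plus a marginal distribution, and a task is a label space plus a predictive function.

```latex
\begin{aligned}
\mathcal{D} &= \{\mathcal{X},\, P(X)\}, \qquad X = \{x_1, \dots, x_n\} \subset \mathcal{X} \\
\mathcal{T} &= \{\mathcal{Y},\, f(\cdot)\} \\
\text{Goal: } &\text{improve the target predictor } f_T(\cdot) \text{ in } \mathcal{D}_T \text{ using knowledge from } \mathcal{D}_S \text{ and } \mathcal{T}_S, \\
&\text{where } \mathcal{D}_S \neq \mathcal{D}_T \ \text{ or } \ \mathcal{T}_S \neq \mathcal{T}_T
\end{aligned}
```

In the medical imaging example, the domain changes because the distribution of images P(X) differs from that of general photographs, and the task may change as well if the label space Y differs.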

Origins of Transfer Learning

The origins of transfer learning can be traced to the cross-influence of several fields, with early ideas laying the groundwork for modern developments.

- 1990s: Early machine learning research began to explore knowledge reuse, with scholars noting that similarities between tasks may facilitate learning.

- 1997: Caruana's paper on multitask learning was the first to systematically introduce the idea of shared representations, inspiring subsequent work on transfer learning.

- Early twenty-first century: As computing power and data availability grew, researchers such as Pan and Yang formally defined a transfer learning framework that distinguishes between source and target domains.

- Inspiration from psychology and cognitive science: Research on human analogical reasoning shows that knowledge transfer is a natural part of learning.

- Industry demand: Companies such as Google and Microsoft experimented with transfer methods in their ad recommendation systems to cope with the lack of data on new users.

- Open-source frameworks: The rise of TensorFlow and PyTorch lowered the barrier to experimenting with transfer learning, accelerating community adoption and innovation.

Core Concepts of Transfer Learning

The core concept of transfer learning revolves around how to effectively transfer and adapt knowledge and involves several key elements.

- Source and target domains: The source domain is the task or dataset the knowledge comes from, and the target domain is the new task it is applied to; the difference between the two determines how difficult the transfer will be.

- Feature representation: Learning transferable features lets a model extract generic patterns from the source domain and adapt them to the specific needs of the target domain.

- Types of knowledge: Transferable knowledge includes shared parameters such as neural network weights, structural knowledge, and rules such as decision tree rules.

- Transfer strategy: For example, instance weighting reweights source domain data to reduce the effect of distribution mismatch between domains.

- Preventing negative transfer: To keep source domain knowledge from hurting performance on the target domain, the transfer process should be tuned by assessing how similar the domains are.

- Generalization: Transfer learning aims to improve model performance on unseen data, with an emphasis on robustness across domains.
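
The instance-weighting strategy mentioned above can be sketched in a few lines. This is a toy illustration under a loud assumption: both the source and target densities are known Gaussians (source N(0, 1), target N(0.5, 1)), so each source sample can be weighted by the exact ratio p_target(x) / p_source(x). In practice that ratio has to be estimated, for example with a classifier trained to tell source samples from target samples. The function names are hypothetical.

```python
import math
import random

def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Density of the normal distribution N(mean, std^2) at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2 * math.pi))

def importance_weights(xs, source=(0.0, 1.0), target=(0.5, 1.0)):
    """Weight each source sample by p_target(x) / p_source(x).

    Assumes both densities are known Gaussians given as (mean, std);
    real systems must estimate this ratio from data.
    """
    return [gaussian_pdf(x, *target) / gaussian_pdf(x, *source) for x in xs]

def weighted_mean(xs, ws):
    """Self-normalized weighted average of the samples."""
    return sum(w * x for x, w in zip(xs, ws)) / sum(ws)

random.seed(0)
# Only source-domain samples are available, drawn from N(0, 1).
source_samples = [random.gauss(0.0, 1.0) for _ in range(20_000)]
weights = importance_weights(source_samples)

unweighted = sum(source_samples) / len(source_samples)
reweighted = weighted_mean(source_samples, weights)
print(f"unweighted mean: {unweighted:.3f}")  # estimates the source mean (0.0)
print(f"reweighted mean: {reweighted:.3f}")  # estimates the target mean (0.5)
```

The same weights can be passed as per-sample weights to a loss function, so that a model trained on source data emphasizes the examples that look most like the target domain.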

Types of Transfer Learning

Transfer learning can be categorized into various types based on methodology and implementation, each of which is applicable to different scenarios.

- Instance-based transfer: Reuses specific data points from the source domain to aid learning in the target domain by weighting or selecting similar instances.

- Feature-based transfer: Learns a shared feature space, e.g., mapping source domain features into the target domain through dimensionality reduction or encoding techniques.

- Model-based transfer: Transfers model parameters or structures directly, e.g., fine-tuning a pre-trained neural network for a new task.

- Relation-based transfer: Applies to relational data, transferring knowledge of logical rules or graph structures between entities.

- Homogeneous vs. heterogeneous transfer: In homogeneous transfer the source and target domains share the same feature space, while heterogeneous transfer involves different feature spaces and requires additional transformations.

- Unsupervised transfer: When the target domain has no labels, knowledge from the source domain is transferred without supervision to enhance learning.
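
Feature-based transfer can be illustrated with a deliberately simple case: a homogeneous setting where both domains have the same features but different scales. The sketch below standardizes each domain with its own statistics, which matches the per-feature mean and variance of the two domains. The sensor data and function name are hypothetical; stronger methods go further, for example correlation alignment (CORAL) matches full covariance matrices, and deep methods learn the shared space with an encoder.

```python
import statistics

def standardize_per_domain(rows):
    """Z-score every feature column using the statistics of this domain only.

    Mapping each domain to zero mean and unit variance per feature gives the
    domains a shared, scale-free feature space (first- and second-moment
    matching, one feature at a time).
    """
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    # Guard against constant columns, whose standard deviation is 0.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]

# Hypothetical readings from the same kind of sensor in two plants:
# the target plant reports temperature in Fahrenheit and flow at 10x scale.
source = [[20.0, 1.0], [22.0, 1.5], [24.0, 2.0], [26.0, 2.5]]
target = [[68.0, 10.0], [71.6, 15.0], [75.2, 20.0], [78.8, 25.0]]

source_aligned = standardize_per_domain(source)
target_aligned = standardize_per_domain(target)
for s_row, t_row in zip(source_aligned, target_aligned):
    print([round(v, 3) for v in s_row], [round(v, 3) for v in t_row])
```

Because the difference between the plants here is purely linear, the aligned rows coincide, and a model trained on the aligned source features applies unchanged to the target. Real domain shifts are rarely this clean.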

How Transfer Learning Works

Transfer learning works through a series of steps and techniques that ensure knowledge is transferred effectively.

- Preprocessing: Analyze the data distributions of the source and target domains to identify commonalities and reduce differences between them, e.g., through data augmentation or normalization.

- Feature extraction: A pre-trained model such as a convolutional neural network extracts high-level features learned on the source domain, and these features can serve multiple target tasks.

- Fine-tuning: The source model's parameters are adapted on target domain data, usually by freezing the lower layers and training the upper layers for the new task.

- Evaluation and validation: Measure the effect of transfer through cross-validation or domain adaptation metrics to confirm that performance improves rather than degrades.

- Iterative optimization: Adjust the transfer strategy based on feedback, e.g., by tuning the learning rate dynamically or adding regularization to prevent overfitting.

- Ensemble methods: Combine knowledge from multiple source domains through voting or weighted averaging to make the model more robust in the target domain.
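
The feature extraction and fine-tuning steps can be sketched without any framework. In this minimal sketch the "pre-trained" lower layer is a hypothetical fixed linear map with ReLU (`FROZEN_W` stands in for weights learned on a source task) and is never updated; only a new logistic-regression head is trained on a small, synthetic target dataset. All names and data are assumptions for illustration. In PyTorch, for instance, the freezing part is typically done by setting `requires_grad = False` on the pre-trained parameters.

```python
import math
import random

# Hypothetical "pre-trained" layer: stands in for knowledge learned on a
# source task. It is frozen, so training below never modifies it.
FROZEN_W = ((1.0, 1.0), (-1.0, -1.0))

def extract_features(x):
    """Frozen lower layer: linear map followed by ReLU."""
    return [max(0.0, w0 * x[0] + w1 * x[1]) for w0, w1 in FROZEN_W]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(data, epochs=50, lr=0.1):
    """Train only a new logistic-regression head on the frozen features (SGD)."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            h = extract_features(x)  # no update ever reaches FROZEN_W
            p = sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b)
            err = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * err * hi for wi, hi in zip(w, h)]
            b -= lr * err
    return w, b

def predict(w, b, x):
    h = extract_features(x)
    return int(sum(wi * hi for wi, hi in zip(w, h)) + b > 0)

random.seed(1)
# Small labeled target dataset: two clusters around (2, 2) and (-2, -2).
target_data = [((random.gauss(2, 0.5), random.gauss(2, 0.5)), 1) for _ in range(20)]
target_data += [((random.gauss(-2, 0.5), random.gauss(-2, 0.5)), 0) for _ in range(20)]
random.shuffle(target_data)

w, b = train_head(target_data)
accuracy = sum(predict(w, b, x) == y for x, y in target_data) / len(target_data)
print(f"training accuracy of the new head: {accuracy:.2f}")
```

Freezing the lower layer keeps the number of trainable parameters small, which is why this setup works with only a handful of target examples. With more target data, one would typically unfreeze some upper layers of the pre-trained model as well, using a small learning rate.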

Application Areas of Transfer Learning

Transfer learning is applied across a wide range of areas, covering almost every AI-related industry, which demonstrates its practical value.

- Computer vision: Image classification models pre-trained as residual networks (ResNet) are transferred to medical image analysis to help diagnose diseases such as cancer or retinopathy.

- Natural language processing: Language models such as BERT (Bidirectional Encoder Representations from Transformers) are pre-trained on large amounts of text and then transferred to sentiment analysis or machine translation tasks, improving accuracy and efficiency.

- Autonomous driving: Transferring driving policies learned in simulated environments to real-world vehicle control reduces the risk and cost of testing on real vehicles.

- Recommender systems: E-commerce platforms transfer user behavior data from one product category to another, personalizing recommendations for new products and improving the user experience.

- Healthcare: Transfer learning aids drug discovery by applying data on known compounds to predictions for new targets, accelerating research and development.

- Industrial maintenance: Knowledge from historical equipment failure data is transferred to predictive maintenance for new machines, giving early warning of failures and reducing downtime.

- Financial risk control: Banks use transfer learning to apply anti-fraud models from one financial product to another, adapting to changing fraud patterns.

Advantages of Transfer Learning

Its advantages make transfer learning a key technique in modern machine learning, bringing multiple benefits.

- Data efficiency: Reduced reliance on large amounts of labeled data is especially valuable in data-scarce areas such as low-resource language processing or rare disease research.

- Faster training: Starting from pre-trained models can cut training time dramatically, from weeks to hours, speeding up model deployment and iteration.

- Lower cost: Savings on data collection and computing resources make advanced AI solutions accessible to small and medium-sized businesses as well.

- Better performance: By transferring rich prior knowledge, models often achieve higher accuracy on the target task, especially in complex or dynamic environments.

- Improved generalization: Models are more robust on unseen data, which reduces the risk of overfitting and helps them cope with real-world uncertainty.

- Cross-domain adaptability: Support for transfer across domains and modalities, such as from simulation to reality or from text to images, expands the boundaries of AI applications.

The Challenges of Transfer Learning

Despite its significant benefits, transfer learning also faces a number of challenges that will require continued research and innovation to overcome.

- Domain discrepancy: Differences between the source and target distributions can cause knowledge transfer to fail, which calls for advanced adaptation techniques such as adversarial training.

- Data privacy: Transfer often involves data from multiple sources, raising privacy concerns, especially in sensitive areas such as healthcare and finance.

- Computational complexity: Some transfer methods, such as multi-task learning, increase model size and computational burden, limiting scalability.

- Evaluation difficulty: The lack of standardized metrics for measuring transfer effects makes it hard to compare methods or reproduce results.

- Theoretical gaps: The theoretical foundations of transfer learning are still immature; for example, more research is needed on how to quantify transferability.
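
Domain discrepancy can at least be measured before committing to a transfer. One widely used measure is the Maximum Mean Discrepancy (MMD), which compares two samples through the average kernel similarity within and across them. The sketch below computes the biased MMD² estimate for one-dimensional samples with a Gaussian (RBF) kernel; the kernel width `gamma=0.5` and the synthetic domains are assumptions. A small MMD suggests similar distributions, but it is not a guarantee that transfer will help, which is part of why quantifying transferability remains open.

```python
import math
import random

def rbf_kernel(a: float, b: float, gamma: float = 0.5) -> float:
    """Gaussian (RBF) kernel between two scalars."""
    return math.exp(-gamma * (a - b) ** 2)

def mmd_squared(xs, ys, gamma: float = 0.5) -> float:
    """Biased estimate of the squared Maximum Mean Discrepancy of two samples."""
    def mean_kernel(us, vs):
        total = sum(rbf_kernel(u, v, gamma) for u in us for v in vs)
        return total / (len(us) * len(vs))
    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2 * mean_kernel(xs, ys)

random.seed(2)
source = [random.gauss(0.0, 1.0) for _ in range(200)]
close_target = [random.gauss(0.1, 1.0) for _ in range(200)]  # slight shift
far_target = [random.gauss(2.0, 1.0) for _ in range(200)]    # large shift

d_close = mmd_squared(source, close_target)
d_far = mmd_squared(source, far_target)
print(f"MMD^2 to a similar domain: {d_close:.4f}")
print(f"MMD^2 to a shifted domain: {d_far:.4f}")
```

The same quantity can also serve as a training loss that pulls source and target feature distributions together, which is how several deep domain adaptation methods use it.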

Practical Examples of Transfer Learning

Real-world examples vividly demonstrate the effectiveness of transfer learning, covering a wide range of scenarios from research to industry.

- Image network pre-training model:Convolutional neural networks trained in the Image Networking Competition were migrated to custom image tasks, such as artwork recognition or satellite image analysis, with significant accuracy gains.

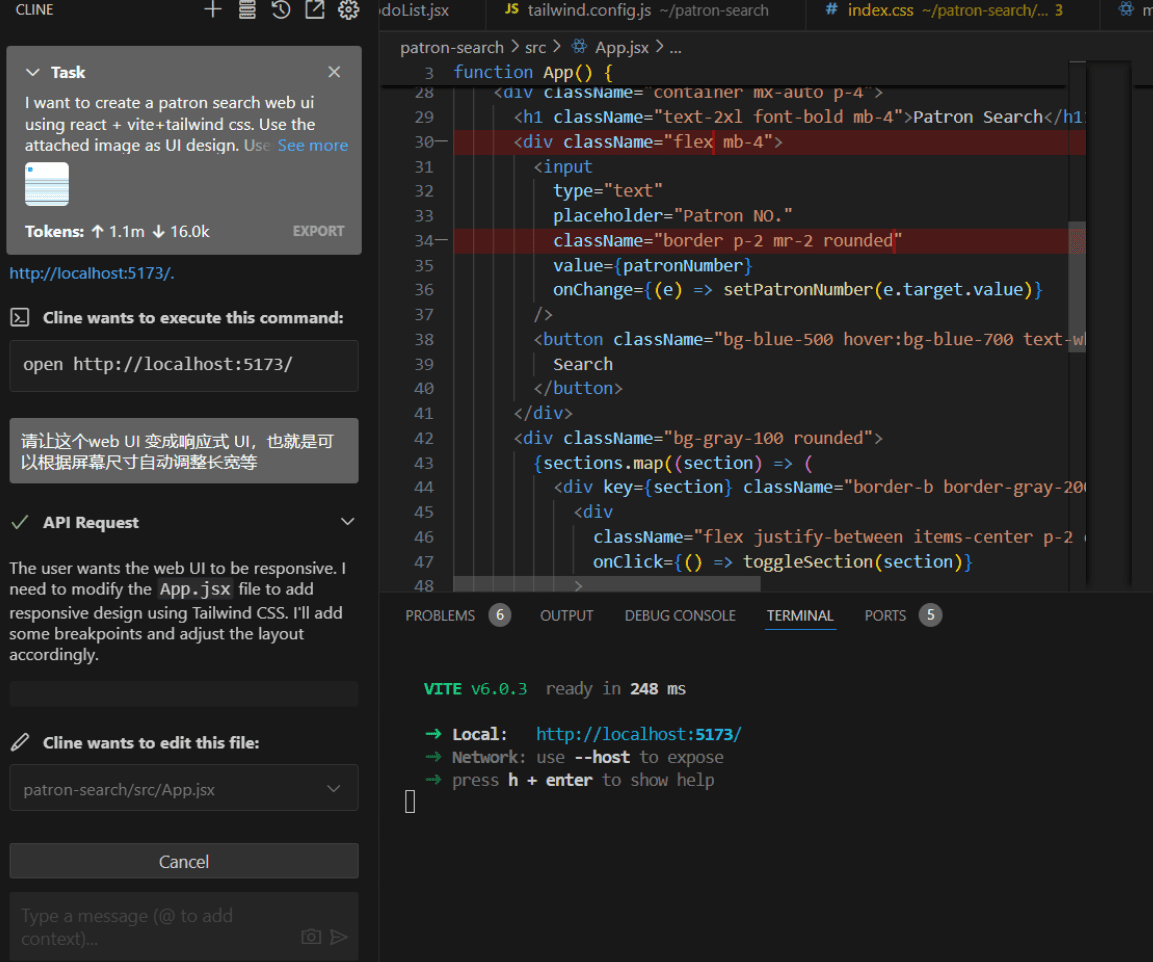

- Generating pre-trained transformer family language models:Generative pre-trained transformer models for open AI are migrated to Q&A or summarization tasks after extensive text pre-training to drive chatbots and content generation tools.

- Tesla Autopilot:Using driving data from simulated environments migrated to real vehicles, the decision-making system is continuously learned and optimized through shadowing patterns.

- Amazon Recommendation Engine:Migrating from Book Purchase Data to Electronics Recommendations Using Migration Learning to Handle New Category Cold Starts.

- Diagnostic medical imaging:Google Health uses retinal scanning models migrated from public datasets to specific hospital data to assist physicians in detecting diabetic retinopathy.

- Speech Recognition:Voice assistants migrate English speech models to other languages, accelerating global deployment and reducing native language data requirements.

- Climate change research:Migration learning applies historical climate models to future predictions to improve weather forecasting accuracy and support environmental decision-making.

Future Perspectives on Transfer Learning

Looking ahead, transfer learning has many promising directions for development, with ample opportunity and room for innovation.

- Automated transfer: Develop AI-driven tools that automatically select source domains and transfer strategies, reducing the need for manual intervention.

- Cross-modal fusion: Integrate visual, linguistic, and sensor data for more robust multimodal transfer, e.g., generating images from text descriptions.

- Federated learning integration: Combine transfer learning with federated learning frameworks to enable privacy-preserving transfer on distributed devices and drive edge computing applications.

- Interpretability: Make the transfer process more interpretable, using visualization or rule extraction to help users understand how knowledge is transferred.

- Ethics and fairness: Address bias and ensure that transfer learning does not amplify social inequalities, for example in hiring or lending decisions.

- Bio-inspired methods: Draw inspiration from the nervous system and mimic the transfer mechanisms of human learning to create more flexible AI.

- Sustainability: Apply transfer learning to optimize energy use or reduce carbon footprints in support of green AI initiatives.

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
