What Is a Recurrent Neural Network (RNN)? An Overview in One Article

Definition of a recurrent neural network

A recurrent neural network (RNN) is a neural network architecture designed for processing sequential data, that is, data with a temporal order or dependencies between elements, such as natural-language text, speech signals, or time series. Unlike a traditional feed-forward neural network, an RNN has recurrent connections that let it maintain an internal hidden state capturing contextual information from the sequence seen so far. The hidden state is updated with the input at each time step and passed on to the next time step, forming a memory mechanism that lets the RNN process variable-length sequences and, in principle, model both short-term and long-term dependencies. In practice, basic (vanilla) RNNs suffer from vanishing and exploding gradients, which makes long-range dependencies hard to learn. Improved variants such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) were developed to address this. The core value of RNNs lies in their ability to model temporal dynamics: they are widely used in natural language processing, speech recognition, and time-series forecasting, and have become an important foundational model in deep learning.

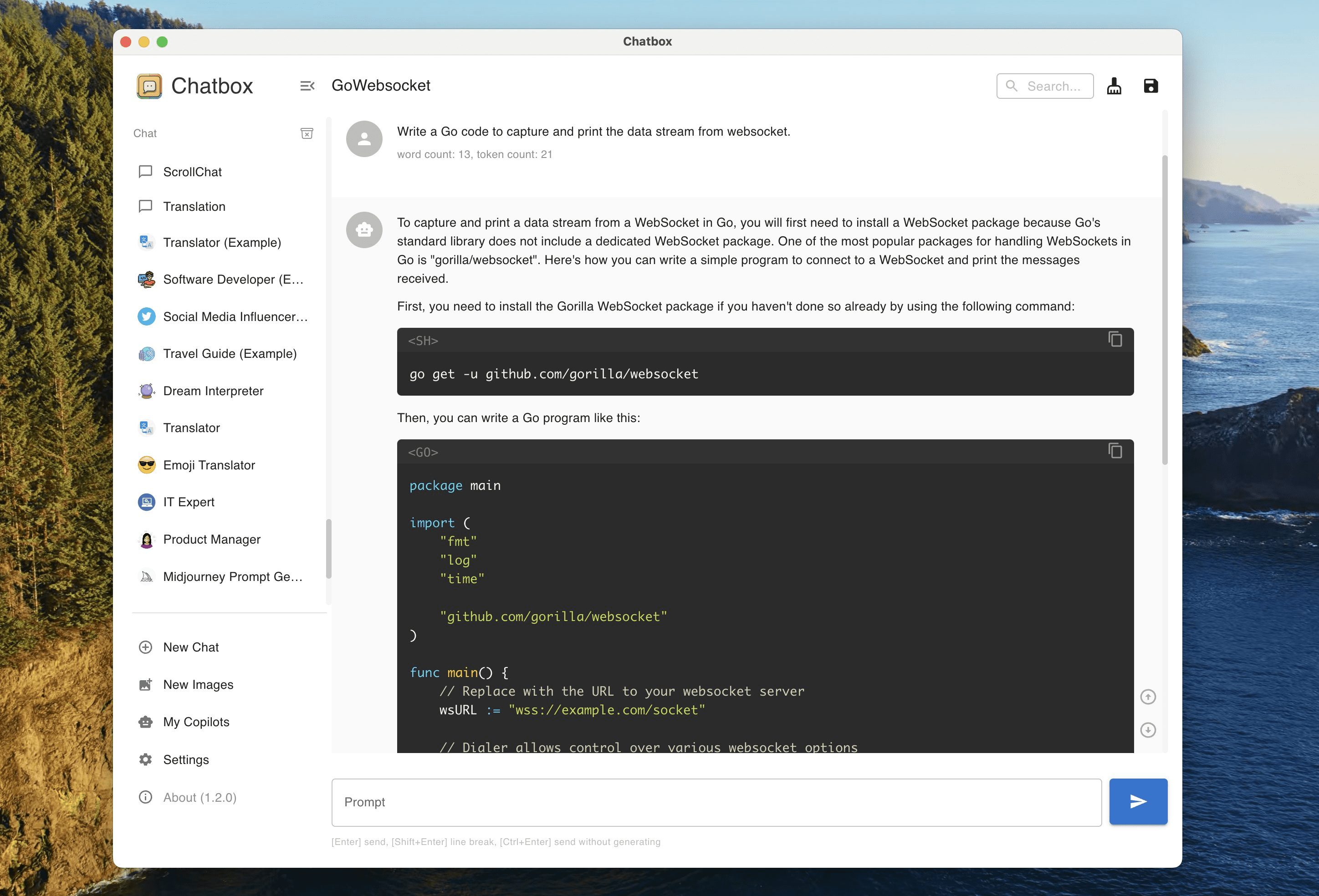

How recurrent neural networks work

The core working mechanism of a recurrent neural network revolves around its recurrent structure and the updating and passing on of its hidden state, which lets it process information with temporal dependencies.

- Unrolling in time and step-by-step processing: A recurrent neural network treats the input sequence as a series of data points arranged in time steps. Instead of processing all the data at once, the network performs its computation one time step at a time. For example, when analyzing a sentence, the network reads the words one by one, in order.

- Recurrence and updating of the hidden state: At each time step, the network receives two inputs: the external input for the current time step and the hidden state from the previous time step. Each is linearly transformed by shared weight parameters, the results are summed (usually with a bias term), and a nonlinear activation function (e.g., the hyperbolic tangent, tanh) produces the new hidden state for the current time step.

- How the output is generated: The hidden state at the current time step is not only passed on to the next step but can also be used to produce an output for that step. The output may be a prediction, such as a probability distribution over the next word, and is usually obtained through an output layer, for example a linear transformation followed by a softmax function. Not every time step needs to produce an output.

- Parameter sharing: A recurrent neural network reuses the same weight matrices (input weights, hidden-state weights, and output weights) at every time step. This parameter sharing greatly reduces the number of parameters the model must learn, keeps the model compact, and allows it to generalize to sequences of different lengths.

- Directed flow of information: The recurrent connections form a directed flow of information, so that past inputs continue to influence future computations. This design lets the network capture short-term patterns in a sequence, but basic recurrent networks have inherent difficulty capturing long-term patterns.
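The steps in this list can be sketched in a few lines of plain Python. This is a minimal illustration rather than a reference implementation: the names `rnn_forward`, `W_xh`, `W_hh`, `W_hy`, `b_h`, and `b_y`, the layer sizes, the random weights, and the all-zero initial hidden state are assumptions made for the example.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def rnn_forward(inputs, W_xh, W_hh, W_hy, b_h, b_y):
    """Run a vanilla RNN over a sequence, one time step at a time.

    The same weights are reused at every step; only the hidden state changes.
    Returns the per-step output distributions and the final hidden state.
    """
    hidden = [0.0] * len(b_h)  # initial hidden state (assumed to be all zeros)
    outputs = []
    for x_t in inputs:
        from_input = matvec(W_xh, x_t)      # transform the current input
        from_hidden = matvec(W_hh, hidden)  # transform the previous hidden state
        hidden = [math.tanh(a + b + c)
                  for a, b, c in zip(from_input, from_hidden, b_h)]
        logits = [a + b for a, b in zip(matvec(W_hy, hidden), b_y)]
        outputs.append(softmax(logits))     # output for this time step
    return outputs, hidden


def random_matrix(rows, cols, rng, scale=0.5):
    """Small random weights, standing in for learned parameters."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
INPUT_SIZE, HIDDEN_SIZE, OUTPUT_SIZE = 3, 4, 2
W_xh = random_matrix(HIDDEN_SIZE, INPUT_SIZE, rng)
W_hh = random_matrix(HIDDEN_SIZE, HIDDEN_SIZE, rng)
W_hy = random_matrix(OUTPUT_SIZE, HIDDEN_SIZE, rng)
b_h = [0.0] * HIDDEN_SIZE
b_y = [0.0] * OUTPUT_SIZE

# A toy sequence of five one-hot inputs; a shorter prefix works with the same weights.
sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0],
            [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
outputs, final_hidden = rnn_forward(sequence, W_xh, W_hh, W_hy, b_h, b_y)
short_outputs, _ = rnn_forward(sequence[:2], W_xh, W_hh, W_hy, b_h, b_y)
```

Because the loop carries only `hidden` from one step to the next, the same function handles sequences of any length without changing the weights.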

Application areas of recurrent neural networks

Recurrent neural networks have found use in numerous scientific and industrial fields due to their superior ability to process sequential data.

- Natural language processing (NLP): Recurrent neural networks have been a core technology for machine translation, text generation, sentiment analysis, and language modeling. In machine translation, the model reads the source-language sentence as a sequence and generates the target-language words one at a time, using contextual information to improve translation accuracy.

- Speech recognition and synthesis: Speech signals are inherently time series. Recurrent neural networks are used to convert audio waveforms into text transcripts, powering voice assistants and real-time captioning systems. They are also used to synthesize more natural-sounding speech.

- Time-series forecasting and analysis: In finance, meteorology, energy, and industry, recurrent neural networks are used to predict stock prices, weather, power loads, or the remaining useful life of equipment. The models infer future trends by learning patterns in historical data.

- Video content understanding: Video consists of consecutive image frames. Recurrent neural networks can process these frame sequences for action recognition, video captioning, automatic tagging, and anomalous-event detection, which are widely used in security surveillance and content recommendation.

- Music generation and sequence composition: Recurrent neural networks can learn the notes, chords, and rhythmic patterns of musical compositions and generate new musical fragments, melodies, or even complete scores, providing tools for creative artificial intelligence.

Variants of recurrent neural networks

To overcome the limitations of basic recurrent neural networks, researchers have proposed several important architectural variants.

- Basic Recurrent Neural Network (Vanilla RNN): The simplest form of recurrent network, using an activation function such as tanh. Its memory is short-lived, it is prone to the vanishing gradient problem, and it has difficulty learning long-term dependencies.

- Long Short-Term Memory Network (LSTM): By introducing "gating" mechanisms (an input gate, a forget gate, and an output gate), an LSTM can selectively remember or forget information, controlling how information flows through its cell state. This lets it learn and retain long-distance dependencies, which long made it a preferred choice for many sequential tasks.

- Gated Recurrent Unit (GRU): A simplified variant of the LSTM, the gated recurrent unit combines the input and forget gates into a single "update gate" and merges the cell state with the hidden state. This design reduces the number of parameters and the computational cost and speeds up training, while often achieving performance comparable to an LSTM.

- Bidirectional Recurrent Neural Network (Bi-RNN): This architecture consists of two separate recurrent layers, one processing the sequence forward in time and the other backward. The final output combines past and future context, which suits tasks where the complete sequence is available, such as named entity recognition.

- Deep Recurrent Neural Network (Deep RNN): Stacking multiple recurrent layers on top of each other increases the depth and expressiveness of the model, allowing it to learn more complex, hierarchical sequence features. However, greater depth also makes training more difficult.
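To make the gating idea concrete, the following is a minimal sketch of one LSTM time step in plain Python, using the commonly used formulation with input, forget, and output gates plus a tanh candidate. The function name `lstm_step`, the parameter dictionary layout, and the hand-picked weights and biases are assumptions for illustration; in a real model the parameters are learned.

```python
import math


def sigmoid(value):
    """Logistic function, used for the gates (output between 0 and 1)."""
    return 1.0 / (1.0 + math.exp(-value))


def affine(weights, bias, vector):
    """Compute weights @ vector + bias for a matrix given as a list of rows."""
    return [sum(w * v for w, v in zip(row, vector)) + b
            for row, b in zip(weights, bias)]


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step; returns the new hidden state and cell state."""
    joint = x_t + h_prev  # list concatenation of input and previous hidden state
    input_gate = [sigmoid(v) for v in affine(params["W_i"], params["b_i"], joint)]
    forget_gate = [sigmoid(v) for v in affine(params["W_f"], params["b_f"], joint)]
    output_gate = [sigmoid(v) for v in affine(params["W_o"], params["b_o"], joint)]
    candidate = [math.tanh(v) for v in affine(params["W_g"], params["b_g"], joint)]
    # Keep part of the old cell state, then write part of the candidate.
    c_t = [f * c + i * g
           for f, c, i, g in zip(forget_gate, c_prev, input_gate, candidate)]
    h_t = [o * math.tanh(c) for o, c in zip(output_gate, c_t)]
    return h_t, c_t


# Hand-picked (not learned) parameters: all weights are zero, so each gate
# depends only on its bias.
INPUT_SIZE, HIDDEN_SIZE = 2, 2
zeros = [[0.0] * (INPUT_SIZE + HIDDEN_SIZE) for _ in range(HIDDEN_SIZE)]
params = {
    "W_i": zeros, "b_i": [-20.0] * HIDDEN_SIZE,  # input gate near 0: write nothing
    "W_f": zeros, "b_f": [20.0] * HIDDEN_SIZE,   # forget gate near 1: keep the cell state
    "W_o": zeros, "b_o": [0.0] * HIDDEN_SIZE,
    "W_g": zeros, "b_g": [0.0] * HIDDEN_SIZE,
}

h, c = [0.0, 0.0], [0.7, -0.3]
for _ in range(50):
    h, c = lstm_step([1.0, -1.0], h, c, params)
```

With the forget gate held near 1 and the input gate near 0, the cell state stays almost unchanged over the 50 steps, which is the mechanism that lets an LSTM carry information across long distances. A GRU follows the same pattern with fewer gates and no separate cell state.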

Advantages of recurrent neural networks

The advantages of recurrent neural networks make them a strong fit for many sequence modeling tasks.

- Direct processing of variable-length sequences: Recurrent neural networks can natively process sequential inputs of varying lengths, with no architectural need to truncate or pad the input to a fixed size, which matches the diversity of real-world data.

- Parameter sharing leads to efficiency: Sharing parameters across all time steps not only drastically reduces the total number of parameters in the model and lowers the risk of overfitting, but also improves the model's ability to generalize to sequences of different lengths.

- Strong modeling of temporal dynamics: The recurrent hidden state allows the network to capture temporal dependencies and dynamic changes in the data, which feed-forward neural networks cannot do directly.

- Flexible and scalable architecture: Recurrent neural networks can serve as a building block that is easily combined with other neural network architectures (e.g., convolutional neural networks, CNNs) to form more powerful hybrid models for processing multimodal sequence data.

- Supports end-to-end learning: The entire model can be learned directly from raw sequence data to the final output, minimizing the need for manual feature engineering and simplifying the machine learning process.
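The effect of parameter sharing can be checked with simple arithmetic. The sketch below counts the parameters of a vanilla RNN and, for contrast, of a hypothetical one-hidden-layer feed-forward network that receives the whole sequence flattened into a single input vector. Both function names and the layer sizes are illustrative assumptions.

```python
def rnn_parameter_count(input_size, hidden_size, output_size):
    """Parameters of a vanilla RNN; the count does not depend on sequence length."""
    input_weights = hidden_size * input_size
    recurrent_weights = hidden_size * hidden_size
    output_weights = output_size * hidden_size
    biases = hidden_size + output_size
    return input_weights + recurrent_weights + output_weights + biases


def flattened_mlp_parameter_count(seq_len, input_size, hidden_size, output_size):
    """Parameters of a one-hidden-layer net fed the whole flattened sequence."""
    first_layer = hidden_size * (seq_len * input_size) + hidden_size
    output_layer = output_size * hidden_size + output_size
    return first_layer + output_layer


# The RNN column stays constant while the feed-forward column grows with length.
for seq_len in (10, 50, 100):
    print(seq_len,
          rnn_parameter_count(10, 20, 5),
          flattened_mlp_parameter_count(seq_len, 10, 20, 5))
```

The feed-forward network also has to fix the sequence length in advance, whereas the recurrent network applies the same weights to however many steps the input has.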

Limitations of recurrent neural networks

Despite their power, recurrent neural networks have some inherent drawbacks and challenges.

- Vanishing and exploding gradients: This is the main obstacle to training recurrent networks on long sequences. During backpropagation through time, the gradient is multiplied by a similar factor at every step, so it may shrink (vanish) or grow (explode) exponentially. As a result, the error signal from late time steps barely reaches the early ones, or the updates become unstable, making long-term dependencies difficult to learn.

- Low computational parallelism and slow training: Because the computation is sequential, each time step must wait for the previous one to finish. This prevents the network from fully using the parallel computing capability of modern hardware (e.g., GPUs) and leads to long training times.

- Limited effective memory capacity: Although variants such as LSTM improve memory, the fixed dimensionality of the hidden state still limits the total amount of historical information the network can retain, so performance may degrade on very long sequences.

- Risk of overfitting: Although parameter sharing acts as a form of regularization, complex recurrent networks are still prone to overfitting the training set when data is insufficient, and they require regularization techniques such as Dropout.

- Poor interpretability: The meaning of the internal (hidden) states of a recurrent neural network is often difficult to interpret, and its decision-making process resembles a black box, which is a major drawback in applications that require a high degree of transparency and trustworthiness.
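The vanishing and exploding gradient behavior can be reproduced with a one-dimensional toy recurrence. The sketch below is an assumption-laden simplification: it uses a scalar hidden state with no input, the hypothetical function name `gradient_through_time`, and an identity activation for the exploding case, because tanh bounds each per-step factor by the size of the weight.

```python
import math


def gradient_through_time(w, steps, h0=0.1, use_tanh=True):
    """Return d h_T / d h_0 for the scalar recurrence h_t = act(w * h_{t-1}).

    By the chain rule the result is a product of one local derivative per step,
    which is why it can shrink or grow exponentially with the number of steps.
    """
    h = h0
    grad = 1.0
    for _ in range(steps):
        pre = w * h
        if use_tanh:
            h = math.tanh(pre)
            local = w * (1.0 - h * h)  # derivative of tanh(w * h) w.r.t. h
        else:
            h = pre
            local = w                  # identity activation
        grad *= local
    return grad


vanishing = gradient_through_time(w=0.5, steps=50)                  # tiny
exploding = gradient_through_time(w=1.5, steps=50, use_tanh=False)  # huge
print(vanishing, exploding)
```

In a full network the scalar `w` is replaced by the recurrent weight matrix and the product involves Jacobian matrices, but the exponential dependence on the number of steps is the same.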

Training methods for recurrent neural networks

Successful training of recurrent neural networks requires specific algorithms and techniques to ensure stability and convergence.

- Backpropagation through time (BPTT): This is the standard algorithm for training recurrent neural networks. It is essentially ordinary backpropagation applied to the network unrolled along the time dimension. Errors are propagated backward from the outputs through every time step to the start of the sequence, and the contributions from all steps are summed to obtain the gradients of the shared weights.

- Optimizer selection: In addition to standard stochastic gradient descent (SGD), adaptive learning rate optimizers such as Adam and RMSProp are widely adopted. They adapt the effective learning rate of each parameter to accelerate convergence and improve training stability.

- Gradient clipping: To mitigate the exploding gradient problem, gradient clipping caps the gradient at a threshold, either by rescaling the gradient when its norm exceeds the threshold or by limiting each individual value.

- Weight initialization strategy: Proper initialization is crucial for training deep networks. For recurrent networks, methods such as Xavier or orthogonal initialization help keep gradients flowing well in the early stages of training.

- Regularization methods to prevent overfitting: In addition to early stopping, Dropout is commonly used in recurrent neural networks. A common variant applies Dropout only to the non-recurrent connections, such as the inputs and outputs of a recurrent layer or the connections between stacked layers, rather than to the recurrent connections themselves, to avoid corrupting the memory carried across time steps.
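Backpropagation through time and gradient clipping can be illustrated together on a scalar RNN. The sketch below assumes a recurrence h_t = tanh(w * h_{t-1} + u * x_t), a squared-error loss on the final hidden state only, and clipping by the joint L2 norm (one of several clipping variants). The function names, weights, inputs, and threshold are all chosen for illustration.

```python
import math


def forward(w, u, inputs, h0=0.0):
    """Scalar RNN h_t = tanh(w * h_{t-1} + u * x_t); returns all hidden states."""
    states = [h0]
    for x_t in inputs:
        states.append(math.tanh(w * states[-1] + u * x_t))
    return states


def bptt(w, u, inputs, target):
    """Loss 0.5 * (h_T - target)^2 and its gradients with respect to w and u."""
    states = forward(w, u, inputs)
    loss = 0.5 * (states[-1] - target) ** 2
    grad_w = grad_u = 0.0
    grad_h = states[-1] - target  # derivative of the loss w.r.t. h_T
    # Walk backward from the last time step to the first.
    for t in range(len(inputs), 0, -1):
        grad_pre = grad_h * (1.0 - states[t] ** 2)  # back through tanh
        grad_w += grad_pre * states[t - 1]  # shared weight: contributions add up
        grad_u += grad_pre * inputs[t - 1]
        grad_h = grad_pre * w  # pass the error on to the previous time step
    return loss, grad_w, grad_u


def clip_by_global_norm(gradients, max_norm):
    """Rescale a list of gradients so their joint L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in gradients))
    if norm <= max_norm:
        return list(gradients)
    return [g * max_norm / norm for g in gradients]


inputs = [0.5, -0.2, 0.8, 0.1]
loss, grad_w, grad_u = bptt(w=0.9, u=1.2, inputs=inputs, target=0.3)
clipped_w, clipped_u = clip_by_global_norm([grad_w, grad_u], max_norm=0.01)
```

Clipping by norm keeps the direction of the update and only shortens it, which is why it is often preferred over clipping each value independently. The threshold of 0.01 is deliberately small so that clipping triggers in this toy example.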

Historical development of recurrent neural networks

The idea of recurrent neural networks has evolved through decades of accumulated research and breakthroughs.

- Early ideas: The concept of recurrent connections dates back to the 1980s. In 1982, John Hopfield proposed the Hopfield network, one of the first recurrent networks, used for associative memory.

- Theoretical foundations and problems revealed: In 1991, Sepp Hochreiter analyzed the vanishing gradient problem in depth in his diploma thesis. In 1997, Hochreiter and Schmidhuber proposed the long short-term memory (LSTM) network, which pointed the way toward a solution.

- Advances in algorithms and initial applications: With the refinement of the backpropagation through time (BPTT) algorithm and increases in computational power, recurrent neural networks began to be applied to small-scale speech recognition and language modeling tasks.

- Deep learning renaissance: In the 2010s, thanks to large-scale datasets, leaps in GPU computing power, and improvements in training techniques, recurrent network variants such as LSTM and GRU achieved breakthrough successes in areas such as natural language processing and became the core of many commercial systems.

- Current status and outlook: In recent years, Transformer architectures based on self-attention have outperformed recurrent networks on many tasks. However, recurrent neural networks and their variants remain valuable in many scenarios and retain a foundational place in sequence modeling.

Comparison of recurrent neural networks with other models

Comparing recurrent neural networks with other mainstream models helps clarify their distinctive value and the scenarios they suit.

- Comparison with feed-forward neural networks (FNN): Feed-forward neural networks treat inputs as independent of each other, have no internal state, and process fixed-size inputs. Recurrent neural networks, by contrast, are designed for sequences and have memory, but they are more complex to train and less computationally efficient.

- Comparison with Convolutional Neural Networks (CNN): Convolutional neural networks are good at extracting spatially local features (e.g., in images), and their translation invariance is an advantage in image processing. Recurrent neural networks are good at capturing long-range temporal dependencies. One-dimensional convolutional networks can also handle sequences, but with limited receptive fields, while recurrent networks can in theory carry information from the entire history.

- Comparison with the Transformer model: The Transformer, based entirely on self-attention, can process an entire sequence in parallel, trains efficiently on parallel hardware, and excels at modeling long-distance dependencies. Recurrent networks must process a sequence step by step and are therefore slower to train, but they may have lower compute and memory overhead at inference time, since each step needs only the current input and a fixed-size hidden state. This makes them well suited to resource-constrained streaming applications.

- Comparison with Hidden Markov Models (HMM): Hidden Markov models are classical probabilistic graphical models for sequences, based on strict mathematical assumptions; they are typically smaller and easier to interpret. Recurrent neural networks are data-driven models that are more expressive and usually perform better, but they require more data and computational resources.

- Comparison with Reinforcement Learning (RL): Reinforcement learning is a learning paradigm rather than a model architecture: an agent learns a decision-making policy through trial and error in an environment, and the problem itself is usually sequential in nature. Recurrent neural networks are often used as a core component of reinforcement learning agents, to handle partially observable states or to remember past observations.

Future trends in recurrent neural networks

Research on recurrent neural networks continues to evolve and may move in several directions.

- Efficiency gains and hardware synergies: Developing lighter and more computationally efficient recurrent units to improve their deployment in edge computing scenarios such as mobile devices and embedded systems.

- Integration with new techniques: Combining recurrent neural networks more deeply with ideas such as attention mechanisms and memory-augmented networks to create new architectures that keep the efficiency of the recurrent structure while providing stronger memory and generalization capabilities.

- Expanding into emerging applications: Exploring the potential of recurrent neural networks in emerging fields such as bioinformatics (gene sequence analysis), healthcare (electronic medical record analysis), and autonomous driving (fusion of sensor time series).

- Enhancing interpretability and trustworthiness: Developing new visualization tools and analysis methods to reveal the representations and decision logic encoded in the internal states of recurrent neural networks, increasing model transparency and meeting the needs of responsible AI.

- Exploring more advanced learning paradigms: Investigating how paradigms such as meta-learning and few-shot learning can be combined with recurrent neural networks so that they adapt quickly to new, data-scarce sequential tasks, improving the generality and flexibility of the models.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
