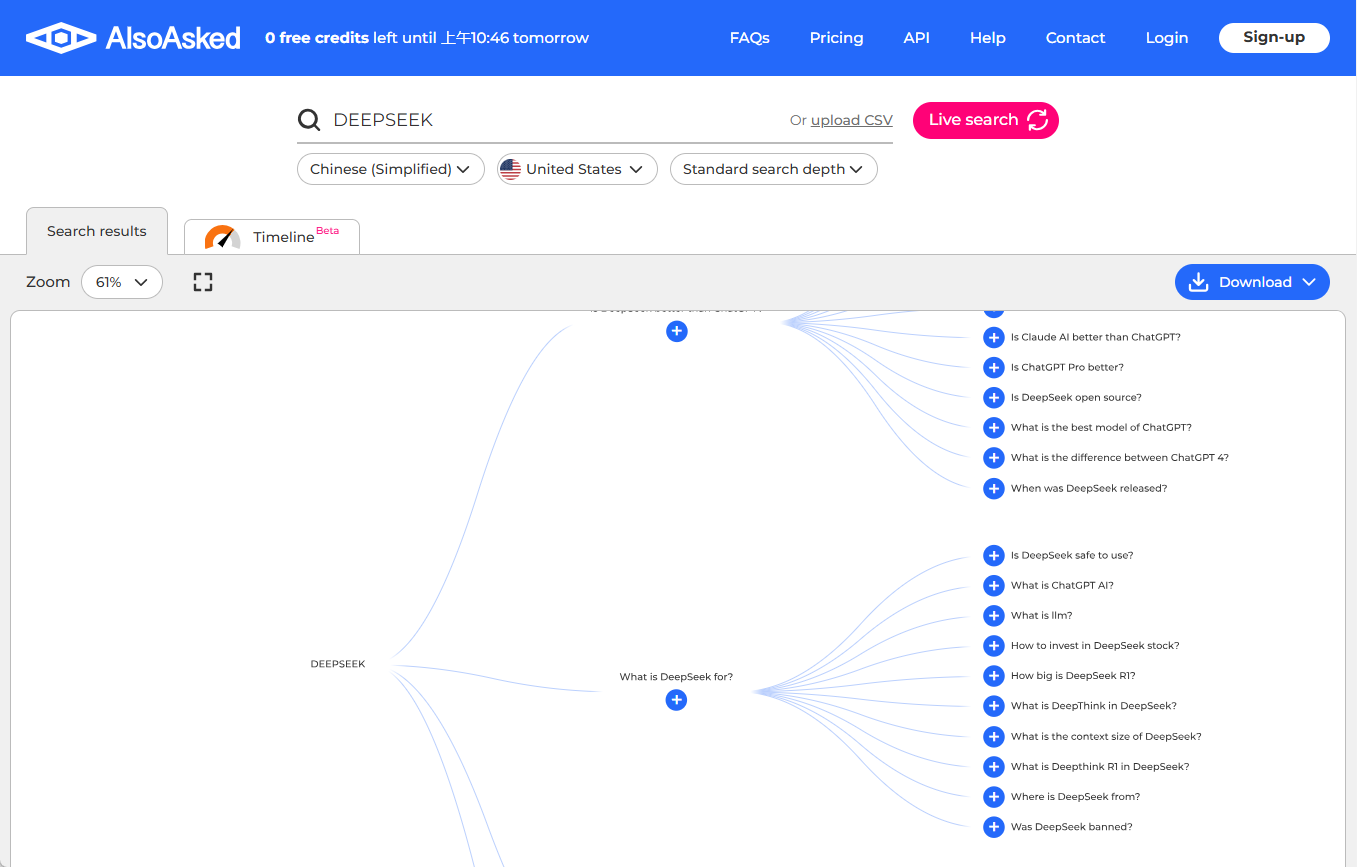

What Is a Large Language Model (LLM)? An Overview in One Article

Definition of a Large Language Model

A large language model (LLM) is a deep learning system trained on massive amounts of text, with the Transformer architecture at its core. The architecture's self-attention mechanism effectively captures long-range dependencies in language. The model is "large" in the sense that it has hundreds of millions to hundreds of billions of parameters, which are adjusted continuously during training to learn the statistical regularities and semantic patterns of language.
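
To make the self-attention idea concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration under simplifying assumptions, not how any particular model is implemented: a real Transformer first passes each token through learned query, key, and value projections and uses many attention heads, whereas this sketch feeds the same hypothetical 2-dimensional vectors in as queries, keys, and values.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence.

    Each argument is a list with one vector per token.
    Returns the mixed output vectors and the attention weights.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this token's query with every token's key.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings for a three-token sequence (assumed values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` says how much one token attends to every other token, regardless of how far apart they are in the sequence, which is why the mechanism handles long-range dependencies well.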

Training has two stages: pre-training and fine-tuning. In pre-training, the model acquires grammar, factual knowledge, and basic reasoning skills by predicting a masked word or the next word. Fine-tuning then uses instruction data or human feedback to shape the model's behavior, making it safer and more useful. Large language models are probabilistic models: they compute the most likely output sequence given the input rather than truly understanding language. Representative models such as the GPT family and PaLM have become key tools for advancing AI applications.
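
The two pre-training objectives can be sketched by showing how one sentence turns into training examples. This is a toy illustration only: the function names are hypothetical, the sentence is split on whitespace rather than into the subword tokens real models use, and the masked positions are fixed by hand here, whereas in practice they are chosen at random.

```python
def next_word_examples(tokens):
    """Autoregressive objective: predict each word from the words before it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def masked_example(tokens, positions, mask="[MASK]"):
    """Masked objective: hide the chosen positions and keep the hidden words as targets."""
    hidden = set(positions)
    masked = [mask if i in hidden else tok for i, tok in enumerate(tokens)]
    targets = {i: tokens[i] for i in sorted(hidden)}
    return masked, targets

sentence = "the cat sat on the mat".split()

# One (context, target) pair per position after the first word.
pairs = next_word_examples(sentence)

# Positions 1 and 5 are picked by hand for reproducibility (an assumption).
masked, targets = masked_example(sentence, positions=[1, 5])
```

In both cases the training signal comes from the text itself, so no manual labeling is needed at this stage.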

The Historical Origins of Large Language Models

- Early natural language processing research dates back to the 1950s and 1960s, with the Turing test (1950) and rule-based systems such as the ELIZA chatbot (1966), which relied on fixed pattern matching and had little flexibility.

- In the 1980s and 1990s, statistical language models emerged, such as the n-gram model, which predicts text from the frequencies of word sequences but is limited by data sparsity.

- In the 2000s and early 2010s, neural approaches took hold, including word embeddings such as Word2Vec and recurrent networks such as LSTM, which represent semantics through word vectors and laid the foundation for deep learning in language processing.

- The introduction of the Transformer architecture in 2017 was the turning point: its self-attention mechanism addressed the challenge of processing long sequences and gave rise to pre-trained models such as BERT and GPT.

- From 2020 onward, computing resources and data scale grew explosively and parameter counts passed the hundred-billion mark; GPT-3, for example, triggered industry-wide change and spurred the development of multimodal models.

- This history shows that the evolution of large language models relies on algorithmic innovation, hardware advances, and data accumulation, with each stage overcoming shortcomings of the previous generation of models.

Core Principles of Large Language Models

- The Transformer's self-attention mechanism lets the model process all the words in a sequence in parallel, computing how strongly each word relates to every other word in the context. This replaces the traditional recurrent neural network.

- Pre-training mostly uses masked language modeling or autoregressive prediction. The former randomly masks some input words for the model to recover; the latter predicts the next word in sequence, which develops text generation ability.

- Scaling up the parameter count brings emergent capabilities: complex tasks that small models cannot perform, such as mathematical reasoning or code writing, can appear in models with many parameters.

- Inference relies on probabilistic sampling. The model outputs a probability distribution over candidate words, and a temperature parameter controls the randomness: high temperatures increase diversity, and low temperatures make the output more deterministic.

- Fine-tuning techniques such as instruction tuning and alignment training, the latter often based on Reinforcement Learning from Human Feedback (RLHF), optimize model outputs to match human values.

- These principles show that large language models are essentially data-driven pattern matchers rather than logic engines, and that their performance depends directly on the quality and diversity of the training data.
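
The effect of the temperature parameter on sampling can be sketched in a few lines. This is a simplified illustration, assuming a made-up three-word vocabulary and made-up raw scores (logits); the function names are hypothetical, and real systems typically combine temperature with other sampling controls.

```python
import math
import random

def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores to probabilities, scaled by a temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_word(words, logits, temperature=1.0, rng=random):
    """Draw one word at random according to the tempered distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]

# Hypothetical candidate words and scores (assumed values).
words = ["cat", "dog", "car"]
logits = [2.0, 1.0, 0.1]

sharp = softmax_with_temperature(logits, temperature=0.5)  # low temperature
flat = softmax_with_temperature(logits, temperature=2.0)   # high temperature
choice = sample_word(words, logits, temperature=0.8, rng=random.Random(0))
```

Dividing the scores by a small temperature widens the gaps between them, so the top word dominates (`sharp`); a large temperature narrows the gaps, so less likely words are sampled more often (`flat`).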

Training Methods for Large Language Models

- Data collection gathers text from sources such as Wikipedia, news sites, and academic papers, then cleans and de-duplicates it at scale to ensure coverage of linguistic phenomena across many domains.

- The pre-training phase consumes enormous amounts of compute, running on GPU clusters for weeks or months at a cost of millions of dollars, which makes it highly resource-intensive.

- Fine-tuning consists of supervised fine-tuning, which adjusts model parameters with labeled data, and reinforcement learning from human feedback, which reduces harmful outputs.

- Distributed training frameworks such as Megatron-LM or DeepSpeed address memory bottlenecks by splitting model parameters across multiple devices.

- The training process pays attention to data safety by removing personal private information and biased content, though completely eliminating discrimination remains a challenge.

- Optimization uses an adaptive learning rate method such as AdamW, which pairs per-parameter step sizes with weight decay to balance training speed and stability and to curb overfitting.
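
As an illustration of the optimizer mentioned in the last point, here is a minimal sketch of one AdamW update for a single scalar parameter, following the commonly stated update rule with decoupled weight decay. The function name, the hyperparameter values, and the toy loss are assumptions chosen for the example; real training applies the same rule to billions of parameters through a framework's built-in optimizer.

```python
import math

def adamw_step(param, grad, state, lr=1e-3, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=0.01):
    """Apply one AdamW update to a scalar parameter and return the new value."""
    state["t"] += 1
    # Running averages of the gradient and of the squared gradient.
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad * grad
    # Bias correction for the zero-initialized averages.
    m_hat = state["m"] / (1 - beta1 ** state["t"])
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    # Decoupled weight decay: shrink the parameter directly.
    param = param - lr * weight_decay * param
    # Adaptive step: large past gradients lead to smaller steps.
    return param - lr * m_hat / (math.sqrt(v_hat) + eps)

# Toy problem (assumed for illustration): minimize f(w) = (w - 3)^2.
first_state = {"t": 0, "m": 0.0, "v": 0.0}
first = adamw_step(0.0, 2 * (0.0 - 3.0), first_state, lr=0.05)

w = 0.0
state = {"t": 0, "m": 0.0, "v": 0.0}
for _ in range(2000):
    grad = 2 * (w - 3.0)  # derivative of the toy loss
    w = adamw_step(w, grad, state, lr=0.05)
```

The step size is normalized by the recent gradient magnitude, which is the "adaptive learning rate" part, while the weight decay term acts as a regularizer that pulls the parameter slightly toward zero.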

Application Scenarios for Large Language Models

- In education, models support personalized learning by generating practice problems or explaining concepts, but teacher supervision is needed to keep errors from spreading.

- In healthcare, models are used for literature summarization and diagnostic support to improve efficiency, although clinical decisions still rest with human experts.

- In creative industries such as advertising copywriting and storytelling, models provide a source of inspiration but raise controversies over copyright and originality.

- In customer service, chatbots handle common inquiries and reduce labor costs, while complex questions are routed to human agents.

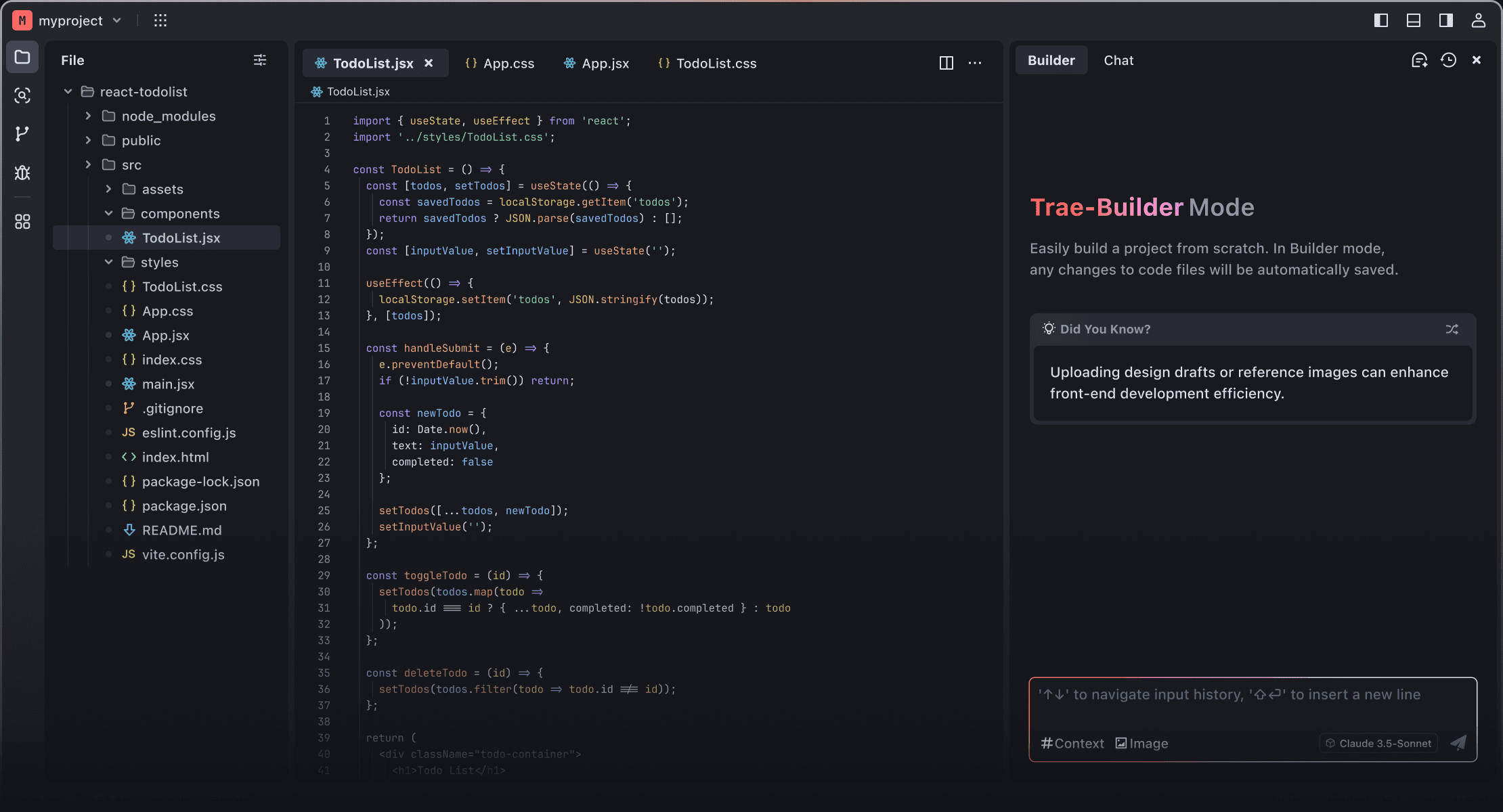

- Programming tools such as GitHub Copilot integrate code completion and debugging assistance to speed up development.

- Translation services break through language barriers with real-time conversion between many languages, at a quality that can approach that of professional translators.

Performance Benefits of Large Language Models

- Generated text is fluent and mimics human writing style, which suits content generation scenarios.

- Multi-task generalization is strong: a single model can handle question answering, summarization, classification, and other tasks, reducing the need to develop specialized models.

- Natural interaction improves the user experience: the model supports multi-turn dialogue and maintains contextual consistency.

- Processing speed improves with hardware optimization, and response times can reach the millisecond level to meet real-time application requirements.

- Scalability allows the model to learn new knowledge and adapt to change through incremental updates.

- Cost-effectiveness comes from automating repetitive work and reducing labor input.

Potential Risks of Large Language Models

- Hallucination leads to false output, such as fabricated historical events or scientific facts, which misleads users.

- Data bias amplifies social inequality: gender and racial discrimination present in the training data is learned and reproduced by the model.

- Models can be maliciously exploited to generate phishing emails or fake news, threatening network security.

- Privacy leakage is a risk: the model can memorize sensitive information from its training data, which can then be extracted with crafted prompts.

- Employment is disrupted in professions such as copywriting and customer service, triggering a restructuring of the labor market.

- Energy consumption is huge: training a single model can emit as much carbon as dozens of cars do in a year, which is a heavy environmental burden.

Ethical Considerations for Large Language Models

- Transparency is insufficient: the model's decision-making process is a black box, which makes the root causes of errors hard to trace.

- Accountability mechanisms are missing: when a model causes harm, responsibility is blurred among the developer, the user, and the platform.

- Fairness requires diverse, representative samples so that marginalized groups are not overlooked, along with continuous auditing of model outputs.

- Human rights protection involves balancing freedom of expression against content moderation and preventing misuse for surveillance or censorship.

- Sustainability calls for green AI, with optimized algorithms that reduce the carbon footprint.

- Ethical frameworks require interdisciplinary collaboration to develop industry standards that regulate development and deployment.

The Future of Large Language Models

- Multimodal fusion is becoming a trend, combining text, images, and audio to achieve richer human-computer interaction.

- Model compression is advancing: distillation and quantization methods allow large models to run on edge devices.

- Personalization is improving: models adapt to individual users' language habits and needs and become more specialized.

- Regulations and policies are gradually maturing, with countries introducing AI governance legislation to guide responsible innovation.

- The open source community drives democratization, lowering the technical barrier and encouraging adoption by small and medium-sized enterprises.

- Basic research focuses on overcoming the Transformer's limitations and exploring new architectures that improve efficiency and interpretability.
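
Of the compression methods mentioned above, quantization is the easiest to sketch. The example below shows one simple variant, symmetric 8-bit quantization with a single scale for the whole list of weights; the function names and weight values are assumptions for illustration, and production toolkits use more refined schemes such as per-channel scales or calibration data.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127 if largest > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]

# Hypothetical weights (assumed values).
weights = [0.42, -1.27, 0.05, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Storing each weight as an 8-bit integer instead of a 32-bit float cuts memory use to roughly a quarter, at the price of a small rounding error, which is what makes running on edge devices more practical.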

Large Language Models vs. Human Intelligence

- In language processing, models rely on statistical patterns and lack true understanding, whereas humans draw on emotion, context, and common sense.

- In learning, models are data-driven and require massive amounts of data, whereas humans can learn from a few examples and transfer what they learn.

- In creativity, models recombine existing knowledge, whereas humans can originate disruptive ideas.

- In error handling, models do not reflect on themselves, whereas humans can correct their beliefs through logical checks.

- In social interaction, models have no emotional resonance, whereas human communication includes nonverbal cues and empathy.

- In evolution, model updates depend on manual adjustment, whereas human intelligence is passed down from generation to generation through culture and education.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
