Federated Learning (FL) Explained in One Article

Definition of Federated Learning

Federated Learning is a machine learning approach, first proposed by a Google research team in 2016, that addresses challenges in data privacy and distributed computing. Unlike traditional machine learning, it does not require raw data to be collected on a central server; the data stays on local devices such as smartphones, IoT sensors, or edge computing nodes. Multiple client devices collaborate to train a shared model: a central server initializes a global model and distributes it to participating devices; each device trains the model on its local data and produces a model update (e.g., gradients or weight changes); these updates, typically encrypted, are sent back to the server; and the server aggregates them to improve the global model without ever accessing the raw data. This approach reduces the risk of data breaches and helps organizations comply with modern data protection regulations such as the GDPR. The name is inspired by the concept of federalism in political science, which emphasizes collaboration among entities that retain their autonomy. Application areas include healthcare, financial services, and smart devices, where data is sensitive and privacy is critical. Federated learning supports not only supervised learning but also unsupervised and reinforcement learning, pushing AI toward stronger privacy protection.
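The aggregation step can be written down concretely. The formulation below follows the federated averaging (FedAvg) scheme commonly used for this step; the symbols are introduced here only for illustration: $K$ clients, client $k$ holding a local dataset $\mathcal{D}_k$ of $n_k$ samples, a per-sample loss $\ell$, a learning rate $\eta$, $E$ local epochs, $F_b$ the average loss over a mini-batch $b$, and $S_t$ the set of clients selected in round $t$.

```latex
% Global objective: data-weighted average of the clients' local empirical losses
\min_{w} F(w) = \sum_{k=1}^{K} \frac{n_k}{n} F_k(w),
\qquad
F_k(w) = \frac{1}{n_k} \sum_{(x,y) \in \mathcal{D}_k} \ell(w; x, y),
\qquad
n = \sum_{k=1}^{K} n_k

% Local training on client k in round t: start from the global model w_t and
% take gradient steps on mini-batches b drawn from D_k for E local epochs
w^{k} \leftarrow w_t, \qquad
w^{k} \leftarrow w^{k} - \eta \, \nabla F_b(w^{k}), \qquad
w_{t+1}^{k} = w^{k} \text{ after } E \text{ epochs}

% Server aggregation: weight each returned model by its client's sample count
w_{t+1} = \sum_{k \in S_t} \frac{n_k}{m_t} \, w_{t+1}^{k},
\qquad
m_t = \sum_{k \in S_t} n_k
```

When every selected client holds the same number of samples, the aggregation rule reduces to a plain mean of the returned models.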

How Federated Learning Works

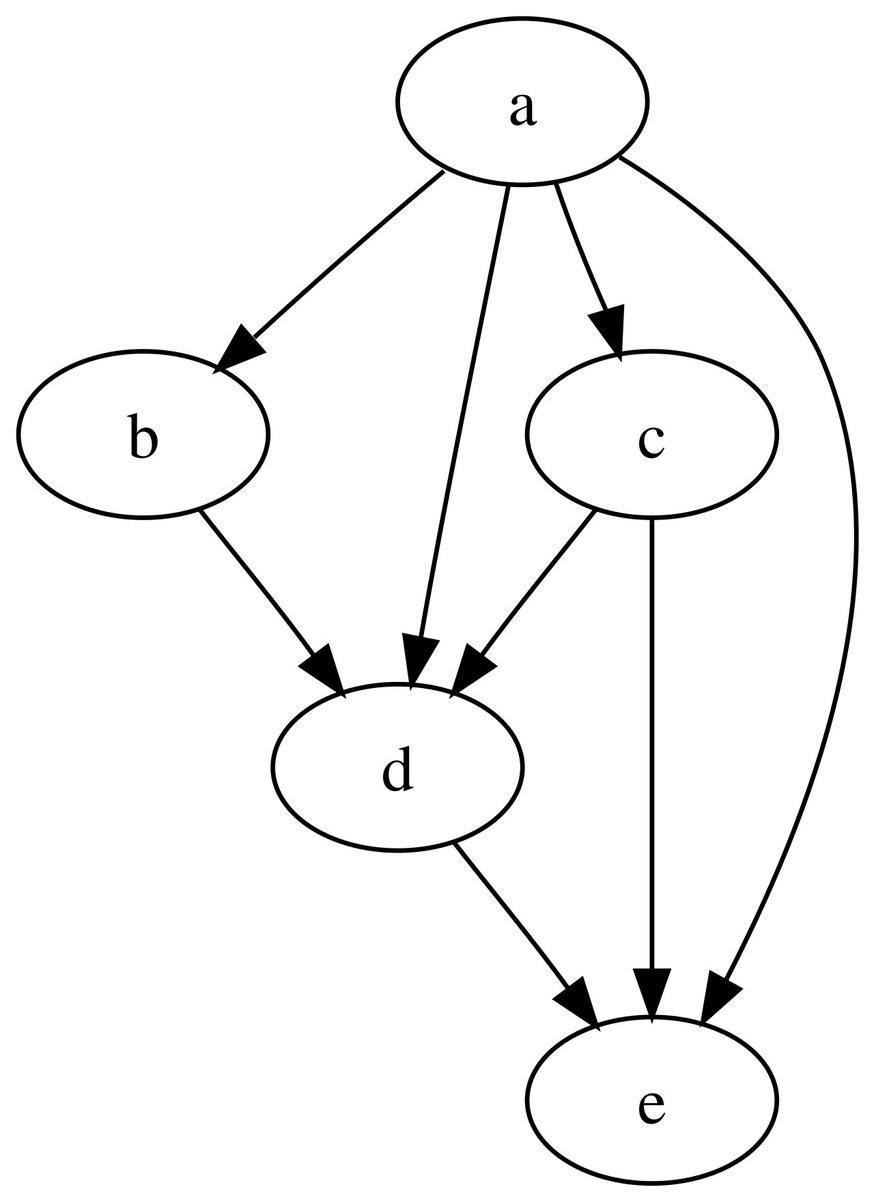

Federated learning combines distributed computing with privacy-preserving techniques, optimizing a global model through multiple rounds of collaborative training.

- The central server coordinates initialization: The central server first creates an initial global model (e.g., a neural network structure) and distributes it to participating devices as the starting point for training. The server coordinates the training process but has no direct access to any local data.

- Client devices train locally: Eligible devices (e.g., mobile phones, IoT terminals) download the global model and train it on their locally stored private data. All computation happens on the device, and the raw data never leaves it, which prevents data from flowing out at the source.

- Encrypted update upload: Each device uploads only encrypted and compressed model updates (e.g., gradients or parameter deltas) to the server. Compression reduces communication overhead, and encryption keeps the updates from leaking information if they are intercepted in transit.

- Secure aggregation mechanism: The server combines updates from many devices using an aggregation algorithm such as federated averaging (FedAvg), which computes a weighted average of the client models. When paired with a secure aggregation protocol, the parameters can be merged while they remain encrypted or masked, so the server cannot inspect any individual device's update.

- Multiple rounds of iterative optimization: The model improves by repeating the "distribute, train locally, upload, aggregate" cycle over many rounds. Training usually stops when the model reaches a target performance level or converges, yielding a global model that generalizes well.

- Differentiated allocation mechanisms: The system can dynamically adjust parameters such as the number of participating devices and the number of local training rounds to accommodate different network conditions and computing capabilities, keeping training stable and efficient.
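The round structure described above can be sketched in a few lines of plain Python. This is a toy simulation under stated assumptions, not a production framework: the model is a hypothetical one-dimensional linear regression `y = w0 * x + w1`, the client datasets are synthetic, encryption and compression are omitted, and the function names (`local_train`, `fed_avg`, `run_federated_training`, `make_client_data`) are illustrative only. The aggregation follows the sample-weighted federated averaging rule.

```python
import random


def local_train(weights, data, lr=0.2, epochs=5):
    """Client side: full-batch gradient descent on the mean squared error of
    the model y = w0 * x + w1, using only this client's (x, y) pairs.
    Only the updated weights are returned; the raw data never leaves."""
    w0, w1 = weights
    n = len(data)
    for _ in range(epochs):
        grad0 = sum(2 * (w0 * x + w1 - y) * x for x, y in data) / n
        grad1 = sum(2 * (w0 * x + w1 - y) for x, y in data) / n
        w0, w1 = w0 - lr * grad0, w1 - lr * grad1
    return [w0, w1]


def fed_avg(client_results):
    """Server side: average the returned weights, weighting each client by
    its number of local samples (the FedAvg aggregation rule)."""
    total = sum(n for _, n in client_results)
    dim = len(client_results[0][0])
    return [sum(w[i] * n for w, n in client_results) / total for i in range(dim)]


def run_federated_training(client_datasets, rounds=50, clients_per_round=3, seed=0):
    rng = random.Random(seed)
    global_weights = [0.0, 0.0]  # server initializes the global model
    for _ in range(rounds):  # repeat the distribute/train/upload/aggregate cycle
        selected = rng.sample(range(len(client_datasets)), clients_per_round)
        uploads = []
        for k in selected:
            data = client_datasets[k]
            # each selected client trains its own copy of the global model
            new_weights = local_train(list(global_weights), data)
            # only the weights and the sample count are "uploaded"
            uploads.append((new_weights, len(data)))
        global_weights = fed_avg(uploads)  # server aggregates the uploads
    return global_weights


def make_client_data(rng, n):
    """Hypothetical local dataset: n noise-free points on the line y = 2x + 1."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 2.0 * x + 1.0) for x in xs]


if __name__ == "__main__":
    data_rng = random.Random(42)
    clients = [make_client_data(data_rng, 20 + 10 * k) for k in range(5)]
    slope, intercept = run_federated_training(clients)
    print(f"slope={slope:.3f}, intercept={intercept:.3f}")  # should approach 2 and 1
```

The `rounds`, `clients_per_round`, `lr`, and `epochs` arguments correspond to the adjustable parameters mentioned in the last bullet; sampling only a subset of clients per round mirrors the fact that not every device is available at once.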

Advantages of Federated Learning

Federated learning brings several benefits over traditional methods, especially in terms of data privacy and efficiency.

- Privacy enhancement: Raw data always stays on the local device, avoiding the leakage risks associated with centralized storage and helping meet strict data regulations.

- Reduced communication costs: Transmitting only model updates rather than raw data can lower network bandwidth requirements, which matters especially for mobile devices and bandwidth-constrained environments.

- Use of decentralized data: Data from multiple sources can be leveraged to improve model generalization without being shared or centralized.

- Enhanced scalability: Training can run in parallel across a large number of devices, which suits IoT and edge computing scenarios and enables large-scale machine learning deployment.

- Enhanced user trust: Because the approach is transparent and privacy-friendly, users are more willing to take part in data-driven services, which helps AI applications spread.

Application Scenarios for Federated Learning

Federated learning is finding practical applications in multiple industries to address data silos and privacy concerns.

- Healthcare: Hospitals and research institutions collaborate to train disease diagnosis models while patient data stays at each originating institution, so sensitive medical information is never shared.

- Financial services: Banks use federated learning for fraud detection, combining data from different branches to improve model accuracy without exposing customers' transaction details.

- Smartphone input methods: Google Keyboard uses federated learning to improve its prediction models; users' typing habits are learned locally on the device, protecting personal privacy.

- Internet of Things and smart homes: Devices such as smart speakers and sensors collaborate to optimize energy management or voice recognition, with data processed at the edge to reduce dependence on the cloud.

- Self-driving cars: Vehicles share model updates to improve navigation systems without uploading their driving data, supporting security and privacy compliance.

Challenges of Federated Learning

Despite its advantages, federated learning also faces several technical and administrative challenges.

- Data heterogeneity: Data on different devices is often not independent and identically distributed (non-IID), which can bias training or make convergence difficult and calls for more advanced aggregation techniques.

- Communication bottlenecks: Frequently transmitting model updates consumes network resources, especially in rural or low-bandwidth areas, and slows down training.

- Device resource constraints: Client devices such as mobile phones may have limited computing power, battery life, or storage, which limits how much training they can do and how often they can participate.

- Security threats: Even though data is not centralized, model updates can still leak information, and the system may face inference attacks or malicious participants, so encryption and authentication mechanisms need to be strengthened.

- Coordination complexity: Managing a large number of asynchronous devices requires a robust server architecture and fault-tolerance mechanisms, which increases system design and maintenance costs.
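The non-IID problem in the first bullet is easiest to see in simulation. One common way to create label-skewed clients in experiments is to sort samples by label, cut them into equal-size shards, and give each client only a couple of shards. The sketch below contrasts that with an IID split; the function names and the toy label list are hypothetical, chosen only for illustration.

```python
import random
from collections import Counter


def iid_partition(labels, num_clients, seed=0):
    """Shuffle sample indices and deal them out round-robin, so every client
    sees roughly the global label distribution."""
    indices = list(range(len(labels)))
    random.Random(seed).shuffle(indices)
    return [indices[k::num_clients] for k in range(num_clients)]


def label_skew_partition(labels, num_clients, shards_per_client=2, seed=0):
    """Sort indices by label, cut them into equal-size shards, and hand each
    client a few random shards, so each client holds only a few labels."""
    rng = random.Random(seed)
    indices = sorted(range(len(labels)), key=lambda i: labels[i])
    num_shards = num_clients * shards_per_client
    shard_size = len(indices) // num_shards
    shards = [indices[s * shard_size:(s + 1) * shard_size] for s in range(num_shards)]
    rng.shuffle(shards)
    return [
        [i for shard in shards[k * shards_per_client:(k + 1) * shards_per_client] for i in shard]
        for k in range(num_clients)
    ]


if __name__ == "__main__":
    labels = [i % 10 for i in range(1000)]  # hypothetical: 10 classes, 100 samples each
    for name, parts in [("IID", iid_partition(labels, 10)),
                        ("non-IID", label_skew_partition(labels, 10))]:
        # number of distinct labels held by the first three clients
        print(name, [len(Counter(labels[i] for i in part)) for part in parts[:3]])
```

Under the skewed split each client sees at most two of the ten classes, so its local updates pull the global model toward those classes only, which is one source of the training bias and slow convergence described above.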

Security Mechanisms for Federated Learning

To secure the federated learning process, multiple techniques are integrated into the framework.

- Differential privacy: Adding calibrated noise to model updates makes it hard to infer information about individual data points from those updates, trading off privacy against model utility.

- Secure multi-party computation (SMC): Cryptographic protocols allow multiple devices to jointly compute the model aggregate without revealing their individual updates.

- Homomorphic encryption: The server performs aggregation directly on encrypted updates, and only the final result is decrypted, preventing leakage of intermediate data.

- Device authentication and access control: Only authorized devices can participate in training, which keeps malicious nodes out; authentication can be reinforced with digital certificates or blockchain technology.

- Auditing and logging: Monitoring the training process helps detect anomalous behavior such as model poisoning attacks and ensures system integrity and transparency.
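Two of the mechanisms above can be combined in a small sketch: each client clips its update and adds Gaussian noise (the basic step behind differentially private training), then hides the result with pairwise additive masks that cancel when the server sums all clients' vectors (one well-known way to realize secure aggregation with multi-party techniques). This is a toy under loud assumptions: the pairwise seeds come from a trusted setup rather than a key-agreement protocol, all clients are assumed to report (no dropout handling), the noise level is not calibrated to any formal privacy budget, and all names (`clip_and_noise`, `mask_update`, `secure_sum`, etc.) are hypothetical. Homomorphic encryption is not shown.

```python
import math
import random

MODULUS = 2**32  # masked values are integers modulo 2^32
SCALE = 10**6    # fixed-point scale: a float x is encoded as round(x * SCALE)


def clip_and_noise(update, clip_norm, noise_multiplier, rng):
    """DP-style step: scale the update so its L2 norm is at most clip_norm, then
    add Gaussian noise with std noise_multiplier * clip_norm to each coordinate."""
    norm = math.sqrt(sum(v * v for v in update))
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    sigma = noise_multiplier * clip_norm
    return [v * factor + rng.gauss(0.0, sigma) for v in update]


def encode(vec):
    """Fixed-point encode floats into integers in [0, MODULUS)."""
    return [round(v * SCALE) % MODULUS for v in vec]


def decode(vec):
    """Map values in [0, MODULUS) back to signed numbers and undo the scaling."""
    return [(v - MODULUS if v >= MODULUS // 2 else v) / SCALE for v in vec]


def pair_mask(seed, dim):
    """Pseudorandom mask that both members of a client pair regenerate from a shared seed."""
    rng = random.Random(seed)
    return [rng.randrange(MODULUS) for _ in range(dim)]


def mask_update(client_id, encoded, pair_seeds):
    """For each pair (i, j) with i < j, client i adds the shared mask and
    client j subtracts it, so every mask cancels in the server's sum."""
    masked = list(encoded)
    for (i, j), seed in pair_seeds.items():
        if client_id not in (i, j):
            continue
        sign = 1 if client_id == i else -1
        for d, m in enumerate(pair_mask(seed, len(encoded))):
            masked[d] = (masked[d] + sign * m) % MODULUS
    return masked


def secure_sum(masked_updates):
    """Server side: add the masked vectors modulo MODULUS. Each vector on its own
    looks random, but the masks cancel and the sum of the encoded updates remains."""
    dim = len(masked_updates[0])
    return [sum(u[d] for u in masked_updates) % MODULUS for d in range(dim)]


if __name__ == "__main__":
    rng = random.Random(0)
    updates = [[0.5, -1.2, 0.3], [0.1, 0.4, -0.2], [-0.3, 0.2, 0.6]]  # hypothetical
    private = [clip_and_noise(u, clip_norm=1.0, noise_multiplier=0.1, rng=rng) for u in updates]
    # Assumption: pairwise seeds were agreed in advance (real protocols use key agreement)
    seeds = {(i, j): rng.randrange(2**63) for i in range(3) for j in range(i + 1, 3)}
    masked = [mask_update(k, encode(u), seeds) for k, u in enumerate(private)]
    average = [t / len(updates) for t in decode(secure_sum(masked))]
    print([round(a, 4) for a in average])
```

Working modulo a fixed integer, rather than with floating-point masks, is what makes the cancellation exact; the fixed-point scale only has to be large enough that rounding error is negligible and small enough that the true sum fits in the signed range.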

The Evolution of Federated Learning

The concept and practice of federated learning have evolved from early exploration to maturity.

- Germination and early exploration (early 2010s): The theoretical foundations of federated learning come from research at the intersection of distributed machine learning and cryptography. As edge computing devices became widespread, researchers began exploring how to train models directly on end devices, laying the groundwork for federated learning architectures.

- Formalization of the concept (2016): Google's research team introduced the term "federated learning", described the approach systematically, and demonstrated its feasibility in real-world cases such as input prediction on mobile keyboards. This groundbreaking work attracted wide attention from industry and academia and set off a wave of systematic research.

- Algorithm optimization and breakthroughs (2017-2019): Research shifted toward practical deployment challenges such as non-IID data and communication efficiency. Core algorithms such as federated averaging significantly improved training efficiency, making federated learning applicable to a variety of scenarios.

- Open-source ecosystem and framework development (2020-present): The growth of open-source frameworks such as TensorFlow Federated and PySyft has dramatically lowered the barrier to entry. Industries such as healthcare and finance have begun deploying federated learning systems, moving the technology from the lab into practical applications.

- Standardization and ecosystem building (current stage): Standards bodies such as the IEEE have begun developing architectural frameworks and evaluation standards for federated learning, covering security specifications, performance metrics, and system compatibility. These efforts are laying a solid foundation for large-scale industrial adoption.

Federated versus Centralized Learning

Federated learning and traditional centralized learning differ along several dimensions.

- Data location: In federated learning, data stays distributed across clients; in centralized learning, it is pooled on a server. The former offers better privacy but requires more complex coordination.

- Communication mode: Federated learning repeatedly exchanges model updates between the server and clients over many rounds, whereas centralized learning uploads the data once; the two modes differ in cost and latency.

- Scalability: Federated learning is better suited to large-scale distributed environments, while centralized learning is limited by server capacity and scales less easily.

- Compliance: Federated learning aligns naturally with data localization regulations, whereas centralized learning requires additional measures to meet privacy requirements, which adds to the compliance burden.
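The communication trade-off can be estimated with back-of-the-envelope arithmetic. The sketch below assumes uncompressed updates and that every selected client downloads the full model and uploads an update of the same size each round; the function names and all example inputs are hypothetical. Neither mode is cheaper in general: federated traffic grows with model size, number of rounds, and participating clients, while centralized traffic grows with dataset size, and federated traffic never contains raw data.

```python
def federated_traffic_bytes(num_params, rounds, clients_per_round, bytes_per_param=4):
    """Total bytes moved in federated training, assuming every selected client
    downloads the full model and uploads an equally sized update each round,
    with no compression."""
    per_client_per_round = 2 * num_params * bytes_per_param  # download + upload
    return per_client_per_round * clients_per_round * rounds


def centralized_upload_bytes(num_samples, bytes_per_sample):
    """Total bytes moved in centralized training: each raw sample is uploaded once."""
    return num_samples * bytes_per_sample


if __name__ == "__main__":
    # Hypothetical inputs: a 1M-parameter float32 model, 100 rounds, 50 clients per round,
    # versus 10,000 raw samples of 3 MB each.
    fl = federated_traffic_bytes(num_params=1_000_000, rounds=100, clients_per_round=50)
    central = centralized_upload_bytes(num_samples=10_000, bytes_per_sample=3_000_000)
    print(f"federated: {fl / 1e9:.1f} GB, centralized: {central / 1e9:.1f} GB")
```

Update compression, fewer rounds, or sampling fewer clients per round all shrink the federated figure, which is why communication efficiency is a major research focus.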

Future Trends in Federated Learning

Future work on federated learning focuses on technological innovation and broader applications.

- Algorithmic progress: Research into more efficient aggregation methods and algorithms suited to non-IID data aims to improve convergence speed and model accuracy.

- Hardware integration: Combining federated learning with edge computing chips and 5G networks enables low-latency training and supports real-time applications such as augmented reality.

- Cross-technology integration: Federated learning can be combined with blockchain to strengthen auditing, or with federated databases to break down data silos.

- Standardization and regulation: Industry organizations are setting unified standards and governments are issuing guidance to promote compliant deployment of federated learning.

- User experience optimization: Simpler development tools and interfaces make federated learning easier for non-experts to implement and accelerate its adoption by small and medium-sized enterprises (SMEs).

Practical Examples of Federated Learning

In the real world, federated learning has been applied successfully in several projects.

- Google Keyboard project: Millions of user devices collaboratively train text prediction models, processing billions of inputs per day without uploading personal typing data.

- Medical image analysis: Multiple hospitals use federated learning to train cancer detection models while keeping data at each hospital, improving diagnostic accuracy and protecting patient privacy.

- Financial risk control systems: A banking consortium builds anti-fraud models through federated learning, sharing risk models without exchanging customer data to improve overall security.

- Smart city projects: Traffic sensors collaborate to optimize signal control; data is processed locally and only model updates are shared, helping reduce congestion.

- Industrial IoT: Manufacturing equipment predicts maintenance needs, and plants share model insights to avoid downtime while protecting proprietary operational data.

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
