What Is Cross-Validation? An Article to Help You Understand It

Definition of cross-validation

Cross-validation is a core method for evaluating the generalization ability of a model in machine learning. The basic idea is to split the original data into subsets and rotate which subsets are used for training and which for validation, which gives a more reliable performance estimate than a single train/test split. This approach simulates the model's performance on unseen data and helps detect overfitting.

The most common variant, K-fold cross-validation, randomly divides the data into K mutually exclusive subsets (folds). In each round, K-1 folds train the model and the remaining fold tests it; the process is repeated K times so that each fold serves as the test set exactly once, and the average of the K results is taken as the performance estimate. Leave-one-out cross-validation is the special case of K-fold where K equals the total number of samples. Stratified cross-validation keeps the class proportions in each fold consistent with the original data. Time series cross-validation respects the temporal order of the data. Repeated cross-validation reduces the variance of the estimate by repeating the random split several times.

Cross-validation results are used not only for model evaluation but also to guide hyperparameter tuning and model selection, providing a solid validation foundation for the machine learning process.
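
The K-fold procedure described above can be sketched in a few lines of standard-library Python. This is only an illustrative sketch: `k_fold_indices` is a hypothetical helper name (not from any library), and it returns index lists rather than training a model.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) pairs for K-fold cross-validation.

    Hypothetical helper for illustration; not a library function.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # random division of the data
    # Spread the remainder so that fold sizes differ by at most one.
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


folds = list(k_fold_indices(n_samples=10, k=5))
for train_idx, test_idx in folds:
    print("test:", sorted(test_idx), "train size:", len(train_idx))
```

Calling `k_fold_indices(n, n)` gives one sample per test fold, which is the leave-one-out case.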

The core idea of cross-validation

- Rotating use of data: Maximize data utilization by rotating which subset serves as the test set. Every sample is used for both training and testing, giving a comprehensive evaluation.

- Focus on generalization: Measure the model's performance on unseen data rather than how well it fits the training data. This kind of evaluation is closer to real application scenarios.

- Overfitting detection: Identify overfitting by comparing model performance on the training and validation sets. A large gap suggests a risk of overfitting.

- Stability check: Evaluate the model on several different splits of the data to test how stable the results are. Models whose scores fluctuate less are usually more reliable.

- Fair basis for comparison: Provide a unified evaluation framework for different algorithms, removing the comparison bias caused by the randomness of a single data split.

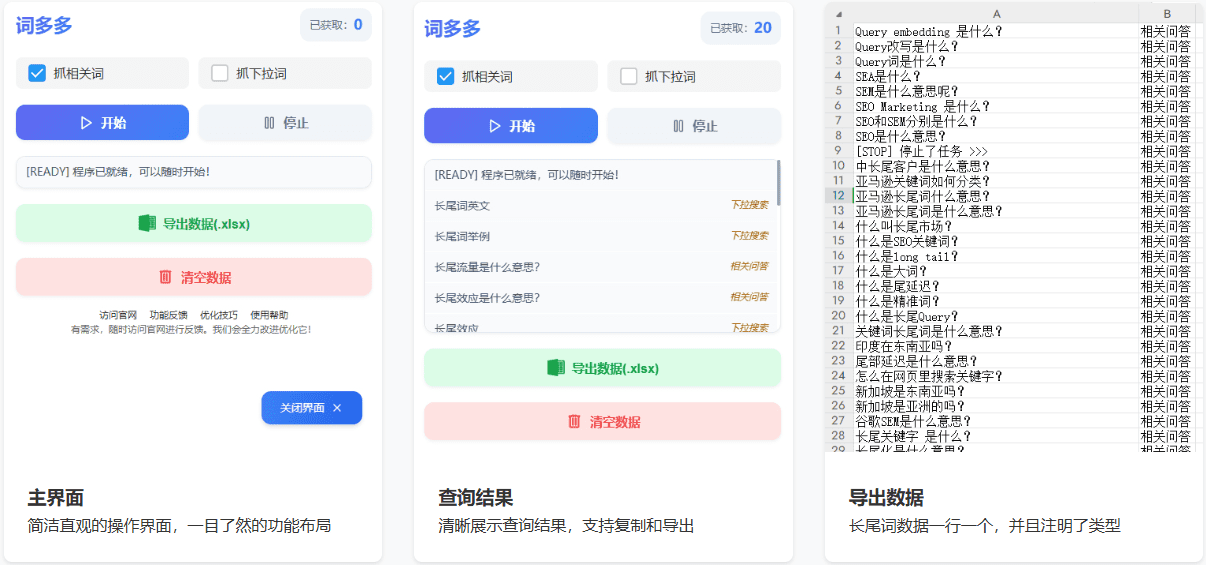
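
To make the overfitting-detection idea concrete, the sketch below compares training and validation accuracy across folds. It assumes a deliberately overfitting model (a 1-nearest-neighbour rule, which memorises its training data) and synthetic one-dimensional data with 20% label noise; the helper names `one_nn_predict` and `accuracy` are hypothetical and chosen only for this illustration.

```python
import random


def one_nn_predict(train, x):
    """Predict the label of x with a 1-nearest-neighbour rule."""
    nearest = min(train, key=lambda pair: abs(pair[0] - x))
    return nearest[1]


def accuracy(train, data):
    """Fraction of points in `data` labelled correctly by 1-NN fitted on `train`."""
    return sum(one_nn_predict(train, x) == y for x, y in data) / len(data)


# Synthetic data: label is 1 when x > 0.5, with 20% of labels flipped.
rng = random.Random(0)
data = []
for _ in range(200):
    x = rng.random()
    y = int(x > 0.5)
    if rng.random() < 0.2:
        y = 1 - y
    data.append((x, y))

k = 5
fold_size = len(data) // k
train_scores, val_scores = [], []
for fold in range(k):
    val = data[fold * fold_size:(fold + 1) * fold_size]
    train = data[:fold * fold_size] + data[(fold + 1) * fold_size:]
    train_scores.append(accuracy(train, train))  # score on data the model has seen
    val_scores.append(accuracy(train, val))      # score on held-out data

mean_train = sum(train_scores) / k
mean_val = sum(val_scores) / k
print(f"train={mean_train:.2f} val={mean_val:.2f} gap={mean_train - mean_val:.2f}")
```

Because 1-NN reproduces its own training labels, the training score is perfect while the validation score is not; that gap is the overfitting signal described above.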

Common approaches to cross-validation

- K-fold cross-validation: The data is divided into K subsets of roughly equal size and K rounds of training and testing are performed. K is usually 5 or 10, balancing computational cost against evaluation accuracy.

- Leave-one-out cross-validation: Only one sample at a time is held out as the test set and all the others are used for training. Suitable for small-sample scenarios, but the computational overhead is large because the model is trained once per sample.

- Stratified K-fold cross-validation: Keep the proportion of samples from each class in every fold consistent with the original dataset. Particularly suitable for data with imbalanced classes.

- Time series cross-validation: Split the training and test sets chronologically to respect the temporal dependence of the data, so that future data is never used to predict the past.

- Repeated random splitting: Randomly split the data into training and test sets several times and average the results. This further reduces the chance effect of a single random split.
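
The five approaches above map onto ready-made splitters in scikit-learn's `sklearn.model_selection` module. The sketch below assumes scikit-learn and NumPy are installed; the toy data (100 samples with an 80/20 class split) is made up purely for illustration.

```python
import numpy as np
from sklearn.model_selection import (
    KFold,
    LeaveOneOut,
    RepeatedKFold,
    StratifiedKFold,
    TimeSeriesSplit,
)

X = np.arange(100).reshape(-1, 1)   # 100 samples, 1 feature (toy data)
y = np.array([0] * 80 + [1] * 20)   # imbalanced labels: 80 vs 20

kfold = KFold(n_splits=5, shuffle=True, random_state=0)
loo = LeaveOneOut()
stratified = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
time_series = TimeSeriesSplit(n_splits=4)
repeated = RepeatedKFold(n_splits=5, n_repeats=3, random_state=0)

print("K-fold rounds:", kfold.get_n_splits(X))
print("Leave-one-out rounds:", loo.get_n_splits(X))
print("Repeated K-fold rounds:", repeated.get_n_splits(X))

# Stratified folds keep the class ratio in every test fold.
minority_per_fold = [int(y[test].sum()) for _, test in stratified.split(X, y)]
print("Minority samples per stratified test fold:", minority_per_fold)

# Time series folds always train on the past and test on the future.
ts_ok = all(train.max() < test.min() for train, test in time_series.split(X))
print("Training data precedes test data in every split:", ts_ok)
```

Each splitter exposes the same `split` method, so swapping one validation strategy for another usually means changing a single line.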

Implementation steps for cross-validation

- Data preparation: Check data quality and handle missing values and outliers. Make sure the data is in a consistent, usable format.

- Choosing the number of folds: Select an appropriate number of folds according to the size of the dataset and the available computational resources. Large datasets can use fewer folds; small datasets need more folds.

- Data splitting: Split the data into training and test sets according to the selected method. Stratified methods must preserve the class distribution in every fold.

- Train/validate loop: In each round, train the model on the training folds and evaluate it on the held-out fold. Record the performance metrics for each round.

- Summarizing the results: Calculate the mean and standard deviation of the performance metrics across all rounds. Analyze the stability and reliability of the results.
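
The five steps above can be walked through end to end with only the standard library. This is a minimal sketch under stated assumptions: the data is synthetic (y = 2x + 1 plus Gaussian noise), the model is a simple least-squares line, and `fit_line` and `mse` are hypothetical helper names.

```python
import random
import statistics


def fit_line(points):
    """Least-squares fit of y = a * x + b on a list of (x, y) pairs."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = sxy / sxx
    return a, mean_y - a * mean_x


def mse(model, points):
    """Mean squared error of the fitted line on the given points."""
    a, b = model
    return sum((a * x + b - y) ** 2 for x, y in points) / len(points)


# Step 1: prepare the data (synthetic here, so nothing needs cleaning).
rng = random.Random(42)
data = []
for _ in range(60):
    x = rng.uniform(0, 10)
    data.append((x, 2 * x + 1 + rng.gauss(0, 0.5)))

# Step 2: choose the number of folds.
k = 5

# Step 3: shuffle and split the data into k folds.
rng.shuffle(data)
folds = [data[i::k] for i in range(k)]

# Step 4: train on k-1 folds, evaluate on the held-out fold, record the score.
scores = []
for i in range(k):
    test = folds[i]
    train = [p for j, fold in enumerate(folds) if j != i for p in fold]
    scores.append(mse(fit_line(train), test))

# Step 5: summarize with mean and standard deviation.
mean_mse = statistics.mean(scores)
std_mse = statistics.stdev(scores)
print(f"MSE per fold: {[round(s, 3) for s in scores]}")
print(f"mean={mean_mse:.3f} std={std_mse:.3f}")
```

Reporting the standard deviation next to the mean shows how much the estimate depends on which fold was held out.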

Advantages of cross-validation

- Efficient use of data: Makes the most of limited data, with each sample participating in both training and testing. Especially valuable for small datasets.

- Reliable estimates: Averaging over multiple validation rounds reduces the variance of the evaluation and gives a more stable performance estimate. More convincing than a single split.

- Sensitivity to overfitting: Effectively detects when a model overfits the training data, giving clear direction for model improvement.

- Wide applicability: Suitable for a wide range of machine learning algorithms and task types, from classification and regression to clustering.

- Relatively simple to implement: The concepts are clear and easy to understand, and the code is not complicated. Mainstream machine learning libraries provide ready-to-use implementations.

Limitations of cross-validation

- Higher computational cost: The model must be trained multiple times, and the time overhead grows roughly linearly with the number of folds. May become impractical on large datasets.

- Data independence assumption: Standard cross-validation assumes that samples are independent of each other and ignores possible correlations in the data. Scenarios such as time series require special treatment.

- Small-sample limitations: Of limited effectiveness on very small datasets, where methods other than leave-one-out are difficult to apply.

- Dependence on model stability: Evaluation results for unstable algorithms fluctuate more, so more repetitions are needed to obtain reliable estimates.
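
The computational cost mentioned above is easy to estimate up front. As a rough sketch, assuming every training run costs about the same, the number of model fits is the product of folds, repetitions, and candidate configurations; `total_model_fits` is a hypothetical helper name.

```python
def total_model_fits(n_folds, n_repeats=1, n_candidates=1):
    """Number of training runs for (repeated) K-fold over a set of candidates."""
    return n_folds * n_repeats * n_candidates


print(total_model_fits(5))                     # 5
print(total_model_fits(10))                    # 10
print(total_model_fits(10, n_repeats=10))      # 100
print(total_model_fits(5, n_candidates=12))    # 60
print(total_model_fits(1000))                  # leave-one-out on 1,000 samples: 1000
```

Multiplying the result by the time of a single training run gives a first estimate of whether a given setup is practical.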

Practical applications of cross-validation

- Model selection: Compare the performance of different algorithms under the same cross-validation framework and select the best model. This keeps the comparison fair and reliable.

- Hyperparameter tuning: Combine cross-validation with methods such as grid search to find the best hyperparameter combination. Each candidate combination is evaluated across multiple folds.

- Feature Engineering Validation: Evaluate the impact of different feature combinations on model performance. Identify the most valuable subset of features.

- Evaluation in algorithm research: Provides a standardized performance evaluation protocol for academic research, helping make results reproducible and comparable.
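
As an illustration of hyperparameter tuning with cross-validation, the sketch below uses scikit-learn's `GridSearchCV`. It assumes scikit-learn is installed; the synthetic dataset, the logistic regression model, and the candidate values for `C` are illustrative choices, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, StratifiedKFold

# Synthetic classification data, used only for illustration.
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=5, random_state=0
)

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
param_grid = {"C": [0.01, 0.1, 1.0, 10.0]}  # illustrative candidates

# Every candidate value of C is scored with the same 5 stratified folds.
search = GridSearchCV(
    LogisticRegression(max_iter=1000),
    param_grid=param_grid,
    cv=cv,
    scoring="accuracy",
)
search.fit(X, y)

print("best C:", search.best_params_["C"])
print("best mean CV accuracy:", round(search.best_score_, 3))
```

Passing the same `cv` object to every model being compared keeps the folds identical, which is what makes the comparison fair.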

Parameter selection for cross-validation

- Choosing the number of folds K: 5 or 10 folds are common choices. K can be reduced to 3 for very large datasets, and leave-one-out can be considered for very small datasets.

- Stratification: Stratified cross-validation is recommended for classification problems to keep the class distribution consistent across folds.

- Random seed: Fix the random seed to make results reproducible, and also try different seeds to test stability.

- Number of repetitions: For high-variance algorithms, increasing the number of repetitions improves the reliability of the evaluation. Typically 10-100 repetitions.

- Shuffling: Non-time-series data is usually shuffled randomly before splitting, whereas time series data must be kept in order.
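
The effect of the random seed and of repetition can be seen in a small standard-library sketch. It assumes a trivial baseline model that always predicts the training mean, and synthetic Gaussian data; `cv_mse` is a hypothetical helper name.

```python
import random
import statistics


def cv_mse(values, k, seed):
    """Mean K-fold MSE of a 'predict the training mean' baseline."""
    shuffled = values[:]
    random.Random(seed).shuffle(shuffled)  # the seed controls the split
    folds = [shuffled[i::k] for i in range(k)]
    fold_scores = []
    for i, test in enumerate(folds):
        train = [v for j, fold in enumerate(folds) if j != i for v in fold]
        prediction = statistics.mean(train)
        fold_scores.append(statistics.mean([(v - prediction) ** 2 for v in test]))
    return statistics.mean(fold_scores)


rng = random.Random(0)
values = [rng.gauss(0, 1) for _ in range(50)]

# Fixed seed: the same split, and therefore the same score, on every run.
same_seed = cv_mse(values, k=5, seed=123) == cv_mse(values, k=5, seed=123)
print("reproducible with a fixed seed:", same_seed)

# Different seeds: repeat the procedure and inspect the spread of the scores.
repeated_scores = [cv_mse(values, k=5, seed=s) for s in range(10)]
print(f"mean over 10 repetitions: {statistics.mean(repeated_scores):.3f}")
print(f"std over 10 repetitions:  {statistics.stdev(repeated_scores):.3f}")
```

A small spread across seeds suggests the estimate is stable; a large one is a sign that more repetitions or more data may be needed.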

Considerations for cross-validation

- Preventing data leakage: Make sure no information from the test set enters the training process. Preprocessing such as feature scaling should be fitted on the training folds only and then applied to the test fold.

- Maintaining class balance: Use stratified sampling or appropriate evaluation metrics on imbalanced data, so that performance on minority classes is not misjudged.

- Computational efficiency: Speed up multi-fold validation with parallel computing, since the folds can be trained independently, to take advantage of modern hardware.

- Cautious interpretation of results: Cross-validation estimates average performance and does not represent performance on any specific subset. The results need to be analyzed in context.

- Use of domain knowledge: Choose a validation method that fits the data characteristics and business context. Medical data, time series, and similar cases require specialized handling.
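
One common way to guard against leakage and class imbalance at the same time is to put preprocessing inside a pipeline and evaluate it with stratified folds. The sketch below assumes scikit-learn is installed; the synthetic imbalanced dataset, the model, and the `balanced_accuracy` metric are illustrative choices.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic imbalanced data (about 85% / 15%), used only for illustration.
X, y = make_classification(
    n_samples=400, n_features=8, weights=[0.85, 0.15], random_state=0
)

# The scaler is part of the pipeline, so it is re-fitted on the training
# portion of every fold and only applied (not fitted) to the held-out portion.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
scores = cross_val_score(model, X, y, cv=cv, scoring="balanced_accuracy")

print("balanced accuracy per fold:", scores.round(3))
print(f"mean={scores.mean():.3f} std={scores.std():.3f}")
```

`cross_val_score` also accepts an `n_jobs` argument to run the folds in parallel, which addresses the efficiency point above.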

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
