Microsoft launches Correction tool: can it end the crisis of trust caused by AI hallucinations?

Microsoft recently launched an AI tool called 'Correction', which aims to address the long-standing problem of 'AI hallucination' in generative AI models. AI hallucination refers to errors, inaccuracies, or fabricated information in AI-generated content. This not only makes users question the credibility of AI, but also limits its use in fields that require a high degree of accuracy. Correction is integrated into Microsoft's Azure AI Content Safety API and aims to help users reduce the negative impact of such issues.

How does the Correction tool work?

The Correction tool employs two models working in tandem. A classifier model first flags generated content that may be incorrect, fabricated, or irrelevant. A language model then compares the flagged content against a set of 'facts' designated as trusted and corrects it accordingly. This two-stage approach is intended to improve the accuracy and reliability of AI-generated content. According to Microsoft, Correction not only supports its own generative models, but can also be used with mainstream generative models such as Meta's Llama and OpenAI's GPT-4.
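The flag-then-correct flow described above can be illustrated with a small toy sketch. This is not Microsoft's implementation and does not call the Azure API: the function names, the `Finding` type, and the 0.9 threshold are all hypothetical, a simple token-overlap score stands in for the classifier model, and picking the closest trusted sentence stands in for the language model's rewrite.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Finding:
    sentence: str               # sentence taken from the generated text
    grounded: bool              # True if the trusted sources support it
    correction: Optional[str]   # closest trusted sentence when not grounded


def tokens(text: str) -> set:
    """Lowercased alphanumeric tokens of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def split_sentences(text: str) -> List[str]:
    """Naive sentence splitter on ., ! and ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def support_score(sentence: str, source: str) -> float:
    """Fraction of the sentence's tokens that also appear in the source."""
    sent_tokens = tokens(sentence)
    if not sent_tokens:
        return 1.0
    return len(sent_tokens & tokens(source)) / len(sent_tokens)


def check_and_correct(generated: str, grounding_docs: List[str],
                      threshold: float = 0.9) -> List[Finding]:
    """Hypothetical two-stage check: flag unsupported sentences, then
    propose the closest trusted sentence as a correction."""
    sources = [s for doc in grounding_docs for s in split_sentences(doc)]
    findings = []
    for sentence in split_sentences(generated):
        # Stage 1 (stand-in for the classifier): score against the sources.
        best = max(sources, key=lambda src: support_score(sentence, src),
                   default=None)
        score = support_score(sentence, best) if best is not None else 0.0
        if score >= threshold:
            findings.append(Finding(sentence, True, None))
        else:
            # Stage 2 (stand-in for the language model): suggest a fix.
            findings.append(Finding(sentence, False, best))
    return findings


if __name__ == "__main__":
    docs = ["The invoice is due on 30 June. The total is 400 euros."]
    answer = "The invoice is due on 30 June. The total is 900 euros."
    for finding in check_and_correct(answer, docs):
        print(finding)
```

In this toy version the second sentence is flagged because "900" does not appear in the trusted text, and the matching trusted sentence is offered in its place. A real system would need far more than word overlap to judge whether a claim is supported.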

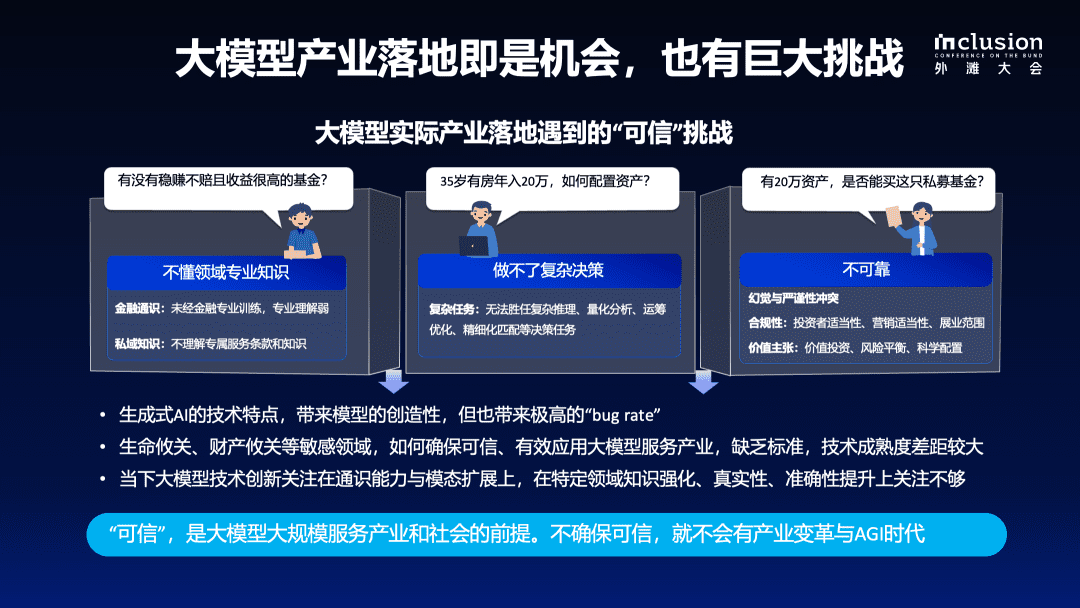

The conundrum of AI hallucinations remains

While the Correction tool offers a partial answer to the AI hallucination problem, experts still have reservations about it. Os Keyes, a researcher at the University of Washington, pointed out that trying to eliminate AI hallucinations is like trying to remove hydrogen from water, because hallucination is inherent to the way generative AI works. Other experts have warned that even if Correction does reduce hallucinations, it could give users who rely heavily on AI a false sense of security.

The launch of the Correction tool also reflects the generative AI industry's strong concern about content safety and accuracy. In fields such as healthcare and law, where accuracy is essential, AI hallucinations can have serious consequences. Microsoft says Correction can help developers use AI technology more effectively in these critical areas, reducing user dissatisfaction and potential reputational risk.

Trust and the future of AI

Microsoft's Correction tool is just one step in tackling the problem of AI hallucinations. While it may not completely solve this complex technical challenge, it is a notable step toward making generative AI more credible. As the technology improves and other safety measures are introduced, AI may gradually win the trust of more users. Industry experts nonetheless advise users to remain careful when relying on AI-generated content and to verify the information where necessary.

© Copyright notes

This article is copyright AI Sharing Circle. All rights reserved; please do not reproduce without permission.
