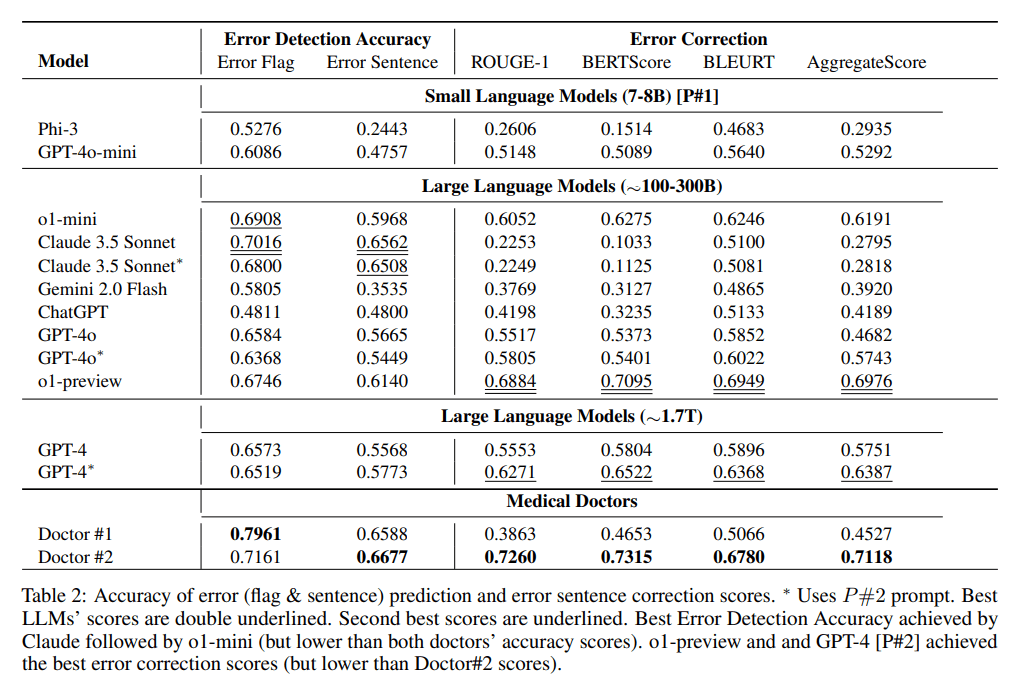

Leaked Microsoft paper: only 8B for GPT-4o-mini and 100B for o1-mini?

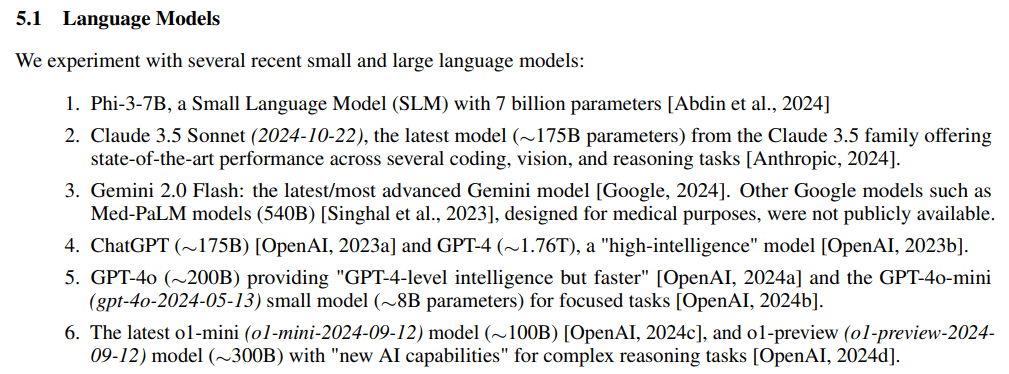

There has been an ongoing discussion about the parameter sizes of mainstream closed-source LLMs, and in the last 2 days of 2024 an article from Microsoft about theDetection and correction of medical errors in clinical notesconjectureteststandard of referenceThe MEDEC study accidentally and directly missed the scale of their parameters:o1-preview, GPT-4.GPT-4o andClaude 3.5 Sonnet.

Paper address: https://arxiv.org/pdf/2412.19260v1

The experimental part of the experiment also divides the large model parameter scales into 3 blocks:7-8B, ~100-300B, ~1.7Tbut (not)GPT-4o-miniBeing placed in the first slot with only 8B is a bit unbelievable.

summarize

- Claude 3.5 Sonnet (2024-10-22), ~175B

- ChatGPT, ~175B

- GPT-4, approximately 1.76T

- GPT-4o, ~200B

- GPT-4o-mini (gpt-4o-2024-05-13) only 8B

- Latest o1-mini (o1-mini-2024-09-12) only 100B

- o1-preview (o1-preview-2024-09-12) ~300B

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related posts

No comments...