Microsoft Magma Model: An AI Agent That Handles UI Operations and Robot Control

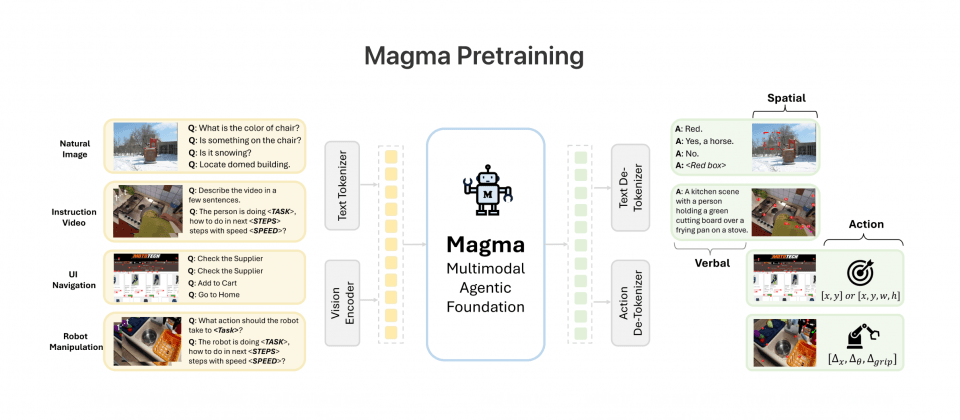

Microsoft Research recently released Magma, a foundation model for multimodal AI agents. Magma is a multi-skilled model: it not only "reads" images and "understands" language as humans do, but can also directly operate user interfaces (UIs) and control robots. This goes beyond earlier vision-language models, which could only interpret images statically, and opens up new possibilities for interactive AI applications.

According to Microsoft, Magma's main strength is that a single model can handle a wide range of interactive tasks in both the digital and physical worlds. Magma also generalizes well: Microsoft reports that it outperforms existing specialized models without domain-specific fine-tuning. This suggests that Magma could become a cornerstone for general-purpose AI agents and substantially reduce the cost of developing and deploying AI applications.

Magma's Secret Sauce: SoM and ToM Technologies

The secret weapon that makes the Magma model so powerful is the two core technologies it employs: Set-of-Mark (SoM) and Trace-of-Mark (ToM).

Set-of-Mark (SoM): Magma's understanding of interactive elements is built on this "set of marks" technique. Simply put, it "tags" the objects in an image that can be manipulated, such as buttons on a user interface (UI) or a robotic arm in a real-world scene. This lets the AI recognize the interactive elements in the image more accurately and act on them. In UI operation, for example, SoM enables Magma to identify the clickable buttons on a web page or in an app and to follow user commands through multi-step processes such as online shopping or filling out forms. In robot control, SoM gives Magma the ability to perceive its environment: it determines the location and characteristics of objects and then controls the robotic arm to perform fine operations such as grasping, moving, and placing objects.
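The tagging idea can be illustrated with a small Python sketch. This is not Magma's implementation. It only assumes that candidate elements arrive as bounding boxes, that each box receives a numeric mark, and that a model answers with a mark number which is then mapped back to a click point. The names `Box`, `assign_marks`, and `mark_to_click` are hypothetical and chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixel coordinates (hypothetical type)."""
    x0: int
    y0: int
    x1: int
    y1: int

    def center(self) -> tuple[int, int]:
        # Integer midpoint of the box, used here as the click target.
        return ((self.x0 + self.x1) // 2, (self.y0 + self.y1) // 2)


def assign_marks(boxes: list[Box]) -> dict[int, Box]:
    """Give every candidate element a numeric mark, starting at 1."""
    return {mark: box for mark, box in enumerate(boxes, start=1)}


def mark_to_click(marks: dict[int, Box], chosen_mark: int) -> tuple[int, int]:
    """Turn a mark number chosen by a model into a click point."""
    if chosen_mark not in marks:
        raise KeyError(f"unknown mark {chosen_mark}")
    return marks[chosen_mark].center()


# Two candidate buttons, e.g. from a UI element detector (assumed input).
candidates = [Box(10, 10, 110, 50), Box(10, 70, 110, 110)]
marks = assign_marks(candidates)

# Suppose the model answered "mark 2"; map that back to pixel coordinates.
click = mark_to_click(marks, 2)
print(click)
```

The point of the design is that the model chooses among a small set of discrete marks instead of predicting raw pixel coordinates, and the mapping back to coordinates is a simple lookup.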

Trace-of-Mark (ToM): This "trace of marks" technique focuses on teaching Magma how things move over time. By labeling the movement trajectories of marked objects, it gives the AI a deeper understanding of how objects change along the timeline. ToM lets Magma predict future actions, for example by determining the best path for a robotic arm to take during a task, or by analyzing the behavior of a person in a video to plan the next move more accurately. Compared with traditional frame-by-frame prediction, ToM uses fewer tokens to capture changes over a longer time range, which improves the AI's decision-making in dynamic scenes and reduces interference from environmental noise.
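A rough Python sketch of the trajectory idea follows. It is not Magma's actual pipeline. It assumes each video frame is already reduced to a mapping from mark number to pixel position, and it collects, for each mark, the positions over the next few frames as one compact trace. The displacement threshold used to drop static marks is an illustrative assumption, as are the function names `build_traces` and `moving_marks`.

```python
from typing import Dict, List, Tuple

Point = Tuple[int, int]


def build_traces(
    frames: List[Dict[int, Point]], start: int, horizon: int
) -> Dict[int, List[Point]]:
    """For each mark visible at frame `start`, collect its positions
    over the next `horizon` frames as a single trace."""
    traces: Dict[int, List[Point]] = {}
    for mark in frames[start]:
        trace: List[Point] = []
        for frame in frames[start + 1 : start + 1 + horizon]:
            if mark not in frame:
                break  # the mark was lost; end its trace early
            trace.append(frame[mark])
        traces[mark] = trace
    return traces


def moving_marks(
    start_positions: Dict[int, Point],
    traces: Dict[int, List[Point]],
    min_shift: int = 2,
) -> List[int]:
    """Keep marks whose end point moved at least `min_shift` pixels
    (Manhattan distance) from the start; static marks are dropped."""
    kept = []
    for mark, trace in traces.items():
        if not trace:
            continue
        (x0, y0), (x1, y1) = start_positions[mark], trace[-1]
        if abs(x1 - x0) + abs(y1 - y0) >= min_shift:
            kept.append(mark)
    return kept


# Three frames: mark 1 moves to the right, mark 2 stays where it is.
frames = [
    {1: (0, 0), 2: (5, 5)},
    {1: (2, 0), 2: (5, 5)},
    {1: (4, 1), 2: (5, 5)},
]
traces = build_traces(frames, start=0, horizon=2)
print(traces)                           # one short coordinate list per mark
print(moving_marks(frames[0], traces))  # marks that actually moved
```

Each mark contributes only a short list of coordinates for the whole horizon, which is the sense in which a trace is more compact than predicting every future frame in full.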

Magma in Action: Top Results Across Multiple Benchmarks

To validate Magma's strengths, the researchers ran several rigorous benchmark tests. According to the reported results, Magma performed strongly across them.

In user interface (UI) operation, Magma achieved high accuracy on both the Mind2Web and AITW benchmarks. This demonstrates its ability to operate complex web pages and mobile app interfaces, carrying out tasks such as web browsing and app navigation much as a real user would.

In robot control, Magma outperforms the existing robotics vision-language model OpenVLA in the WidowX and LIBERO tests. The results show that Magma can successfully perform complex tasks such as soft-object manipulation and pick-and-place of solid objects, and that it generalizes well and remains stable in both known and unknown environments. This suggests that Magma could have a significant impact on industrial and service robotics, in areas such as automated production lines, intelligent logistics, and home services.

Zero-Shot and Few-Shot Learning: Adapting Rapidly to New Environments

Another highlight of Magma is its strong zero-shot and few-shot learning capability. Magma can be applied directly to new, previously unseen environments without additional time-consuming fine-tuning. Test data show that Magma can complete a full task flow in a zero-shot setting, both in user interface (UI) operation and in robotics tasks. This lowers the barrier to adoption and makes Magma quicker and easier to deploy in real-world scenarios.

Beyond user interface (UI) operation and robotics, Magma has also shown its strength in visual question answering, temporal reasoning, and other tasks. In spatial reasoning tests in particular, Magma even outperformed GPT-4o, which is widely regarded as the industry benchmark. Microsoft also noted that spatial reasoning remains a challenging problem for GPT-4o, yet Magma handles such problems better even though it was pre-trained on far less data. This makes Magma's future development something to look forward to.

All in all, the release of Microsoft's Magma model is another milestone in multimodal AI. With its SoM and ToM techniques and its strong zero-shot and few-shot learning capabilities, Magma is expected to lead the development of a new generation of AI agents and to drive major advances in user interface (UI) interaction, robot control, and a wider range of AI applications.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
