Microsoft Azure, GitHub and Copilot+ PCs add support for DeepSeek R1 models

On January 30, 2025, Microsoft announced that DeepSeek's R1 model is now available to developers on its Azure cloud computing platform and on GitHub. Microsoft also said that customers will soon be able to run the R1 model locally on their Copilot+ PCs.

As we reported earlier, Copilot is gradually adopting large models other than OpenAI's:

Microsoft has been in the news a lot recently. One of the most notable items is that GitHub Copilot now has a free tier. Its quotas are modest (2,000 code completions and 50 chat messages per month), but it is still a landmark event, and the available models have expanded from GPT-4o alone to o1, Claude, Gemini and others.

What this really signals is that Microsoft is reducing its reliance on OpenAI's technology. In a sense, OpenAI no longer offers the single best model, and its models are probably no longer the most cost-effective to operate either.

DeepSeek's influence has recently grown rapidly around the world. A series of eye-catching new features keeps coming online, and DeepSeek has also intensified the fierce AI competition between China and the United States. To give Copilot better Chinese-language support, Microsoft is preparing to use DeepSeek's R1 model in Copilot.

Microsoft CEO Satya Nadella also emphasized on the call that DeepSeek R1 models are now available through Microsoft's AI platform Azure AI Foundry and through GitHub, and will soon run on Copilot+ PCs. Nadella said DeepSeek "has some real innovations" and that the cost of AI is trending down: "Scaling laws continue to compound across both pre-training and inference-time compute. Over the years, we've seen significant improvements in efficiency in AI training and inference. On the inference side, we typically see more than a 2x improvement in price/performance per generation of hardware and more than a 10x improvement in price/performance per generation of models."

We are happy to see that.

Copilot for Microsoft 365 is Copilot built natively into the Office 365 apps (Word, Excel, PowerPoint, OneNote, Teams, etc.). It can generate content in Word based on other files, generate formulas and calculations in Excel, create presentations in PowerPoint from other documents, organize notes and generate new ones in OneNote, summarize meetings in Teams, and more.

Related reading 1: "Running Distilled DeepSeek R1 Models Locally on Copilot+ PCs"

AI is moving to the edge, and Copilot+ PCs are leading the way. With cloud-hosted DeepSeek R1 now live on Azure AI Foundry (see Related reading 2), we're bringing an NPU-optimized version of DeepSeek R1 directly to Copilot+ PCs, starting with the Qualcomm Snapdragon X platform and followed by platforms such as Intel Core Ultra 200V. The first release, DeepSeek-R1-Distill-Qwen-1.5B, will be available in the AI Toolkit, with 7B and 14B versions to follow. These optimized models let developers build and deploy AI applications that run efficiently on the device and take full advantage of the NPU in Copilot+ PCs.

The Neural Processing Unit (NPU) in Copilot+ PCs provides a highly efficient engine for model inference. It opens up a new paradigm in which generative AI not only runs on demand but can also power semi-continuously running services, letting developers build proactive, continuous experiences on top of a powerful inference engine. Building on our work on the Phi Silica project, we achieved efficient inference with competitive time to first token and throughput, while minimizing the impact on battery life and system resources. The NPU-optimized DeepSeek models reuse several key techniques from that project: a modular model design that balances performance and efficiency, low-bit-width quantization, and a strategy for mapping Transformers onto the NPU. In addition, we use the Windows Copilot Runtime (WCR) and the ONNX QDQ format to scale across the Windows ecosystem.

Experience it now!

Getting started

To try DeepSeek on a Copilot+ PC, simply install the AI Toolkit extension for VS Code. Optimized DeepSeek models in the ONNX QDQ format will soon appear in the AI Toolkit model catalog, pulled directly from Azure AI Foundry. Click the "Download" button to download a model locally. Once it is downloaded, open the Playground, load the "deepseek_r1_1_5" model, and start experimenting with prompts.

In addition to the ONNX model optimized for Copilot+ PCs, you can also try the original cloud-hosted model in Azure AI Foundry by clicking the "Try in Playground" button under "DeepSeek R1".

The AI Toolkit is part of the developer workflow, supporting both model experimentation and deployment readiness. With this Playground, you can easily test the DeepSeek models available in Azure AI Foundry for local deployment.

Chip-level optimization

The distilled Qwen 1.5B model consists of a tokenizer, an embedding layer, a context-processing model, a token-iteration model, a language-model head, and a detokenizer.
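To make the division of labour concrete, here is a toy sketch of how these six stages could fit together in a generation loop. Every component is a hypothetical stand-in (a character-level tokenizer and a fake "model" that simply predicts the next character code), not the real ONNX graphs. Only the stage boundaries follow the description: the context-processing model runs once over the whole prompt, and the token-iteration model then runs once per generated token against the cached state.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical stand-ins for the six stages; none of these are the real models.

def tokenize(text: str) -> List[int]:
    # Toy tokenizer: one token per character (its code point).
    return [ord(c) for c in text]

def detokenize(ids: List[int]) -> str:
    return "".join(chr(i) for i in ids)

def embed(token_id: int) -> float:
    # Toy embedding: one number per token (the text places embeddings on the CPU).
    return float(token_id)

@dataclass
class KVCache:
    # Stand-in for the key/value cache shared by prefill and decode.
    values: List[float] = field(default_factory=list)

def context_processing(embeddings: List[float], cache: KVCache) -> float:
    # Prefill (NPU in the text): consume the whole prompt once, fill the cache.
    cache.values.extend(embeddings)
    return cache.values[-1]

def token_iteration(embedding: float, cache: KVCache) -> float:
    # Decode (NPU in the text): process exactly one new token per step.
    cache.values.append(embedding)
    return embedding

def lm_head(hidden: float) -> int:
    # Toy language-model head (CPU in the text): "predict" the next code point.
    return int(hidden) + 1

def generate(prompt: str, max_new_tokens: int) -> str:
    cache = KVCache()
    hidden = context_processing([embed(t) for t in tokenize(prompt)], cache)
    out: List[int] = []
    for _ in range(max_new_tokens):
        next_id = lm_head(hidden)
        out.append(next_id)
        hidden = token_iteration(embed(next_id), cache)
    return detokenize(out)

print(generate("abc", 3))  # prints "def" with these toy components
```

Splitting prefill from decode like this is what lets each part be compiled for a fixed input shape: one graph for a prompt chunk, one for a single token.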

We use 4-bit block-wise quantization for the embedding layer and the language-model head and run these memory-intensive operations on the CPU. We focus most of the NPU optimization effort on the compute-intensive Transformer blocks that make up context processing and token iteration: their weights use int4 per-channel quantization with selective mixed precision, while activations use int16.
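The two granularities differ only in how many weights share one scale. The following is a minimal sketch, assuming symmetric int4 quantization (integer range -8..7, scale = max|w| / 7) and a hypothetical block size of 4. The source does not state Microsoft's actual block size, rounding or zero-point choices, and the selective mixed-precision treatment is omitted here.

```python
from typing import List, Tuple

Matrix = List[List[float]]

QMIN, QMAX = -8, 7  # signed 4-bit integer range

def quantize_group(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric int4 quantization of one group of values sharing a single scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / QMAX if max_abs > 0 else 1.0
    q = [min(QMAX, max(QMIN, round(v / scale))) for v in values]
    return q, scale

def dequantize_group(q: List[int], scale: float) -> List[float]:
    return [x * scale for x in q]

def per_channel(w: Matrix) -> Matrix:
    """One scale per row (treated here as one output channel)."""
    out = []
    for row in w:
        q, s = quantize_group(row)
        out.append(dequantize_group(q, s))
    return out

def block_wise(w: Matrix, block: int = 4) -> Matrix:
    """One scale per contiguous block of `block` values within each row."""
    out = []
    for row in w:
        deq: List[float] = []
        for i in range(0, len(row), block):
            q, s = quantize_group(row[i:i + block])
            deq.extend(dequantize_group(q, s))
        out.append(deq)
    return out

def mean_abs_error(a: Matrix, b: Matrix) -> float:
    diffs = [abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return sum(diffs) / len(diffs)

# One large weight forces a coarse shared scale in per-channel mode,
# rounding every small weight in that row to zero.
w = [[0.01, -0.02, 0.03, 0.015, 0.9, -0.05, 0.04, 0.02]]
print("per-channel error:", mean_abs_error(w, per_channel(w)))
print("block-wise error: ", mean_abs_error(w, block_wise(w)))
```

Block-wise quantization stores one scale per block rather than one per row, trading a little extra metadata for lower error; in this toy row, only the block that contains the large weight still loses its small values.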

Although DeepSeek's release of Qwen 1.5B already includes an int4 variant, it cannot be mapped directly onto the NPU because of its dynamic input shapes and behaviors, all of which had to be optimized for compatibility and best efficiency. In addition, we use the ONNX QDQ format to support scaling across the various NPUs in the Windows ecosystem. We also optimize which operators run on the CPU and which run on the NPU to strike the best balance between power consumption and speed.
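In the ONNX QDQ format, quantization is expressed by placing QuantizeLinear/DequantizeLinear node pairs around ordinary floating-point operators, which a hardware-specific backend can then fuse into integer kernels. The element-wise arithmetic of the two operators is simple, and the sketch below mirrors it in plain Python (divide by the scale, round half to even, add the zero point, saturate) for a signed 16-bit activation as described above. It illustrates the operator semantics only; it does not build or run an ONNX graph, and the scale value used is an arbitrary assumption.

```python
from typing import List

def quantize_linear(x: List[float], scale: float, zero_point: int = 0,
                    bits: int = 16) -> List[int]:
    """Element-wise semantics of ONNX QuantizeLinear for a signed integer type."""
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    # Python's round() rounds half to even, matching the operator's rounding mode;
    # values outside the integer range saturate to qmin/qmax.
    return [min(qmax, max(qmin, round(v / scale) + zero_point)) for v in x]

def dequantize_linear(q: List[int], scale: float, zero_point: int = 0) -> List[float]:
    """Element-wise semantics of ONNX DequantizeLinear."""
    return [(v - zero_point) * scale for v in q]

def qdq(x: List[float], scale: float, zero_point: int = 0, bits: int = 16) -> List[float]:
    """A Q -> DQ pair: the float values the next operator sees after quantization."""
    return dequantize_linear(quantize_linear(x, scale, zero_point, bits), scale, zero_point)

activations = [0.5, -1.25, 3.0, 1000.0]
scale = 1 / 1024  # hypothetical activation scale
print(quantize_linear(activations, scale))  # [512, -1280, 3072, 32767]
print(qdq(activations, scale))              # [0.5, -1.25, 3.0, 31.9990234375]
```

The last value shows why scale calibration matters: anything beyond the representable range is clipped, which is exactly the kind of outlier that rotation-based schemes such as QuaRot aim to tame.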

To achieve the dual goals of a low memory footprint and fast inference (as in Phi Silica), we made two key changes. First, we adopt a sliding-window design that delivers a very fast time to first token and long-context support even when the hardware stack does not support dynamic tensors. Second, we use the 4-bit QuaRot quantization scheme to take full advantage of low-bit-width processing. QuaRot applies Hadamard rotations to remove outliers from weights and activations, which makes the model easier to quantize. Compared with existing methods such as GPTQ, QuaRot significantly improves quantization accuracy, especially in low-granularity settings such as per-channel quantization. Combining low-bit-width quantization with hardware optimizations such as the sliding-window design lets the model deliver the behavior of a larger model within a compact memory footprint. With these optimizations, the model reaches a time to first token of 130 ms and a throughput of 16 tokens/s for short prompts (<64 tokens).
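The identity behind the rotation is easy to check numerically: for an orthogonal matrix H, (X H)(H^T W) = X W, so rotating activations one way and weights the other leaves the layer output unchanged while spreading an outlier's magnitude across all channels. The following minimal sketch uses a normalized Sylvester-construction Hadamard matrix on a 4-channel toy example. It illustrates the rotation identity only, not the full QuaRot method or Microsoft's implementation, and the activation and weight values are made up.

```python
import math
from typing import List

Matrix = List[List[float]]

def hadamard(n: int) -> Matrix:
    """Normalized Sylvester Hadamard matrix (n a power of two), so H @ H^T = I."""
    assert n > 0 and n & (n - 1) == 0, "n must be a power of two"
    h = [[1.0]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-v for v in row] for row in h]
    norm = 1 / math.sqrt(n)
    return [[v * norm for v in row] for row in h]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]

# One activation row with a single large outlier channel (toy values).
x = [[0.1, -0.2, 8.0, 0.05]]
# Toy weight matrix: 4 input channels -> 2 outputs.
w = [[0.5, -0.1], [0.3, 0.2], [-0.4, 0.6], [0.1, 0.1]]

h = hadamard(4)
x_rot = matmul(x, h)             # rotated activations
w_rot = matmul(transpose(h), w)  # weights rotated the opposite way

y = matmul(x, w)
y_rot = matmul(x_rot, w_rot)

print("max |x|  :", max(abs(v) for v in x[0]))
print("max |xH| :", max(abs(v) for v in x_rot[0]))
print("same output:", all(abs(a - b) < 1e-9 for a, b in zip(y[0], y_rot[0])))
```

With 4 channels the outlier's peak roughly halves; with the much wider hidden dimensions of a real Transformer the spreading is far stronger, which is what makes a shared low-bit scale usable.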

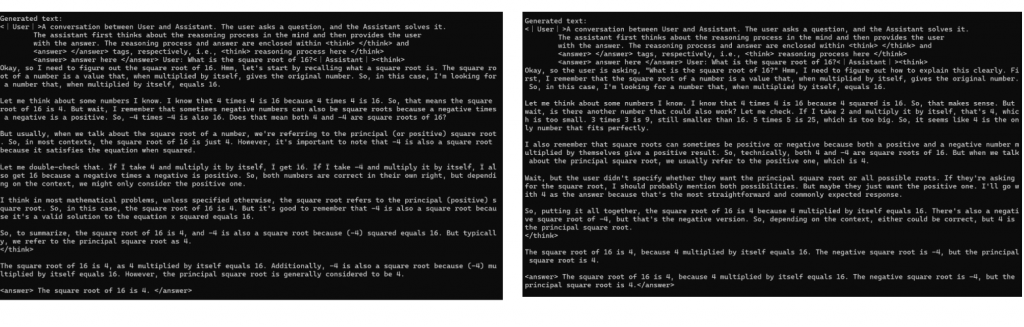

The following example shows the subtle differences between responses from the original model and the quantized model, which improves energy efficiency while maintaining strong performance:

Fig. 1: Qualitative comparison. Responses of the original model (left) and the NPU-optimized model (right) to the same prompt, including a demonstration of the model's reasoning. The optimized model follows the same reasoning pattern as the original and reaches the same conclusion, showing that it preserves the original model's reasoning ability.

With the speed and energy efficiency of the NPU-optimized DeepSeek R1 model, users will be able to interact with these breakthrough models entirely locally.

Related reading 2: "DeepSeek R1 Now Available on Azure AI Foundry and GitHub"

DeepSeek R1 is now available in the model catalog on Azure AI Foundry and GitHub, joining a diverse portfolio of more than 1,800 models spanning frontier, open-source, industry-specific and task-oriented AI models. As part of Azure AI Foundry, DeepSeek R1 is accessible on a trusted, scalable and enterprise-ready platform that lets organizations seamlessly integrate advanced AI while meeting SLAs, security and responsible AI commitments, all backed by Microsoft's reliability and innovation.

Accelerating AI Reasoning for Azure AI Foundry Developers

AI reasoning is becoming accessible at a remarkable pace, changing how developers and organizations apply cutting-edge intelligence. As DeepSeek has shown, R1 offers a powerful, cost-effective model that lets more users harness state-of-the-art AI capabilities with minimal infrastructure investment.

The key benefit of using DeepSeek R1 or other models in Azure AI Foundry is the speed at which developers can experiment, iterate, and integrate AI into their workflows. With built-in model evaluation tools, developers can quickly compare outputs, evaluate performance benchmarks, and scale AI-driven applications. This rapid access, unimaginable just a few months ago, is at the heart of our vision for the Azure AI Foundry: to bring together the best AI models in one place to accelerate innovation and unlock new possibilities for organizations around the world.

Developing with Trusted AI

We are committed to helping customers rapidly build production-ready AI applications while maintaining the highest standards of safety and security. DeepSeek R1 has undergone rigorous red-teaming and safety evaluations, including automated assessments of model behavior and extensive security reviews to mitigate potential risks. Built-in content filtering through Azure AI Content Safety is enabled by default, with flexible options to opt out. In addition, a safety evaluation system helps customers efficiently test their applications before deployment. These safeguards let Azure AI Foundry provide organizations with a secure, compliant and responsible environment for deploying AI solutions.

How to use DeepSeek in the model catalog

- If you don't have an Azure subscription, you can sign up for an Azure account now

- Search for DeepSeek R1 in the Model Catalog

- Open the model card in Azure AI Foundry's model catalog

- Click "Deploy" to obtain the inference API and key and to access the playground

- In under a minute you will be taken to the deployment page showing the API and key, where you can try out prompts in the playground

- The API and key can be used with a variety of clients
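As an example of such a client, the sketch below builds a request and parses a response using only the Python standard library, so it runs without network access. Several details are assumptions to check against your deployment page: the endpoint URL and key are placeholders, and the `/chat/completions` route, the `Authorization: Bearer` header, the OpenAI-style JSON shape, and the convention that R1 wraps its chain of thought in `<think>` tags ahead of the final answer are all assumed rather than taken from the announcement.

```python
import json
import re
import urllib.request
from typing import Tuple

# Placeholders (assumed format): copy the real values from the deployment page.
ENDPOINT = "https://<your-endpoint-from-the-deployment-page>"
API_KEY = "<your-key>"

def build_request(prompt: str, max_tokens: int = 2048) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request.

    The route and the auth header are assumptions about the serverless endpoint.
    """
    body = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=ENDPOINT.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )

THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)

def split_reasoning(content: str) -> Tuple[str, str]:
    """Separate R1's <think> reasoning (assumed format) from the final answer."""
    match = THINK_RE.search(content)
    if not match:
        return "", content.strip()
    reasoning = match.group(1).strip()
    answer = (content[:match.start()] + content[match.end():]).strip()
    return reasoning, answer

def parse_response(raw: bytes) -> Tuple[str, str]:
    """Extract (reasoning, answer) from an assumed OpenAI-style response body."""
    data = json.loads(raw)
    return split_reasoning(data["choices"][0]["message"]["content"])

# Usage against a real deployment (not executed here):
# with urllib.request.urlopen(build_request("Why is the sky blue?")) as resp:
#     reasoning, answer = parse_response(resp.read())
```

Keeping the reasoning separate from the answer is useful because R1's chain of thought can be much longer than its final reply, and most applications only display the latter.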

Get Started Now

DeepSeek R1 is now accessible through a serverless endpoint in the Azure AI Foundry model catalog. Visit Azure AI Foundry today and select the DeepSeek model.

Explore more resources and a step-by-step guide to integrating DeepSeek R1 into your applications on GitHub. Read more in the GitHub Models blog post.

Coming soon: customers will be able to run distilled versions of DeepSeek R1 locally on Copilot+ PCs. Read more in the Windows Developer blog post.

As we continue to expand the Azure AI Foundry model catalog, we look forward to seeing how developers and organizations use DeepSeek R1 to tackle real-world challenges and create transformative experiences. We are committed to providing the most comprehensive portfolio of AI models so that organizations of all sizes have access to cutting-edge tools to drive innovation and success.

© Copyright notice

The copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
