Microsoft Getting Started with AI Agents: Building Trustworthy AI Agents

Introduction

This lesson will cover:

- How to build and deploy safe and effective AI Agents.

- Important security considerations when developing AI Agents.

- How to maintain data and user privacy when developing AI Agents.

Learning Objectives

After completing this lesson, you will know how to:

- Identify and mitigate risks when creating AI Agents.

- Implement security measures to ensure that data and access are properly managed.

- Create AI Agents that maintain data privacy and provide a quality user experience.

Safety

Let's first look at how to build safe agentic applications. Safety means that the AI Agent performs as designed. As builders of agentic applications, we have methods and tools to maximize safety:

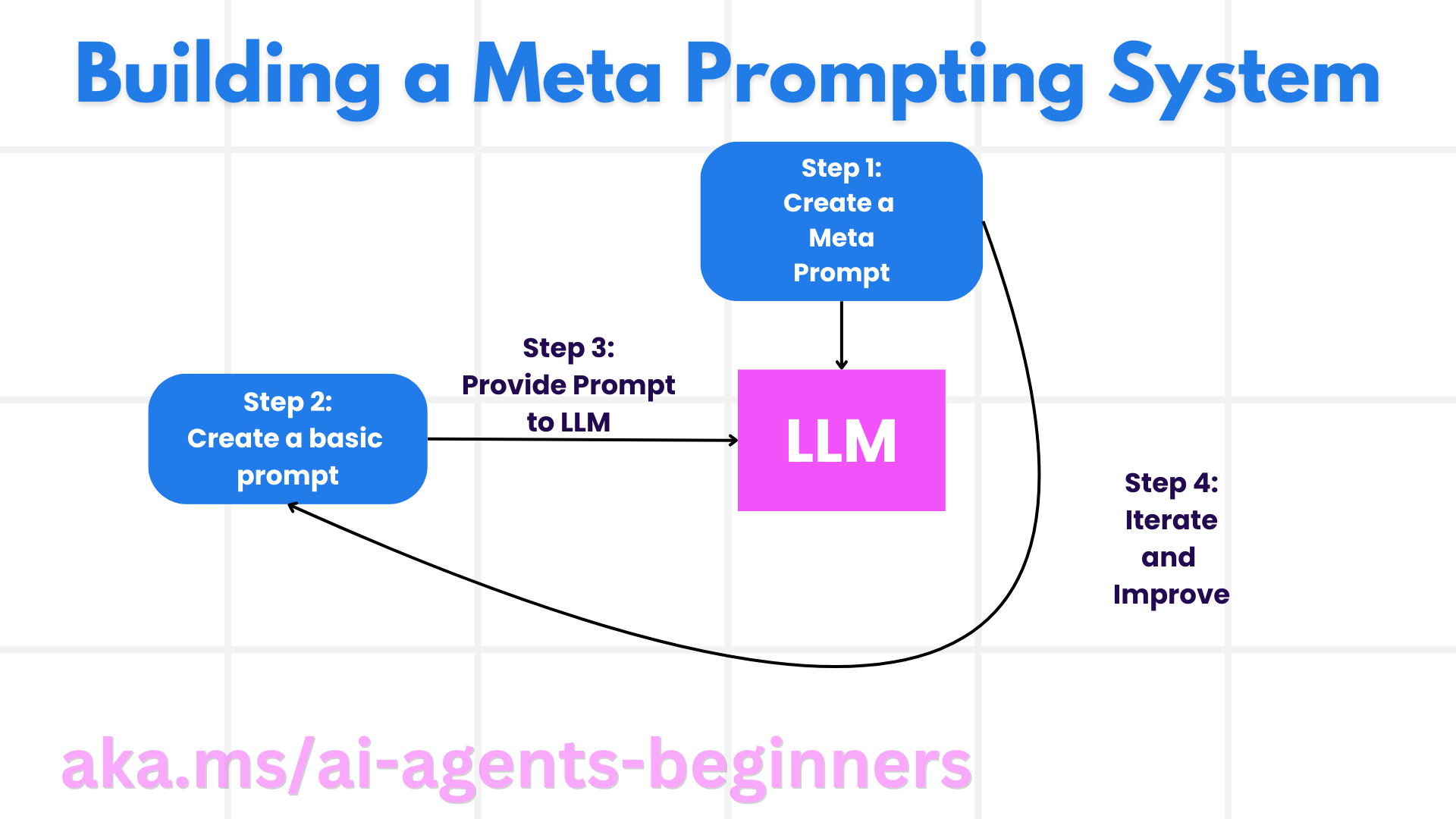

Building a meta-prompting system

If you've ever built an AI application using Large Language Models (LLMs), you know the importance of designing a strong system prompt or system message. These prompts establish the meta-rules, instructions, and guidelines for how the LLM interacts with users and data.

For AI Agents, the system prompt is even more important because AI Agents need highly specific instructions to accomplish the tasks we design for them.

To create scalable system prompts, we can use a meta-prompting system to build one or more Agents in our application:

Step 1: Create a meta-prompt or template prompt

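The meta-prompt will be used by an LLM to generate system prompts for the Agents we create. We design it as a template so that we can efficiently create multiple Agents when needed.

Below is an example of a meta-prompt we provide to the LLM:

You are an expert at creating AI Agent assistants.

You will be provided with a company name, role, responsibilities, and other information, and you will use this information to provide a system prompt.

To create the system prompt, be as descriptive as possible and provide a structure that a system using an LLM can use to better understand the role and responsibilities of the AI assistant.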

Step 2: Create a basic prompt

The next step is to create a basic prompt that describes the AI Agent. You should include the Agent's role, the tasks the Agent will perform, and any other responsibilities of the Agent.

Here is an example:

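You are a travel Agent for Contoso Travel who is great at booking flights for customers. To help customers, you can perform the following tasks: look up available flights, book flights, ask customers for their seating and flight time preferences, cancel any previously booked flights, and alert customers to any flight delays or cancellations.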

Step 3: Provide the basic prompt to the LLM

We can now optimize this prompt by providing the meta-prompt as the system prompt and our basic prompt as the user input.

This will generate a prompt that is better suited to guide our AI Agent:

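**Company Name:** Contoso Travel

**Role:** Travel Agent Assistant

**Objective:**

You are an AI-powered travel Agent assistant for Contoso Travel, specializing in booking flights and providing exceptional customer service. Your main goal is to assist customers in finding, booking, and managing their flights, while ensuring that their preferences and needs are met efficiently.

**Key Responsibilities:**

1. **Flight Lookup:**

* Assist customers in searching for available flights based on their specified destination, dates, and any other relevant preferences.

* Provide a list of options, including flight times, airlines, layovers, and prices.

2. **Flight Booking:**

* Assist customers in booking flights, ensuring that all details are correctly entered into the system.

* Confirm bookings and provide customers with their itinerary, including confirmation numbers and any other relevant information.

3. **Customer Preference Inquiry:**

* Actively ask customers about their preferences for seating (e.g., aisle, window, extra legroom) and preferred flight times (e.g., morning, afternoon, evening).

* Record these preferences for future reference and tailor suggestions accordingly.

4. **Flight Cancellation:**

* If needed, assist customers in canceling previously booked flights in accordance with company policies and procedures.

* Notify customers of any necessary refunds or other steps that may be required for the cancellation.

5. **Flight Monitoring:**

* Monitor the status of booked flights and alert customers in real time about any delays, cancellations, or changes to their flight schedule.

* Provide updates through the customer's preferred communication channels (e.g., email, SMS) as needed.

**Tone and Style:**

* Maintain a friendly, professional, and approachable demeanor in all interactions with customers.

* Ensure that all communication is clear, informative, and tailored to the customer's specific needs and inquiries.

**User Interaction Instructions:**

* Respond to customer queries promptly and accurately.

* Use a conversational style while remaining professional.

* Prioritize customer satisfaction by being attentive, empathetic, and proactive in all assistance provided.

**Additional Notes:**

* Stay up to date on any changes to airline policies, travel restrictions, and other relevant information that could affect flight bookings and the customer experience.

* Use clear and concise language to explain options and processes, avoiding jargon where possible so that customers can understand them better.

This AI assistant is designed to streamline the flight booking process for Contoso Travel customers, ensuring that all their travel needs are met efficiently and effectively.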
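Steps 1 to 3 can be sketched in Python as follows. This is a minimal sketch rather than the course's own implementation: `build_messages`, `generate_system_prompt`, and the `llm` callable are hypothetical names, and the sketch assumes the common chat format in which each message is a dict with `role` and `content` keys.

```python
from typing import Callable

Message = dict[str, str]

# Step 1: the meta-prompt (template prompt), reused for every Agent we create.
META_PROMPT = (
    "You are an expert at creating AI Agent assistants. "
    "You will be provided with a company name, role, responsibilities, and "
    "other information, and you will use this information to provide a "
    "system prompt."
)

# Step 2: the basic prompt that describes one specific Agent.
BASIC_PROMPT = (
    "You are a travel Agent for Contoso Travel who is great at booking "
    "flights for customers."
)


def build_messages(meta_prompt: str, basic_prompt: str) -> list[Message]:
    """Step 3: meta-prompt as the system message, basic prompt as the user message."""
    return [
        {"role": "system", "content": meta_prompt},
        {"role": "user", "content": basic_prompt},
    ]


def generate_system_prompt(
    llm: Callable[[list[Message]], str],
    basic_prompt: str,
    meta_prompt: str = META_PROMPT,
) -> str:
    """Ask the LLM to expand a basic prompt into a full system prompt.

    `llm` is a hypothetical wrapper around whichever chat-completion client
    you use: it takes a list of messages and returns the reply text.
    """
    return llm(build_messages(meta_prompt, basic_prompt))
```

Because the meta-prompt stays fixed, creating another Agent only requires writing a new basic prompt and calling `generate_system_prompt` again.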

Step 4: Iterate and improve

The value of this meta-prompting system is that it makes it easier to scale prompt creation across multiple Agents and to improve your prompts over time. Your prompts will rarely work for your full use case the first time around. Being able to make small adjustments to the basic prompt and run it through the system again lets you compare and evaluate the results.

Understanding threats

In order to build trustworthy AI Agents, it's important to understand and mitigate the risks and threats to AI Agents. Let's take a look at some of the different threats to AI Agents and how to better plan and prepare for them.

Tasks and instructions

Description: An attacker attempts to change the AI Agent's instructions or goals through prompting or by manipulating inputs.

Mitigation Measures: Perform validation checks and apply input filters to detect potentially dangerous prompts before they are processed by the AI Agent. Since these attacks usually require frequent interaction with the Agent, limiting the number of turns in a conversation is another way to prevent them.
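The two mitigations above, input filtering and a turn limit, can be sketched as follows. This is an illustrative sketch only: the pattern list, the `MAX_TURNS` value, and the `Conversation` class are assumptions made for this example, and a short deny-list like this one will not catch every prompt injection attempt.

```python
import re

# Illustrative patterns only; a real filter needs a broader, maintained set
# of checks and usually more than regular expressions.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your )?system prompt", re.IGNORECASE),
]

MAX_TURNS = 10  # assumed limit; tune it for your application


def is_suspicious(user_input: str) -> bool:
    """Return True if the input matches any known-dangerous pattern."""
    return any(p.search(user_input) for p in SUSPICIOUS_PATTERNS)


class Conversation:
    """Tracks turns and decides whether an input may reach the Agent."""

    def __init__(self, max_turns: int = MAX_TURNS) -> None:
        self.max_turns = max_turns
        self.turns = 0

    def accept(self, user_input: str) -> bool:
        # Refuse once the turn budget is spent, whatever the content is.
        if self.turns >= self.max_turns:
            return False
        self.turns += 1
        return not is_suspicious(user_input)
```

Inputs for which `accept` returns `False` would be rejected or routed to review instead of being sent to the Agent.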

Access to critical systems

Description: If the AI Agent has access to systems and services that store sensitive data, an attacker may be able to compromise the communication between the Agent and those services. These may be direct attacks or indirect attempts to obtain information about these systems through the Agent.

Mitigation Measures: To prevent such attacks, the AI Agent should have access to these systems only on an as-needed basis. Communication between the Agent and the system should also be secure. Implementing authentication and access control is another way to protect this information.
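As-needed access can be approximated in application code with a per-Agent allowlist of tools. The sketch below is hypothetical: `ScopedToolbox`, `ToolAccessError`, and the tool names are invented for illustration, and it complements rather than replaces authentication and access control on the systems themselves.

```python
from typing import Callable


class ToolAccessError(PermissionError):
    """Raised when an Agent requests a tool outside its allowlist."""


class ScopedToolbox:
    """Exposes only the tools that a given Agent is allowed to call."""

    def __init__(
        self,
        tools: dict[str, Callable[..., object]],
        allowed: set[str],
    ) -> None:
        self._tools = tools
        self._allowed = frozenset(allowed)

    def call(self, name: str, *args, **kwargs):
        # Deny by default: anything not explicitly allowed is rejected.
        if name not in self._allowed:
            raise ToolAccessError(f"tool '{name}' is not permitted for this agent")
        return self._tools[name](*args, **kwargs)


# Hypothetical tools: the lookup Agent may search flights but not issue refunds.
tools = {
    "lookup_flights": lambda destination: [f"CT100 to {destination}"],
    "issue_refund": lambda booking_id: f"refunded {booking_id}",
}
lookup_agent_tools = ScopedToolbox(tools, allowed={"lookup_flights"})
```

With this setup, `lookup_agent_tools.call("issue_refund", "B1")` raises `ToolAccessError` even if the model is manipulated into requesting it.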

Resource and service overload

Description: The AI Agent can access different tools and services to accomplish tasks. Attackers can utilize this ability to attack these services by sending a large number of requests through the AI Agent, which can lead to system failures or high costs.

Mitigation Measures: Implement policies that limit the number of requests an AI Agent can make to a service. Limiting the number of conversation turns and requests to the AI Agent is another way to prevent such attacks.
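A request limit of this kind can be sketched as a sliding-window rate limiter placed in front of each service the Agent calls. The `RateLimiter` class and its parameters are assumptions made for this example; the `clock` argument exists only so the behavior can be tested without waiting.

```python
import time
from collections import deque
from typing import Callable


class RateLimiter:
    """Allows at most `max_calls` requests in any `window_seconds` window."""

    def __init__(
        self,
        max_calls: int,
        window_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self._clock = clock
        self._calls: deque[float] = deque()

    def allow(self) -> bool:
        now = self._clock()
        # Forget requests that have fallen out of the window.
        while self._calls and now - self._calls[0] >= self.window_seconds:
            self._calls.popleft()
        if len(self._calls) >= self.max_calls:
            return False
        self._calls.append(now)
        return True
```

The Agent's tool wrapper would check `allow()` before each outgoing request and refuse or delay the call when it returns `False`.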

Knowledge base poisoning

Description: This type of attack does not directly target the AI Agent, but rather the knowledge base and other services that the AI Agent will use. This may involve corrupting the data or information that the AI Agent will use to accomplish a task, resulting in a biased or unexpected response to the user.

Mitigation Measures: Regularly validate the data that the AI Agent will use in its workflow. Ensure that access to this data is secure and can only be changed by trusted individuals to avoid such attacks.
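One way to validate data regularly is to record a fingerprint of each document at the time a trusted person reviews it, and then check for drift before the Agent uses the data. The sketch below assumes the knowledge base can be read as a mapping from document id to text; the function names are hypothetical. It detects changes made after review, not bad content that was approved in the first place.

```python
import hashlib


def fingerprint(text: str) -> str:
    """SHA-256 hex digest of a document's content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def build_manifest(documents: dict[str, str]) -> dict[str, str]:
    """Record fingerprints at the time trusted reviewers approve the data."""
    return {doc_id: fingerprint(text) for doc_id, text in documents.items()}


def find_tampered(documents: dict[str, str], manifest: dict[str, str]) -> list[str]:
    """Return ids of documents that are new or no longer match the manifest."""
    return sorted(
        doc_id
        for doc_id, text in documents.items()
        if manifest.get(doc_id) != fingerprint(text)
    )
```

Documents reported by `find_tampered` would be withheld from the Agent until someone with the right permissions reviews them again.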

Cascading errors

Description: The AI Agent accesses a variety of tools and services to accomplish its tasks. Errors caused by an attacker can lead to failures in other systems that the AI Agent is connected to, making the attack more widespread and harder to troubleshoot.

Mitigation Measures: One way to avoid this is to have the AI Agent run in a restricted environment, such as performing tasks in a Docker container, to prevent direct system attacks. Creating fallback mechanisms and retry logic when certain systems respond incorrectly is another way to prevent larger system failures.
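The fallback and retry part of this mitigation can be sketched as a small wrapper around each call to an external system. The function name, the default retry count, and the exponential backoff are assumptions made for this example; running the Agent in a container is a deployment concern and is not shown here.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    operation: Callable[[], T],
    fallback: Callable[[], T],
    retries: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try `operation` up to `retries` times, then return `fallback()`.

    The delay doubles after each failed attempt (exponential backoff), so a
    struggling downstream system is not flooded with immediate retries.
    """
    for attempt in range(retries):
        try:
            return operation()
        except Exception:
            # Wait before the next attempt, but not after the last one.
            if attempt < retries - 1:
                sleep(base_delay * 2 ** attempt)
    return fallback()
```

A typical fallback returns a safe default, such as a message that flight status is temporarily unavailable, so that one failing service does not break the whole workflow.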

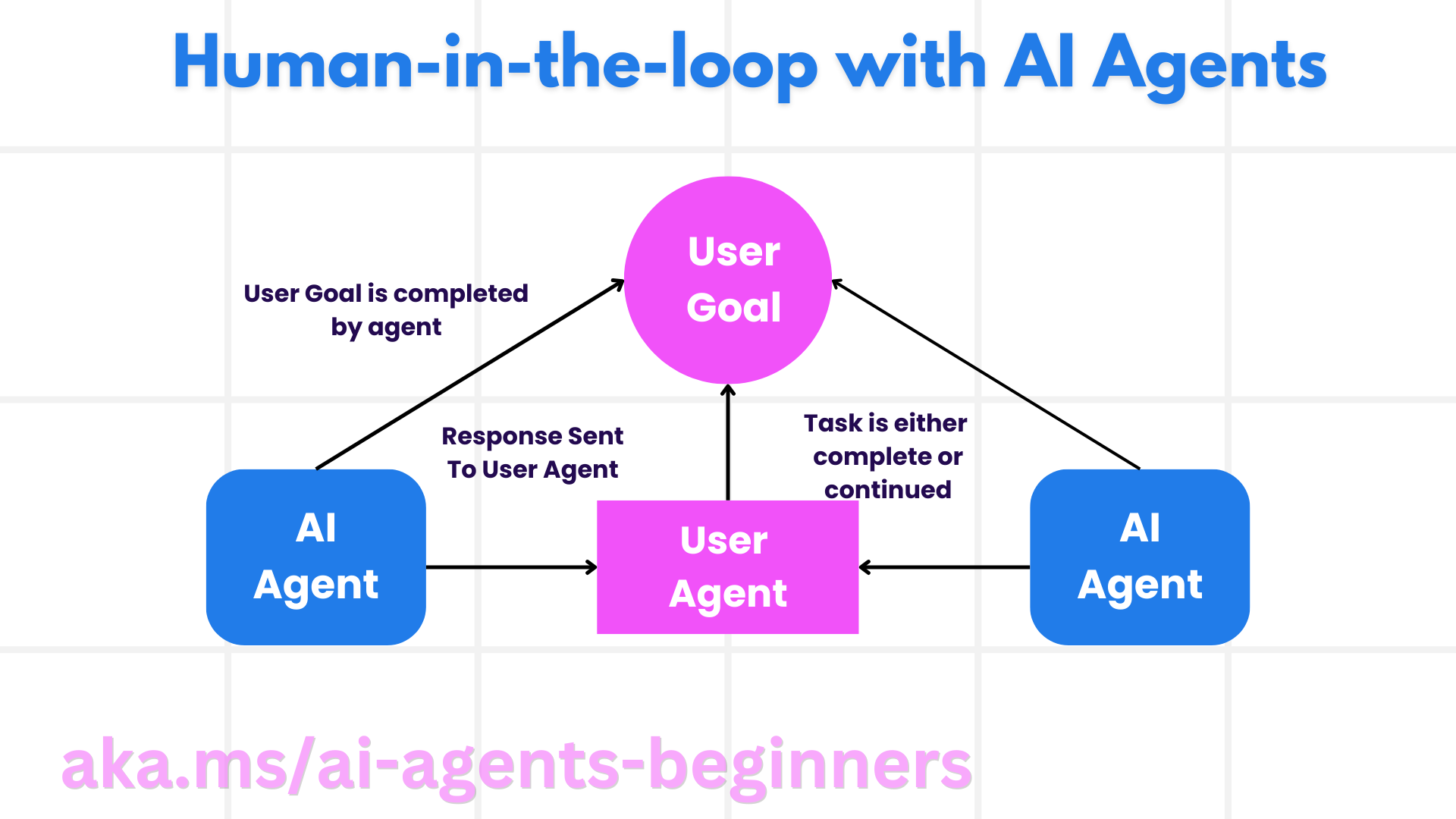

Human-in-the-loop

Another effective way to build a trustworthy AI Agent system is to keep a human in the loop. This creates a process in which the user can provide feedback to the Agent at runtime. The user essentially acts as an Agent in a multi-agent system by approving or terminating the running process.

Here is a code snippet that uses AutoGen to show how to implement this concept:

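from autogen_agentchat.agents import AssistantAgent, UserProxyAgent

from autogen_agentchat.conditions import TextMentionTermination

from autogen_agentchat.teams import RoundRobinGroupChat

from autogen_agentchat.ui import Console

from autogen_ext.models.openai import OpenAIChatCompletionClient

# Create the agents.

model_client = OpenAIChatCompletionClient(model="gpt-4o-mini")

assistant = AssistantAgent("assistant", model_client=model_client)

user_proxy = UserProxyAgent("user_proxy", input_func=input) # Use input() to get user input from the console.

# Create the termination condition, which ends the conversation when the user says "APPROVE".

termination = TextMentionTermination("APPROVE")

# Create the team.

team = RoundRobinGroupChat([assistant, user_proxy], termination_condition=termination)

# Run the conversation and stream it to the console.

stream = team.run_stream(task="Write a 4-line poem about the ocean.")

# Use asyncio.run(...) when running in a script.

await Console(stream)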
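The same idea can be applied to a single sensitive action outside a chat loop. The sketch below is independent of AutoGen and purely illustrative: `run_with_approval` is a hypothetical helper that asks the user to type APPROVE before an action, such as cancelling a booking, is carried out.

```python
from typing import Callable


def run_with_approval(
    description: str,
    action: Callable[[], str],
    ask: Callable[[str], str] = input,
) -> str:
    """Run `action` only if the user explicitly approves it.

    `ask` defaults to the built-in input() and can be swapped for another
    channel, for example a web form or a chat message.
    """
    answer = ask(f"Agent wants to: {description}. Type APPROVE to continue: ")
    if answer.strip().upper() != "APPROVE":
        return "Action cancelled by user."
    return action()
```

Any answer other than APPROVE, including an empty one, cancels the action, so the default outcome is the safe one.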

Conclusion

Building a trustworthy AI Agent requires careful design, strong security measures, and continuous iteration. By implementing a structured meta-prompting system, understanding potential threats, and applying mitigation strategies, developers can create AI Agents that are both secure and effective. Additionally, a human-in-the-loop approach ensures that AI Agents stay aligned with user needs while minimizing risk. As AI continues to evolve, taking a proactive stance on security, privacy, and ethical considerations will be key to fostering trust and reliability in AI-driven systems.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
