Microsoft AI Agents Introductory Course: Exploring AI Agent Frameworks

AI Agent frameworks are software platforms designed to simplify the creation, deployment, and management of AI Agents. These frameworks provide developers with pre-built components, abstractions, and tools that streamline the development of complex AI systems.

These frameworks help developers focus on the unique aspects of their applications by providing a standardized approach to common challenges in AI Agent development. They enhance the scalability, accessibility, and efficiency of building AI systems.

Introduction

This course will cover:

- What are AI Agent frameworks and what do they enable developers to do?

- How can teams use these frameworks to rapidly prototype, iterate, and improve their Agents' capabilities?

- What are the differences between the frameworks and tools created by Microsoft: AutoGen, Semantic Kernel, and Azure AI Agent Service?

- Is it possible to integrate directly with existing Azure ecosystem tools or do I need a standalone solution?

- What is Azure AI Agent Service and how can it help?

Learning Objectives

The goal of this course is to help you understand:

- The role of AI Agent frameworks in AI development.

- How to use AI Agent frameworks to build intelligent Agents.

- Key capabilities enabled by AI Agent frameworks.

- The differences between AutoGen, Semantic Kernel, and Azure AI Agent Service.

What are AI Agent frameworks and what do they enable developers to do?

Traditional AI frameworks can help you integrate AI into your applications and improve those applications in the following ways:

- Personalization: AI can analyze user behavior and preferences to deliver personalized recommendations, content, and experiences. For example, streaming services like Netflix use AI to recommend movies and shows based on viewing history to increase user engagement and satisfaction.

- Automation and efficiency: AI can automate repetitive tasks, streamline workflows, and improve operational efficiency. For example, customer service applications use AI-powered chatbots to handle common queries, reducing response times and allowing human agents to handle more complex issues.

- Enhanced User Experience: AI can improve the overall user experience by providing intelligent features such as speech recognition, natural language processing, and predictive text. For example, virtual assistants like Siri and Google Assistant use AI to understand and respond to voice commands, making it easier for users to interact with their devices.

This all sounds great, right? So why do we need an AI Agent framework?

AI Agent frameworks go beyond ordinary AI frameworks. They are designed to create intelligent agents that can interact with users, other agents, and the environment to achieve specific goals. These agents can exhibit autonomous behavior, make decisions, and adapt to changing conditions. Let's look at some of the key capabilities that AI Agent frameworks enable:

- Agent collaboration and coordination: Supports the creation of multiple AI Agents that can work together, communicate, and coordinate to solve complex tasks.

- Task automation and management: Provides mechanisms for automating multi-step workflows, task delegation, and dynamic task management between Agents.

- Contextual understanding and adaptation: Enables Agents to understand context, adapt to changing environments, and make decisions based on real-time information.

In short, Agents allow you to do more, take automation to the next level, and create smarter systems that can adapt and learn from their environment.

How can you rapidly prototype, iterate, and improve an Agent's capabilities?

It's a rapidly evolving field, but most AI Agent frameworks have a few things in common that can help you prototype and iterate quickly, namely modular components, collaboration tools, and real-time learning. Let's dive into these:

- Using modular components: AI frameworks provide pre-built components such as prompts, parsers, and memory management.

- Using collaboration tools: Design Agents with specific roles and tasks so you can test and improve collaborative workflows.

- Real-time learning: Implement feedback loops in which Agents learn from interactions and dynamically adapt their behavior.

Using Modular Components

Frameworks such as LangChain and Microsoft Semantic Kernel provide pre-built components such as prompts, parsers, and memory management.

How teams use these: Teams can quickly assemble these components into a working prototype without starting from scratch, which enables rapid experimentation and iteration.

How it works in practice: You can use pre-built parsers to extract information from user input, memory modules to store and retrieve data, and prompt generators to interact with users, all without having to build these components yourself.

Example code: Let's look at an example of how a pre-built parser can extract information from user input:

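// Semantic Kernel example
using System.ComponentModel;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using Microsoft.SemanticKernel.Connectors.OpenAI;

ChatHistory chatHistory = [];
chatHistory.AddUserMessage("I'd like to go to New York");

IKernelBuilder kernelBuilder = Kernel.CreateBuilder();
kernelBuilder.AddAzureOpenAIChatCompletion(
    deploymentName: "NAME_OF_YOUR_DEPLOYMENT",
    apiKey: "YOUR_API_KEY",
    endpoint: "YOUR_AZURE_ENDPOINT"
);
// The plugin's constructor dependencies must also be registered in kernelBuilder.Services (omitted here).
kernelBuilder.Plugins.AddFromType<BookTravelPlugin>("BookTravel");
Kernel kernel = kernelBuilder.Build();

IChatCompletionService chatCompletion = kernel.GetRequiredService<IChatCompletionService>();

// Let the model decide when to call the kernel's functions.
OpenAIPromptExecutionSettings openAIPromptExecutionSettings = new()
{
    FunctionChoiceBehavior = FunctionChoiceBehavior.Auto()
};

/*
Behind the scenes, it identifies the tool to call, the parameter it already has (location),
and the parameter it still needs (date):
{
  "tool_calls": [
    {
      "id": "call_abc123",
      "type": "function",
      "function": {
        "name": "BookTravel-book_flight",
        "arguments": "{\n\"location\": \"New York\",\n\"date\": \"\"\n}"
      }
    }
  ]
}
*/

ChatMessageContent response = await chatCompletion.GetChatMessageContentAsync(
    chatHistory,
    executionSettings: openAIPromptExecutionSettings,
    kernel: kernel);

Console.WriteLine(response);
chatHistory.Add(response);

// AI response: "Before I can book your flight, I need to know your departure date. When are you planning to travel?"
// In other words, the code above works out which tool to call, which parameter it already has (location),
// and which parameter it still needs from the user input (date); it then asks the user for the missing information.

// Define a plugin that contains a travel-booking function.
// IBookingService, IUserContext, IPaymentService, Booking, and BookAsync are hypothetical application types.
public class BookTravelPlugin(
    IBookingService bookingService,
    IUserContext userContext,
    IPaymentService paymentService)
{
    [KernelFunction("book_flight")]
    [Description("Book travel given location and date")]
    public async Task<Booking> BookFlight(
        DateTime date,
        string location)
    {
        // Book the trip for the given date and location.
        return await bookingService.BookAsync(date, location);
    }
}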

This example shows how a pre-built parser (here, Semantic Kernel's function calling) can extract key information from user input, such as the destination and date of a flight booking request, and notice when a required value is missing. This modular approach lets you focus on the high-level logic.
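To make the "parameters it has vs. parameters it still needs" step concrete, here is a minimal, framework-independent Python sketch. It inspects a tool call shaped like the JSON in the comment above and lists the required arguments that are still empty. The REQUIRED_ARGS table and the missing_arguments helper are hypothetical names chosen for illustration; this is not how Semantic Kernel implements function calling internally.

```python
import json

# Hypothetical schema: the parameters each tool needs before it can run.
REQUIRED_ARGS = {"book_flight": ["location", "date"]}


def missing_arguments(tool_call: dict) -> list[str]:
    """Return the required arguments that the model left empty or omitted."""
    function = tool_call["function"]
    # Function names may look like "<plugin>-<function>"; keep only the function part.
    name = function["name"].split("-")[-1]
    # The model returns its arguments as a JSON-encoded string.
    arguments = json.loads(function["arguments"])
    return [arg for arg in REQUIRED_ARGS[name] if not arguments.get(arg)]


tool_call = {
    "id": "call_abc123",
    "type": "function",
    "function": {
        "name": "BookTravel-book_flight",
        "arguments": '{"location": "New York", "date": ""}',
    },
}

missing = missing_arguments(tool_call)
if missing:
    # Instead of calling the tool, ask the user for what is still needed.
    print(f"Before I can book your flight, I need: {', '.join(missing)}")
```

In a real application the model and the framework perform this check; the sketch only shows why the reply is a question rather than an immediate call to book_flight.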

Using Collaboration Tools

Frameworks such as CrewAI and Microsoft AutoGen make it easy to create multiple Agents that can work together.

How teams use these: Teams can design Agents with specific roles and tasks, which lets them test and improve collaborative workflows and increase overall system efficiency.

How it works in practice: You can create a team of Agents, each of which has a specialized function, such as data retrieval, analysis, or decision making. These Agents can communicate and share information to achieve common goals, such as answering user queries or completing tasks.

Example code (AutoGen):

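# Create the Agents, then a round-robin schedule so they can work together (in this case, in order):
# a data retrieval Agent, a data analysis Agent, and a user proxy who makes the final decision.
from autogen_agentchat.agents import AssistantAgent, UserProxyAgent
from autogen_agentchat.conditions import TextMentionTermination
from autogen_agentchat.teams import RoundRobinGroupChat
from autogen_agentchat.ui import Console
from autogen_ext.models.openai import OpenAIChatCompletionClient

model_client = OpenAIChatCompletionClient(model="gpt-4o")

# Hypothetical tools; replace them with your own data access and analysis code.
async def retrieve_tool(query: str) -> str:
    """Retrieve the data needed for the task."""
    return "..."

async def analyze_tool(data: str) -> str:
    """Analyze the retrieved data."""
    return "..."

# Data retrieval Agent
agent_retrieve = AssistantAgent(
    name="dataretrieval",
    model_client=model_client,
    tools=[retrieve_tool],
    system_message="Use tools to solve tasks.",
)

# Data analysis Agent
agent_analyze = AssistantAgent(
    name="dataanalysis",
    model_client=model_client,
    tools=[analyze_tool],
    system_message="Use tools to solve tasks.",
)

# The conversation ends when the user says "APPROVE"
termination = TextMentionTermination("APPROVE")
user_proxy = UserProxyAgent("user_proxy", input_func=input)

team = RoundRobinGroupChat([agent_retrieve, agent_analyze, user_proxy], termination_condition=termination, max_turns=10)
stream = team.run_stream(task="Analyze data")

# In a notebook you can await directly; in a script, use asyncio.run(...).
await Console(stream)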

The preceding code shows how to create a task in which multiple Agents work together to analyze data. Each Agent performs a specific function, and the team coordinates the Agents so that together they achieve the desired result. By creating dedicated Agents with specialized roles, you can improve task efficiency and performance.
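The coordination logic behind a round-robin team can be sketched without any framework: participants take turns in a fixed order, each sees the shared history, and the loop stops when a reply mentions a termination keyword or a turn limit is reached. The agent functions below are hypothetical stand-ins for LLM-backed Agents, and round_robin is an illustrative helper, not AutoGen's implementation of RoundRobinGroupChat.

```python
from typing import Callable

# A participant reads the shared conversation history and returns its reply.
Agent = Callable[[list[str]], str]


def retrieval_agent(history: list[str]) -> str:
    return "retrieved: raw data"


def analysis_agent(history: list[str]) -> str:
    return "analysis: summary of the data"


def approver(history: list[str]) -> str:
    # Stand-in for a human reviewer who approves once an analysis exists.
    return "APPROVE" if any(m.startswith("analysis:") for m in history) else "need more data"


def round_robin(task: str, agents: list[Agent], stop_word: str, max_turns: int) -> list[str]:
    history = [task]
    for turn in range(max_turns):
        # Participants speak in a fixed, repeating order.
        reply = agents[turn % len(agents)](history)
        history.append(reply)
        # Text-mention termination: stop as soon as the keyword appears.
        if stop_word in reply:
            break
    return history


transcript = round_robin("Analyze data", [retrieval_agent, analysis_agent, approver], "APPROVE", 10)
print(transcript)
```

The turn limit plays the same role as max_turns: it guarantees the loop ends even if no participant ever says the stop word.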

Real-time learning

Advanced frameworks provide real-time contextual understanding and adaptation.

How teams use these frameworks: Teams can implement feedback loops that allow Agents to learn from interactions and dynamically adjust their behavior, enabling continuous improvement and refinement of capabilities.

How it works in practice: Agents analyze user feedback, environmental data, and task results to update their knowledge base, adjust decision-making algorithms, and improve performance over time. This iterative learning process lets Agents adapt to changing conditions and user preferences, improving overall system efficiency.
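A feedback loop like the one described above can be reduced to its core: record a score for each outcome and let the scores steer future choices. The sketch below uses a hypothetical FeedbackAgent class and made-up strategy names; it keeps a running average rating per response style and picks the best-rated one. A real framework would more likely adjust prompts, memory, or tool selection than a simple table.

```python
from collections import defaultdict


class FeedbackAgent:
    """Chooses a response style and adapts that choice based on user ratings."""

    def __init__(self, strategies: list[str]) -> None:
        self.strategies = strategies
        self.totals: dict[str, float] = defaultdict(float)
        self.counts: dict[str, int] = defaultdict(int)

    def average(self, strategy: str) -> float:
        # Untried strategies get a neutral prior of 0.5 so they still get a chance.
        if self.counts[strategy] == 0:
            return 0.5
        return self.totals[strategy] / self.counts[strategy]

    def choose(self) -> str:
        # Pick the strategy with the best average rating so far (ties go to the first).
        return max(self.strategies, key=self.average)

    def record_feedback(self, strategy: str, rating: float) -> None:
        # Ratings are assumed to be in [0, 1]; each one updates the running average.
        self.totals[strategy] += rating
        self.counts[strategy] += 1


agent = FeedbackAgent(["concise", "detailed"])
agent.record_feedback("concise", 0.2)
agent.record_feedback("detailed", 0.9)
print(agent.choose())  # the agent now prefers the style users rated higher
```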

What are the differences between the AutoGen, Semantic Kernel, and Azure AI Agent Service frameworks?

There are many ways to compare these frameworks, but let's look at some key differences in terms of their design, functionality, and target use cases:

AutoGen

AutoGen is an open-source framework developed by Microsoft Research's AI Frontiers Lab. It focuses on event-driven, distributed agentic applications and supports multiple large language models (LLMs) and small language models (SLMs), tools, and advanced Multi-Agent design patterns.

The core concept of AutoGen is Agents, which are autonomous entities that can sense their environment, make decisions, and take actions to achieve specific goals. Agents communicate through asynchronous messaging, allowing them to work independently and in parallel, thus increasing the scalability and responsiveness of the system.

Agents are based on the actor model. According to Wikipedia, an actor is the basic building block of concurrent computation. In response to a message it receives, an actor can make local decisions, create more actors, send more messages, and determine how to respond to the next message it receives.

Use cases: Automating code generation and data analysis tasks, and building custom Agents for planning and research functions.

Here are some important core concepts of AutoGen:

- Agents: An Agent is a software entity that:

  - Communicates via messages, which can be synchronous or asynchronous.

  - Maintains its own state, which can be modified by incoming messages.

  - Performs actions in response to received messages or changes in its state. These actions may modify the Agent's state and have external effects, such as updating a message log, sending new messages, executing code, or making API calls.
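The three properties listed above (message-based communication, private state, and actions triggered by messages) are the essence of the actor model. The following asyncio sketch shows them without AutoGen: each hypothetical CounterAgent owns a mailbox queue and a private counter, processes one message at a time, and can forward messages to a peer. The class and method names are illustrative, not AutoGen APIs.

```python
import asyncio
from typing import Optional


class CounterAgent:
    """A tiny actor: a mailbox, private state, and a handler for each message."""

    def __init__(self, name: str, peer: Optional["CounterAgent"] = None) -> None:
        self.name = name
        self.peer = peer
        self.mailbox: asyncio.Queue = asyncio.Queue()
        self.count = 0  # private state, changed only by this agent's own handler
        self.log: list[str] = []

    async def send(self, message: str) -> None:
        # Sending is asynchronous: the message is queued, not handled immediately.
        await self.mailbox.put(message)

    async def run(self) -> None:
        while True:
            message = await self.mailbox.get()
            if message == "stop":
                break
            # Action in response to a message: update state, record it, forward it.
            self.count += 1
            self.log.append(f"{self.name} got {message!r} (count={self.count})")
            if self.peer is not None:
                await self.peer.send(f"forwarded {message}")


async def main() -> tuple[CounterAgent, CounterAgent]:
    receiver = CounterAgent("receiver")
    sender = CounterAgent("sender", peer=receiver)
    tasks = [asyncio.create_task(agent.run()) for agent in (sender, receiver)]
    for message in ("hello", "world", "stop"):
        await sender.send(message)
    await tasks[0]  # the sender finishes after "stop", having forwarded both messages
    await receiver.send("stop")  # queued behind the forwarded messages
    await tasks[1]
    return sender, receiver


sender, receiver = asyncio.run(main())
print(receiver.log)
```

Because each agent handles its mailbox sequentially, no locks are needed around count: only the owning agent ever touches it.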

Below is a short code snippet in which you can create an Agent with chat functionality:

from dataclasses import dataclass

from autogen_agentchat.agents import AssistantAgent
from autogen_agentchat.messages import TextMessage
from autogen_core import MessageContext, RoutedAgent, message_handler
from autogen_ext.models.openai import OpenAIChatCompletionClient


@dataclass
class MyMessageType:
    content: str


class MyAssistant(RoutedAgent):
    def __init__(self, name: str) -> None:
        super().__init__(name)
        model_client = OpenAIChatCompletionClient(model="gpt-4o")
        self._delegate = AssistantAgent(name, model_client=model_client)

    @message_handler
    async def handle_my_message_type(self, message: MyMessageType, ctx: MessageContext) -> None:
        print(f"{self.id.type} received message: {message.content}")
        response = await self._delegate.on_messages(
            [TextMessage(content=message.content, source="user")], ctx.cancellation_token
        )
        print(f"{self.id.type} responded: {response.chat_message.content}")

In the preceding code, MyAssistant is created as a subclass of RoutedAgent. It has a message handler that prints the content of the message and then delegates generating the response to an AssistantAgent. Pay particular attention to how self._delegate is assigned an instance of AssistantAgent, a pre-built Agent that can handle chat completions.

Next, let AutoGen know about this Agent type and start the program:# main.py runtime = SingleThreadedAgentRuntime() await MyAgent.register(runtime, "my_agent", lambda: MyAgent()) runtime.start() # Start processing messages in the background. await runtime.send_message(MyMessageType("Hello, World!"), AgentId("my_agent", "default"))In the preceding code, Agents are registered with the runtime, and then messages are sent to the Agent to produce the following output:

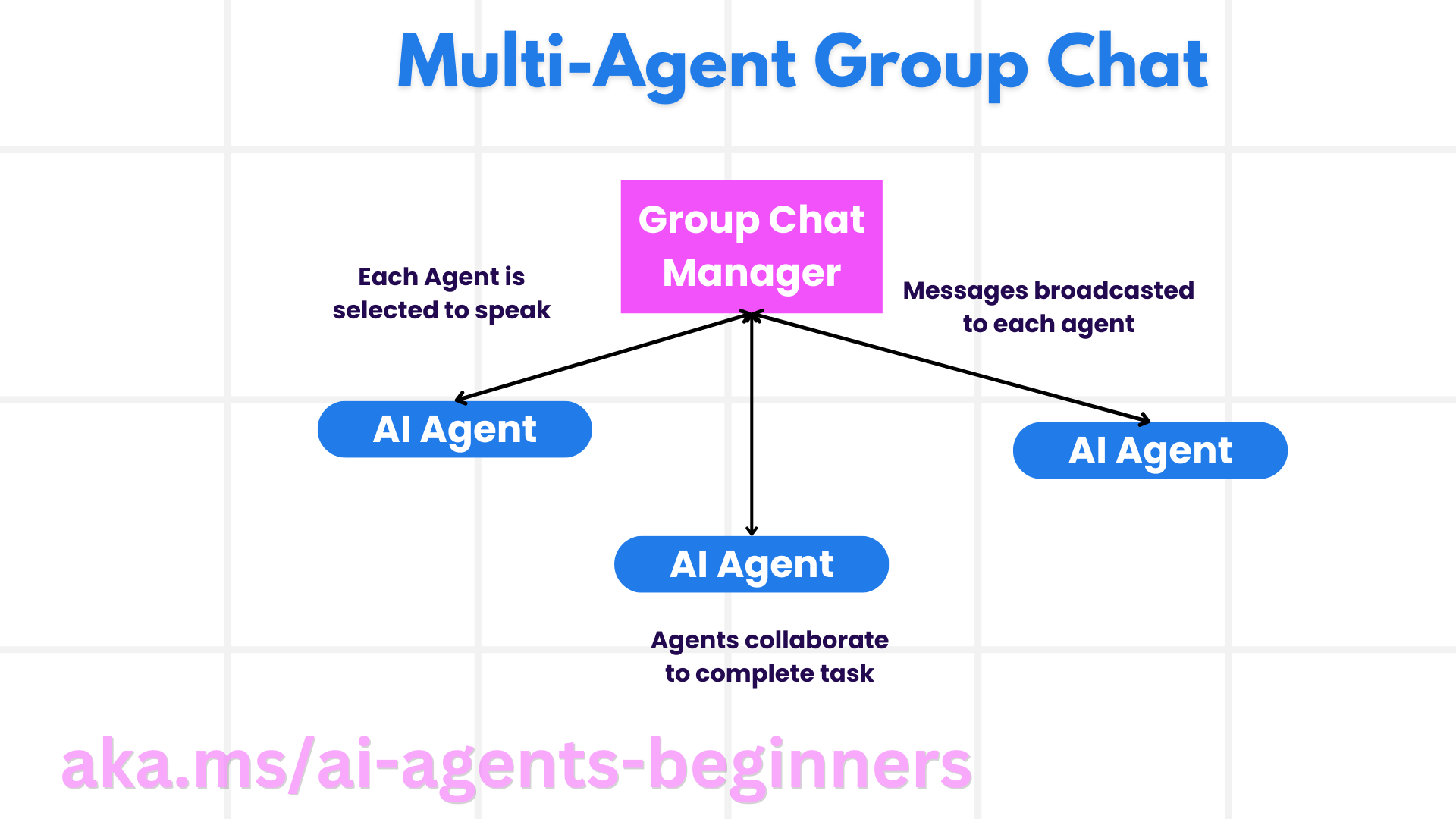

# Output from the console: my_agent received message: Hello, World! my_assistant received message: Hello, World! my_assistant responded: Hello! How can I assist you today? - Multi AgentsAutoGen supports the creation of multiple Agents that can work together to accomplish complex tasks. Agents can communicate, share information, and coordinate their actions to solve problems more effectively. To create a Multi-Agent system, you define different types of Agents with specialized functions and roles, such as data retrieval, analysis, decision making, and user interaction. Let's see what such a creation looks like so we can understand it:

# Example of declaring an Agent.
# EditorAgent, GroupChatManager, runtime, and the *_topic_type and other *_description
# values are defined elsewhere in the full example (not shown).
from autogen_ext.models.openai import OpenAIChatCompletionClient

editor_description = "Editor for planning and reviewing the content."

editor_agent_type = await EditorAgent.register(
    runtime,
    editor_topic_type,  # Using topic type as the agent type.
    lambda: EditorAgent(
        description=editor_description,
        group_chat_topic_type=group_chat_topic_type,
        model_client=OpenAIChatCompletionClient(
            model="gpt-4o-2024-08-06",
            # api_key="YOUR_API_KEY",
        ),
    ),
)

# remaining declarations shortened for brevity

# Group chat
group_chat_manager_type = await GroupChatManager.register(
    runtime,
    "group_chat_manager",
    lambda: GroupChatManager(
        participant_topic_types=[writer_topic_type, illustrator_topic_type, editor_topic_type, user_topic_type],
        model_client=OpenAIChatCompletionClient(
            model="gpt-4o-2024-08-06",
            # api_key="YOUR_API_KEY",
        ),
        participant_descriptions=[
            writer_description,
            illustrator_description,
            editor_description,
            user_description,
        ],
    ),
)

In the preceding code, a GroupChatManager is registered with the runtime. This manager is responsible for coordinating interactions between the different types of Agents, such as writers, illustrators, editors, and users.
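The manager's job can be sketched as a small publish/subscribe loop: every message is published to a shared group-chat topic that all participants see, and the manager then picks the next speaker and sends a request to that participant's own topic. In AutoGen's example the selection is made by an LLM using the participant descriptions; the sketch below substitutes a hypothetical keyword-based selector, and TopicBus, select_next_speaker, and the topic names are illustrative only.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[str], None]


class TopicBus:
    """Minimal publish/subscribe bus keyed by topic type."""

    def __init__(self) -> None:
        self.subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self.subscribers[topic].append(handler)

    def publish(self, topic: str, message: str) -> None:
        for handler in self.subscribers[topic]:
            handler(message)


def select_next_speaker(message: str, descriptions: dict[str, str]) -> str:
    # Hypothetical stand-in for LLM-based selection: route by keywords in each description.
    for topic, keywords in descriptions.items():
        if any(word in message.lower() for word in keywords.split()):
            return topic
    return "user"


bus = TopicBus()
seen: dict[str, list[str]] = defaultdict(list)
descriptions = {"writer": "draft write", "illustrator": "image picture", "editor": "review edit"}

# Every participant sees the shared group chat topic; each also has its own topic.
for participant in [*descriptions, "user"]:
    bus.subscribe("group_chat", lambda msg, p=participant: seen[p].append(msg))
    bus.subscribe(participant, lambda msg, p=participant: seen[p].append(f"[your turn] {msg}"))

message = "Please draft a short story about a dragon"
bus.publish("group_chat", message)  # broadcast to all participants
next_speaker = select_next_speaker(message, descriptions)
bus.publish(next_speaker, message)  # ask only the selected participant to respond
print(next_speaker)
```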

- Agent Runtime: The framework provides a runtime environment that supports communication between Agents, manages their identity and lifecycle, and enforces security and privacy boundaries. This means you can run your Agents in a secure, controlled environment where they can interact safely and efficiently. There are two runtimes of interest:

  - Stand-alone runtime: This is a good choice for single-process applications where all Agents are implemented in the same programming language and run in the same process. Here is a diagram of how it works:

  [Diagram: Stand-alone runtime, application stack]

  Agents communicate via messages through the runtime, which manages the lifecycle of the Agents.

  - Distributed agent runtime: This is suitable for multi-process applications where Agents can be implemented in different programming languages and run on different machines. Here is a diagram of how it works:

  [Diagram: Distributed runtime]

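To make the stand-alone runtime concrete, the registration-and-messaging pattern from the main.py example (register an Agent type with a factory, then send a message to an AgentId made of a type and a key) can be mimicked in a few lines. The hypothetical MiniRuntime below creates each agent instance lazily the first time a message is addressed to it and reuses it afterwards, which is one way a single-process runtime can manage agent identity and lifecycle. It is not AutoGen's SingleThreadedAgentRuntime.

```python
import asyncio
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AgentKey:
    type: str  # which registered agent type handles the message
    key: str   # which instance of that type, e.g. "default"


class EchoAgent:
    def __init__(self) -> None:
        self.received: list[str] = []

    async def on_message(self, message: str) -> str:
        self.received.append(message)
        return f"echo: {message}"


class MiniRuntime:
    """Single-process runtime: maps agent types to factories and identities to instances."""

    def __init__(self) -> None:
        self.factories: dict[str, Callable[[], EchoAgent]] = {}
        self.instances: dict[AgentKey, EchoAgent] = {}

    def register(self, agent_type: str, factory: Callable[[], EchoAgent]) -> None:
        self.factories[agent_type] = factory

    async def send_message(self, message: str, recipient: AgentKey) -> str:
        # Lifecycle management: create the instance on first use, then reuse it.
        if recipient not in self.instances:
            self.instances[recipient] = self.factories[recipient.type]()
        return await self.instances[recipient].on_message(message)


async def main() -> MiniRuntime:
    runtime = MiniRuntime()
    runtime.register("echo", EchoAgent)
    print(await runtime.send_message("Hello, World!", AgentKey("echo", "default")))
    await runtime.send_message("Again", AgentKey("echo", "default"))
    await runtime.send_message("Hi", AgentKey("echo", "other"))
    return runtime


runtime = asyncio.run(main())
```

A distributed runtime keeps the same addressing idea but delivers messages over the network to agents hosted in other processes.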

Semantic Kernel + Agent Framework

Semantic Kernel consists of two parts: the Semantic Kernel Agent Framework and Semantic Kernel itself.

Let's start with Semantic Kernel itself. It has the following core concepts:

- Connections: This is the interface to external AI services and data sources.

using Microsoft.SemanticKernel;

// Create the kernel
var builder = Kernel.CreateBuilder();

// Add a chat completion service
builder.Services.AddAzureOpenAIChatCompletion(
    deploymentName: "your-deployment-name",
    endpoint: "your-endpoint",
    apiKey: "your-resource-key");

var kernel = builder.Build();

Here is a simple example of how to create a kernel and add a chat completion service. Semantic Kernel creates a connection to an external AI service, in this case Azure OpenAI Chat Completion.

- Plugins: Plugins encapsulate functions that an application can use. There are ready-made plugins, and you can also create your own. A related concept is the semantic function. It is called semantic because you provide it with semantic information that helps Semantic Kernel determine when the function needs to be called. Here is an example:

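var userInput = Console.ReadLine();

// Define the semantic function inline.
string skPrompt = @"Summarize the provided unstructured text in a sentence that is easy to understand.
Text to summarize: {{$userInput}}";

// Create the function from the prompt and register it in the "SemanticFunctions" plugin.
KernelFunction summarizeText = kernel.CreateFunctionFromPrompt(skPrompt, functionName: "SummarizeText");
kernel.ImportPluginFromFunctions("SemanticFunctions", new[] { summarizeText });

// Invoke the function; the user's text fills the {{$userInput}} placeholder.
var result = await kernel.InvokeAsync(summarizeText, new KernelArguments { ["userInput"] = userInput });
Console.WriteLine(result);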

Here you start with a prompt template, skPrompt, that leaves a placeholder, $userInput, for the text the user enters. You then register the function SummarizeText in the plugin SemanticFunctions. Note the name of the function, which helps Semantic Kernel understand what the function does and when it should be called.
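The placeholder mechanism in skPrompt can be illustrated with a few lines of Python: before the prompt is sent to the model, each {{$name}} variable is replaced with the value supplied for it. The render_prompt function is a hypothetical, simplified stand-in; Semantic Kernel's own template engine also supports calling functions inside templates and other syntax that this sketch ignores.

```python
import re

SK_PROMPT = """Summarize the provided unstructured text in a sentence that is easy to understand.
Text to summarize: {{$userInput}}"""


def render_prompt(template: str, variables: dict[str, str]) -> str:
    """Replace each {{$name}} placeholder with variables[name]."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"No value supplied for template variable ${name}")
        return variables[name]

    # Allow optional spaces inside the braces, e.g. {{ $userInput }}.
    return re.sub(r"\{\{\s*\$(\w+)\s*\}\}", substitute, template)


prompt = render_prompt(SK_PROMPT, {"userInput": "Semantic Kernel lets apps call LLMs and native code."})
print(prompt)
```

Failing loudly on a missing variable is a design choice of this sketch: it is easier to debug than silently sending the model an unfilled placeholder.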

- Native functions: There are also native functions that the framework can call directly to perform tasks. Here is an example of a native function that retrieves content from a file:

using System.ComponentModel;
using Microsoft.SemanticKernel;

public class NativeFunctions
{
    [KernelFunction, Description("Retrieve content from local file")]
    public async Task<string> RetrieveLocalFile(string fileName, int maxSize = 5000)
    {
        string content = await File.ReadAllTextAsync(fileName);
        if (content.Length <= maxSize) return content;
        return content.Substring(0, maxSize);
    }
}

// Import the native function as a plugin
string plugInName = "NativeFunction";
string functionName = "RetrieveLocalFile"; // exposed under the method name by default
var nativeFunctions = new NativeFunctions();
kernel.ImportPluginFromObject(nativeFunctions, plugInName);

- Planner: The planner orchestrates execution plans and policies based on user input. The idea is to express how the execution should be done and then make it an instruction for the Semantic Kernel to follow. It then calls the necessary functions to execute the tasks. The following is an example of such a plan:

// Note: SequentialPlanner, ContextVariables, and KernelResult come from pre-1.0 releases of
// Semantic Kernel; Semantic Kernel 1.x dropped this planner in favor of automatic function calling.
string planDefinition = "Read content from a local file and summarize the content.";
SequentialPlanner sequentialPlanner = new SequentialPlanner(kernel);

string assetsFolder = @"../../assets";
string fileName = Path.Combine(assetsFolder, "docs", "06_SemanticKernel", "aci_documentation.txt");

ContextVariables contextVariables = new ContextVariables();
contextVariables.Add("fileName", fileName);

var customPlan = await sequentialPlanner.CreatePlanAsync(planDefinition);

// Execute the plan
KernelResult kernelResult = await kernel.RunAsync(contextVariables, customPlan);
Console.WriteLine($"Summarization: {kernelResult.GetValue<string>()}");

Pay special attention to planDefinition, the simple instruction the planner follows. The appropriate functions are then called according to this plan, in this case the semantic function SummarizeText and the native function RetrieveLocalFile.
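What the planner does with planDefinition, turning an instruction into an ordered list of function calls and feeding each result into the next, can be sketched in plain Python. In this sketch the plan is written by hand rather than generated by an LLM, and retrieve_local_file and summarize_text are hypothetical stand-ins for the native RetrieveLocalFile and semantic SummarizeText functions (the "summary" is faked by keeping the first sentence).

```python
import tempfile
from pathlib import Path
from typing import Callable

# Registry of callable functions, keyed as "<plugin>.<function>".
FUNCTIONS: dict[str, Callable[[str], str]] = {}


def register(name: str) -> Callable[[Callable[[str], str]], Callable[[str], str]]:
    def decorator(fn: Callable[[str], str]) -> Callable[[str], str]:
        FUNCTIONS[name] = fn
        return fn
    return decorator


@register("NativeFunction.RetrieveLocalFile")
def retrieve_local_file(file_name: str, max_size: int = 5000) -> str:
    # Native function: plain code, truncating like the C# example.
    return Path(file_name).read_text(encoding="utf-8")[:max_size]


@register("SemanticFunctions.SummarizeText")
def summarize_text(text: str) -> str:
    # Stand-in for an LLM call: keep only the first sentence.
    return text.split(". ")[0].strip() + "."


def run_plan(steps: list[str], initial_input: str) -> str:
    """Execute the steps in order, passing each step's output to the next step."""
    value = initial_input
    for step in steps:
        value = FUNCTIONS[step](value)
    return value


# A plan an LLM might produce for "Read content from a local file and summarize the content."
plan = ["NativeFunction.RetrieveLocalFile", "SemanticFunctions.SummarizeText"]

with tempfile.TemporaryDirectory() as folder:
    doc = Path(folder) / "notes.txt"
    doc.write_text("Semantic Kernel can call native code. It can also call prompts.", encoding="utf-8")
    summary = run_plan(plan, str(doc))

print(f"Summarization: {summary}")
```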

- Memory: Memory abstracts and simplifies context management for AI applications. The idea is that memory holds information the LLM should know. You can store this information in a vector store, backed by an in-memory database, a vector database, or something similar. Here is a very simplified example in which facts are added to memory:

var facts = new Dictionary<string, string>();
facts.Add(
    "Azure Machine Learning; https://learn.microsoft.com/azure/machine-learning/",
    @"Azure Machine Learning is a cloud service for accelerating and
managing the machine learning project lifecycle. Machine learning professionals,
data scientists, and engineers can use it in their day-to-day workflows"
);
facts.Add(
    "Azure SQL Service; https://learn.microsoft.com/azure/azure-sql/",
    @"Azure SQL is a family of managed, secure, and intelligent products
that use the SQL Server database engine in the Azure cloud."
);

// memory is an ISemanticTextMemory instance (for example, built with MemoryBuilder); setup omitted.
string memoryCollectionName = "SummarizedAzureDocs";
foreach (var fact in facts)
{
    // Each key has the form "<title>; <url>".
    await memory.SaveReferenceAsync(
        collection: memoryCollectionName,
        description: fact.Key.Split(";")[0].Trim(),
        text: fact.Value,
        externalId: fact.Key.Split(";")[1].Trim(),
        externalSourceName: "Azure Documentation"
    );
}

These facts are then stored in the memory collection SummarizedAzureDocs. This is a very simplified example, but it shows how information can be stored in memory for the LLM to use.
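Storing facts is only half of memory; the other half is retrieving the most relevant ones for a query. The following sketch shows that idea with a toy "embedding" (word-count vectors) and cosine similarity over the same two facts. The TinyMemory class and the embed function are hypothetical; a real setup would use an embedding model and a vector store instead.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy embedding: lower-cased word counts (a real system would call an embedding model).
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class TinyMemory:
    def __init__(self) -> None:
        self.collections: dict[str, list[tuple[str, str, Counter]]] = {}

    def save_reference(self, collection: str, external_id: str, text: str) -> None:
        self.collections.setdefault(collection, []).append((external_id, text, embed(text)))

    def search(self, collection: str, query: str) -> str:
        """Return the external id of the stored text most similar to the query."""
        q = embed(query)
        best = max(self.collections[collection], key=lambda item: cosine(q, item[2]))
        return best[0]


memory = TinyMemory()
memory.save_reference(
    "SummarizedAzureDocs",
    "https://learn.microsoft.com/azure/machine-learning/",
    "Azure Machine Learning is a cloud service for accelerating and managing the machine learning project lifecycle.",
)
memory.save_reference(
    "SummarizedAzureDocs",
    "https://learn.microsoft.com/azure/azure-sql/",
    "Azure SQL is a family of managed, secure, and intelligent products that use the SQL Server database engine in the Azure cloud.",
)
print(memory.search("SummarizedAzureDocs", "Which service uses the SQL Server database engine?"))
```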

That covers the basics of Semantic Kernel. What about the Agent Framework?

Azure AI Agent Service

Azure AI Agent Service is a more recent addition, introduced at Microsoft Ignite 2024. It allows you to develop and deploy AI Agents with more flexible model choices, such as directly calling open-source LLMs like Llama 3, Mistral, and Cohere.

Azure AI Agent Service provides stronger enterprise security mechanisms and data storage methods that make it suitable for enterprise applications.

It works out-of-the-box with Multi-Agent orchestration frameworks such as AutoGen and Semantic Kernel.

This service is currently in public preview and supports Python and C# for building Agents.

Core concepts

The Azure AI Agent Service has the following core concepts:

- Agent: Azure AI Agent Service integrates with Azure AI Foundry. In AI Foundry, AI Agents act as "smart" microservices that can answer questions (RAG), perform actions, or fully automate workflows. They do this by combining the capabilities of generative AI models with tools that let them access and interact with real-world data sources. Here is an example of an Agent:

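from azure.ai.projects import AIProjectClient
from azure.ai.projects.models import CodeInterpreterTool
from azure.identity import DefaultAzureCredential

# Preview azure-ai-projects SDK; use your own project connection string.
project_client = AIProjectClient.from_connection_string(credential=DefaultAzureCredential(), conn_str="YOUR_PROJECT_CONNECTION_STRING")
code_interpreter = CodeInterpreterTool()

agent = project_client.agents.create_agent(
    model="gpt-4o-mini",
    name="my-agent",
    instructions="You are helpful agent",
    tools=code_interpreter.definitions,
    tool_resources=code_interpreter.resources,
)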

In this example, an Agent is created with the model gpt-4o-mini, the name my-agent, and the instructions "You are helpful agent". The Agent is equipped with tools and resources to perform code interpretation tasks.

- Threads and messages: The thread is another important concept. It represents a conversation or interaction between an Agent and a user. Threads can be used to track the progress of a conversation, store contextual information, and manage the state of the interaction. Here is an example of a thread:

thread = project_client.agents.create_thread()
message = project_client.agents.create_message(
    thread_id=thread.id,
    role="user",
    content="Could you please create a bar chart for the operating profit using the following data and provide the file to me? Company A: $1.2 million, Company B: $2.5 million, Company C: $3.0 million, Company D: $1.8 million",
)

# Ask the agent to perform work on the thread
run = project_client.agents.create_and_process_run(thread_id=thread.id, agent_id=agent.id)

# Fetch and log all messages to see the agent's response
messages = project_client.agents.list_messages(thread_id=thread.id)
print(f"Messages: {messages}")

In the preceding code, a thread is created and a message is posted to it. Calling create_and_process_run then asks the Agent to perform work on the thread. Finally, the messages are fetched and logged to show the Agent's response. The messages reflect the progress of the conversation between the user and the Agent. Messages can also be of different types, such as text, images, or files; for example, the Agent's work may produce an image or a text response. As a developer, you can use this information to further process the response or present it to the user.
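The thread, message, and run relationship can be modeled with a few plain data classes to show how state accumulates on a thread and how a run appends typed messages. Thread, Message, run, and chart_agent are hypothetical names, and the "agent" is a stub function rather than a model; this is not the Azure AI Agent Service SDK.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Message:
    role: str  # "user" or "assistant"
    content: str
    kind: str = "text"  # messages can also carry other types, e.g. "image" or "file"


@dataclass
class Thread:
    id: str
    messages: list[Message] = field(default_factory=list)

    def add_message(self, role: str, content: str, kind: str = "text") -> Message:
        message = Message(role, content, kind)
        self.messages.append(message)
        return message


def run(thread: Thread, agent: Callable[[list[Message]], list[Message]]) -> None:
    """A run lets the agent read the whole thread and append its responses to it."""
    for reply in agent(thread.messages):
        thread.messages.append(reply)


def chart_agent(history: list[Message]) -> list[Message]:
    # Stub agent: acknowledges the latest request and "produces" an image file.
    request = history[-1].content
    return [
        Message("assistant", f"Created a bar chart for: {request[:30]}..."),
        Message("assistant", "operating_profit.png", kind="image"),
    ]


thread = Thread(id="thread_1")
thread.add_message("user", "Could you please create a bar chart for the operating profit?")
run(thread, chart_agent)
for m in thread.messages:
    print(m.role, m.kind, m.content)
```

Keeping the kind on each message is what lets a client decide whether to render text or download a file, as described above.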

- Integration with other AI frameworks: Azure AI Agent Service can interact with other frameworks such as AutoGen and Semantic Kernel. This means you can build parts of your application in one of these frameworks, for example using the Agent Service as an orchestrator, or you can build everything in the Agent Service.

Use cases: Azure AI Agent Service is designed for enterprise applications that require secure, scalable, and flexible AI Agent deployment.

What is the difference between these frameworks?

It sounds like there is a lot of overlap between these frameworks, but there are some key differences in their design, functionality, and target use cases:

- AutoGen: Focuses on event-driven, distributed agentic applications, supporting multiple Large Language Models (LLMs) and SLMs, tools, and advanced Multi-Agent design patterns.

- Semantic Kernel: Focuses on understanding and generating human-like text content by capturing deeper semantic meaning. It is designed to automate complex workflows and initiate tasks based on project goals.

- Azure AI Agent Service: Provides more flexible models, such as direct calls to open-source LLMs like Llama 3, Mistral, and Cohere. It provides stronger enterprise security mechanisms and data storage methods, making it suitable for enterprise applications.

Still not sure which one to choose?

Use cases

Let's go through some common use cases to see if we can help you:

Q: My team is developing a project that involves automating code generation and data analysis tasks. Which framework should we use?

A: AutoGen is a good choice in this case because it focuses on event-driven, distributed agentic applications and supports advanced Multi-Agent design patterns.

Q: What makes AutoGen better than Semantic Kernel and Azure AI Agent Service for this use case?

A: AutoGen is designed for event-driven, distributed agentic applications, making it ideally suited for automating code generation and data analysis tasks. It provides the tools and features needed to efficiently build complex Multi-Agent systems.

Q: It sounds like the Azure AI Agent Service works here as well, and it has tools for code generation and such?

A: Yes, Azure AI Agent Service also supports code generation and data analysis tasks, but it may be better suited for enterprise applications that require secure, scalable, and flexible AI Agent deployments. AutoGen is more focused on event-driven, distributed agentic applications and advanced Multi-Agent design patterns.

Q: So you're saying if I want to get into enterprise, I should go with Azure AI Agent Service?

A: Yes, Azure AI Agent Service is designed for enterprise applications that require secure, scalable, and flexible AI Agent deployments. It provides stronger enterprise security mechanisms and data storage methods that make it suitable for enterprise use cases.

Let's summarize the key differences in a table:

| Framework | Focus | Core concepts | Use cases |
|---|---|---|---|
| AutoGen | Event-driven, distributed agentic applications | Agents, Personas, Functions, Data | Code generation, data analysis tasks |
| Semantic Kernel | Understanding and generating human-like text content | Agents, Modular Components, Collaboration | Natural language understanding, content generation |
| Azure AI Agent Service | Flexible models, enterprise security, code generation, tool calling | Modularity, Collaboration, Process Orchestration | Secure, scalable, and flexible deployment of AI Agents |

What is the ideal use case for each of these frameworks?

- AutoGen: Event-driven, distributed agentic applications and advanced Multi-Agent design patterns. Ideal for automated code generation and data analysis tasks.

- Semantic Kernel: Understand and generate human-like text content, automate complex workflows, and initiate tasks based on project goals. Ideal for natural language understanding, content generation.

- Azure AI Agent Service: Flexible models, enterprise security mechanisms, and data storage methods. Ideal for secure, scalable, and flexible deployment of AI Agents in enterprise applications.

Can I integrate directly with my existing Azure ecosystem tools or do I need a standalone solution?

Yes, you can integrate your existing Azure ecosystem tools directly with Azure AI Agent Service, which is designed to work seamlessly with other Azure services. For example, you can integrate Bing, Azure AI Search, and Azure Functions. There is also deep integration with Azure AI Foundry.

For AutoGen and Semantic Kernel, you can also integrate with Azure services, but this may require you to call Azure services from code. Another integration method is to use the Azure SDK to interact with Azure services from your Agents. In addition, as mentioned earlier, you can use the Azure AI Agent Service as an orchestrator for Agents built in AutoGen or Semantic Kernel, which will give you easy access to the Azure ecosystem.

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.
