Why are multi-agent collaborative systems more prone to error?

Introduction

In recent years, multi-agent systems (MAS) have attracted much attention in the field of artificial intelligence. These systems attempt to solve complex, multi-step tasks through the collaboration of multiple Large Language Model (LLM) agents. However, despite high expectations, the performance of MAS in real-world applications has been less than stellar. Compared to single-agent frameworks, MAS show minimal performance gains on various benchmarks. To investigate the reasons behind this, Mert Cemri et al. conducted a comprehensive study.

Background and objectives of the study

The study aimed to reveal the key challenges that hinder the effectiveness of MAS. The research team analyzed five popular MAS frameworks, covering over 150 tasks, and invited six experts to manually annotate them. Through in-depth analysis of more than 150 conversation traces, the research team identified 14 unique failure modes and proposed a comprehensive taxonomy, the Multi-Agent System Failure Taxonomy (MASFT), that is applicable to various MAS frameworks.

Key findings

1. Classification of failure modes

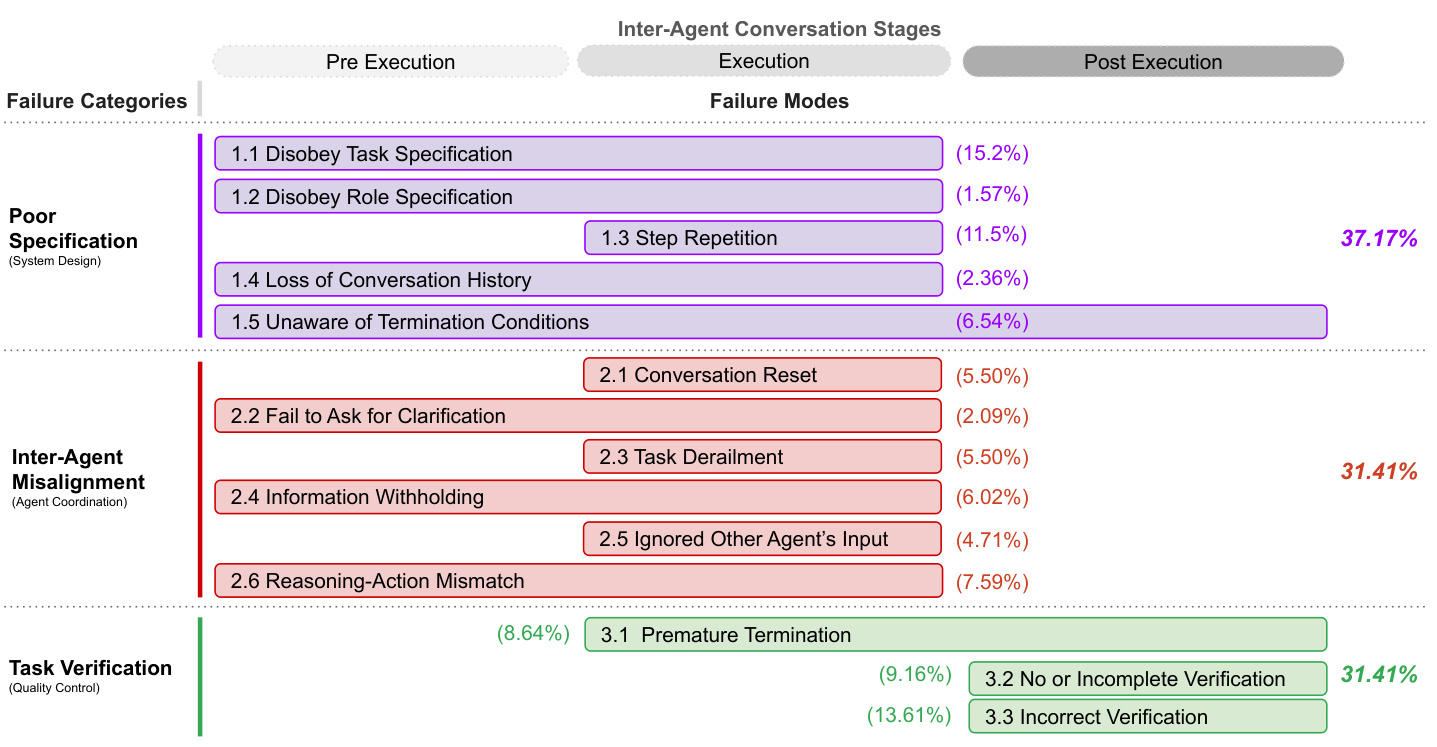

MASFT divides the agent execution process into three phases: pre-execution, execution, and post-execution, and identifies fine-grained failure modes that may occur in each phase. These failure modes are grouped into the following three broad categories:

- Specification and system design failures: Includes system architecture design flaws, poor dialog management, unclear or violated constraints on task specifications, and inadequate definition of or adherence to agent roles and responsibilities. For example, ChatDev fails to properly understand user input when performing a chess game task, resulting in a generated game that does not meet the initial requirements.

- Inter-agent misalignment: Covers ineffective communication, poor collaboration, conflicting behaviors between agents, and gradual deviation from the initial task. For example, when ChatDev was asked to create a Wordle-like game, the programmer agent went through seven rounds of conversation with multiple roles but failed to update the initial code, so the generated game was barely playable.

- Task validation and termination: Involves early termination of execution and the lack of mechanisms to ensure the accuracy, completeness, and reliability of interactions, decisions, and results. For example, in ChatDev's chess game implementation scenario, the validating agent only checks that the code compiles, without running the program or ensuring that it conforms to the rules of chess.
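
The chess example shows the gap between "the code compiles" and "the code does what was asked". The following is a minimal sketch of that difference, not ChatDev's actual verifier: the function names, the toy `is_knight_move` snippet, and the two rule checks are all assumptions made for illustration.

```python
from typing import Callable, Dict, List


def compiles(source: str) -> bool:
    """Shallow check: does the generated source parse as valid Python?"""
    try:
        compile(source, "<generated>", "exec")
        return True
    except SyntaxError:
        return False


def passes_behavior_checks(
    source: str, checks: List[Callable[[Dict[str, object]], bool]]
) -> bool:
    """Deeper check: execute the code and test it against task-level rules.

    NOTE: exec() on untrusted generated code is unsafe. A real verifier
    would run it in a sandbox; this is only an illustration.
    """
    namespace: Dict[str, object] = {}
    try:
        exec(source, namespace)
        return all(check(namespace) for check in checks)
    except Exception:
        return False


# Hypothetical generated code: syntactically valid, but it accepts any
# move whose offsets sum to 2, which is not how a knight moves.
GENERATED = '''
def is_knight_move(dx, dy):
    return abs(dx) + abs(dy) == 2
'''

# A knight moves in an L shape: offsets of (1, 2) or (2, 1).
CHESS_RULE_CHECKS = [
    lambda ns: ns["is_knight_move"](1, 2) is True,
    lambda ns: ns["is_knight_move"](2, 0) is False,
]

syntax_ok = compiles(GENERATED)
behavior_ok = passes_behavior_checks(GENERATED, CHESS_RULE_CHECKS)
```

Here `syntax_ok` is true while `behavior_ok` is false, so a compile-only verifier would accept code that a rule-aware verifier rejects.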

2. Failure mode analysis

The research team found that the failure of MAS was not due to a single cause, but rather a combination of factors. Here are some of the key findings:

- Specification and system design failures and inter-agent misalignment are the main reasons for MAS failure. This suggests that the architectural design of MAS and the interaction mechanisms between agents need further optimization.

- There are significant differences in the distribution of failure modes across MAS frameworks. For example, AG2 has fewer inter-agent misalignment failures but performs poorly on specification and validation issues, while ChatDev has fewer validation failures but faces more challenges with specification and inter-agent misalignment. These differences stem from different system topology designs, communication protocols, and interaction management approaches.

- Validation mechanisms play a crucial role in MAS, but not all failures can be attributed to inadequate validation. Other factors, such as unclear specifications, poor design, and inefficient communication, are also important contributors to failure.

Improvement strategies

In order to improve the robustness and reliability of MAS, the research team proposed the following two types of improvement strategies:

1. Tactical approach

- Improved prompts: Provide clear task descriptions and role definitions, encourage active dialog between agents, and add a self-validation step upon task completion.

- Optimized agent organization: Adopt a modular design with well-defined conversation patterns and termination conditions.

- Cross-validation: Improve the accuracy of validation through multiple LLM calls and majority voting mechanisms, or by resampling prior to validation.
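
The majority-voting idea in the last item can be sketched as follows. This is an illustrative outline under stated assumptions, not the study's implementation: each verifier is a plain Python function standing in for one independent LLM call, and the three stub verifiers and the `majority_accepts` name are hypothetical.

```python
from typing import Callable, List

# A verifier takes a candidate answer and returns True (accept) or False.
# In a real system each one would wrap a separate LLM call.
Verifier = Callable[[str], bool]


def majority_accepts(candidate: str, verifiers: List[Verifier]) -> bool:
    """Accept the candidate only if a strict majority of verifiers accept.

    Ties count as rejection, which is the conservative choice.
    """
    if not verifiers:
        raise ValueError("at least one verifier is required")
    votes = [verify(candidate) for verify in verifiers]
    return sum(votes) * 2 > len(votes)


# Hypothetical stubs for a task whose expected answer is "4".
def strict_verifier(answer: str) -> bool:
    return answer == "4"


def lenient_verifier(answer: str) -> bool:
    return answer.strip() == "4"


def unreliable_verifier(answer: str) -> bool:
    # Stands in for a verifier call that wrongly rejects everything.
    return False


VERIFIERS = [strict_verifier, lenient_verifier, unreliable_verifier]

clean_answer_accepted = majority_accepts("4", VERIFIERS)
padded_answer_accepted = majority_accepts(" 4", VERIFIERS)
```

With these stubs, `"4"` collects two of three votes and is accepted despite the unreliable verifier, while `" 4"` collects only one vote and is rejected.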

2. Structural strategies

- Establishment of standardized communication protocols: Clarify intentions and parameters to reduce ambiguity and enhance coordination between agents.

- Enhanced validation mechanisms: Develop generic validation mechanisms across domains or customize validation methods for different domains.

- Reinforcement learning: Fine-tune MAS agents through reinforcement learning, rewarding task-aligned behavior and penalizing inefficient behavior.

- Uncertainty quantification: Introduce a probabilistic confidence measure into agent interactions, so that an agent can pause to gather more information when its confidence falls below a preset threshold.

- Memory and state management: Develop more effective memory and state management mechanisms to enhance contextual understanding and reduce ambiguity in communication.
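
Two of the structural ideas above, a standardized message format with explicit intent and parameters and a confidence threshold that triggers information gathering, can be combined in one small sketch. Everything here is an assumption made for illustration: the `AgentMessage` fields, the intent names, and the threshold value of 0.7 do not come from the study.

```python
from dataclasses import dataclass, field
from typing import Dict

# Assumed threshold; a real system would tune this per task.
CONFIDENCE_THRESHOLD = 0.7


@dataclass(frozen=True)
class AgentMessage:
    """A structured message: who is speaking, to whom, and with what intent."""
    sender: str
    recipient: str
    intent: str  # hypothetical examples: "propose_fix", "request_review"
    params: Dict[str, str] = field(default_factory=dict)
    confidence: float = 1.0  # sender's self-reported confidence in [0, 1]


def next_action(message: AgentMessage,
                threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Decide whether the recipient should act on the message or pause."""
    if not 0.0 <= message.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if message.confidence < threshold:
        # Below the threshold: pause and gather more information first.
        return "gather_more_information"
    return "proceed"


unsure = AgentMessage(
    sender="programmer",
    recipient="reviewer",
    intent="propose_fix",
    params={"file": "game.py"},
    confidence=0.4,
)
confident = AgentMessage(
    sender="programmer",
    recipient="reviewer",
    intent="propose_fix",
    params={"file": "game.py"},
    confidence=0.9,
)

unsure_action = next_action(unsure)
confident_action = next_action(confident)
```

Making intent and parameters explicit fields rather than free text is one way to reduce ambiguity between agents; how the confidence value itself would be estimated is left open here.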

Case Studies

The research team applied some of the tactical methods in two case studies, AG2 and ChatDev, with varying degrees of success:

- AG2 - MathChat: Improved prompts and agent configurations raised task completion rates, but the new topology did not lead to significant improvements. This suggests that the effectiveness of these strategies depends on the characteristics of the underlying LLM.

- ChatDev: Refining the role-specific prompts and modifying the framework topology increased task completion rates, but the improvement was limited. This suggests the need for a more comprehensive solution.

Conclusion

This study provides the first systematic investigation of failure modes in LLM-based multi-agent systems and proposes MASFT as a taxonomy, which provides a valuable reference for future research. Although a tactical approach can lead to some improvements, more in-depth structural strategies are needed to build more robust and reliable MAS.

Future outlook

Future research should be devoted to developing more effective validation mechanisms, standardized communication protocols, reinforcement learning algorithms, and memory and state management mechanisms to address the challenges faced by MAS. In addition, how to apply the principles of high-reliability organizations to MAS design deserves in-depth exploration.

Charts and data

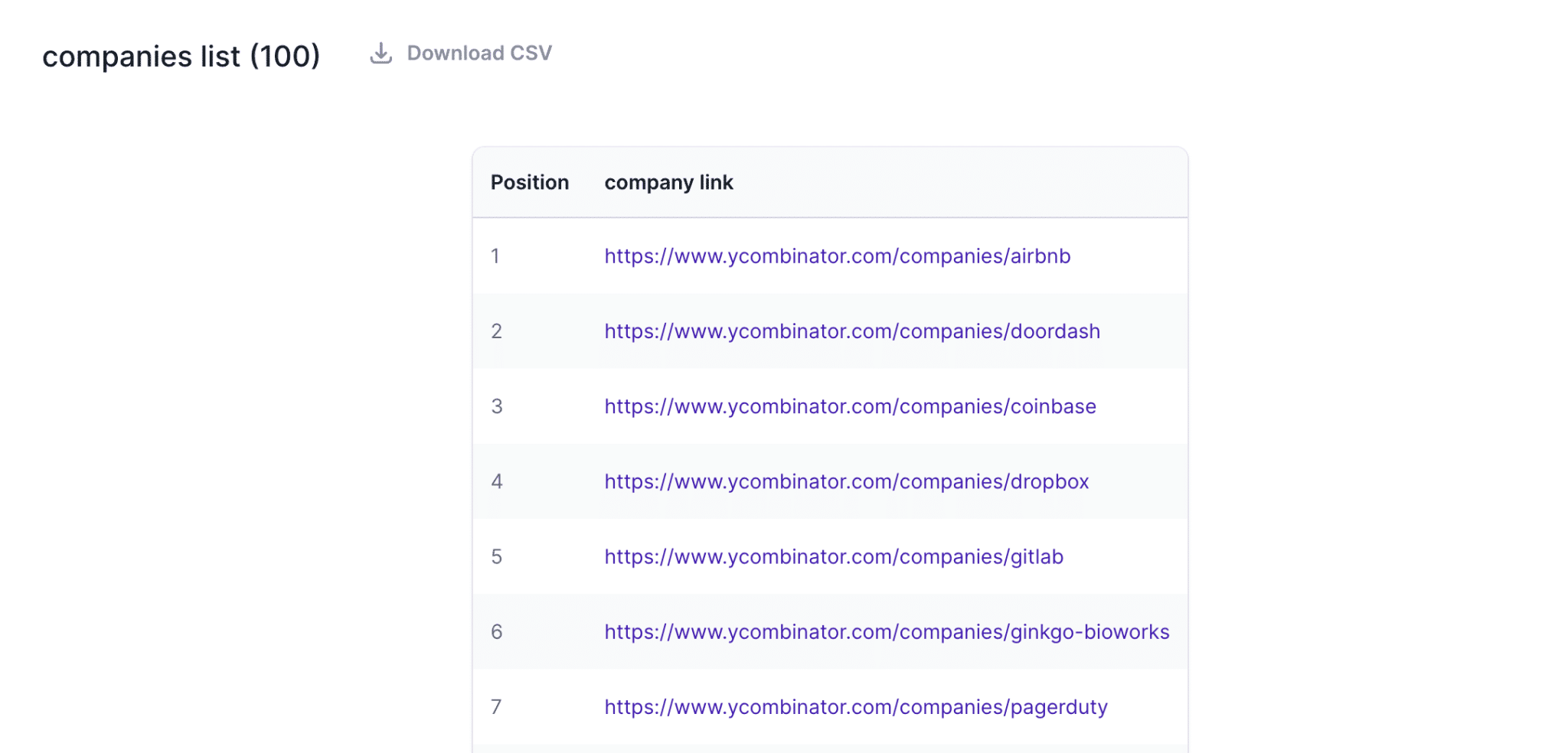

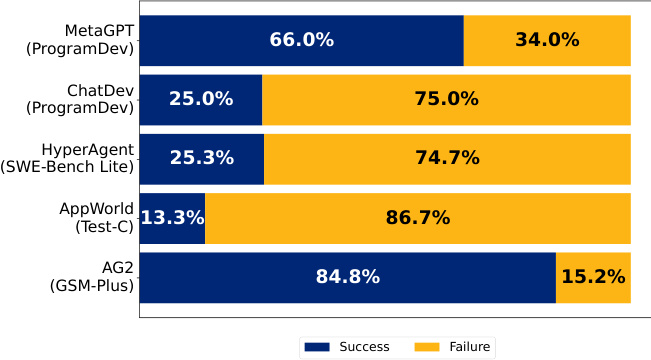

Figure 1. Failure rates of five popular multi-agent LLM systems using GPT-4o and Claude-3.

Figure 2. Taxonomy of MAS failure modes. The inter-agent conversation stages indicate where in the end-to-end MAS system a failure may occur. If a failure mode spans multiple stages, the problem involves or may occur in each of those stages. Percentages indicate how often each failure mode and category occurs in the 151 trajectories we analyzed.

Figure 3. MAS failure mode correlation matrix.

Through this research, practitioners in the MAS field can better understand why systems fail and take more effective steps to improve the performance and reliability of MAS.

Original: https://arxiv.org/pdf/2503.13657

