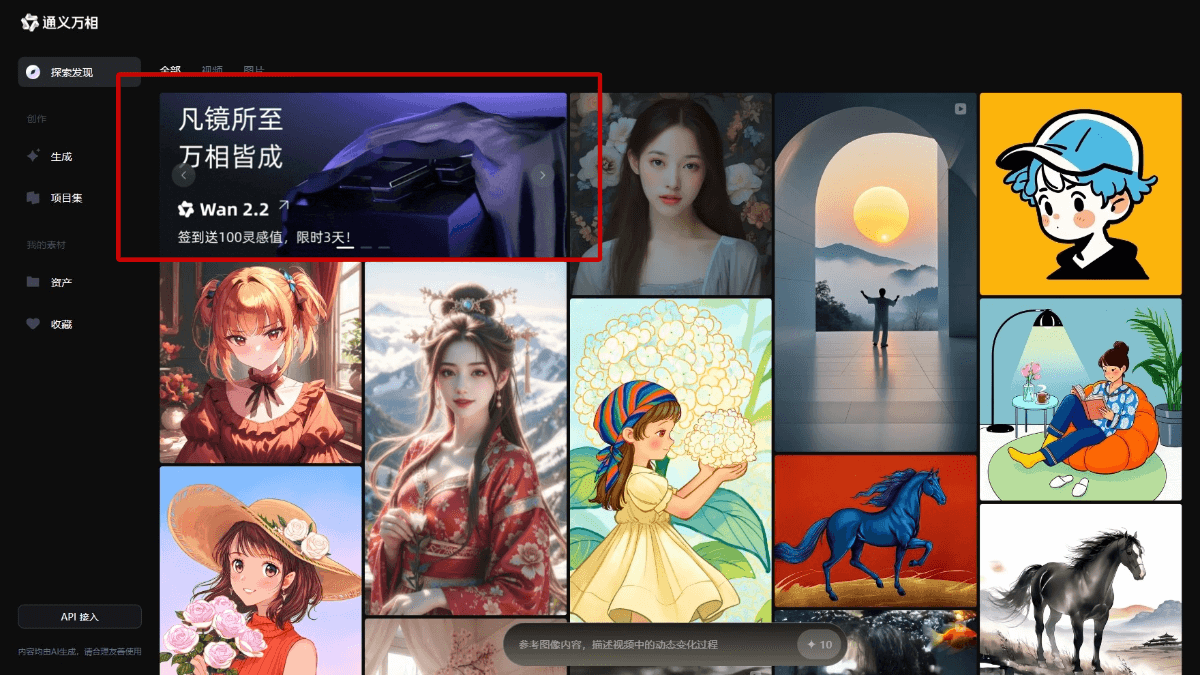

Tongyi Wanxiang Wan 2.2 - Open-source AI video generation model from Alibaba

What is Tongyi Wanxiang Wan 2.2?

Tongyi Wanxiang Wan 2.2 is an AI video generation model open-sourced by Alibaba, with 27 billion total parameters. It offers three modes: text-to-video, image-to-video, and unified video generation, so it can generate high-quality video from a text description, an image, or a combination of both. For the first time, the model brings a Mixture-of-Experts (MoE) architecture to its diffusion model and pairs it with a high-compression 3D VAE, improving generation quality and efficiency while supporting operation on consumer graphics cards. The model also includes a cinematic aesthetic control system that supports precise customization of lighting, color, and other effects. Currently, developers can obtain the model and code through GitHub, HuggingFace, and other platforms; enterprises can call the API for application development through Alibaba Cloud Bailian; and users can try the model directly on the Tongyi Wanxiang official website and in the Tongyi app. The model is suited to short video creation, advertising and marketing, education, film and television production, and similar scenarios.
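The article does not say how the experts in the MoE design are chosen. As a rough illustration of why such a design can increase total parameter count without increasing the work done per denoising step, the toy sketch below assumes two experts split by denoising progress. The function names, the 0.5 boundary, and the placeholder arithmetic are all hypothetical and stand in for real neural networks.

```python
from typing import Callable, List, Tuple

# Stand-ins for two large denoising networks (hypothetical placeholders).
def high_noise_expert(latent: List[float]) -> List[float]:
    return [0.5 * x for x in latent]  # placeholder for an early, coarse pass


def low_noise_expert(latent: List[float]) -> List[float]:
    return [0.9 * x for x in latent]  # placeholder for a late, detail pass


def select_expert(step: int, total_steps: int, boundary: float = 0.5) -> Callable:
    """Route early (noisier) steps to one expert and later steps to the other.

    The boundary value is an assumption made for this sketch.
    """
    progress = step / total_steps
    return high_noise_expert if progress < boundary else low_noise_expert


def denoise(latent: List[float], total_steps: int = 4) -> Tuple[List[float], List[str]]:
    """Run a toy denoising loop; only one expert is evaluated per step."""
    used = []
    for step in range(total_steps):
        expert = select_expert(step, total_steps)
        latent = expert(latent)
        used.append(expert.__name__)
    return latent, used


_, schedule = denoise([1.0, -2.0])
print(schedule)
```

Because only one expert runs at each step, the per-step cost in this sketch is that of a single expert even though two sets of weights exist in total.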

Main features of Tongyi Wanxiang Wan 2.2

- Text-to-video: The user enters a text description, such as "sunset by the sea", and the model generates video content that matches it.

- Image-to-video: Upload a picture and the model turns it into a dynamic video, bringing the static image to life.

- Unified video generation: Combine text and image inputs to generate video that matches the user's intent more accurately.

- Cinematic aesthetic control: Users can customize the look of a video by entering keywords (e.g., "high contrast", "symmetrical composition") that control lighting, color, and composition, producing a professional-looking result.

- Complex motion generation: Generates complex motion scenes and character interactions, making videos more dynamic and realistic.

Official links for Tongyi Wanxiang Wan 2.2

- GitHub repository: https://github.com/Wan-Video/Wan2.2

- HuggingFace model library: https://huggingface.co/Wan-AI/models

How to use Tongyi Wanxiang Wan 2.2

- Access platforms:

  - Official website: Visit the Tongyi Wanxiang official website directly or download the Tongyi app.

  - Developer platforms: For deeper development work, get the model code from GitHub or find the model resources on HuggingFace.

- Select a mode:

  - Text-to-video: Enter a text description, such as "a puppy playing in the park", click the Generate button, and the model generates a video based on the description.

  - Image-to-video: Upload an image and the model turns it into a dynamic video in which the image content moves.

  - Unified video generation: Provide both text and an image, and the model combines the two to generate more accurate video content.

- Video Settings: Adjust the video resolution, frame rate and other parameters to meet different needs.

- Aesthetic control: Customize the look of your video by entering keywords (e.g., "warm tones", "slow-mo") to give it a more professional feel.

- View results: The generated video is displayed directly on the page, where the user can preview it.

- Download or share: Download videos locally or share them directly to social media and other platforms.
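The steps above can be summarized in a small sketch. It is not the official API: `GenerationRequest`, its fields, and the default resolution and frame rate are hypothetical names and values chosen only to show how the supplied inputs determine the mode and how aesthetic keywords can be appended to a text description.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class GenerationRequest:
    """Hypothetical container for one generation job (not an official API)."""
    text: Optional[str] = None          # text description, if any
    image_path: Optional[str] = None    # path to an input image, if any
    aesthetic_keywords: List[str] = field(default_factory=list)
    resolution: str = "1280x720"        # assumed example value
    fps: int = 24                       # assumed example value

    def mode(self) -> str:
        """Pick the generation mode from which inputs were supplied."""
        if self.text and self.image_path:
            return "unified"
        if self.text:
            return "text-to-video"
        if self.image_path:
            return "image-to-video"
        raise ValueError("Provide a text description, an image, or both.")

    def prompt(self) -> str:
        """Append aesthetic keywords to the text description."""
        parts = [self.text] if self.text else []
        parts.extend(self.aesthetic_keywords)
        return ", ".join(parts)


request = GenerationRequest(
    text="a puppy playing in the park",
    aesthetic_keywords=["warm tones", "slow-mo"],
)
print(request.mode())    # text-to-video
print(request.prompt())  # a puppy playing in the park, warm tones, slow-mo
```

Keeping the keywords separate from the description makes it easy to reuse one description with different visual styles.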

Core advantages of Tongyi Wanxiang Wan 2.2

- Powerful generative capabilities: Tongyi Wanxiang Wan 2.2 supports text-to-video, image-to-video, and unified video generation, producing high-quality video from multiple input types to meet diverse needs.

- Efficient computing performance: Built on a Mixture-of-Experts (MoE) architecture and a high-compression 3D VAE, the model improves generation quality and computational efficiency and supports fast HD video generation on consumer graphics cards.

- Cinematic aesthetic control: Customize lighting, color, and composition with keywords to generate videos with a professional cinematic look that meets demanding aesthetic requirements.

- Wide applicability: Suitable for short video creation, advertising and marketing, education, film and television production, news media, and other scenarios, where it improves creative efficiency and content quality.

- Open source and openness: The code and models are open source, which facilitates research and secondary development by developers; community support and API access make it easier to integrate the model into enterprise systems.

- Efficient data training: Training on large-scale datasets improves the model's generalization and generation quality, supporting stable performance across different scenarios.

Who Tongyi Wanxiang Wan 2.2 is for

- Short video creators: Quickly generate engaging short video content, saving creation time and cost.

- Advertising and marketing staff: Generate high-quality advertisement videos to enhance advertisement effectiveness and brand impact.

- Educators: Generate vivid educational videos and training materials to improve learning outcomes and training quality.

- Film & TV Production Team: Rapidly generate scene designs and animation clips to improve creative efficiency and reduce production costs.

- Journalism and media practitioners: Generate animations and visual effects to enhance the visual impact and audience engagement of news stories.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
