ViTLP: A Visually Guided Generative Text-Layout Pre-training Model for Extracting Structured Data from Typographically Complex PDF Documents

General Introduction

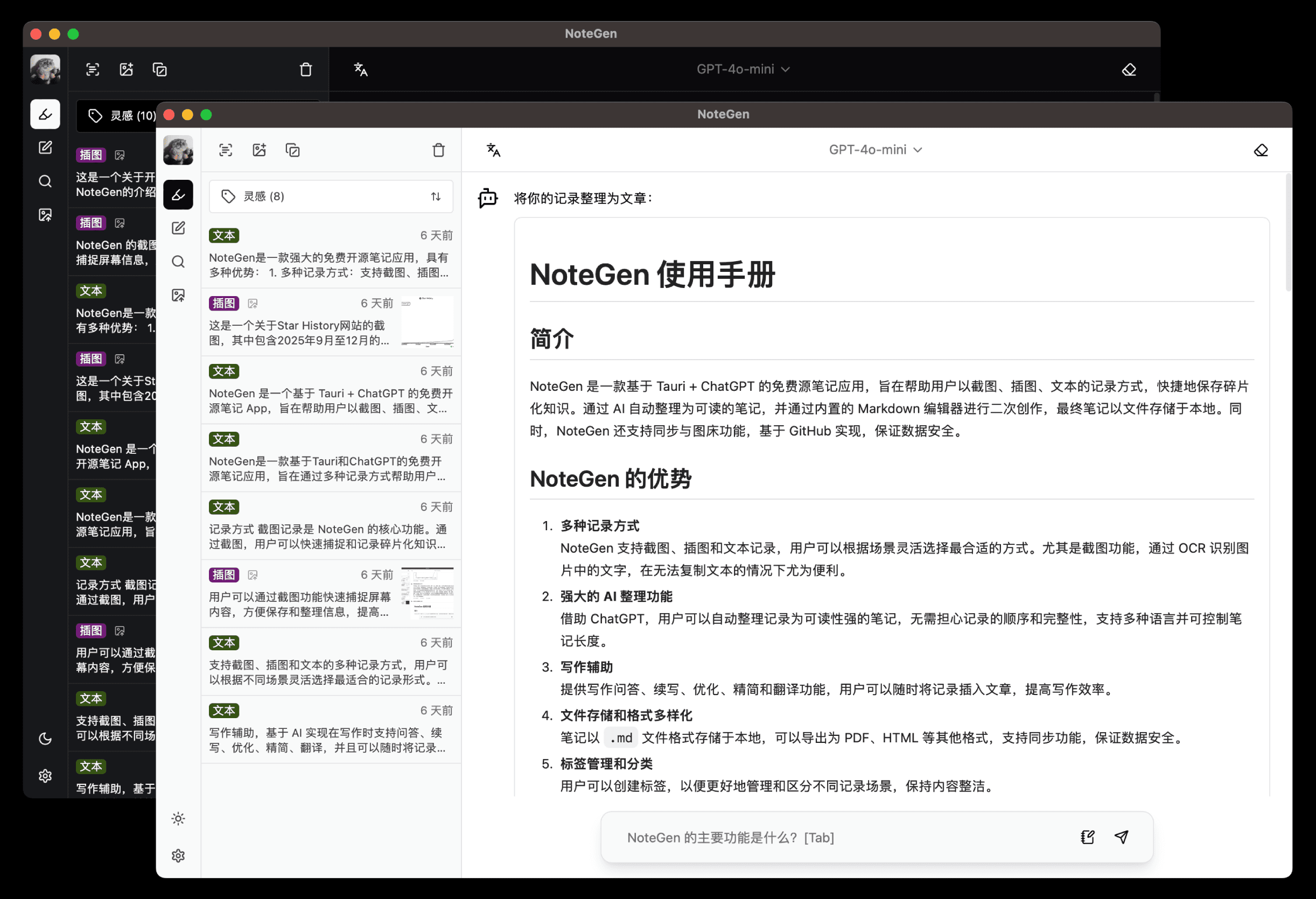

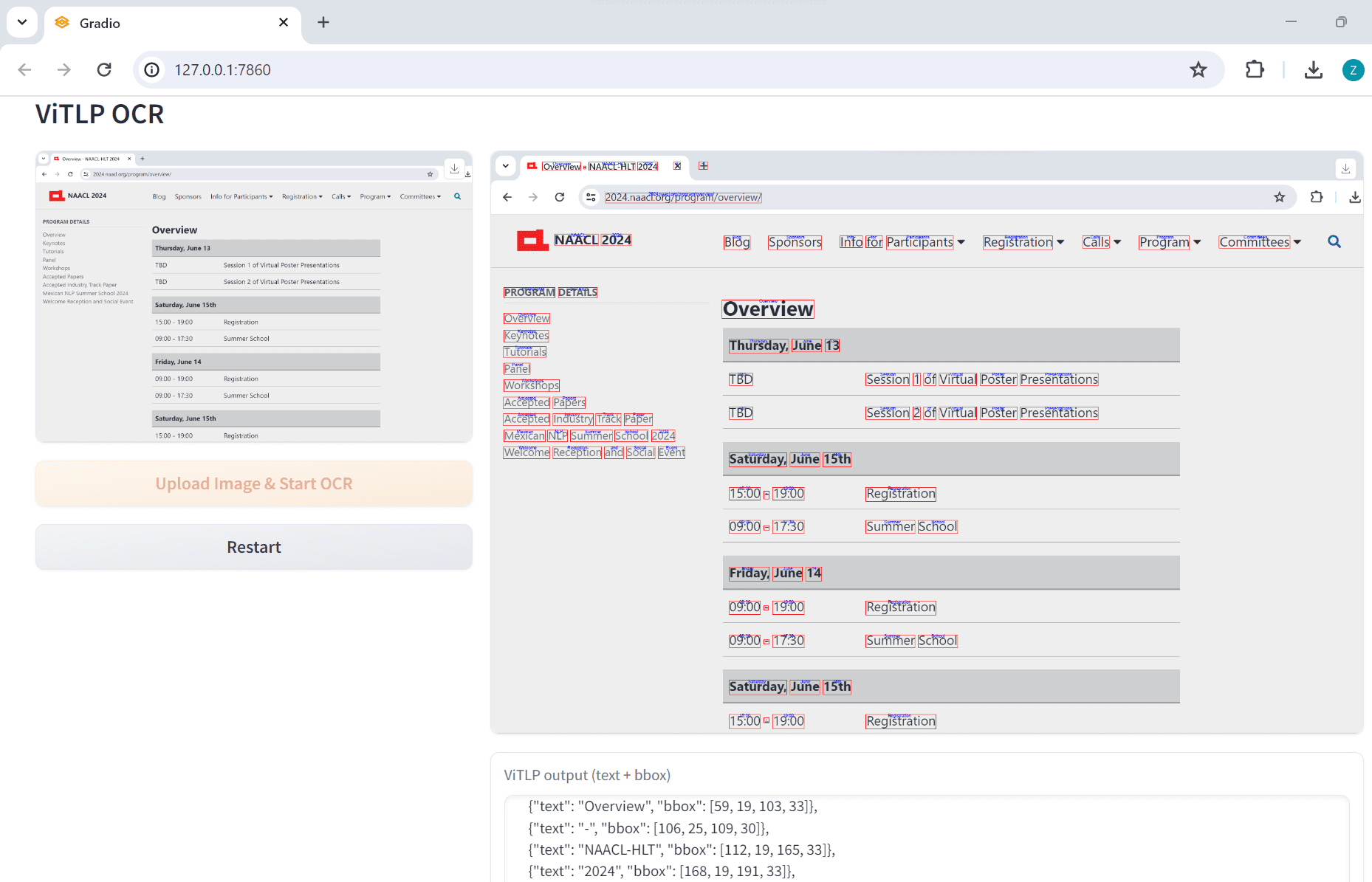

ViTLP (Visually Guided Generative Text-Layout Pre-training for Document Intelligence) is an open-source project that aims to improve document intelligence through a visually guided generative text-layout pre-training model. The project was developed by the Veason-silverbullet team and presented at NAACL 2024. The ViTLP model can localize and recognize OCR text, and a pre-trained ViTLP-medium (380M) checkpoint is available on Hugging Face. The code and model weights are available on GitHub and support OCR processing of document images and text layout generation.

Function List

- OCR text localization and recognition: The ViTLP model localizes and recognizes OCR text in document images.

- Pre-trained models: A pre-trained ViTLP-medium (380M) checkpoint is provided, which can be used directly or fine-tuned by the user.

- Document image processing: Supports uploading document images for OCR processing.

- Model fine-tuning: Provides fine-tuning tools for further training on OCR datasets and VQA datasets.

- Document synthesis tools: Provides document synthesis tools that produce positioning box metadata.

Usage Guide

Installation process

- Clone the ViTLP project code:

git clone https://github.com/Veason-silverbullet/ViTLP

cd ViTLP

- Install the dependencies:

pip install -r requirements.txt

- Download the pre-trained checkpoint:

mkdir -p ckpts/ViTLP-medium

git clone https://huggingface.co/veason/ViTLP-medium ckpts/ViTLP-medium
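Hugging Face model repositories typically store large weight files through Git LFS, so a clone made without Git LFS installed can leave small pointer stubs in place of the real weights. The following is a minimal sketch for checking the clone, assuming the checkpoint was cloned to ckpts/ViTLP-medium as above. The helper name `find_lfs_pointers` is hypothetical and not part of the ViTLP code; it relies only on the fact that Git LFS pointer files begin with a fixed version line.

```python
from pathlib import Path

# Every Git LFS pointer file starts with this line.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def find_lfs_pointers(ckpt_dir):
    """Return files under ckpt_dir that are still Git LFS pointer stubs."""
    root = Path(ckpt_dir)
    stubs = []
    for path in sorted(root.rglob("*")):
        # Skip directories and Git's own metadata.
        if not path.is_file() or ".git" in path.relative_to(root).parts:
            continue
        with path.open("rb") as fh:
            if fh.read(len(LFS_POINTER_PREFIX)) == LFS_POINTER_PREFIX:
                stubs.append(path)
    return stubs


if __name__ == "__main__":
    ckpt_dir = Path("ckpts/ViTLP-medium")  # path used in the clone step
    if not ckpt_dir.is_dir():
        print(f"{ckpt_dir} not found; run the clone step first")
    else:
        stubs = find_lfs_pointers(ckpt_dir)
        if stubs:
            print("Files not yet downloaded (Git LFS pointers):")
            for stub in stubs:
                print(f"  {stub}")
        else:
            print("No Git LFS pointer stubs found")
```

If stubs are reported, installing Git LFS and running `git lfs pull` inside the checkpoint directory should fetch the actual files.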

Usage Process

- OCR text recognition:

- Run the OCR script:

python ocr.py

- Upload a document image and the model will automatically perform OCR processing and output the results.

- Model fine-tuning:

- Refer to the instruction file in the ./finetuning directory for further training on OCR datasets and VQA datasets.

- Use the document synthesis tool to generate synthetic documents with positioning box metadata to enhance model training.

- Batch decoding:

- Use the batch decoding script:

bash decode.sh

- The script will batch process document images and output OCR results.

Detailed Function Operation

- OCR text localization and recognition: After uploading the document image, the model will automatically detect and recognize the text area, and output the text content and location information.

- Model fine-tuning: Users can further train the model on their own datasets with the provided fine-tuning tools to improve recognition accuracy in specific scenarios.

- Document synthesis tools: Generate documents with positioning box metadata via the synthesis tool to help the model better learn text layout and structure during training.
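To illustrate how text content paired with location information can be consumed downstream, here is a minimal sketch that groups recognized words into reading-order lines using their bounding boxes. The `Word` record and its pixel-coordinate fields are hypothetical; the article does not specify ViTLP's actual output format, so the real results would need to be mapped into this shape first.

```python
from dataclasses import dataclass


@dataclass
class Word:
    """Hypothetical OCR result: text plus a pixel bounding box (not ViTLP's real schema)."""
    text: str
    x0: float
    y0: float
    x1: float
    y1: float


def group_into_lines(words, y_tolerance=0.5):
    """Group words into lines of text.

    A word joins the current line when its vertical center lies within
    y_tolerance * (word height) of the line's mean vertical center.
    """
    lines = []
    for w in sorted(words, key=lambda w: ((w.y0 + w.y1) / 2, w.x0)):
        cy = (w.y0 + w.y1) / 2
        height = w.y1 - w.y0
        if lines:
            last = lines[-1]
            last_cy = sum((v.y0 + v.y1) / 2 for v in last) / len(last)
            if abs(cy - last_cy) <= y_tolerance * height:
                last.append(w)
                continue
        lines.append([w])
    # Within each line, read words left to right.
    return [" ".join(v.text for v in sorted(line, key=lambda v: v.x0)) for line in lines]


if __name__ == "__main__":
    words = [
        Word("world", 60, 10, 100, 20),
        Word("Hello", 10, 11, 50, 21),
        Word("Next", 10, 40, 40, 50),
    ]
    print(group_into_lines(words))  # ['Hello world', 'Next']
```

The tolerance-based grouping is a simple heuristic chosen for illustration; multi-column or rotated layouts would need a more careful ordering step.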
