VOP: OCR Tool for Extracting Complex Diagrams and Math Formulas

General Introduction

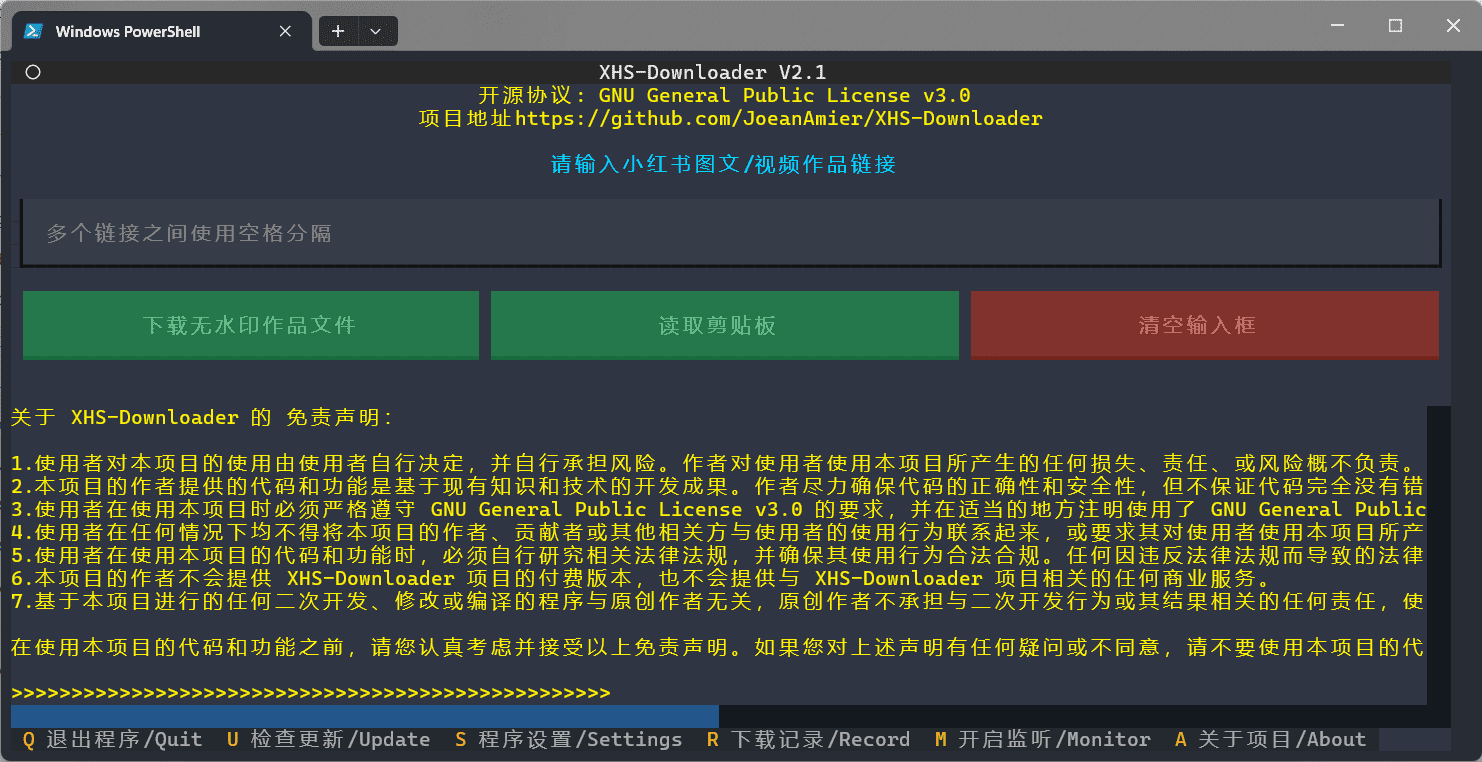

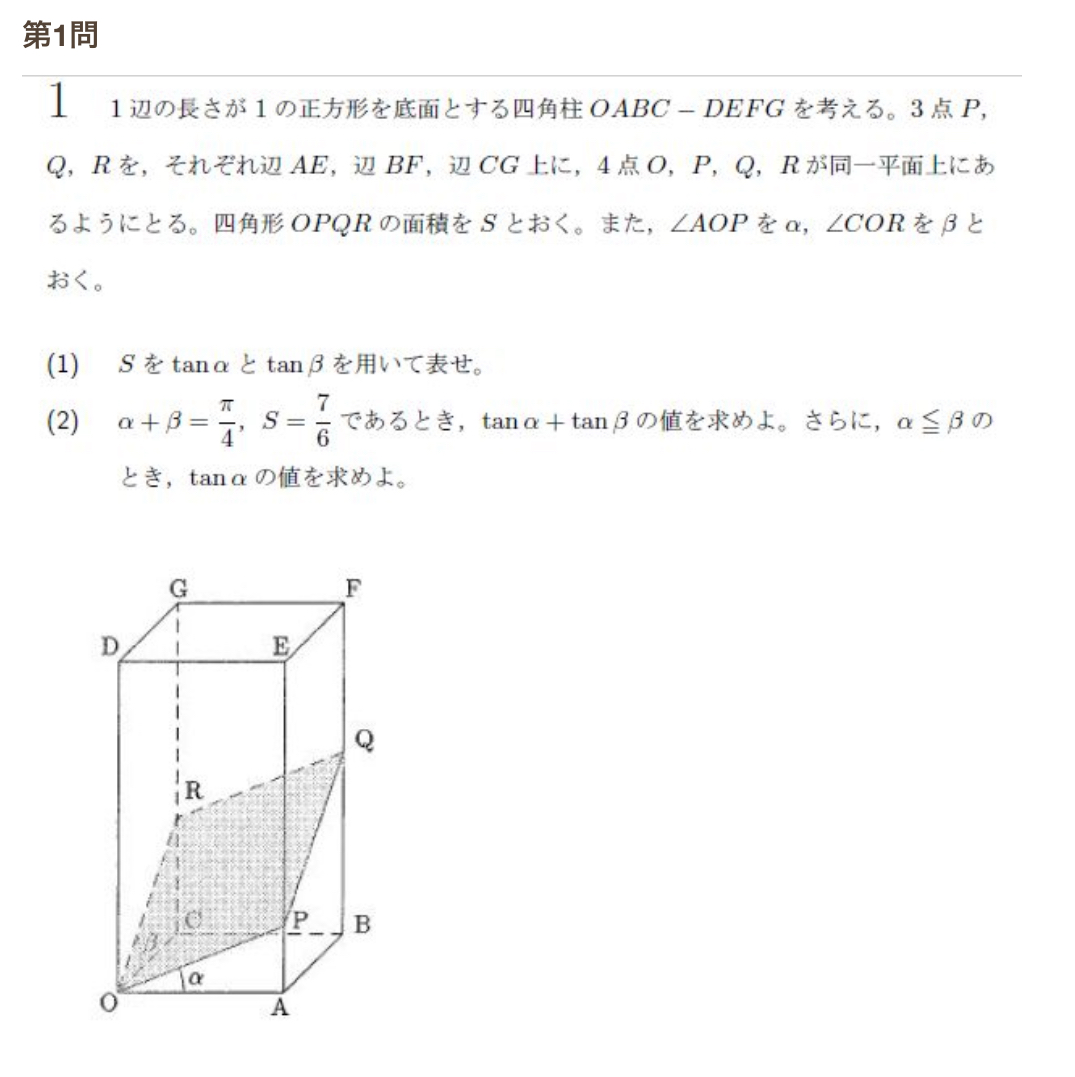

Versatile OCR Program (VOP) is an open-source Optical Character Recognition (OCR) tool designed for processing complex academic and educational documents. It extracts text, tables, mathematical formulas, charts, and schematics from PDFs, images, and other documents, and generates structured data suitable for machine learning training. It supports multiple languages, including English, Japanese, and Korean, and outputs JSON or Markdown, which is convenient for developers.

Function List

- Extract multilingual text. English, Japanese, and Korean are supported, and other languages can be added.

- Recognize mathematical formulas and generate LaTeX code and natural language descriptions.

- Parses tables, preserves row and column structure, and outputs structured data.

- Analyze diagrams and schematics to generate semantic annotations and descriptions (e.g., "This diagram shows the four stages of cell division").

- Handle PDFs with complex layouts, accurately identifying formula-dense paragraphs and visual elements.

- Output JSON or Markdown that includes semantic context, for use in AI training.

- Use DocLayout-YOLO, Google Vision API, MathPix and other technologies to improve recognition accuracy.

- Provides high accuracy of 90-95% on real academic datasets (e.g., EJU Biology, Eastern University Math).

- Supports batch processing to handle multiple file inputs.

Usage Guide

Installation process

To use Versatile OCR Program, you need to clone the repository and configure the environment. Below are the detailed steps:

- Clone the repository

Run in the terminal:

git clone https://github.com/ses4255/Versatile-OCR-Program.git

cd Versatile-OCR-Program

- Create a virtual environment

Python 3.8 or higher is recommended. Create and activate a virtual environment:

python -m venv venv

source venv/bin/activate # Linux/Mac

venv\Scripts\activate # Windows

- Install dependencies

Install the libraries required for the project:

pip install -r requirements.txt

Dependencies include `opencv-python`, `google-cloud-vision`, `mathpix`, `pillow`, etc. Ensure that the network connection is stable.

- Configure the API keys

The project relies on external APIs (e.g. Google Vision, MathPix) for advanced OCR processing:

  - Google Vision API: in the `config/` directory, create `google_credentials.json` and fill in the service account key. To get the key, visit the Google Cloud Console.

  - MathPix API: in the `config/` directory, create `mathpix_config.json` and fill in `app_id` and `app_key`. Register for a MathPix account to get the key.

  - Configuration file templates are available in the project `README.md`.

- Verify the installation

Run the test script to make sure the environment is correct:

python test_setup.py

If there are no errors, the installation is complete.
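Before running the test script, it can help to confirm that the two configuration files described above exist and parse. The following is a minimal sketch, not part of the project: the file names and the `app_id` / `app_key` fields come from the steps above, while `check_config` and `REQUIRED_KEYS` are hypothetical names, and the article does not show what `test_setup.py` itself checks.

```python
import json
from pathlib import Path

# File names and keys come from the article; the checks themselves are an assumption.
REQUIRED_KEYS = {
    "google_credentials.json": [],  # service account key; its fields are not listed in the article
    "mathpix_config.json": ["app_id", "app_key"],
}

def check_config(config_dir="config"):
    """Return a list of problems found; an empty list means every check passed."""
    problems = []
    for name, keys in REQUIRED_KEYS.items():
        path = Path(config_dir) / name
        if not path.is_file():
            problems.append(f"missing file: {name}")
            continue
        try:
            data = json.loads(path.read_text(encoding="utf-8"))
        except json.JSONDecodeError as exc:
            problems.append(f"invalid JSON in {name}: {exc}")
            continue
        for key in keys:
            if not isinstance(data, dict) or not data.get(key):
                problems.append(f"{name}: no value for '{key}'")
    return problems
```

Calling `check_config()` from the repository root returns an empty list when both files are present and the MathPix file has both fields filled in.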

Workflow

The Versatile OCR Program runs in two phases: initial extraction and semantic processing.

1. Initial OCR extraction

Run `ocr_stage1.py` to extract raw elements (text, tables, charts, etc.):

python ocr_stage1.py --input sample.pdf --output temp/

- `--input` specifies the input file (PDF or image, e.g. PNG, JPEG).

- `--output` specifies the intermediate results directory, which holds coordinates, cropped images, etc.

- Batch processing is supported: use `--input_dir` to specify a folder.

2. Semantic processing and final output

Run `ocr_stage2.py` to convert the intermediate data to structured output:

python ocr_stage2.py --input temp/ --output final/ --format json

- `--input` specifies the output directory of the first stage.

- `--format` selects the output format (`json` or `markdown`).

- The output contains text, formula descriptions, tabular data, and semantic labels for charts.
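The two stages can also be chained from a small wrapper script. This is a sketch under assumptions: it uses only the script names and flags shown above, `build_commands` and `run_pipeline` are hypothetical helper names, and it launches the scripts with the current interpreter (`sys.executable`) instead of a bare `python`.

```python
import subprocess
import sys

def build_commands(input_path, temp_dir="temp/", final_dir="final/", fmt="json"):
    """Build the stage 1 and stage 2 command lines shown in the article."""
    if fmt not in ("json", "markdown"):
        raise ValueError(f"unsupported format: {fmt}")
    stage1 = [sys.executable, "ocr_stage1.py",
              "--input", input_path, "--output", temp_dir]
    stage2 = [sys.executable, "ocr_stage2.py",
              "--input", temp_dir, "--output", final_dir, "--format", fmt]
    return [stage1, stage2]

def run_pipeline(input_path, **kwargs):
    """Run both stages in order; check=True raises on the first failing stage."""
    for cmd in build_commands(input_path, **kwargs):
        subprocess.run(cmd, check=True)
```

For example, `run_pipeline("sample.pdf", fmt="markdown")` run from the repository root would execute stage 1 and then stage 2, and skip stage 2 if stage 1 exits with an error.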

Main Functions

1. Multilingual text extraction

Extract text from PDF or images with multi-language support:

python ocr_stage1.py --input document.pdf --lang eng+jpn+kor --output temp/

python ocr_stage2.py --input temp/ --output final/ --format markdown

- `--lang` specifies the language: `eng` (English), `jpn` (Japanese), `kor` (Korean). Join multiple languages with `+`.

- The output file contains the text content and semantic context, saved as Markdown or JSON.
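When the language list comes from user input or a config file, the `+`-joined value can be built programmatically. The helper below is an illustrative sketch: `build_lang_value` and `KNOWN_CODES` are hypothetical names, and the set of accepted codes is limited to the three named above, so it would need extending for any language added later.

```python
KNOWN_CODES = ("eng", "jpn", "kor")  # codes named in the article

def build_lang_value(codes, known=KNOWN_CODES):
    """Join language codes with '+', dropping duplicates but keeping order."""
    seen = []
    for code in codes:
        code = code.strip().lower()
        if code not in known:
            raise ValueError(f"unknown language code: {code!r}")
        if code not in seen:
            seen.append(code)
    if not seen:
        raise ValueError("at least one language code is required")
    return "+".join(seen)

print(build_lang_value(["eng", "jpn", "kor"]))  # prints eng+jpn+kor
```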

2. Mathematical formula identification

Recognize formulas and generate LaTeX code and descriptions. For example, for the formula `x^2 + y = 5`, the output is "a quadratic equation with variables x and y". Usage:

python ocr_stage1.py --input math.pdf --mode math --output temp/

python ocr_stage2.py --input temp/ --output final/ --format json

- `--mode math` activates formula recognition.

- The output contains LaTeX code and natural language descriptions.
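Downstream code will usually want to pull the formula entries out of the JSON output. The article does not document the output schema, so the record layout below (`type`, `latex`, `description`) is purely an assumption for illustration, as is the helper name `formulas`. The sample reuses the formula and description quoted above.

```python
import json

# Hypothetical stage 2 record layout; the article does not document the real schema.
SAMPLE = """
[
  {"type": "text", "content": "Solve the equation."},
  {"type": "formula", "latex": "x^2 + y = 5",
   "description": "a quadratic equation with variables x and y"}
]
"""

def formulas(elements):
    """Yield (latex, description) pairs from elements tagged as formulas."""
    for element in elements:
        if element.get("type") == "formula":
            yield element.get("latex", ""), element.get("description", "")

for latex, description in formulas(json.loads(SAMPLE)):
    print(f"${latex}$: {description}")
```

If the real output uses different field names, only the keys inside `formulas` need to change.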

3. Analysis of tables

Extracts the table, preserving the row and column structure:

python ocr_stage1.py --input table.pdf --mode table --output temp/

python ocr_stage2.py --input temp/ --output final/ --format json

- `--mode table` enables dedicated table processing.

- The output is JSON with row and column data and a summary description.
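Row and column data of this kind is straightforward to turn back into a readable table. The sketch below assumes the rows are available as a list of lists with the header in the first row. That layout is an assumption, since the article does not show the actual JSON structure, and `rows_to_markdown` is a hypothetical helper.

```python
def rows_to_markdown(rows):
    """Render a list of rows (first row is the header) as a Markdown table."""
    if not rows:
        return ""
    width = max(len(row) for row in rows)
    # Convert cells to strings and pad short rows so every row has the same width.
    padded = [[str(cell) for cell in row] + [""] * (width - len(row)) for row in rows]
    header, *body = padded
    lines = [
        "| " + " | ".join(header) + " |",
        "| " + " | ".join(["---"] * width) + " |",
    ]
    lines += ["| " + " | ".join(row) + " |" for row in body]
    return "\n".join(lines)

print(rows_to_markdown([["Stage", "Count"], ["Prophase", 3]]))
```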

4. Chart and diagram analysis

Analyze charts or diagrams to generate semantic annotations. For example, a line graph might produce "Line graph showing temperature change from 2010 to 2020". Usage:

python ocr_stage1.py --input diagram.pdf --mode figure --output temp/

python ocr_stage2.py --input temp/ --output final/ --format markdown

- `--mode figure` enables chart analysis.

- The output contains the image description, extracted data points, and context.

Tips for use

- Improve accuracy: input high-resolution files (300 DPI recommended), and add `--dpi 300` at runtime to optimize image parsing.

- Batch processing: use `--input_dir data/` to process all files in a folder.

- Custom languages: edit `config/languages.json` to add a language. The corresponding OCR model (e.g. a Tesseract language pack) must be installed.

- Debug logging: add `--verbose` to view detailed runtime information.

- Compressed output: use `--compress` to reduce JSON file size.
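Before pointing `--input_dir` at a folder, it can be useful to see which files are in a supported format. This is a small sketch, assuming the supported formats are the PDF, PNG, and JPEG types mentioned in this article. `split_inputs` and `SUPPORTED_SUFFIXES` are hypothetical names, and how the tool itself treats unsupported files is not stated.

```python
from pathlib import Path

SUPPORTED_SUFFIXES = {".pdf", ".png", ".jpg", ".jpeg"}  # formats named in the article

def split_inputs(input_dir):
    """Return (supported, skipped) lists of files in a folder, sorted by path."""
    supported, skipped = [], []
    for path in sorted(Path(input_dir).iterdir()):
        if not path.is_file():
            continue  # ignore sub-folders
        if path.suffix.lower() in SUPPORTED_SUFFIXES:
            supported.append(path)
        else:
            skipped.append(path)
    return supported, skipped
```

For example, `supported, skipped = split_inputs("data/")` gives a quick view of what a batch run would have to work with.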

Caveats

- Ensure that input files are clear; low quality files may reduce recognition accuracy.

- External APIs require a stable network, and it is recommended to configure a backup key.

- The output directory needs enough free disk space, because large PDFs can produce large output files.

- The project is released under the GNU AGPL-3.0 license; derivative projects must make their source code publicly available.

- The project plans to release the AI pipeline integration within a month, so stay tuned.
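The advice about configuring a backup key can be combined with simple retries. The sketch below is generic and makes no claim about how the project handles keys internally: `call_with_fallback` is a hypothetical helper, and `call` stands for any function that takes a key and performs one API request.

```python
def call_with_fallback(call, keys, retries_per_key=2):
    """Try each key in order, moving to the next key after repeated failures."""
    last_error = None
    for key in keys:
        for _ in range(retries_per_key):
            try:
                return call(key)
            except Exception as exc:  # real code should catch the client's specific errors
                last_error = exc
    raise RuntimeError("all API keys failed") from last_error
```

A call such as `call_with_fallback(send_request, [primary_key, backup_key])` would try the primary key twice before switching to the backup, where `send_request` and the two key variables are placeholders for your own code.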

With these steps, users can quickly get started, process complex documents and generate AI training data.

Application Scenarios

- Academic research data extraction

Researchers can extract formulas, tables, and charts from exam papers or essays to generate datasets with semantic annotations. For example, Eastern University math exam papers were converted to JSON for geometry model training.

- Digitization of educational resources

Schools can convert paper textbooks or test papers into electronic format, extract multilingual text and graphics, and generate searchable archives. This suits multilingual processing for international courses.

- Machine learning dataset construction

Developers can extract structured data from academic documents to generate high-quality training sets. For example, they can extract cell division diagrams from biology papers, label the stage descriptions, and train image recognition models.

- Archive document processing

Libraries can convert historical academic documents to digital format, preserving formulas and table structures to improve retrieval efficiency. PDFs with complex layouts are supported.

- Exam analysis tools

Educational institutions can analyze the content of test papers, extract question types and charts, generate statistical reports, and optimize instructional design.

QA

- What input formats are supported?

PDF and images (PNG, JPEG) are supported. High-resolution PDFs are recommended to ensure accuracy.

- How can I improve table recognition accuracy?

Use clear source documents and enable `--dpi 300`. For Japanese tables, the Google Vision API outperforms MathPix; this can be adjusted in `config/`.

- Do I have to use a paid API?

The Google Vision and MathPix APIs require a paid account, but open-source modules such as DocLayout-YOLO are free. Configuring the APIs is recommended for best results.

- How do I add a new language?

Edit `config/languages.json` and add the language code and OCR model (e.g. a Tesseract language pack). Restart the program for the change to take effect.

- What if the output file is too large?

Use `--compress` to compress the JSON, or choose Markdown format. It is also possible to limit the output modules, e.g. extracting only text (`--mode text`).

- How can I contribute to the project?

Submit pull requests via GitHub, or contact the author at ses425500000@gmail.com. Code contributions and issue feedback are welcome.

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
