UltraLight Digital Human: An open source, ultra-lightweight digital human that runs in real time on-device, with a one-click installation package

General Introduction

Ultralight Digital Human is an open source project aiming to develop an ultra-lightweight digital human model that can run in real time on mobile devices. The project achieves smooth operation on mobile devices by optimizing algorithms and model structure for a variety of scenarios such as social applications, games and virtual reality. Users can easily train and deploy their own digital human models to enjoy personalized and immersive experiences.

To get the model running well on mobile devices, reduce the number of channels in the current model slightly and use wenet for the audio features.
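To get a feel for why trimming channels helps on mobile, here is a rough sketch of how the parameter count of a single 2D convolution scales with channel width. The layer sizes (64 channels, a 3x3 kernel, a 0.75 width factor) are illustrative assumptions, not values taken from the project's model.

```python
def conv2d_params(in_channels: int, out_channels: int, kernel_size: int = 3) -> int:
    """Weights plus biases of a standard 2D convolution layer."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    biases = out_channels
    return weights + biases


def scaled(channels: int, factor: float) -> int:
    """Scale a channel count by a width factor, keeping at least one channel."""
    return max(1, round(channels * factor))


if __name__ == "__main__":
    width = 64                      # hypothetical layer width
    slim_width = scaled(width, 0.75)
    base = conv2d_params(width, width)
    slim = conv2d_params(slim_width, slim_width)
    print(f"base: {base} params, slim: {slim} params, ratio: {slim / base:.2f}")
```

Because both the input and output widths shrink, the weight count falls roughly with the square of the width factor, which is why a small reduction in channels can noticeably lighten the model.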

Function List

- Real-time operation: Models run in real time on mobile devices and are responsive.

- Lightweight design: Optimized model structure for resource-limited mobile devices.

- Open source project: The code and model are completely open source and can be freely modified and used.

- Multi-scenario application: Suitable for a variety of scenarios such as social applications, gaming, and virtual reality.

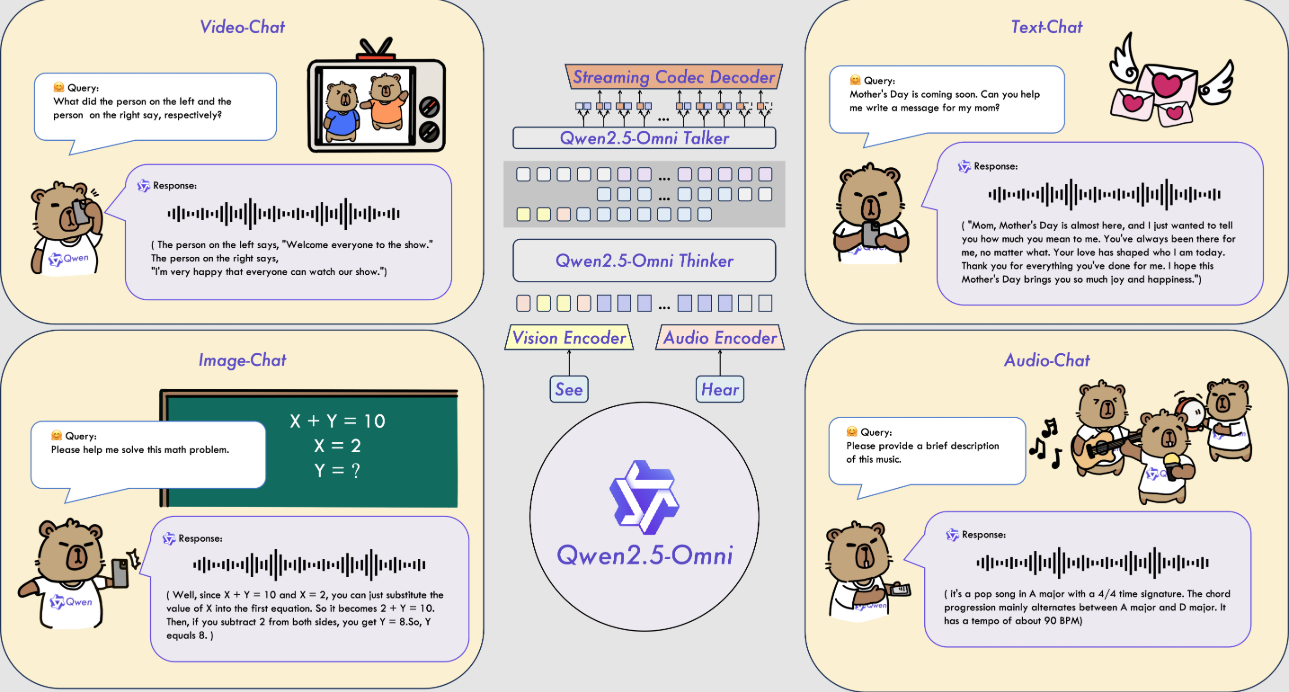

- Audio Feature Extraction: Supports both wenet and hubert audio feature extraction schemes.

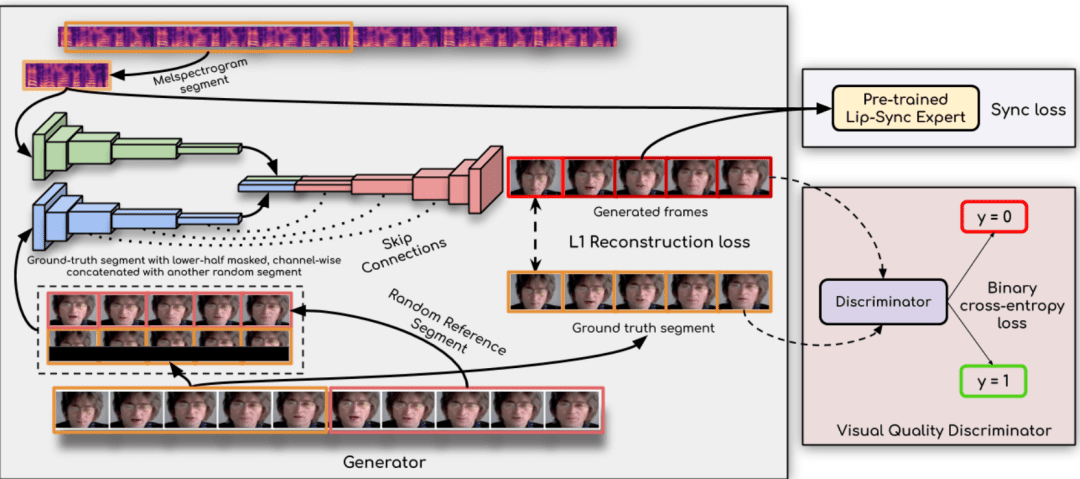

- Sync network: Improves lip synchronization using syncnet.

- Detailed Tutorial: Provides detailed training and usage tutorials to help users get started quickly.

Usage Guide

Installation process

- Environment preparation:

- Install Python 3.10 or later.

- Install PyTorch 1.13.1 and other dependencies:

    conda create -n dh python=3.10
    conda activate dh
    conda install pytorch==1.13.1 torchvision==0.14.1 torchaudio==0.13.1 pytorch-cuda=11.7 -c pytorch -c nvidia
    conda install mkl=2024.0
    pip install opencv-python transformers numpy==1.23.5 soundfile librosa onnxruntime

- Download model files:

- Download the wenet encoder.onnx file from the following link and place it in the data_utils/ directory: download link
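After installation, a quick check that the pinned packages are actually present can save debugging time later. The following is a minimal sketch using only the standard library; the version pins are copied from the install commands above, and the helper names (`check_package`, `installed_version`) are made up for illustration.

```python
from importlib import metadata

# Pins taken from the install commands above; None means "any version".
EXPECTED = {
    "torch": "1.13.1",
    "torchvision": "0.14.1",
    "torchaudio": "0.13.1",
    "numpy": "1.23.5",
    "opencv-python": None,
    "transformers": None,
    "soundfile": None,
    "librosa": None,
    "onnxruntime": None,
}


def check_package(expected, installed):
    """Return 'ok', 'missing', or 'mismatch' for one package."""
    if installed is None:
        return "missing"
    # Ignore local build suffixes such as '+cu117'.
    if expected is not None and installed.split("+")[0] != expected:
        return "mismatch"
    return "ok"


def installed_version(name):
    """Look up the installed version, or None if the package is absent."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    for name, expected in EXPECTED.items():
        found = installed_version(name)
        print(f"{name:15s} {check_package(expected, found):9s} found={found}")
```

Run it inside the activated `dh` environment; any line reporting `missing` or `mismatch` points at a package to reinstall.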

Usage Process

- Prepare the video:

- Prepare a 3-5 minute video. Make sure the full face is visible in every frame and that the audio is clear and free of noise.

- Place the video in a new folder.

- Extract audio features:

- Use the following commands to extract audio features:

    cd data_utils
    python process.py YOUR_VIDEO_PATH --asr hubert


- Train the model:

- Train the syncnet model to get better results:

    cd ..
    python syncnet.py --save_dir ./syncnet_ckpt/ --dataset_dir ./data_dir/ --asr hubert

- Train the digital human model using the lowest-loss checkpoint:

    python train.py --dataset_dir ./data_dir/ --save_dir ./checkpoint/ --asr hubert --use_syncnet --syncnet_checkpoint syncnet_ckpt


- Inference:

- Extract test audio features:

    python extract_test_audio.py YOUR_TEST_AUDIO_PATH --asr hubert

- Run inference:

    python inference.py --dataset ./data_dir/ --audio_feat ./your_test_audio_hu.npy --save_path ./output.mp4 --checkpoint ./checkpoint/best_model.pth

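The usage steps above can be chained from one script. The sketch below only assembles the same commands as argument lists and prints them; the working directories and the `build_pipeline` and `run` helpers are assumptions for illustration, and the paths are the placeholders used in this article.

```python
import shlex
import subprocess


def build_pipeline(video_path, test_audio, asr="hubert",
                   data_dir="./data_dir/",
                   audio_feat="./your_test_audio_hu.npy"):
    """Return (working_dir, argv) pairs mirroring the commands in this article."""
    return [
        ("data_utils", ["python", "process.py", video_path, "--asr", asr]),
        (".", ["python", "syncnet.py", "--save_dir", "./syncnet_ckpt/",
               "--dataset_dir", data_dir, "--asr", asr]),
        (".", ["python", "train.py", "--dataset_dir", data_dir,
               "--save_dir", "./checkpoint/", "--asr", asr,
               "--use_syncnet", "--syncnet_checkpoint", "syncnet_ckpt"]),
        (".", ["python", "extract_test_audio.py", test_audio, "--asr", asr]),
        (".", ["python", "inference.py", "--dataset", data_dir,
               "--audio_feat", audio_feat, "--save_path", "./output.mp4",
               "--checkpoint", "./checkpoint/best_model.pth"]),
    ]


def run(steps, dry_run=True):
    """Print each step; execute it only when dry_run is False."""
    for cwd, argv in steps:
        print(f"[{cwd}] {shlex.join(argv)}")
        if not dry_run:
            # check=True stops the pipeline at the first failing step.
            subprocess.run(argv, cwd=cwd, check=True)


if __name__ == "__main__":
    run(build_pipeline("YOUR_VIDEO_PATH", "YOUR_TEST_AUDIO_PATH"))
```

Keeping `dry_run=True` by default lets you review the exact commands before committing to a long training run.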

Notes

- Make sure that the video frame rate matches the chosen audio feature extraction scheme: 20fps for wenet and 25fps for hubert.

- During training, monitor the model's loss regularly and select the best (lowest-loss) checkpoint for the next stage.
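The frame-rate requirement in the notes above is easy to get wrong, so it can help to encode it once. This is a small sketch, assuming ffmpeg is installed and on the PATH; the helper names and file names are hypothetical, and only the 20/25 fps values come from this article.

```python
import shlex

# Frame rates stated in the notes above.
REQUIRED_FPS = {"wenet": 20, "hubert": 25}


def required_fps(asr: str) -> int:
    """Return the video frame rate expected by the given audio feature scheme."""
    try:
        return REQUIRED_FPS[asr]
    except KeyError:
        raise ValueError(f"unknown asr scheme: {asr!r}") from None


def ffmpeg_resample_cmd(src: str, dst: str, asr: str) -> list:
    """Build an ffmpeg command that re-encodes src at the required frame rate."""
    return ["ffmpeg", "-i", src, "-r", str(required_fps(asr)), dst]


if __name__ == "__main__":
    # Hypothetical file names; print the command rather than running it.
    print(shlex.join(ffmpeg_resample_cmd("input.mp4", "input_25fps.mp4", "hubert")))
```

Resample the video before running `process.py`, so that the extracted frames and audio features stay aligned.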

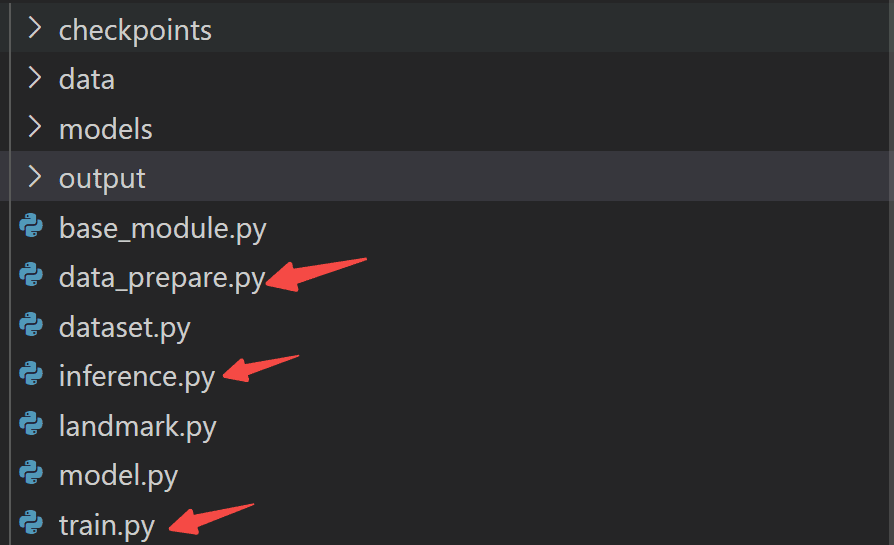

Monkey One-Click Integration Pack

https://pan.baidu.com/s/19DcRlR0kJVg4bLb7snUrBQ?pwd=tct1

For convenience, I have consolidated and packaged the above process (model weights and test cases included):

You only need to follow these foolproof steps:

STEP 0: Install the dependencies:

    conda create -n udh python=3.10
    conda activate udh
    pip install -r requirements.txt

STEP 1: Data preparation:

python data_prepare.py

STEP 2: Model training:

python train.py

STEP 3: Model inference:

python inference.py

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
