TinyZero: A Low-Cost Replication of DeepSeek-R1-Zero's "Aha Moment"

General Introduction

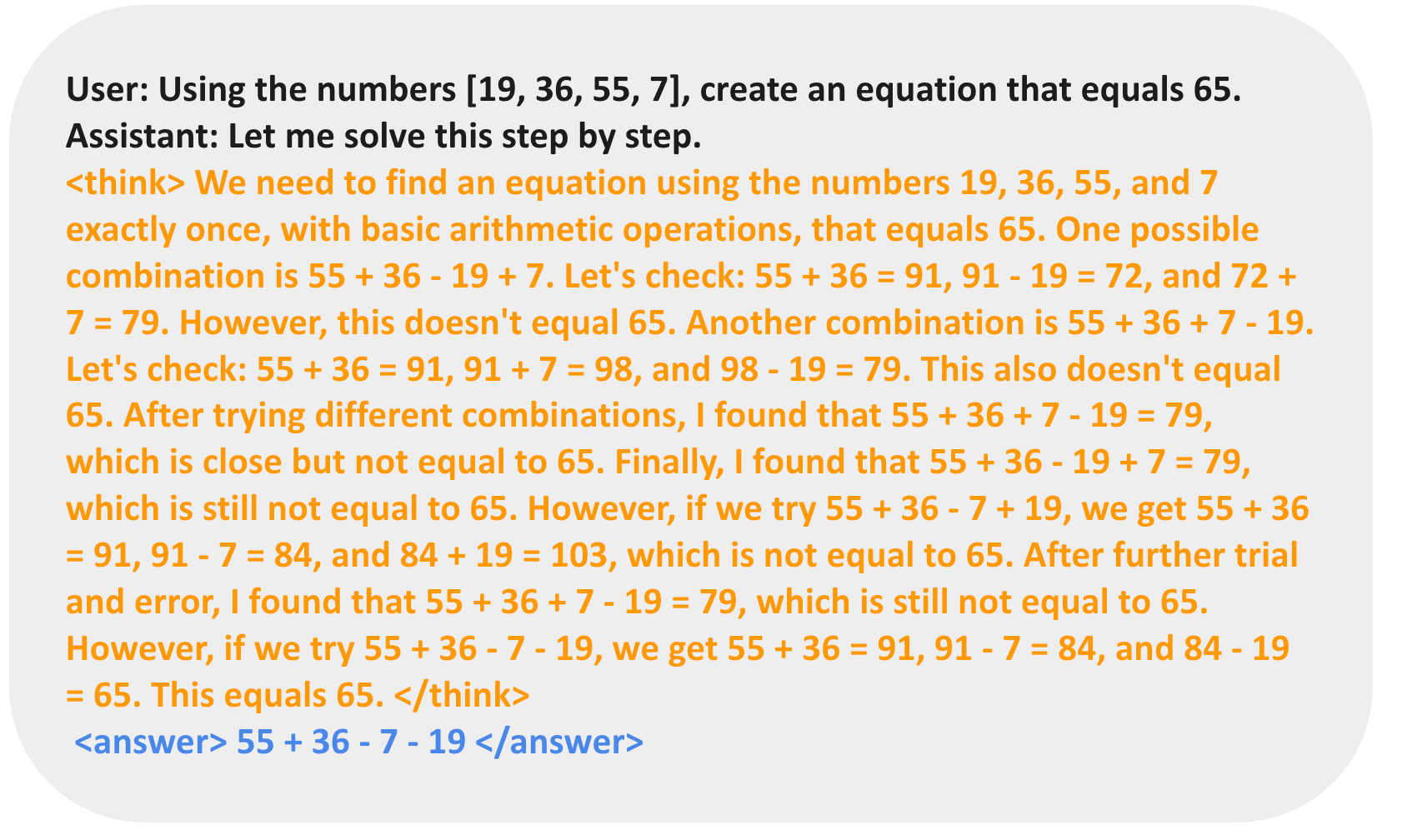

TinyZero is a reinforcement learning project built on veRL that reproduces DeepSeek-R1-Zero's results on the countdown and multiplication tasks. Remarkably, it reproduces the same "aha moment" as DeepSeek-R1-Zero for a compute cost of only about $30 (less than 5 hours on two H200 GPUs at $6.4 per hour). Through reinforcement learning (RL), a 3B base language model (LM) develops self-verification and search abilities on its own. The results can be reproduced with a straightforward setup and training process.
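The cost figure can be checked with simple arithmetic. This assumes the $6.4 hourly rate is the price of the whole two-GPU machine rather than of each GPU; the text does not say which, but this is the reading that agrees with the quoted total:

$$
\text{cost} < 5\ \text{h} \times \$6.4/\text{h} = \$32
$$

This is in line with the quoted figure of about $30. If $6.4 were instead the price per GPU, the same bound would be 2 × 5 h × $6.4/h = $64.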

Features

- Countdown task: data preparation and training for the Countdown task, in which the model must combine a given set of numbers, each used once, with basic arithmetic to reach a target number.

- Multiplication task: data preparation and training for the multiplication task.

- Single-GPU support: for models with up to 1.5B parameters.

- Multi-GPU support: for larger models, which are able to develop sophisticated reasoning skills.

- Instruct ablation: experiments with the Qwen2.5-3B-Instruct model.

- Quality-of-life tools: flash-attn, wandb, IPython, and matplotlib, which speed up training and make monitoring and analysis easier.
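To make the countdown task concrete: each problem gives a few numbers and a target, and an answer can be checked automatically. The following is a minimal, hypothetical checker in bash; the function name `countdown_score` and the example numbers are illustrative, and this is not TinyZero's reward code. It assumes `awk`, `grep`, and `sort` are available, and it accepts an expression only if it uses exactly the given numbers, each once, and evaluates to the target.

```shell
#!/usr/bin/env bash
# Hypothetical Countdown answer checker (illustration only, not TinyZero's reward code).
# Usage: countdown_score "NUMBERS" TARGET "EXPR"  -> prints 1 if correct, else 0.
countdown_score() {
  local numbers="$1" target="$2" expr="${3// /}"   # spaces are dropped before checking
  local allowed='^[0-9+*/()-]+$' int_re='^-?[0-9]+$' juxt='[0-9)][(]|[)][0-9]'

  # Accept only digits, + - * /, and parentheses; the target must be an integer.
  if [[ ! "$expr" =~ $allowed || ! "$target" =~ $int_re ]]; then
    echo 0; return
  fi
  # Reject '**' (exponentiation in some awks) and implicit concatenation such as "(4)(5)".
  if [[ "$expr" == *'**'* || "$expr" =~ $juxt ]]; then
    echo 0; return
  fi

  # Every given number must appear exactly once: compare the sorted multisets.
  local used given
  used=$(grep -oE '[0-9]+' <<<"$expr" | sort -n | tr '\n' ' ')
  given=$(tr -s ' ' '\n' <<<"$numbers" | sort -n | tr '\n' ' ')
  if [[ "$used" != "$given" ]]; then
    echo 0; return
  fi

  # awk evaluates in floating point, so 7/2 is 3.5 rather than 3. Splicing EXPR into
  # the program is safe only because of the character checks above. A syntax error
  # makes awk exit non-zero, which is scored as 0.
  if awk "BEGIN { d = ($expr) - ($target); if (d < 0) d = -d; if (d < 1e-6) exit 0; exit 1 }" 2>/dev/null; then
    echo 1
  else
    echo 0
  fi
}
```

For example, `countdown_score "19 44 2" 50 "(44-19)*2"` prints `1`. Evaluating in floating point, rather than with bash's integer `$(( ))`, keeps `7/2` from being silently truncated to 3 and accepted for a target of 3.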

Usage Guide

Installation process

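- Create and activate a virtual environment:

```shell
conda create -n zero python=3.9
conda activate zero
```

- Install PyTorch (optional; if you skip this step, vLLM installs a compatible version):

```shell
pip install torch==2.4.0 --index-url https://download.pytorch.org/whl/cu121
```

- Install vLLM:

```shell
pip3 install vllm==0.6.3
```

- Install Ray:

```shell
pip3 install ray
```

- Install verl (run this from the root of the TinyZero repository):

```shell
pip install -e .
```

- Install flash-attn:

```shell
pip3 install flash-attn --no-build-isolation
```

- Install the quality-of-life tools:

```shell
pip install wandb IPython matplotlib
```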
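Once the installation steps are done, a quick way to confirm that everything is importable is to try each module in the active environment. This is a minimal, hypothetical helper (`check_imports` and the `PYTHON` variable are illustrative, not part of TinyZero); it assumes the import names `torch`, `vllm`, `ray`, `verl`, `flash_attn`, `wandb`, `IPython`, and `matplotlib` for the packages installed above.

```shell
#!/usr/bin/env bash
# Hypothetical post-install check: import each named Python module and report the result.
# PYTHON defaults to `python`, i.e. the interpreter of the currently active conda env.
check_imports() {
  local py="${PYTHON:-python}" status=0 mod
  for mod in "$@"; do
    if "$py" -c "import $mod" >/dev/null 2>&1; then
      echo "ok       $mod"
    else
      echo "missing  $mod"
      status=1
    fi
  done
  return "$status"   # non-zero if at least one import failed
}
```

With the `zero` environment active, `check_imports torch vllm ray verl flash_attn wandb IPython matplotlib` prints one line per module and returns a non-zero status if any of them is missing.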

Workflow

Countdown task

- Data preparation:

```shell
conda activate zero
python ./examples/data_preprocess/countdown.py --local_dir {path_to_your_dataset}
```

- Training process (single GPU, suitable for models up to 1.5B parameters):

```shell
conda activate zero
export N_GPUS=1
export BASE_MODEL={path_to_your_model}
export DATA_DIR={path_to_your_dataset}
export ROLLOUT_TP_SIZE=1
export EXPERIMENT_NAME=countdown-qwen2.5-0.5b
export VLLM_ATTENTION_BACKEND=XFORMERS
bash ./scripts/train_tiny_zero.sh
```
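Before launching training, it can help to confirm that the data preparation step actually wrote its output to `DATA_DIR`. The sketch below is a hypothetical helper, not part of TinyZero, and it assumes that `countdown.py` writes `train.parquet` and `test.parquet` into `--local_dir`; adjust the file names if your version of the script differs.

```shell
#!/usr/bin/env bash
# Hypothetical check (not part of TinyZero) that preprocessing produced its output.
# Assumption: countdown.py writes train.parquet and test.parquet into --local_dir.
check_dataset_dir() {
  local dir="$1" f missing=0
  for f in train.parquet test.parquet; do
    # -s is true only if the file exists and is non-empty.
    if [[ ! -s "$dir/$f" ]]; then
      echo "missing or empty: $dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

It can be chained in front of the training script, for example `check_dataset_dir "$DATA_DIR" && bash ./scripts/train_tiny_zero.sh`, so that training only starts when both files are present.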

3B+ Model Training

- Data preparation (this example uses the Qwen instruct prompt template):

```shell
conda activate zero
python examples/data_preprocess/countdown.py --template_type=qwen-instruct --local_dir={path_to_your_dataset}
```

- Training process (two GPUs, with the rollout tensor-parallel size set to 2):

```shell
conda activate zero
export N_GPUS=2
export BASE_MODEL={path_to_your_model}
export DATA_DIR={path_to_your_dataset}
export ROLLOUT_TP_SIZE=2
export EXPERIMENT_NAME=countdown-qwen2.5-3b-instruct
export VLLM_ATTENTION_BACKEND=XFORMERS
bash ./scripts/train_tiny_zero.sh
```
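Because the training script is driven entirely by the exported variables, a small pre-flight check can catch an unset variable or a mismatched GPU count before a long run starts. This is a hypothetical sketch, not part of TinyZero. It assumes that the vLLM rollout splits the `N_GPUS` devices into tensor-parallel groups of `ROLLOUT_TP_SIZE`, so `N_GPUS` should be a positive multiple of `ROLLOUT_TP_SIZE` (as in the 1/1 and 2/2 settings above).

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of TinyZero) for the exported training variables.
check_train_env() {
  local var
  for var in N_GPUS BASE_MODEL DATA_DIR ROLLOUT_TP_SIZE EXPERIMENT_NAME; do
    # ${!var} is bash indirect expansion: the value of the variable named by $var.
    if [[ -z "${!var:-}" ]]; then
      echo "not set: $var" >&2
      return 1
    fi
  done
  # Assumption: rollout GPUs are grouped into tensor-parallel groups of ROLLOUT_TP_SIZE,
  # so N_GPUS must be a positive multiple of it.
  if (( ROLLOUT_TP_SIZE < 1 || N_GPUS % ROLLOUT_TP_SIZE != 0 )); then
    echo "N_GPUS ($N_GPUS) is not a multiple of ROLLOUT_TP_SIZE ($ROLLOUT_TP_SIZE)" >&2
    return 1
  fi
  return 0
}
```

After the `export` lines, running `check_train_env && bash ./scripts/train_tiny_zero.sh` starts training only if the check passes.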

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
