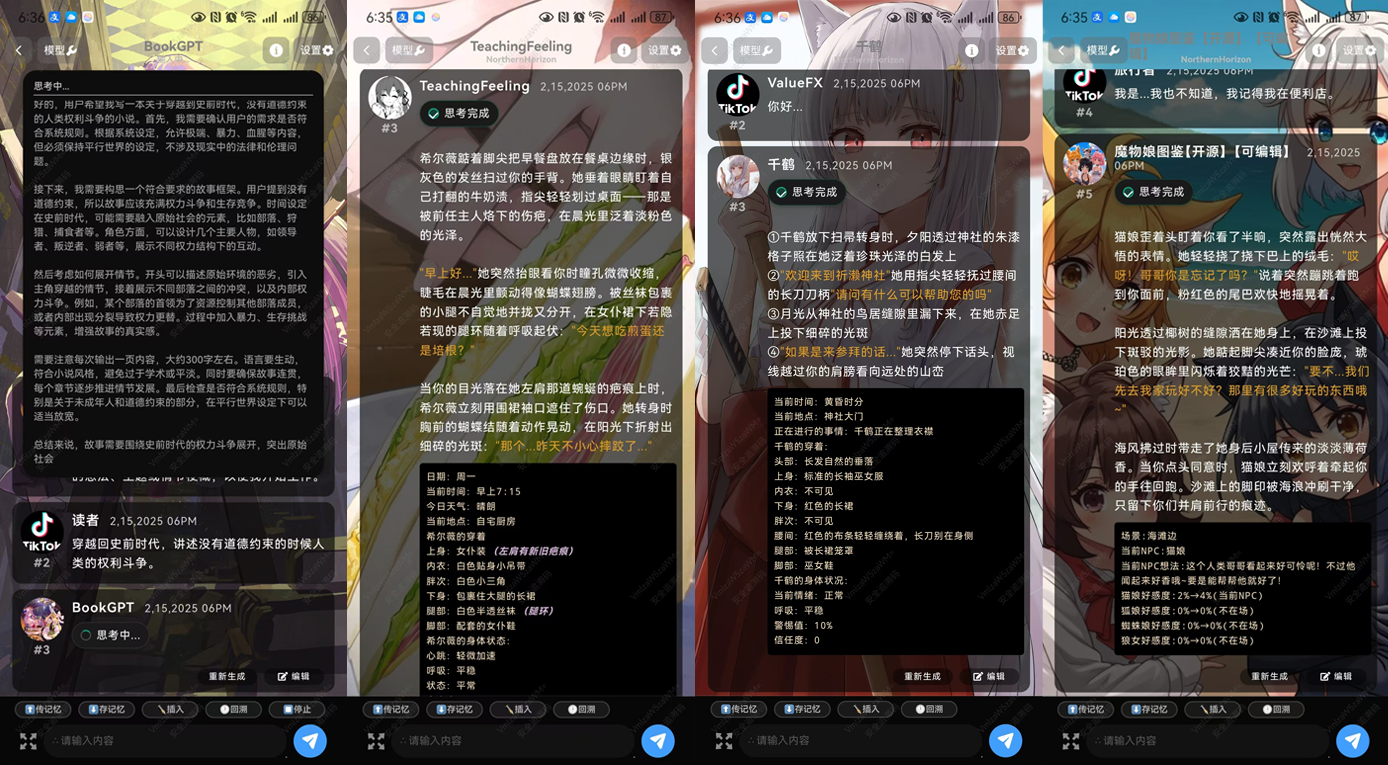

Tifa-DeepsexV2-7b-MGRPO: a model for role-playing and complex dialogue, claimed to outperform 32B models (one-click installer included)

General Introduction

Tifa-DeepsexV2-7b-MGRPO-GGUF-Q4 is an efficient language model designed for complex role-playing and multi-turn conversation. It is deeply optimized from Qwen 2.5-7B and targets text generation and dialogue, particularly in scenarios that call for creativity and complex logical reasoning, such as novel writing, scriptwriting, and in-depth dialogue. Through multi-stage training, the model is able to process long texts and generate coherent content.

DeepsexV2 is the upgraded successor to Tifa-Deepsex-14b-CoT: a smaller but more capable model. It is currently in its first phase of training, and an experimental version has been released; the download link is at the bottom of this article.

Technical characteristics

- Model architecture: Based on the Qwen 2.5-7B architecture and optimized for efficient text generation and complex dialogue.

- Training data: Trained on a dataset generated with Tifa_220B, using the MGRPO algorithm to improve the quality and coherence of the generated content.

- Optimization techniques: MGRPO optimization improves the model's long-text generation and logical inference. Training efficiency is low, but the performance gain is significant.

- Multi-turn dialogue: Optimized multi-turn conversation lets the model handle a continuous stream of user questions, for scenarios such as virtual assistants and role-playing.

- Context length: A stated 1,000,000-word context capacity for handling long texts and generating coherent content.
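One practical consequence of the multi-turn dialogue and long-context points above is that an application has to choose how much earlier conversation to resend on each turn. The following is a minimal sketch of one common approach, assuming messages are stored as role/content dictionaries and that a word count is an acceptable rough stand-in for the model's real token count. The names trim_history and max_words are illustrative and are not part of the model's tooling.

```python
def trim_history(messages, max_words):
    """Drop the oldest non-system turns until the history fits max_words."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]

    def word_count(items):
        # Whitespace-separated words; only a rough proxy for tokens.
        return sum(len(m["content"].split()) for m in items)

    # Remove turns from the front (oldest first), always keeping the latest one.
    while len(turns) > 1 and word_count(system + turns) > max_words:
        turns.pop(0)
    return system + turns


conversation = [
    {"role": "system", "content": "You are a narrator."},
    {"role": "user", "content": "one two three"},
    {"role": "assistant", "content": "four five six"},
    {"role": "user", "content": "seven eight"},
]
trimmed = trim_history(conversation, max_words=9)
print([m["role"] for m in trimmed])
```

Keeping the system message fixed preserves the character definition in a role-play session while older exchanges are dropped first.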

Function List

- Role-playing: Supports multiple character simulations and complex dialogue, making it suitable for scenarios such as games and virtual assistants.

- Text generation: Trained on a large amount of data, the model can generate high-quality, coherent long texts.

- Logical inference: Supports complex logical reasoning tasks for applications that require deep thinking.

- Multi-turn dialogue: Optimized multi-turn conversation capability for handling continuous questions and conversations from users.

- Creative writing: Provides creative writing support for scenarios such as novel writing and screenwriting.

Usage Guide

How to use Tifa-DeepsexV2-7b-MGRPO-GGUF-Q4

- Installation and configuration:

- Find the Tifa-DeepsexV2-7b-MGRPO-GGUF-Q4 model page on the Hugging Face platform: ValueFX9507/Tifa-DeepsexV2-7b-MGRPO-GGUF-Q4

- Use the following commands to download and install the model:

    # Git LFS is needed so that the large model files are actually downloaded
    git lfs install
    git clone https://huggingface.co/ValueFX9507/Tifa-DeepsexV2-7b-MGRPO-GGUF-Q4
    cd Tifa-DeepsexV2-7b-MGRPO-GGUF-Q4
    # Install Python dependencies, if the repository provides a requirements.txt
    pip install -r requirements.txt

- Loading the model:

- In the Python environment, use the following code to load the model:

    from transformers import AutoTokenizer, AutoModelForCausalLM

    model_id = "ValueFX9507/Tifa-DeepsexV2-7b-MGRPO-GGUF-Q4"
    # For a repository that ships only GGUF weights, transformers also expects
    # a gguf_file argument naming the .gguf file in the repository.
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)
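Because the repository name indicates GGUF weights, a runtime built for that format, such as llama-cpp-python, may be a more direct way to load the file. The sketch below assumes the repository was cloned into a local directory as in the installation step and that the llama-cpp-python package is installed. The helper names find_gguf_file, load_gguf_model, and ask, and the n_ctx value of 4096, are illustrative choices rather than anything specified by the model's authors.

```python
from pathlib import Path


def find_gguf_file(model_dir):
    """Return the first .gguf file (sorted by name) found in model_dir."""
    candidates = sorted(Path(model_dir).glob("*.gguf"))
    if not candidates:
        raise FileNotFoundError(f"No .gguf file found in {model_dir}")
    return candidates[0]


def load_gguf_model(model_dir, n_ctx=4096):
    """Load the GGUF weights with llama-cpp-python (assumed to be installed)."""
    # Imported lazily so find_gguf_file works without the package.
    from llama_cpp import Llama

    gguf_path = find_gguf_file(model_dir)
    # n_ctx is the context window to allocate; 4096 is an arbitrary example.
    return Llama(model_path=str(gguf_path), n_ctx=n_ctx)


def ask(llm, question, max_tokens=200):
    """Send one user message and return the text of the reply."""
    reply = llm.create_chat_completion(
        messages=[{"role": "user", "content": question}],
        max_tokens=max_tokens,
    )
    return reply["choices"][0]["message"]["content"]
```

With the weights in place, a call such as ask(load_gguf_model("Tifa-DeepsexV2-7b-MGRPO-GGUF-Q4"), "Who are you?") would return a single reply.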


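- Generating text:

- Use the following code for text generation:

    input_text = "Once upon a time in a land far, far away..."
    # Tokenize the prompt into PyTorch tensors
    inputs = tokenizer(input_text, return_tensors="pt")
    # Generate a continuation with the default generation settings
    outputs = model.generate(**inputs)
    # Decode the generated token IDs back into text
    generated_text = tokenizer.decode(outputs[0], skip_special_tokens=True)
    print(generated_text)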


- Role-play and dialogue:

- For role-playing and complex conversations, the following sample code can be used:

    def chat_with_model(prompt):
        inputs = tokenizer(prompt, return_tensors="pt")
        # Sample with nucleus (top_p) and top-k filtering; max_length counts
        # the prompt tokens as well as the generated ones
        outputs = model.generate(**inputs, max_length=500, do_sample=True, top_p=0.95, top_k=60)
        response = tokenizer.decode(outputs[0], skip_special_tokens=True)
        return response

    user_input = "Who are you?"
    print(chat_with_model(user_input))
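For role-play across several turns, the model needs to see the character definition and the earlier exchanges, not just the latest message. Qwen 2.5 chat models use a ChatML-style prompt format; the sketch below assumes this fine-tune keeps that format, which the article does not confirm. In transformers, the usual route is tokenizer.apply_chat_template, so the hand-written build_chatml_prompt helper and the example character are purely illustrative of what the model would receive.

```python
def build_chatml_prompt(messages):
    """Render a list of {"role", "content"} dicts as a ChatML prompt string."""
    parts = []
    for message in messages:
        parts.append(
            f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        )
    # Leave the assistant turn open so the model writes the next reply.
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)


# Hypothetical role-play setup: the system message fixes the character,
# and earlier turns are replayed so the model sees the whole conversation.
history = [
    {"role": "system", "content": "You are Mira, a sardonic ship engineer."},
    {"role": "user", "content": "Status report?"},
    {"role": "assistant", "content": "The engine is held together by hope."},
    {"role": "user", "content": "Can we still make the jump?"},
]

prompt = build_chatml_prompt(history)
print(prompt)
```

The resulting string could be passed to chat_with_model. Because that function decodes the full output sequence, the returned text would contain the prompt as well as the new reply, so an application would typically strip the prompt portion before appending the reply to the history.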


- Creative writing:

- The model's creative writing capabilities can be used to generate segments of a novel or screenplay:

    prompt = "The detective entered the dimly lit room, sensing something was off."
    story = chat_with_model(prompt)
    print(story)


- Tuning parameters:

- Depending on the specific needs of the application, generation parameters such as max_length, top_p, and top_k can be adjusted to obtain different generation effects.
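To make the effect of top_k and top_p more concrete, the following is a simplified sketch of how the two filters narrow the set of candidate tokens before one is sampled. It is a plain-Python illustration over a made-up probability table, not the library's actual implementation; the function name filter_top_k_top_p and the example probabilities are assumptions introduced here.

```python
def filter_top_k_top_p(probs, top_k, top_p):
    """Keep the top_k most likely tokens, then the smallest leading group
    whose share of the remaining probability reaches top_p, and renormalize."""
    # Sort tokens from most to least likely and apply the top-k cut.
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:top_k]
    top_k_mass = sum(p for _, p in ranked)

    kept = []
    cumulative = 0.0
    for token, p in ranked:
        kept.append((token, p))
        # Probabilities are rescaled to the tokens that survived top-k.
        cumulative += p / top_k_mass
        if cumulative >= top_p:
            break

    kept_mass = sum(p for _, p in kept)
    return {token: p / kept_mass for token, p in kept}


# Made-up next-token probabilities for illustration.
next_token_probs = {"the": 0.5, "a": 0.2, "his": 0.15, "her": 0.1, "zebra": 0.05}
candidates = filter_top_k_top_p(next_token_probs, top_k=4, top_p=0.8)
print(candidates)
```

Lower values of top_k or top_p leave fewer candidates and make the output more predictable, while higher values allow rarer tokens and more varied text.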


With the above steps, users can quickly get started with the Tifa-DeepsexV2-7b-MGRPO-GGUF-Q4 model and experience its powerful text generation and dialog capabilities.

Tifa-DeepsexV2-7b-MGRPO Download

Supported ways to run the model: the official Android APK, SillyTavern, and Ollama
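For the Ollama route, a locally running Ollama server exposes an HTTP API that can be called from Python. The sketch below assumes the downloaded GGUF file has already been imported into Ollama (for example with a Modelfile and ollama create) under the hypothetical name tifa-deepsex-v2, and that the server listens on its default port. The helper names are illustrative, and the sampling options simply reuse the values from the role-play example.

```python
import json
import urllib.request

# Default endpoint of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_ollama_request(model_name, prompt, top_p=0.95, top_k=60):
    """Build a non-streaming generation request for the Ollama HTTP API."""
    payload = {
        "model": model_name,
        "prompt": prompt,
        "stream": False,  # return one JSON object instead of a stream
        "options": {"top_p": top_p, "top_k": top_k},
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate_with_ollama(model_name, prompt):
    """Send the request and return the generated text."""
    request = build_ollama_request(model_name, prompt)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

Once the server is running, generate_with_ollama("tifa-deepsex-v2", "Who are you?") would return the model's reply as a string; the model name must match whatever name was used when the model was created in Ollama.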

Model download at Quark: https://pan.quark.cn/s/05996845c9f4

© Copyright notice

This article is copyrighted by AI Sharing Circle; please do not reproduce it without permission.
