Tifa-Deepsex-14b-CoT: a large model specializing in role-playing and ultra-long fiction generation

General Introduction

Tifa-Deepsex-14b-CoT is a large model deeply optimized from Deepseek-R1-14B, focusing on role-playing, fiction generation, and Chain of Thought (CoT) reasoning. Through multi-stage training and optimization, it addresses the original model's weak coherence in long-text generation and its limited role-playing ability, which makes it especially suitable for creative scenarios that depend on long-range context. By combining high-quality datasets with incremental pre-training, the model substantially strengthens contextual association, gives fewer refusals, eliminates Chinese-English mixing, and expands its domain-specific vocabulary, improving role-playing and novel generation. It also supports ultra-long 128k contexts for scenarios that require deep dialog and complex writing.

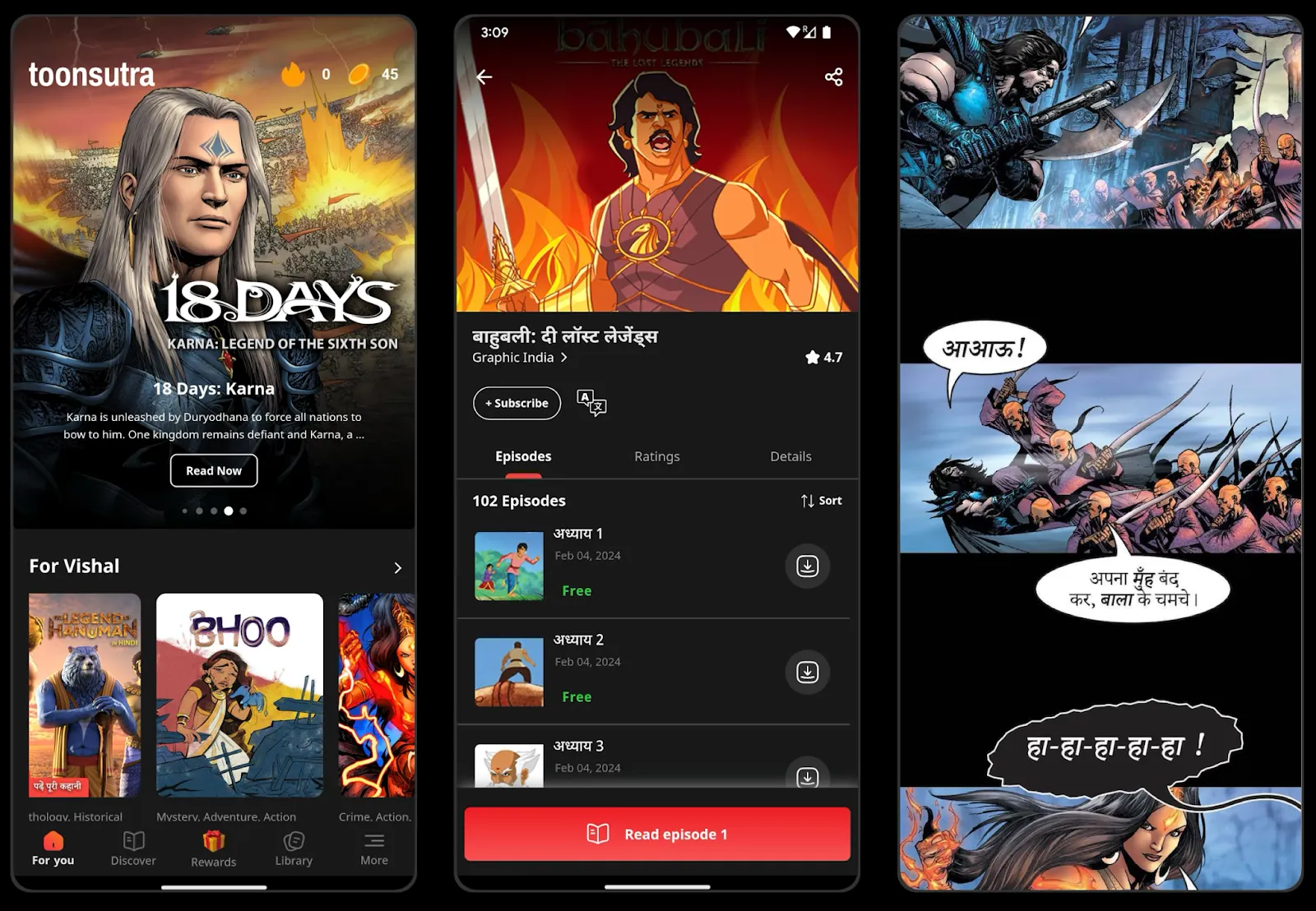

This is a version of Deepseek-R1-14B deeply optimized for long-form fiction and role-playing scenarios, and a simple Android client is available for download. The current release has been upgraded to the Deepsex2 edition.

Function List

- Supports in-depth role-playing dialog, generating responses that match the character's personality and background.

- Generates fiction, producing coherent, long-form stories and plots.

- Provides Chain of Thought (CoT) reasoning for scenarios that require logical deduction and complex problem solving.

- Supports ultra-long 128k contexts to keep long generated text coherent and consistent.

- Refuses fewer requests than the base model, while moderately preserving safety to serve diverse writing needs.

- Offers several quantized versions (e.g., F16, Q8, Q4) to suit different hardware and simplify deployment.

Usage Guide

Installation and Deployment

The Tifa-Deepsex-14b-CoT model is hosted on the Hugging Face platform, and users need to select the appropriate model version (e.g., F16, Q8, Q4) based on their hardware environment and requirements. Below is the detailed installation and deployment process:

1. Download the model

- Visit the Hugging Face model page at https://huggingface.co/ValueFX9507/Tifa-Deepsex-14b-CoT.

- Select the quantization version your hardware supports (e.g., Q4_K_M.gguf) and click the corresponding file to download the model weights.

- If you want to use the demo APK, you can download the official demo program directly (you will need to import character cards manually and select the custom API option).
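Step 1 can also be scripted. The sketch below picks the highest-precision file that plausibly fits a memory budget and downloads it with huggingface_hub's `hf_hub_download`. The bits-per-weight figures, the nominal 14e9 parameter count, the 1.2x overhead factor, and the file naming pattern (modeled on the Q4_K_M file name used in the llama.cpp command of step 3) are all assumptions; check the actual file list on the model page before relying on it.

```python
"""Pick a GGUF quantization that fits a memory budget, then download it.

Assumptions (not from the model card): approximate bits-per-weight values,
a nominal 14e9 parameter count, a 1.2x overhead factor, and the file name
pattern "Tifa-Deepsex-14b-CoT-<QUANT>.gguf".
"""

REPO_ID = "ValueFX9507/Tifa-Deepsex-14b-CoT"
N_PARAMS = 14e9  # nominal parameter count (assumption)
OVERHEAD = 1.2   # rough allowance for KV cache and runtime buffers (assumption)

# Approximate average bits per weight, highest precision first (assumption).
BITS_PER_WEIGHT = {"F16": 16.0, "Q8_0": 8.5, "Q4_K_M": 4.85}


def estimated_gb(quant: str, n_params: float = N_PARAMS) -> float:
    """Rough memory needed to run a quant, in GB (1e9 bytes)."""
    weights_gb = n_params * BITS_PER_WEIGHT[quant] / 8 / 1e9
    return weights_gb * OVERHEAD


def choose_quant(budget_gb: float):
    """Return the highest-precision quant whose estimate fits, else None."""
    for quant in BITS_PER_WEIGHT:  # dicts preserve insertion order
        if estimated_gb(quant) <= budget_gb:
            return quant
    return None


def download(quant: str) -> str:
    """Download the chosen file and return its local path."""
    from huggingface_hub import hf_hub_download  # imported only when needed

    filename = f"Tifa-Deepsex-14b-CoT-{quant}.gguf"  # hypothetical file name
    return hf_hub_download(repo_id=REPO_ID, filename=filename)


if __name__ == "__main__":
    quant = choose_quant(16.0)
    print(quant, round(estimated_gb(quant), 1))
```

The overhead factor is deliberately coarse; a real decision should also account for the context length you plan to use.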

2. Environment setup

- Ensure that the Python environment is installed (Python 3.8 or above is recommended).

- Install the required libraries, such as transformers and huggingface_hub (PyTorch is also needed to run the model through transformers):

    pip install torch transformers huggingface-hub

- If you are using a GGUF-format model, installing llama.cpp or a related library is recommended. llama.cpp can be cloned and compiled with:

    git clone https://github.com/ggerganov/llama.cpp
    cd llama.cpp
    make

  Recent llama.cpp releases have replaced the make build with CMake: run cmake -B build, then cmake --build build --config Release.
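Before loading anything, a short script can confirm the Python side of this setup. The check below is a sketch that only looks at the Python version and whether transformers, huggingface_hub, and torch are importable; it does not verify GPU drivers or a llama.cpp build.

```python
"""Quick check that the Python side of the setup is in place (a sketch)."""
import importlib.util
import sys

# Import names to look for; "huggingface_hub" is the import name of the
# huggingface-hub distribution, and torch is what transformers runs on.
REQUIRED = ("transformers", "huggingface_hub", "torch")


def check_environment(min_version=(3, 8)):
    """Return a dict mapping each check to True/False."""
    report = {"python>=%d.%d" % min_version: sys.version_info >= min_version}
    for name in REQUIRED:
        # find_spec returns None when the package is not installed
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for check, ok in check_environment().items():
        print(f"{'OK     ' if ok else 'MISSING'} {check}")
```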

3. Model loading

- Load the model with transformers:

    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_name = "ValueFX9507/Tifa-Deepsex-14b-CoT"
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    # torch_dtype="auto" keeps the checkpoint's stored precision instead of upcasting to FP32
    model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype="auto")

- If you use the GGUF format, run the model with llama.cpp:

    ./main -m Tifa-Deepsex-14b-CoT-Q4_K_M.gguf --color -c 4096 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "Your prompt here"

  Here -c 4096 sets the context length. It can be raised as needed, up to 131072 tokens (128k), but memory use grows with the context, so keep your hardware limits in mind. In recent llama.cpp releases the main binary has been renamed llama-cli.
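For the transformers route, a generation call might look like the sketch below. It mirrors the sampling settings of the llama.cpp command (temperature 0.7, repetition penalty 1.1). The helper name `sampling_kwargs` and the 1024-token cap are illustrative choices, and the sketch assumes the repository provides transformers-format weights with a chat template.

```python
"""Generate with transformers using settings that mirror the llama.cpp
command (--temp 0.7, --repeat_penalty 1.1). A sketch under the assumptions
stated above, not the model author's reference code."""


def sampling_kwargs(temp=0.7, repeat_penalty=1.1, max_new_tokens=1024):
    """Map llama.cpp-style settings to transformers generate() keyword arguments."""
    kwargs = {
        "repetition_penalty": repeat_penalty,  # counterpart of --repeat_penalty
        # llama.cpp's -n -1 generates until the model stops; generate() needs a cap
        "max_new_tokens": max_new_tokens,
    }
    if temp > 0:
        # Temperature only has an effect when sampling is enabled
        kwargs.update(do_sample=True, temperature=temp)
    return kwargs


def generate(prompt, model_name="ValueFX9507/Tifa-Deepsex-14b-CoT"):
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype="auto")
    messages = [{"role": "user", "content": prompt}]
    inputs = tokenizer.apply_chat_template(
        messages, add_generation_prompt=True, return_tensors="pt", return_dict=True
    ).to(model.device)
    output = model.generate(**inputs, **sampling_kwargs())
    # Decode only the newly generated tokens, not the echoed prompt
    prompt_len = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output[0][prompt_len:], skip_special_tokens=True)
```

Keeping the settings in one helper makes it easy to try the temperature and repetition-penalty adjustments described in the role-playing workflow without touching the loading code.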

4. Configuration and optimization

- Make sure the conversation history sent back to the model has its think tags (<think>...</think>) stripped, so that earlier reasoning does not affect new output. In JavaScript, for example:

    const content = msg.content.replace(/<think>[\s\S]*?<\/think>/gi, '');

- If you use a front-end interface, modify its code manually so it processes the context this way; refer to the official sample template.
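A Python equivalent of the think-tag filter, applied to a whole chat history, might look like this. It assumes OpenAI-style {"role", "content"} message dicts and, as a design choice, cleans only assistant turns so that user text passes through untouched.

```python
import re

# Same pattern as the JavaScript snippet: non-greedy, spans newlines, case-insensitive.
THINK_RE = re.compile(r"<think>[\s\S]*?</think>", re.IGNORECASE)


def strip_think(history):
    """Return a copy of a chat history with <think>...</think> blocks removed
    from assistant turns, so earlier reasoning is not fed back as context."""
    cleaned = []
    for msg in history:
        content = msg["content"]
        if msg["role"] == "assistant":
            content = THINK_RE.sub("", content).strip()
        cleaned.append({**msg, "content": content})  # new dict; input is not mutated
    return cleaned
```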

Feature Workflows

Role-Playing

- Enter the character setup: specify the character's background, personality, dialog scenes, etc. in the prompt. Example:

    You are a brave adventurer named Tifa, exploring a mysterious ancient city. Describe your adventures and hold conversations with the NPCs you meet.

- Generate responses: the model produces dialog or narration consistent with the character's personality and background. The user can keep entering input, and the model maintains contextual coherence.

- Adjusting parameters: Optimize the output by adjusting temperature (to control the randomness of the generated text) and repeat_penalty (to control repeated content).
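Putting the character setup and the running dialog together, a request can be assembled as below. This is a sketch: the message format, the `max_turns` cut-off, and the function name are assumptions. DeepSeek's own usage notes for R1 advise putting instructions in the user prompt rather than a system prompt, so the `as_system` flag lets you try either placement.

```python
def build_messages(character_setup, history, user_input, max_turns=20, as_system=True):
    """Assemble the messages for the next role-play request.

    history is a list of {"role": ..., "content": ...} dicts; only the last
    max_turns entries are kept so the prompt stays bounded.
    """
    recent = list(history[-max_turns:]) if max_turns > 0 else []
    if as_system:
        # Character setup as a system message ahead of the dialog
        return (
            [{"role": "system", "content": character_setup}]
            + recent
            + [{"role": "user", "content": user_input}]
        )
    # Otherwise fold the setup into the newest user turn
    return recent + [{"role": "user", "content": f"{character_setup}\n\n{user_input}"}]
```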

Novel Generation

- Setting the story's context: provide the beginning or outline of the story, for example:

    In a distant kingdom, a young mage is trying to unlock the secrets of time. Please continue this story.

- Generate the story: the model writes a coherent long story from the prompt, with multi-paragraph output.

- Long context support: thanks to 128k context support, users can input longer story context and the model still maintains plot consistency.
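Long stories are usually produced over several calls. The loop below is a minimal sketch of that pattern: `generate` is a hypothetical callable (prompt in, continuation out) standing in for any backend, and the character-based budget is a crude stand-in for counting tokens.

```python
def write_long_story(opening, generate, rounds=5, max_context_chars=8000):
    """Extend a story chunk by chunk, feeding back only its most recent part.

    generate: hypothetical callable taking a prompt string and returning text.
    """
    story = opening
    for _ in range(rounds):
        # Keep the tail of the story so the prompt stays within budget
        context = story[-max_context_chars:]
        chunk = generate(f"{context}\n\nPlease continue this story.")
        if not chunk.strip():
            break  # stop early if the model returns nothing
        story += "\n\n" + chunk.strip()
    return story
```

With a 128k context the budget can be set far higher than the default shown here; the trimming only matters once the story outgrows what your hardware can hold.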

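Chain of Thought (CoT) Reasoning

- Enter a complex problem, for example:

    If a city produces 100 tons of garbage per day, of which 60% is recyclable and 40% is not, but the recycling facility can only process 30 tons of recyclable garbage per day, what should be done with the remaining recyclable garbage?

- Generate a reasoning process: the model analyzes the problem step by step, gives a logical, clear answer, and can sustain long chains of reasoning.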
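To separate the model's reasoning from its final answer, the output can be split at the closing think tag. This sketch assumes R1-style <think>...</think> output (the same tags the configuration step strips from the context) and treats the opening tag as optional, since some chat templates place it in the prompt rather than in the generated text.

```python
def split_reasoning(text):
    """Split model output into (reasoning, answer).

    Everything before the last </think> is treated as reasoning; the opening
    <think> tag may or may not appear in the generated text.
    """
    marker = "</think>"
    idx = text.rfind(marker)
    if idx == -1:
        return "", text.strip()  # no reasoning block found
    reasoning = text[:idx].replace("<think>", "", 1).strip()
    answer = text[idx + len(marker):].strip()
    return reasoning, answer
```

For the garbage example, the reasoning would typically work through 60 tons recyclable, 30 tons processed, and 30 tons left over before the answer proposes what to do with them.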

Caveats

- Hardware requirements: the model needs a large amount of memory to run. A GPU with at least 16 GB of VRAM is recommended, or a high-performance CPU with a comparable amount of system RAM.

- Safety and compliance: the model retains some safety settings from training, and users must ensure that their usage complies with applicable laws and regulations.

- Context management: when using very long contexts, enter prompts in segments to avoid exceeding hardware limits.
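Why long contexts strain hardware can be estimated from the KV cache, whose size grows linearly with context length: 2 (keys and values) times layers times KV heads times head dimension times tokens times bytes per element. The layer and head counts below are assumptions based on the Qwen2.5-14B architecture that DeepSeek-R1-Distill-Qwen-14B uses; verify them against the model's config.json.

```python
"""Rough KV-cache size for a given context length (a sketch).

Architecture numbers are assumptions (48 layers, 8 KV heads, head dim 128),
not values confirmed for this fine-tune.
"""

N_LAYERS = 48
N_KV_HEADS = 8
HEAD_DIM = 128


def kv_cache_bytes(n_ctx, bytes_per_elem=2):
    """Keys + values for every layer and token; bytes_per_elem=2 for FP16."""
    return 2 * N_LAYERS * N_KV_HEADS * HEAD_DIM * n_ctx * bytes_per_elem


if __name__ == "__main__":
    for n_ctx in (4096, 32768, 131072):
        print(f"{n_ctx:>6} tokens: {kv_cache_bytes(n_ctx) / 2**30:.2f} GiB")
```

Under these assumptions the FP16 cache is 0.75 GiB at 4096 tokens but 24 GiB at the full 131072 tokens, on top of the model weights, which is why segmenting very long inputs helps.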

With these steps, users can easily get started with the Tifa-Deepsex-14b-CoT model, whether for role-playing, novel creation, or complex reasoning, and get high-quality generated results.

Tifa-Deepsex-14b-CoT Version Differences

Tifa-Deepsex-14b-CoT

- A validation model used to test the effect of the RL reward algorithm on role-playing data. This initial version produces flexible but poorly controlled output and is intended for research use only.

Tifa-Deepsex-14b-CoT-Chat

- Trained on standard data using proven RL strategies plus additional anti-repetition reinforcement learning; suitable for everyday use. Output quality is normal, with divergent thinking in a few cases.

- Incremental training on 0.4T of novel content, plus 100K SFT samples generated by TifaMax, 10K SFT samples generated by DeepseekR1, and 2K high-quality manually written samples.

- 30K DPO reinforcement-learning samples generated by TifaMax to prevent repetition, strengthen contextual association, and improve political safety.

Tifa-Deepsex-14b-CoT-Crazy

- Uses extensive RL strategies, mainly on data distilled from the full 671B R1. Output is highly divergent, inheriting both the strengths and the risks of R1, with good literary performance.

- Incremental training on 0.4T of novel content, plus 40K SFT samples generated by TifaMax, 60K SFT samples generated by DeepseekR1, and 2K high-quality manually written samples.

- 30K DPO reinforcement-learning samples generated by TifaMax to prevent repetition, strengthen contextual association, and improve political safety, plus 10K PPO samples generated by TifaMax and 10K PPO samples generated by DeepseekR1.

© Copyright notice

All rights to this article are reserved by AI Sharing Circle; please do not reproduce it without permission.
