Text generation web UI: a Gradio-based chat interface for large language models with support for multiple backends

General Introduction

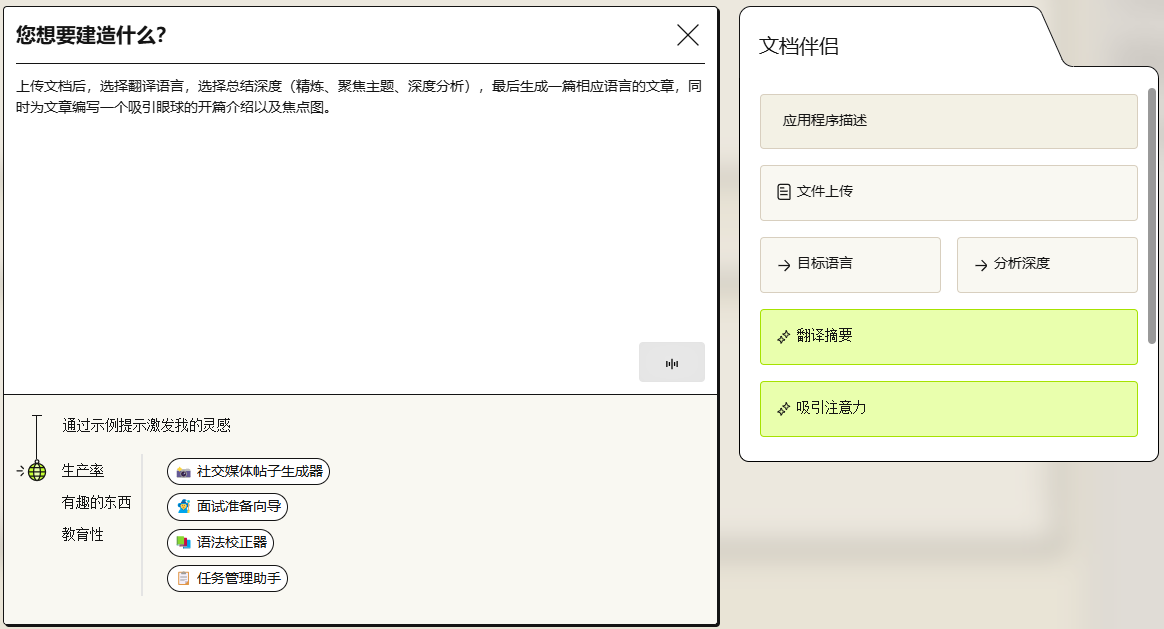

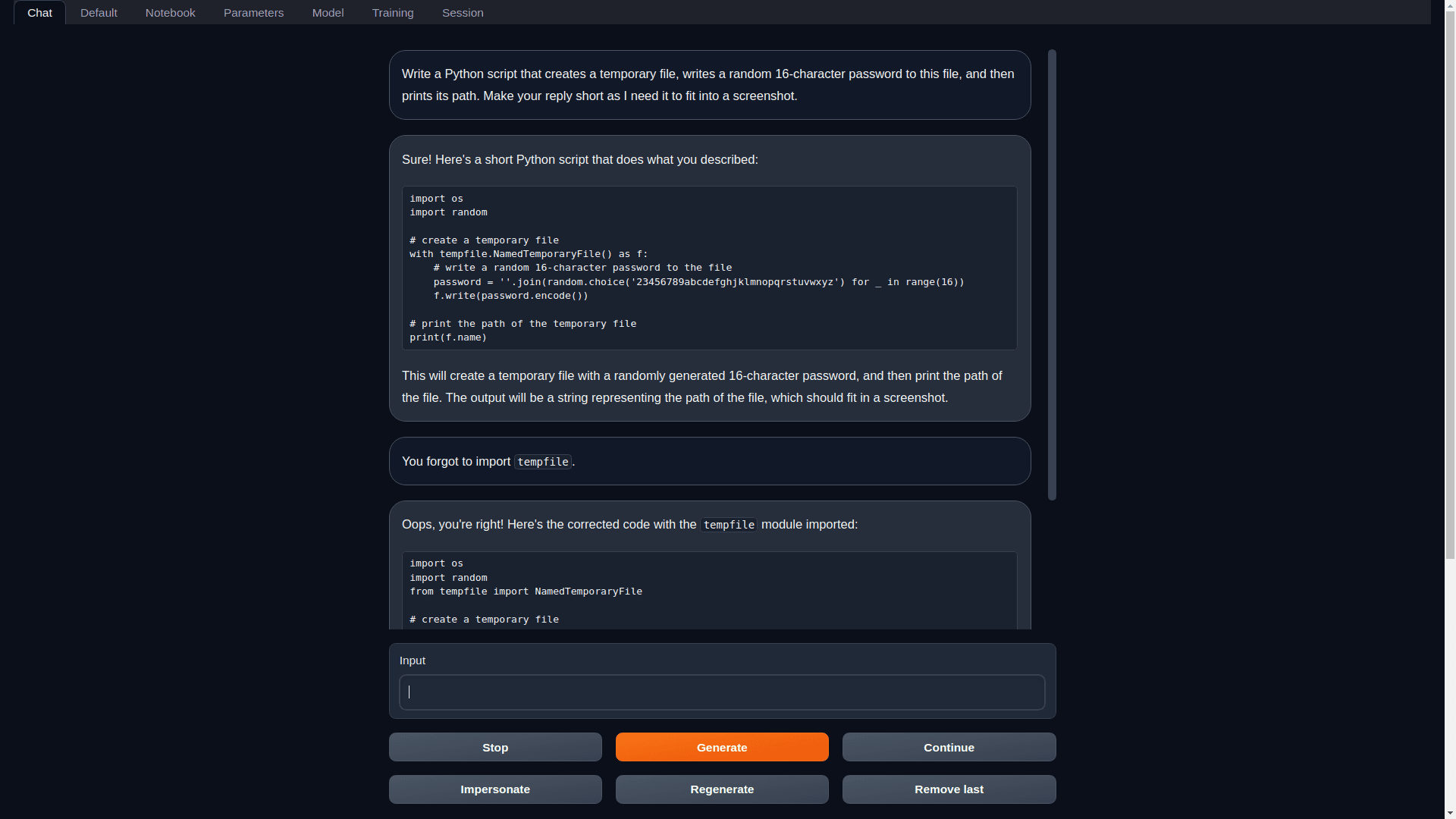

Text generation web UI is a Gradio-based web interface for large language models (LLMs). It supports several text generation backends, including Transformers, llama.cpp and ExLlamaV2. After a simple installation, users can quickly deploy it and start using it for text generation tasks.

Function List

- Multi-backend support: Supports Transformers, llama.cpp, ExLlamaV2 and many other text generation backends.

- Automatic prompt formatting: Uses Jinja2 templates to format prompts automatically.

- Multiple chat modes: Offers instruct, chat-instruct and chat modes.

- Chat History: Quickly switch between different conversations.

- Free text generation: Generate free-form text in the Default and Notebook tabs, independent of chat turns.

- Multiple sampling parameters: A wide range of sampling parameters and generation options give fine-grained control over text generation.

- Model switching: Switch between models from the interface without restarting.

- LoRA fine-tuning tool: Provides a simple built-in tool for LoRA fine-tuning.

- Extension support: Supports a wide range of built-in and user-contributed extensions.

How to Use

Installation process

- Clone or download the repository:

git clone https://github.com/oobabooga/text-generation-webui

cd text-generation-webui

- Run the start script that matches your operating system:

  - Linux: ./start_linux.sh
  - Windows: start_windows.bat
  - macOS: ./start_macos.sh
  - WSL: start_wsl.bat


- Select your GPU vendor: Follow the prompts to choose your GPU vendor.

- Open the interface: When installation finishes, open http://localhost:7860 in your browser.

Guidelines for use

- Launch the interface: After running the appropriate start script, open http://localhost:7860 in your browser.

- Select a model: Choose the text generation model you want to use in the interface.

- Enter a prompt: Type your prompt in the input box and choose the generation parameters.

- Generate text: Click the Generate button to see the generated text.

- Switching Mode: Switch between instruct, chat-instruct and chat modes as needed.

- View History: Use the "History" menu to quickly switch between conversations.

- Extensions: Install and enable the extensions you need for additional functionality.

Detailed Function Operation

- Automatic prompt formatting: Entered prompts are automatically formatted with a Jinja2 template so that they match the prompt format the model expects.

- Multiple sampling parameters: Users can adjust parameters such as temperature, the maximum number of new tokens and the repetition penalty to control the style and length of the generated text.

- Free text generation: In the Default and Notebook tabs, users can generate free-form text without being tied to chat turns, which suits long-form generation tasks.

- LoRA fine-tuning: With the built-in LoRA training tool, users can fine-tune a model to improve its output on specific tasks.

- Extension support: By installing extensions, users can add features such as speech synthesis and image generation.
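To make automatic prompt formatting concrete, here is a minimal sketch of how a Jinja2 chat template turns a list of messages into a single prompt string. The ChatML-style template below is a hypothetical example chosen for illustration; each model defines its own template, and the helper name `format_prompt` is also an assumption, not part of the web UI.

```python
from jinja2 import Template

# Hypothetical ChatML-style template; real models ship their own templates.
CHAT_TEMPLATE = (
    "{% for m in messages %}"
    "<|im_start|>{{ m['role'] }}\n{{ m['content'] }}<|im_end|>\n"
    "{% endfor %}"
    "{% if add_generation_prompt %}<|im_start|>assistant\n{% endif %}"
)


def format_prompt(messages, add_generation_prompt=True):
    """Render a list of {"role", "content"} dicts into one prompt string."""
    return Template(CHAT_TEMPLATE).render(
        messages=messages, add_generation_prompt=add_generation_prompt
    )


if __name__ == "__main__":
    chat = [
        {"role": "system", "content": "You are helpful."},
        {"role": "user", "content": "Hi"},
    ]
    print(format_prompt(chat))
```

The trailing `<|im_start|>assistant` line is what cues the model to write the next reply; swapping in a different template changes the markup without touching the message list.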
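The effect of two of the sampling parameters can be sketched in plain Python. This is a simplified illustration of the general techniques, not the web UI's own code: temperature divides the logits before the softmax (lower values sharpen the distribution), and the repetition penalty shown here is the common CTRL-style rule that divides positive logits and multiplies negative ones for tokens already generated. The function names and the toy logits are assumptions for illustration.

```python
import math


def apply_repetition_penalty(logits, previous_ids, penalty=1.15):
    """CTRL-style penalty: make tokens that already appeared less likely."""
    out = list(logits)
    for i in set(previous_ids):
        # Dividing a positive logit or multiplying a negative one lowers it.
        out[i] = out[i] / penalty if out[i] > 0 else out[i] * penalty
    return out


def softmax_with_temperature(logits, temperature=0.7):
    """Convert logits to probabilities; lower temperature = sharper distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.5]  # toy scores for a 3-token vocabulary
    print(softmax_with_temperature(logits, 0.5))
    print(softmax_with_temperature(logits, 1.5))
    print(softmax_with_temperature(apply_repetition_penalty(logits, [0]), 1.0))
```

The maximum number of new tokens plays a simpler role: it only caps how many times this pick-the-next-token step is repeated.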
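LoRA keeps the original weights frozen and trains a low-rank update instead. In the standard formulation, a weight matrix of shape d x k receives an update (alpha / r) * B @ A, where B is d x r, A is r x k and the rank r is small. The sketch below, written with plain lists and hypothetical helper names, computes that update and compares trainable parameter counts; it illustrates the general technique, not the web UI's training code.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_delta(B, A, alpha, r):
    """Low-rank weight update: delta_W = (alpha / r) * B @ A."""
    scale = alpha / r
    return [[scale * v for v in row] for row in matmul(B, A)]


def param_counts(d, k, r):
    """Trainable parameters: full d x k update vs. LoRA factors B (d x r) and A (r x k)."""
    return d * k, r * (d + k)


if __name__ == "__main__":
    # Toy rank-1 update for a 2 x 2 weight matrix.
    print(lora_delta([[1], [2]], [[3, 4]], alpha=2, r=1))
    full, lora = param_counts(4096, 4096, 8)
    print(full, lora, f"{lora / full:.2%}")
```

For a 4096 x 4096 projection with rank 8, LoRA trains 65,536 parameters instead of 16,777,216 (about 0.4%), which is why it fits on consumer GPUs.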
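As a rough picture of what an extension looks like, here is a minimal sketch of a hypothetical "word_filter" extension that masks chosen words in the model's reply. It assumes the project's convention of a `script.py` file under `extensions/<name>/` with a module-level `params` dict and an `output_modifier(string, state, is_chat=False)` hook; treat these names and the signature as assumptions and check the extension documentation for your version.

```python
# extensions/word_filter/script.py  (hypothetical extension)
import re

# Module-level settings dict (assumed convention for extensions).
params = {
    "display_name": "Word Filter",
    "banned_words": ["darn", "heck"],
}


def output_modifier(string, state, is_chat=False):
    """Assumed hook: mask banned words in the model's reply before display."""
    for word in params["banned_words"]:
        # Match whole words only, ignoring case.
        pattern = re.compile(r"\b" + re.escape(word) + r"\b", re.IGNORECASE)
        string = pattern.sub("*" * len(word), string)
    return string


if __name__ == "__main__":
    print(output_modifier("Well, Darn it!", state={}))
```

Once placed in its folder, such an extension would typically be enabled with the --extensions word_filter launch flag or from the interface's extension settings.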

© Copyright notes

Article copyright belongs to AI Sharing Circle. All rights reserved; please do not reproduce without permission.
