Tencent Cloud LKE (Large Model Knowledge Engine): A Stable Third-Party DeepSeek-R1 with Web Search

General Introduction

Large Model Knowledge Engine (LKE for short) is an intelligent application building platform for enterprise users launched by Tencent Cloud. It combines large language model technology with proprietary enterprise data to help users quickly build knowledge Q&A, RAG (Retrieval Augmented Generation) applications, agents, workflows, and more. LKE is designed to promote the use of large models in enterprise scenarios and applies to a variety of industries such as education, finance, and e-commerce. Whether the goal is to improve customer service efficiency or to optimize internal knowledge management, LKE provides solutions through natural language conversations and low-code configuration. Currently, the platform offers limited-time free calls to the DeepSeek model API, which lowers the barrier to enterprise use and lets more users try it.

Because LKE is a tool for quickly building professional knowledge bases, the large models it provides are "pure" and stable enough for daily use. A trial portal is available, and you can also create an intelligent knowledge base there at low cost.

Complete response rate + truncation rate + no response rate = 100%

- Complete response rate: The number of questions for which the model gives a complete response (no truncation and no missing response), divided by the total number of questions. Whether the answer is correct is not considered.

- Truncation rate: The number of questions for which the model was cut off mid-response and did not give a complete answer, divided by the total number of questions.

- No response rate: The number of questions for which the model gives no answer for special reasons, such as no response or a request error, divided by the total number of questions.

- Accuracy: Among the questions for which the model gives a complete response, the proportion of answers that agree with the reference answer. Only the final answer is checked, not the solution process.

- Reasoning time (seconds/question): Among the questions for which the model gives a complete response, the average time the model takes per answer.
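The definitions above can be turned into a small scoring routine. The following is a minimal sketch, assuming each test question is recorded as a hypothetical `(status, is_correct, seconds)` tuple; the record layout and the function name `summarize` are illustrative and are not part of LKE.

```python
from collections import Counter

def summarize(results):
    """Compute the metrics defined above.

    Each record is a hypothetical (status, is_correct, seconds) tuple,
    where status is "complete", "truncated", or "no_response".
    """
    if not results:
        raise ValueError("results must not be empty")
    total = len(results)
    counts = Counter(status for status, _, _ in results)
    complete = [r for r in results if r[0] == "complete"]
    summary = {
        "complete_rate": counts["complete"] / total,
        "truncation_rate": counts["truncated"] / total,
        "no_response_rate": counts["no_response"] / total,
    }
    # Accuracy and timing only consider questions with a complete response.
    n = len(complete)
    summary["accuracy"] = sum(1 for r in complete if r[1]) / n if n else 0.0
    summary["avg_seconds"] = sum(r[2] for r in complete) / n if n else 0.0
    return summary

sample = [
    ("complete", True, 40.0),
    ("complete", False, 60.0),
    ("truncated", False, 0.0),
    ("no_response", False, 0.0),
]
summary = summarize(sample)
print(summary)
```

Because every question falls into exactly one of the three statuses, the three rates always sum to 1, which matches the identity stated above.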

Test Questions

Question: Two people, A and B, set out at the same time from place A to place B. A rides a battery-powered bicycle and B rides a regular bicycle, and A travels 1.5 times as fast as B. After B has ridden 10 kilometers, the bicycle breaks down and B starts to repair it immediately. The repair takes 1/9 of the time B would need to ride the whole distance at the original speed, but after the repair B's speed increases by 100%. In the end, A and B arrive at place B at the same time. What is the distance between places A and B?

Reference answer: 90 kilometers.
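The reference answer can be checked with exact arithmetic. This is a minimal sketch under two stated assumptions: the repair takes 1/9 of the time B would need for the whole distance at the original speed, and B's speed doubles (a 100% increase) after the repair. B's speed cancels out, so it is set to 1.

```python
from fractions import Fraction

def b_minus_a_time(distance, v=Fraction(1)):
    """Travel time of B minus travel time of A for a total distance in km."""
    a_time = distance / (Fraction(3, 2) * v)   # A rides at 1.5 times B's speed
    ride_before = Fraction(10) / v             # first 10 km at the original speed
    repair = Fraction(1, 9) * distance / v     # assumed: 1/9 of B's full-trip time
    ride_after = (distance - 10) / (2 * v)     # assumed: speed doubles after repair
    return ride_before + repair + ride_after - a_time

# The difference is linear in distance, so two sample points locate its root.
f0 = b_minus_a_time(Fraction(0))
f1 = b_minus_a_time(Fraction(1))
distance = -f0 / (f1 - f0)
print(distance)  # 90
```

Under these assumptions both riders need the same time only when the distance is 90 kilometers, which agrees with the reference answer.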

Question: Ming starts his homework between 4:00 and 5:00 p.m. at the moment the minute hand and hour hand of the clock coincide, and stops when the two hands are at a right angle for the first time. He then starts reading comics and stops when the two hands are in a straight line for the first time. How many minutes did Ming spend on his homework, and how many on reading comics? (Round the result to one decimal place.)

Reference answer: about 16.4 minutes for writing homework and 16.4 minutes for reading comics.
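A short check of this answer, as a sketch: the minute hand moves 6 degrees per minute and the hour hand 0.5 degrees per minute, so the minute hand gains 5.5 degrees per minute. It is assumed here that "in a straight line" means the hands point in opposite directions (180 degrees apart).

```python
from fractions import Fraction

MINUTE_HAND = Fraction(6)            # degrees per minute
HOUR_HAND = Fraction(1, 2)           # degrees per minute
RELATIVE = MINUTE_HAND - HOUR_HAND   # minute hand gains 5.5 degrees per minute

# At 4:00 the hour hand is 120 degrees ahead; the hands coincide once the gap closes.
start = Fraction(120) / RELATIVE         # minutes past 4:00 when homework starts
homework = Fraction(90) / RELATIVE       # coincidence to first right angle
comics = Fraction(180 - 90) / RELATIVE   # right angle to straight line (assumed 180 degrees)

print(round(float(homework), 1), round(float(comics), 1))
```

Both intervals equal 180/11 minutes, which rounds to 16.4 minutes each.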

Function List

- Knowledge Q&A system: Rapidly build intelligent Q&A services based on proprietary enterprise data, where users can input questions and get accurate answers.

- RAG application support: Generate more accurate responses by combining external knowledge bases through retrieval-augmented generation.

- Agent building: Create intelligent assistants that automate tasks, such as auto-replying or handling simple processes.

- Workflow optimization: Design and automate business processes to improve team collaboration.

- Document parsing and previewing: Extract key information after uploading documents, support preview and content analysis.

- Multi-turn query rewriting: Optimize user queries based on conversation history to improve the multi-turn interaction experience.

- Text vectorization: Convert text into numeric vectors for retrieval, recommendation, and other scenarios.

- Low-code configuration: Provides a visual interface that allows operators to manage the knowledge base without programming.
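To illustrate how text vectorization and RAG fit together, here is a minimal sketch of vector retrieval by cosine similarity. The three-dimensional vectors are hand-made stand-ins for real embeddings, and the helper names `cosine_similarity` and `top_k` are hypothetical; none of this is LKE's actual implementation or API.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(query_vec, indexed_chunks, k=2):
    """Return the k chunk texts whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in indexed_chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]

# Hand-made vectors stand in for embeddings returned by a vectorization service.
chunks = [
    ("Refunds are accepted within 7 days.", [0.9, 0.1, 0.0]),
    ("Our office is open on weekdays.", [0.1, 0.9, 0.1]),
    ("Shipping takes 3 to 5 days.", [0.2, 0.1, 0.9]),
]
query_vec = [0.8, 0.2, 0.1]  # pretend embedding of "How do refunds work?"

context = top_k(query_vec, chunks, k=1)
prompt = (
    "Answer using this context:\n"
    + "\n".join(context)
    + "\nQuestion: How do refunds work?"
)
print(prompt)
```

The retrieved chunk is placed in the prompt so that the model answers from the knowledge base rather than from memory alone, which is the basic idea behind RAG.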

Usage Guide

1. Registration and login

- Open the Tencent Cloud website (cloud.tencent.com), click on the "Register" button in the upper right corner, and fill in your email or cell phone number to complete the account creation.

- After successful registration, return to the LKE page, click "Login" and enter your account password to access the console.

- If you already have a Tencent Cloud account, you can log in directly.

2. Accessing the LKE console

- After logging in, search for "Large Model Knowledge Engine" in the Tencent Cloud console or visit the LKE website directly.

- Click the "Experience Now" button to enter the LKE management interface. On first use you may need to authorize LKE; simply agree to the Terms of Service.

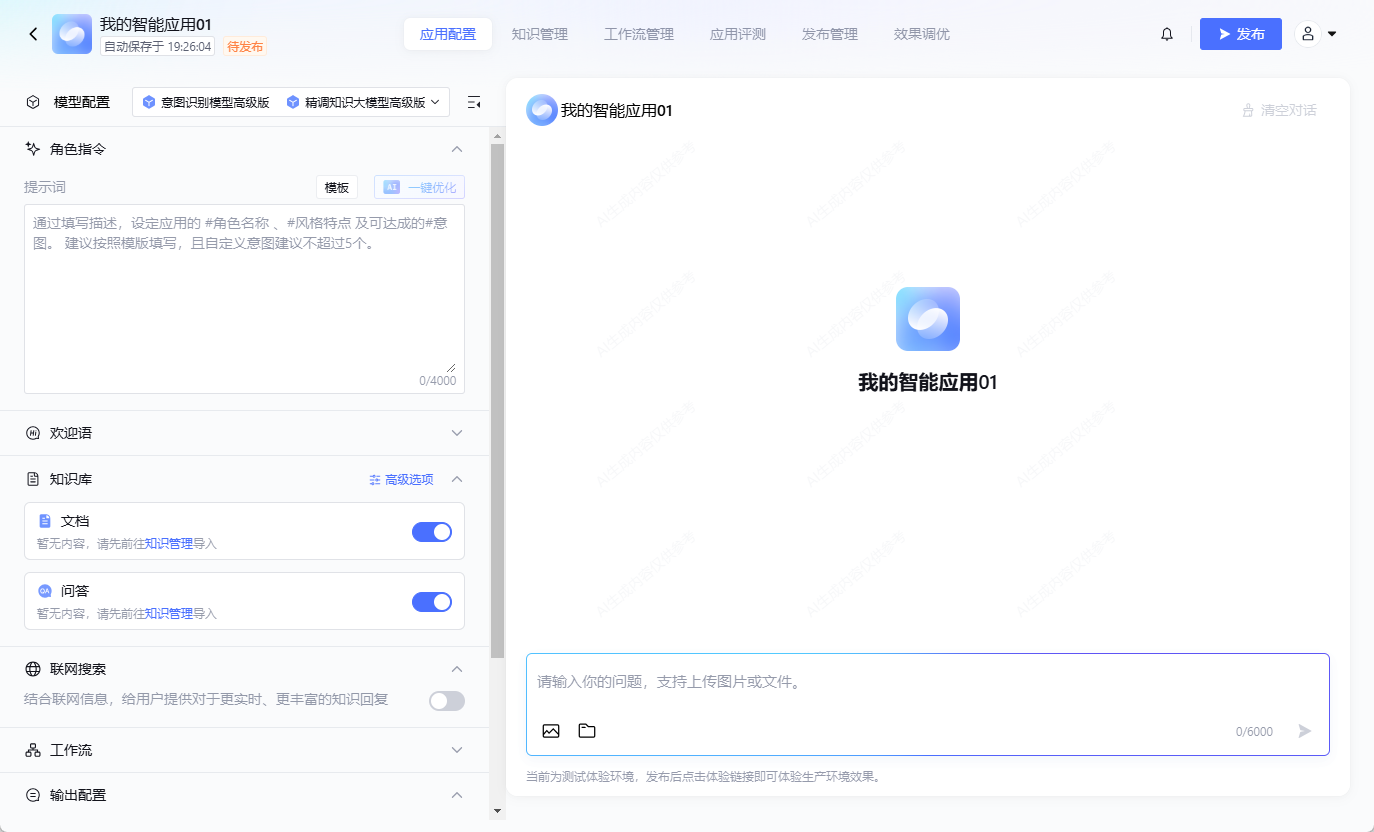

3. Creating the first knowledge application

- Step 1: New Application

- From the LKE homepage, click on the "Create Application" button.

- Enter the application name (e.g. "Customer Service Assistant"), select the application type (Q&A, Agent, etc.) and click "OK".

- Step 2: Uploading knowledge data

- Click on "Knowledge Base Management" within the application and select "Upload Files".

- PDF, Word, TXT, and other formats are supported. Click "Confirm Upload" and the system will automatically parse the document content.

- After uploading, you can check the parsing results in the "Preview" function to make sure the content is accurate.

- Step 3: Configure the Q&A logic

- Go to the "Q&A Configuration" module to set up FAQs and answer templates.

- Enable the RAG function by checking "Retrieval Augmented Generation"; the system will then optimize answers based on the knowledge base.

- Step 4: Testing and Adjustment

- Enter a question in the "Test" area, such as "What is the company's policy?" to see the system's response.

- Adjust the knowledge base content or Q&A logic based on the test results until you are satisfied.

4. Detailed walkthrough of featured functions

- Document parsing and previewing

- In "Knowledge Base Management", click on the uploaded file and select "Get Preview".

- The system displays the key content of the document, such as headings and paragraph summaries, and the user can manually adjust the parsing range.

- If you need more detailed parsing, you can contact Tencent Cloud customer service to enable the "Document Parsing Atomic Capability".

- Multi-turn query rewriting

- Enable the "QueryRewrite" feature, then enter a vague question such as "What about it?" in a multi-turn dialog.

- The system rewrites the question using the preceding context (e.g., "company policy") as "What is the company policy?" and generates the full answer.

- Usage scenarios: intelligent customer service, conversational search.

- Text vectorization (GetEmbedding)

- On the Atomic Capabilities screen, select "Get Vector".

- Enter a piece of text, such as "Product Description", and click "Generate"; the system returns a vector of numeric values.

- The vectors can be used for knowledge retrieval or recommendation systems, but this requires calling the API (see the Tencent Cloud official website for detailed documentation).

- Agents

- In "Agent Management", click "New Agent".

- Set a task goal, such as "auto-reply", and upload the relevant templates.

- Test how the agent performs, adjust the details of its instructions, then save and go live.
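The multi-turn query rewriting described above can be pictured as building an instruction for a model from the conversation history. The following is only an illustrative sketch: the function `build_rewrite_prompt`, the prompt wording, and the `(role, text)` history format are all assumptions, not how LKE's QueryRewrite feature is implemented.

```python
def build_rewrite_prompt(history, question):
    """Build an instruction asking a model to rewrite `question` as a standalone query.

    `history` is a hypothetical list of (role, text) pairs from earlier turns.
    """
    parts = [
        "Rewrite the final user question so that it is understandable on its own.",
        "Conversation so far:",
    ]
    parts += [f"{role}: {text}" for role, text in history]
    parts += [f"Final question: {question}", "Standalone question:"]
    return "\n".join(parts)

history = [
    ("user", "Tell me about the company policy on remote work."),
    ("assistant", "Employees may work remotely two days a week."),
]
prompt = build_rewrite_prompt(history, "What about it?")
print(prompt)
```

The resulting text would be sent to a language model, whose reply (for example a fully specified question about the company policy) then replaces the vague follow-up before retrieval.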

5. Recommendations and precautions for use

- Data preparation: Make sure documents are clear before uploading; avoid scanned or confusingly formatted files.

- Test and verify: Run multiple rounds of testing before going live to ensure answer accuracy.

- Rights management: Set access rights in the Tencent Cloud console to avoid data leakage.

- Free calls: Use the free quota of the DeepSeek model API to try the core functionality first.

With the above steps, users can quickly get started with LKE. Whether you are a small or medium-sized enterprise or a large organization, LKE's low-code operation and powerful functions can meet diversified needs. Regular updating of the knowledge base is recommended to keep the Q&A current.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
