TANGO: a tool for generating full-body digital human videos with gestures driven by speech

General Introduction

TANGO (Co-Speech Gesture Video Reenactment with Hierarchical Audio-Motion Embedding and Diffusion Interpolation) is an open-source framework for co-speech gesture video generation, jointly developed by the University of Tokyo and CyberAgent AI Labs. Given input speech, it uses a hierarchical audio-motion embedding space and diffusion interpolation to automatically generate natural, smooth character gesture videos that are synchronized with the audio. TANGO achieves high-quality gesture generation through a motion graph retrieval method: it first retrieves the reference video clip that best matches the target speech in an implicit hierarchical audio-motion embedding space, and then uses a diffusion model to interpolate the motion between the retrieved clips. The project not only advances research on AI-driven human-computer interaction but also provides important technical support for applications such as virtual streamers and digital humans.
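The retrieval step can be illustrated with a minimal sketch: given an embedding of the target audio and embeddings of candidate reference clips in a shared space, pick the clip with the highest cosine similarity. This is a generic, flat nearest-neighbour search, not TANGO's actual (hierarchical) implementation; the clip names and toy vectors are hypothetical, and real embeddings would come from TANGO's learned audio and motion encoders.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_best_clip(
    audio_embedding: List[float],
    clip_embeddings: Dict[str, List[float]],
) -> Tuple[str, float]:
    """Return (clip_id, score) of the reference clip closest to the audio.

    Hypothetical helper: assumes audio and motion embeddings already live in
    one shared space, which is what the joint embedding is trained to provide.
    """
    best_id, best_score = "", -math.inf
    for clip_id, emb in clip_embeddings.items():
        score = cosine_similarity(audio_embedding, emb)
        if score > best_score:
            best_id, best_score = clip_id, score
    return best_id, best_score


if __name__ == "__main__":
    # Toy 3-D embeddings; real ones are high-dimensional and learned.
    clips = {
        "clip_wave": [0.9, 0.1, 0.0],
        "clip_point": [0.1, 0.9, 0.2],
        "clip_idle": [0.0, 0.1, 0.9],
    }
    print(retrieve_best_clip([0.8, 0.2, 0.1], clips))
```

With these toy vectors the query is closest to `clip_wave`. In the real system the retrieved clips are then stitched together, and the diffusion model fills in the transitions.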

The current open-source release of TANGO only supports audio up to 8 seconds long, so you need to split longer audio files into segments before using it!
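Splitting can be done with Python's standard `wave` module. The sketch below assumes a PCM WAV input and cuts it at fixed 8-second boundaries; the function name `split_wav` and the `_000.wav` naming scheme are hypothetical, and fixed cuts may split a word in half, so cutting at pauses would sound more natural.

```python
import math
import wave
from pathlib import Path
from typing import List

MAX_SECONDS = 8.0  # TANGO's current per-clip audio limit


def split_wav(src: str, out_dir: str, max_seconds: float = MAX_SECONDS) -> List[str]:
    """Split a PCM WAV file into consecutive chunks of at most max_seconds.

    Returns the paths of the written chunk files, in playback order.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths: List[str] = []
    with wave.open(src, "rb") as reader:
        params = reader.getparams()
        frames_per_chunk = int(params.framerate * max_seconds)
        n_chunks = math.ceil(params.nframes / frames_per_chunk) if params.nframes else 0
        for i in range(n_chunks):
            data = reader.readframes(frames_per_chunk)
            path = out / f"{Path(src).stem}_{i:03d}.wav"
            with wave.open(str(path), "wb") as writer:
                # Same channels/sample width/rate; the frame count in the
                # header is patched automatically to match what is written.
                writer.setparams(params)
                writer.writeframes(data)
            paths.append(str(path))
    return paths
```

For example, `split_wav("gen_audio.wav", "segments")` would turn a 20-second file into chunks of 8, 8 and 4 seconds, each of which can be fed to TANGO separately.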

For a complete digital human project, pair TANGO with a lip-sync tool such as sync, Wav2Lip or Ultralight Digital Human. The complete workflow is: Ultralight Digital Human for lip sync, TANGO for body movements, and FaceFusion for face swapping. Perfect!

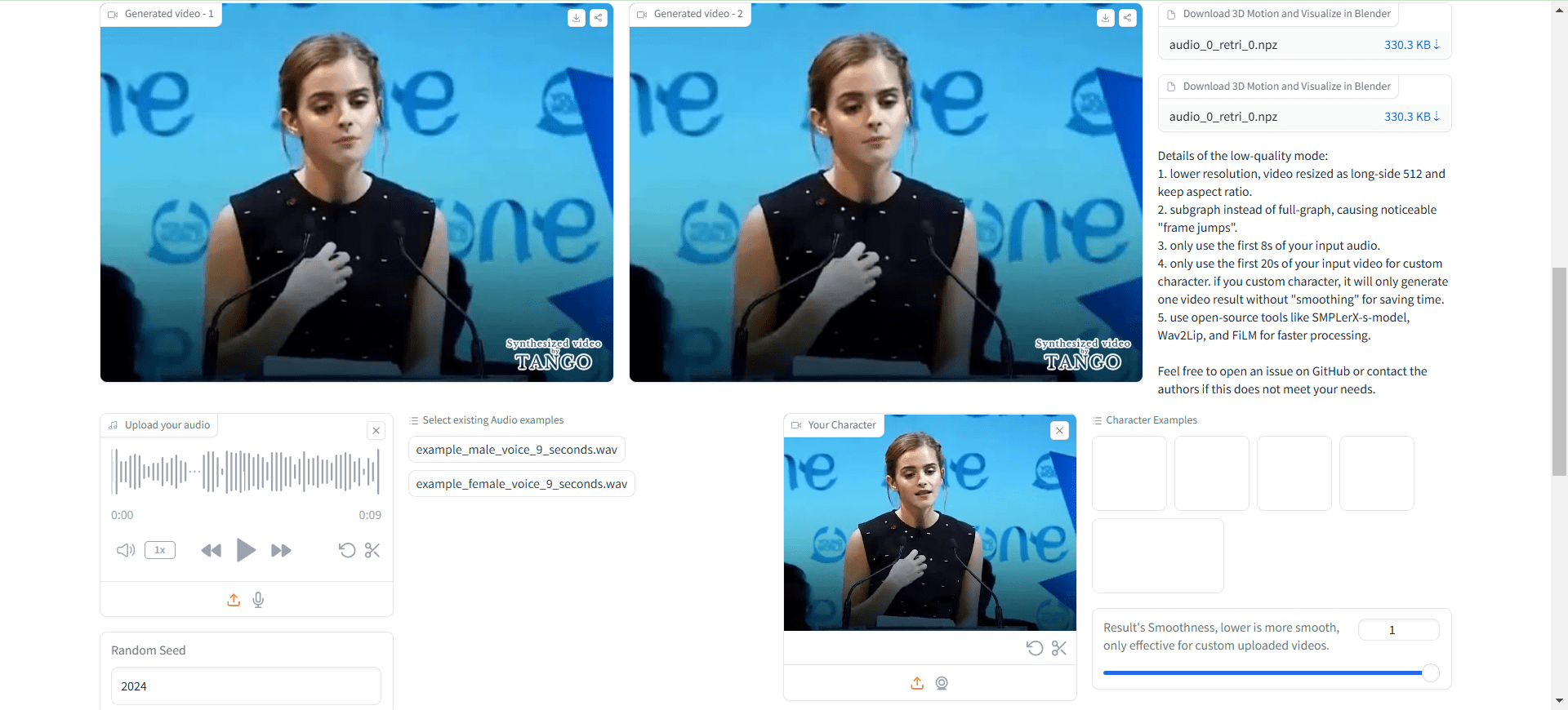

Online demo: https://huggingface.co/spaces/H-Liu1997/TANGO

Feature List

- Highly accurate gesture synchronization: accurately synchronizes any audio with the gestures in the video.

- Multi-language support: works with a variety of languages and voices, including CGI faces and synthesized speech.

- Open source and free: the code is fully public, and users are free to use and modify it.

- Interactive demo: provides an online demo where users can upload video and audio files to try it out.

- Pre-trained models: provides several pre-trained models that can be used directly or trained further.

- Complete training code: includes training code for the gesture synchronization discriminator and the TANGO model.

Usage Guide

1. Environment setup

1.1 Basic requirements:

- Python version: 3.9.20

- CUDA version: 11.8

- Disk space: at least 35 GB (for storing models and precomputed graphs)
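Before installing, you can check these requirements with a short script. This is a minimal sketch assuming PyTorch is what the requirements files install for GPU support; note that `torch.version.cuda` reports the CUDA version PyTorch was built with, which can differ from the system toolkit. The function name `check_environment` is hypothetical.

```python
import shutil
import sys
from typing import List

REQUIRED_PYTHON = (3, 9)   # from "Python version: 3.9.20"
REQUIRED_FREE_GB = 35      # from "at least 35 GB"
REQUIRED_CUDA = "11.8"     # from "CUDA version: 11.8"


def check_environment(path: str = ".") -> List[str]:
    """Return human-readable problems; an empty list means all checks passed."""
    problems: List[str] = []
    if sys.version_info[:2] != REQUIRED_PYTHON:
        problems.append(
            f"Python {sys.version_info.major}.{sys.version_info.minor} found, 3.9.x expected"
        )
    free_gb = shutil.disk_usage(path).free / 1024**3
    if free_gb < REQUIRED_FREE_GB:
        problems.append(f"only {free_gb:.1f} GB free, {REQUIRED_FREE_GB} GB needed")
    try:
        import torch  # assumption: installed by the project's requirements files
    except ImportError:
        problems.append("PyTorch is not installed yet")
    else:
        if not torch.cuda.is_available():
            problems.append("PyTorch cannot see a CUDA device")
        elif torch.version.cuda != REQUIRED_CUDA:
            problems.append(f"PyTorch built for CUDA {torch.version.cuda}, {REQUIRED_CUDA} expected")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```

Run it from the directory where you plan to clone the repository, since the disk check measures free space on that drive.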

1.2 Installation steps:

```shell
# Clone the project repository
git clone https://github.com/CyberAgentAILab/TANGO.git
cd TANGO
git clone https://github.com/justinjohn0306/Wav2Lip.git
git clone https://github.com/dajes/frame-interpolation-pytorch.git

# Create a virtual environment (optional)
conda create -n tango python==3.9.20
conda activate tango

# Install dependencies
pip install -r ./pre-requirements.txt
pip install -r ./requirements.txt
```

2. Usage workflow

2.1 Quick start:

- Run the inference script:

```shell
python app.py
```

On the first run, the system automatically downloads the required checkpoint files and precomputed graphs. Generating about 8 seconds of video takes roughly 3 minutes of processing time.
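Combining the 8-second audio limit with the figure of roughly 3 minutes per clip gives a quick way to budget longer recordings. This is back-of-the-envelope arithmetic only: it assumes every segment takes about as long as the quoted example and ignores the first-run downloads; actual times depend on your GPU.

```python
import math

SECONDS_PER_SEGMENT = 8.0   # TANGO's current audio limit per clip
MINUTES_PER_SEGMENT = 3.0   # rough figure quoted for an ~8 s clip


def estimate_minutes(audio_seconds: float) -> float:
    """Rough processing time for audio split into 8-second segments."""
    segments = math.ceil(audio_seconds / SECONDS_PER_SEGMENT)
    return segments * MINUTES_PER_SEGMENT
```

For example, a 60-second voice-over becomes ceil(60 / 8) = 8 segments, so `estimate_minutes(60)` gives about 24 minutes.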

2.2 Creating a custom character:

- To build a motion graph for a new character:

```shell
python create_graph.py
```
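To give an idea of what a motion graph is, here is a generic, classic formulation: every video frame is a node, each frame links to the next one, and extra transition edges are added wherever two non-adjacent frames have similar poses, so playback can jump between them without a visible pop. This is an illustrative sketch, not the implementation in `create_graph.py`; the flattened-pose representation, the `threshold` value and the function names are all hypothetical.

```python
import math
from typing import Dict, List, Sequence

Pose = Sequence[float]  # hypothetical: flattened joint coordinates for one frame


def pose_distance(a: Pose, b: Pose) -> float:
    """Euclidean distance between two flattened poses."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def build_motion_graph(frames: List[Pose], threshold: float) -> Dict[int, List[int]]:
    """Map each frame index to the frame indices that may follow it.

    i -> i + 1 is normal playback. i -> j is a transition edge when frame i
    looks like frame j - 1, so continuing at j appears as smooth as if the
    video had played j - 1 -> j. Near-identical neighbours are skipped.
    """
    n = len(frames)
    graph: Dict[int, List[int]] = {i: [] for i in range(n)}
    for i in range(n):
        if i + 1 < n:
            graph[i].append(i + 1)
        for j in range(1, n):
            similar = pose_distance(frames[i], frames[j - 1]) < threshold
            if abs(i - (j - 1)) > 1 and similar:
                graph[i].append(j)
    return graph
```

Retrieval then amounts to choosing a path through this graph whose clips best match the audio, with the diffusion model smoothing each jump.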

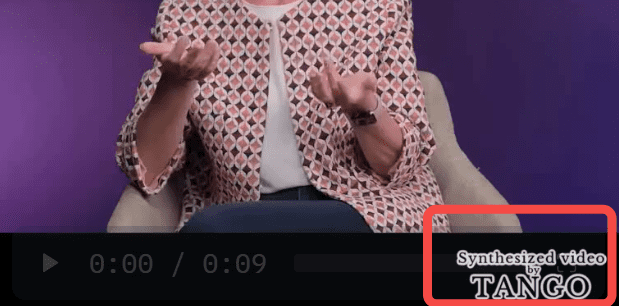

Note that by default the project adds a TANGO watermark to the generated videos, similar to the one below:

Under the hood, a local ffmpeg is called to overlay the watermark image on the original video and write the result to a new video file.
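The overlay step can be reproduced with ffmpeg's `overlay` filter. The sketch below builds such a command and places the image in the bottom-right corner; it approximates the idea and is not necessarily the exact command Gradio or TANGO runs. The helper names and the 10-pixel margin are hypothetical.

```python
import shutil
import subprocess
from typing import List


def watermark_command(video: str, watermark: str, output: str, margin: int = 10) -> List[str]:
    """Build an ffmpeg command that overlays an image in the bottom-right corner.

    In the overlay filter, W/H are the main video's width/height and w/h are
    the overlay image's, so W-w-margin leaves `margin` pixels to the right edge.
    """
    return [
        "ffmpeg", "-y",                 # -y: overwrite the output if it exists
        "-i", video,
        "-i", watermark,
        "-filter_complex", f"overlay=W-w-{margin}:H-h-{margin}",
        output,
    ]


def add_watermark(video: str, watermark: str, output: str) -> None:
    """Run the command; requires an ffmpeg binary on PATH."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg not found on PATH")
    subprocess.run(watermark_command(video, watermark, output), check=True)
```

Building the argument list separately from running it makes the command easy to inspect or log before ffmpeg is invoked.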

If you don't want a watermark, edit the following line in app.py:

```python
# Before (adds the watermark):
gr.Video(value="./datasets/cached_audio/demo1.mp4", label="Demo 0", watermark="./datasets/watermark.png")
# After (no watermark):
gr.Video(value="./datasets/cached_audio/demo1.mp4", label="Demo 0")
```

To allow access from machines other than localhost, change the launch call to:

```python
demo.launch(server_name="0.0.0.0", server_port=7860)
```

Restart the app, and the loaded video no longer has a watermark.

The generated video has no audio track, so you need to add the audio back manually:

```shell
/usr/bin/ffmpeg -i outputs/gradio/test_0/xxx.mp4 -i gen_audio.wav -c:v libx264 -c:a aac result_wav.mp4
```
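When the audio was split into several 8-second segments, each generated clip needs its own segment muxed back in, which is easier to script. The sketch below builds the same ffmpeg command as above; the optional `-shortest` flag (a standard ffmpeg output option, off by default here) is an addition that trims the output to the shorter input. The helper names are hypothetical.

```python
import shutil
import subprocess
from typing import List


def mux_command(video: str, audio: str, output: str,
                ffmpeg: str = "ffmpeg", shortest: bool = False) -> List[str]:
    """Build the ffmpeg command that adds an audio track to a silent video.

    -c:v libx264 re-encodes the video stream; -c:a aac encodes the WAV audio
    so it fits in an MP4 container.
    """
    cmd = [ffmpeg, "-i", video, "-i", audio, "-c:v", "libx264", "-c:a", "aac"]
    if shortest:
        cmd.append("-shortest")  # stop when the shorter input ends
    cmd.append(output)
    return cmd


def mux(video: str, audio: str, output: str) -> None:
    """Run the command; requires an ffmpeg binary on PATH."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg not found on PATH")
    subprocess.run(mux_command(video, audio, output), check=True)
```

`mux_command("outputs/gradio/test_0/xxx.mp4", "gen_audio.wav", "result_wav.mp4", ffmpeg="/usr/bin/ffmpeg")` reproduces the command shown above, where `xxx.mp4` stands for the actual output file name.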

As you can see, the body movements look fine, but the mouth shapes are completely wrong.

This is exactly where Ultralight Digital Human comes in handy.

Usage Process

- Access the local server: open http://localhost:3000 in your browser.

- Upload video and audio: upload the audio and video files you want to synchronize in the input box.

- Run gesture synchronization: click the "Synchronize" button, and the system runs the gesture synchronization process automatically.

- View and download results: once synchronization finishes, you can preview the result and download the synchronized video file.

- Use the interactive demo: upload video and audio files on the Demo page to try the gesture synchronization effect in real time.

- Manage projects: view and manage all uploaded projects on the My Projects page, which supports version control and collaboration.

Advanced Features

- Smart gesture synchronization: improve the presentation of your video content with AI-driven gesture synchronization.

- Multi-language support: choose different languages and voices to suit your project.

- Custom development: since TANGO is open source, users can modify and extend it to fit their needs.

© Copyright notes

Article copyright AI Sharing Circle. All rights reserved; please do not reproduce without permission.
