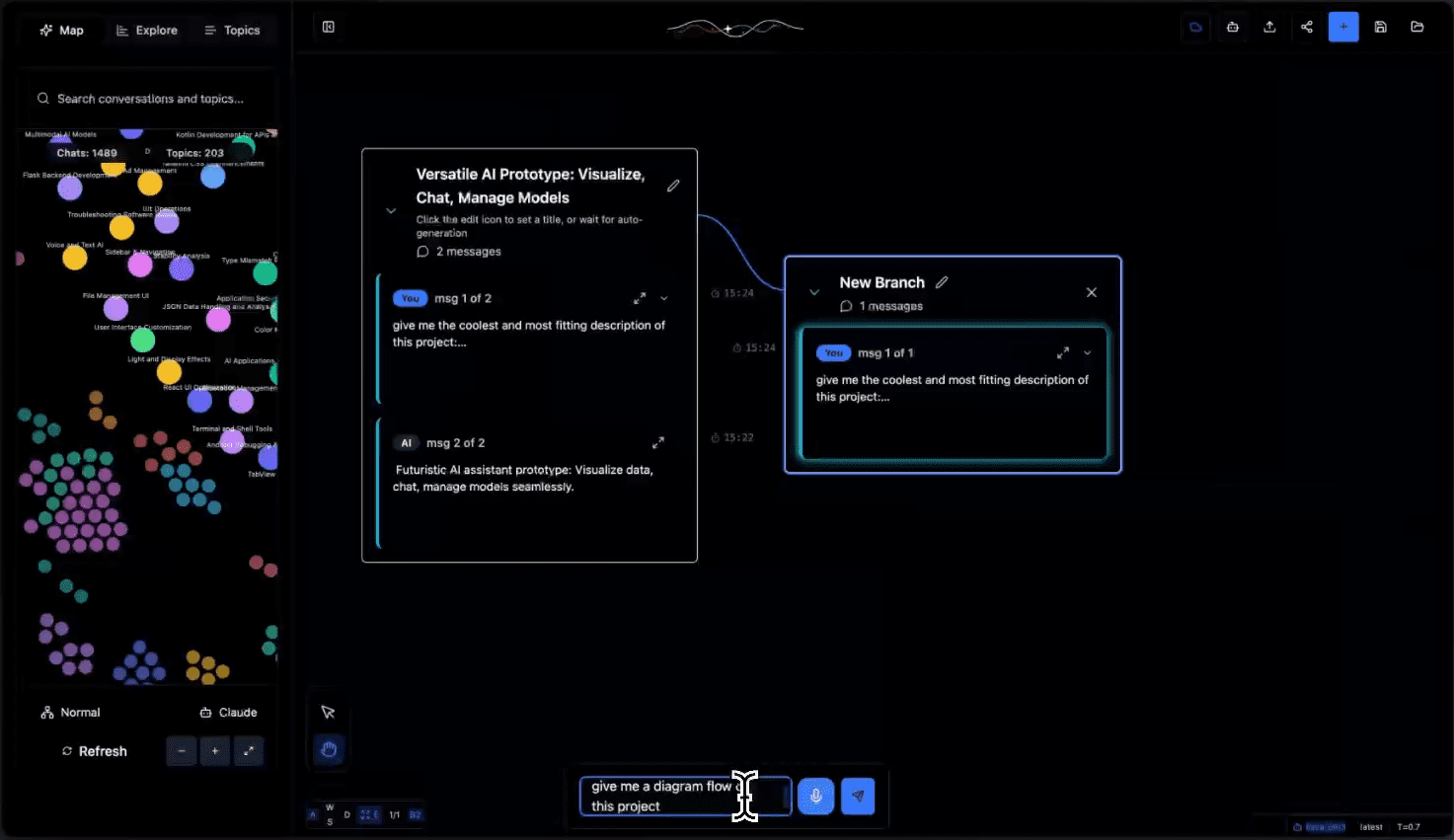

Tangent: an interactive AI conversation canvas for creating multiple conversation branches, with support for merging, comparing and deleting branches

General Introduction

Tangent is an AI conversation canvas that combines the visual interaction of Excalidraw with the node-based flexibility of ComfyUI, aiming to offer a new way to explore conversations with LLMs (Large Language Models). Unlike traditional chat interfaces, Tangent turns AI conversations into visual experiments in which users can freely branch, merge and compare different conversation paths. It follows an offline-first, local deployment model and runs entirely on local models; it currently relies mainly on Ollama, with support for more backends planned. The project is open source under the Apache 2.0 license, welcomes community contributions, and provides a new experimental platform for exploring AI conversations.

Feature List

- Conversation resurrection and continuity: work around context-length limits to resume earlier conversations seamlessly

- Branching exploration: create a branch at any conversation node to test multiple conversation directions

- Offline local deployment: runs entirely on local models, keeping private data on your own machine

- Dynamic topic clustering: automatically infers conversation topics and groups them into categories for easier navigation

- Export data compatibility: supports the Claude and ChatGPT data export formats

- Conversation tree visualization: shows conversation branches and the experimentation process as a tree

- API support: provides a REST API for processing and managing conversation data

- Real-time status tracking: monitors conversation processing progress and task status

Usage Guide

1. Environment preparation

1.1 Install the required dependencies:

- Whisper.cpp: for speech processing

git clone https://github.com/ggerganov/whisper.cpp
cd whisper.cpp
sh ./models/download-ggml-model.sh base.en
make

- Ollama: local model runtime environment

- Visit https://ollama.com/ to download the appropriate version for your system

- Verify the installation:

ollama --version

- Download the required models:

ollama pull all-minilm
ollama pull qwen2.5-coder:7b

1.2 Start the Ollama service:

ollama serve
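Before moving on to the back end, it can help to confirm that the Ollama server is reachable and that both models from step 1.1 are installed. The sketch below is not part of Tangent; it assumes Ollama's default address (http://localhost:11434) and uses Ollama's /api/tags endpoint, which lists locally installed models. The helper names (normalize, missing_models, check_ollama) are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address (assumption: not changed)
REQUIRED_MODELS = ["all-minilm", "qwen2.5-coder:7b"]  # the models pulled in step 1.1


def normalize(name: str) -> str:
    """Ollama stores an untagged model name as '<name>:latest'."""
    return name if ":" in name else f"{name}:latest"


def missing_models(tags: dict, required: list[str]) -> list[str]:
    """Return the required models that do not appear in an /api/tags response."""
    installed = {normalize(m["name"]) for m in tags.get("models", [])}
    return [r for r in required if normalize(r) not in installed]


def fetch_tags(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> dict:
    """Ask the running Ollama server which models are installed locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)


def check_ollama(base_url: str = OLLAMA_URL) -> None:
    """Raise RuntimeError if Ollama is unreachable or a required model is missing."""
    try:
        tags = fetch_tags(base_url)
    except OSError as exc:  # urllib.error.URLError is a subclass of OSError
        raise RuntimeError(f"Ollama is not reachable at {base_url}: {exc}") from exc
    missing = missing_models(tags, REQUIRED_MODELS)
    if missing:
        hint = "; ".join(f"ollama pull {m}" for m in missing)
        raise RuntimeError(f"Missing models, run: {hint}")
```

Call check_ollama() while ollama serve is running; it returns silently when the server answers and both models are present, and otherwise raises an error that names the missing ollama pull commands.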

2. Back-end setup

2.1 Initialize the Python environment:

cd tangent-api

python3 -m venv my_env    # only needed once, to create the virtual environment

source my_env/bin/activate

pip install -r requirements.txt

2.2 Configure the local models and start the back end:

cd src

python3 app.py --embedding-model "custom-embedding-model" --generation-model "custom-generation-model"

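Replace "custom-embedding-model" and "custom-generation-model" with the names of models installed in your Ollama instance. The back-end service then starts at http://localhost:5001/api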
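The app.py command above takes two placeholder model names. A minimal sketch for filling them in from a script, assuming (not confirmed by the project) that all-minilm from step 1.1 plays the embedding role and qwen2.5-coder:7b the generation role. Only the two flags shown in step 2.2 are used; backend_command and start_backend are hypothetical helper names.

```python
import shlex
import subprocess
from pathlib import Path

# Assumption: all-minilm is the embedding model and qwen2.5-coder:7b the generation
# model; substitute any other models you have pulled into Ollama.
EMBEDDING_MODEL = "all-minilm"
GENERATION_MODEL = "qwen2.5-coder:7b"


def backend_command(embedding_model: str, generation_model: str,
                    python: str = "python3") -> list[str]:
    """Build the app.py invocation from step 2.2 with concrete model names."""
    return [python, "app.py",
            "--embedding-model", embedding_model,
            "--generation-model", generation_model]


def start_backend(src_dir: Path, embedding_model: str = EMBEDDING_MODEL,
                  generation_model: str = GENERATION_MODEL) -> subprocess.Popen:
    """Launch the back end from tangent-api/src; run this inside the activated venv."""
    cmd = backend_command(embedding_model, generation_model)
    print("Starting:", shlex.join(cmd))
    return subprocess.Popen(cmd, cwd=src_dir)
```

Passing the arguments as a list rather than a single shell string avoids quoting problems with model tags such as qwen2.5-coder:7b.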

3. Front-end setup

cd simplified-ui

npm i

npm start

Open http://localhost:3000 in a browser to use the interface.
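Since the back end (port 5001) and the front end (port 3000) start separately, a small readiness check can tell you when both are accepting connections. This is a generic sketch, not a Tangent tool: it only tests that a TCP port is open, not that the application behind it is healthy. wait_for_port and check_services are hypothetical names.

```python
import socket
import time

# Default ports from the steps above: back end on 5001, front end on 3000.
SERVICES = {"back end": 5001, "front end": 3000}


def wait_for_port(host: str, port: int, timeout: float = 30.0,
                  interval: float = 0.5) -> bool:
    """Poll until a TCP connection to host:port succeeds; False if timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


def check_services(host: str = "localhost", timeout: float = 30.0) -> dict[str, bool]:
    """Report which of the two services accept TCP connections."""
    return {name: wait_for_port(host, port, timeout) for name, port in SERVICES.items()}
```

For example, check_services() returns {"back end": True, "front end": True} once both npm start and app.py are listening.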

4. Using the main features

4.1 Conversation management:

- Create a new conversation: click the "+" button in the upper-right corner of the screen.

- Branch a conversation: right-click any conversation node and select "Create Branch".

- Merge conversations: drag conversations from different branches onto the target node to merge them.
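Branching and merging turn a conversation into a directed acyclic graph rather than a flat list: a branch is a second child under an existing node, and a merge is a node with several parents. The sketch below is an illustrative data model under that assumption, not Tangent's internal representation; Node, add_message, merge and context are hypothetical names. context() collects the history a model would see at a given node, listing shared ancestors only once.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from itertools import count

_next_id = count(1)


@dataclass(eq=False)  # compare nodes by identity, not by content
class Node:
    role: str      # "user" or "assistant"
    content: str
    # A normal message has one parent; a merge node has several.
    parents: list[Node] = field(default_factory=list, repr=False)
    children: list[Node] = field(default_factory=list, repr=False)
    id: int = field(default_factory=lambda: next(_next_id))


def add_message(parent: Node | None, role: str, content: str) -> Node:
    """Append a message under parent; adding a second child is what creates a branch."""
    node = Node(role, content, parents=[parent] if parent is not None else [])
    if parent is not None:
        parent.children.append(node)
    return node


def merge(tips: list[Node], role: str, content: str) -> Node:
    """Create one node whose parents are the tips of several branches."""
    node = Node(role, content, parents=list(tips))
    for tip in tips:
        tip.children.append(node)
    return node


def context(node: Node) -> list[Node]:
    """All ancestors of node plus node itself, with parents always before children.

    Shared ancestors appear once, so a merge node sees the common prefix followed
    by each branch in the order its tips were passed to merge().
    """
    seen: set[int] = set()
    order: list[Node] = []

    def visit(n: Node) -> None:
        if n.id in seen:
            return
        seen.add(n.id)
        for parent in n.parents:
            visit(parent)
        order.append(n)

    visit(node)
    return order
```

Marking parents and children with repr=False and using eq=False keeps the dataclass from recursing forever through the parent and child links when printing or comparing nodes.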

4.2 Topic organization:

- Automatic clustering: the system analyzes conversation content and generates topic labels automatically.

- Topic filtering: use the topic list on the left side to quickly find related conversations.

- Manual tagging: supports custom topic tags and categories.
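The article does not say which clustering algorithm Tangent uses. As an illustration of the general idea only, the sketch embeds conversation texts with a local Ollama embedding model (assumed here to be all-minilm, via Ollama's /api/embed endpoint) and groups them with a simple greedy centroid clustering on cosine similarity. This is a swapped-in technique, and the 0.8 threshold is an arbitrary assumption; generating a readable label for each cluster (for example by prompting the generation model) is left out.

```python
import json
import math
import urllib.request


def embed(texts: list[str], model: str = "all-minilm",
          base_url: str = "http://localhost:11434") -> list[list[float]]:
    """Embed texts with a local Ollama model via its /api/embed endpoint."""
    body = json.dumps({"model": model, "input": texts}).encode("utf-8")
    req = urllib.request.Request(f"{base_url}/api/embed", data=body,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.load(resp)["embeddings"]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def cluster(vectors: list[list[float]], threshold: float = 0.8) -> list[list[int]]:
    """Greedy clustering: join the most similar centroid above threshold, else start a new cluster."""
    clusters: list[list[int]] = []
    centroids: list[list[float]] = []
    for i, vec in enumerate(vectors):
        best, best_sim = None, threshold
        for c, centroid in enumerate(centroids):
            sim = cosine(vec, centroid)
            if sim >= best_sim:
                best, best_sim = c, sim
        if best is None:
            clusters.append([i])
            centroids.append(list(vec))
        else:
            clusters[best].append(i)
            n = len(clusters[best])
            # Running mean keeps the centroid representative of all its members.
            centroids[best] = [(old * (n - 1) + x) / n for old, x in zip(centroids[best], vec)]
    return clusters
```

Typical use would be cluster(embed(conversation_summaries)), which returns lists of conversation indices that share a topic. A single pass like this depends on input order; density-based methods avoid that at the cost of more dependencies.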

4.3 Data import and export:

- Import conversation logs exported from Claude and ChatGPT.

- Export conversation logs to multiple formats.

- Automate processing through the API.
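To give a feel for what importing involves, here is a minimal sketch that flattens one conversation from each export into (role, text) pairs. The field names reflect the ChatGPT and Claude export layouts as commonly observed (ChatGPT: a "mapping" tree of nodes with "parent" links and a "current_node"; Claude: a "chat_messages" list with "sender" and "text"). They are assumptions, may change between export versions, and this is not Tangent's importer. Note that a ChatGPT export is itself a tree: edited or regenerated replies form sibling branches, and the sketch follows only the currently selected path.

```python
from __future__ import annotations

import json
from pathlib import Path


def load_export(path: str | Path) -> list[dict]:
    """Both exports are assumed to contain a conversations.json holding a list."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def chatgpt_active_path(conversation: dict) -> list[tuple[str, str]]:
    """Walk from current_node up the parent links; return (role, text) oldest first."""
    mapping = conversation["mapping"]
    node_id = conversation.get("current_node")
    path: list[tuple[str, str]] = []
    while node_id is not None:
        node = mapping[node_id]
        msg = node.get("message")
        if msg and msg.get("content", {}).get("content_type") == "text":
            # Non-string parts (e.g. attachments) are skipped.
            text = "".join(p for p in msg["content"].get("parts", []) if isinstance(p, str))
            if text.strip():
                path.append((msg["author"]["role"], text))
        node_id = node.get("parent")
    path.reverse()
    return path


def claude_messages(conversation: dict) -> list[tuple[str, str]]:
    """Claude exports store a flat list; map 'human' to the usual 'user' role."""
    roles = {"human": "user", "assistant": "assistant"}
    return [(roles.get(m.get("sender"), m.get("sender")), m.get("text", ""))
            for m in conversation.get("chat_messages", [])]
```

Normalizing both sources to the same (role, text) shape means the rest of the pipeline, such as embedding and topic clustering, does not need to know where a conversation came from.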

5. API usage guide

Main endpoints:

- POST /api/process: process uploaded conversation data

- GET /api/process/status/<task_id>: query processing status

- POST /api/chats/save: save conversation data

- GET /api/chats/load/<chat_id>: load a specific conversation

- GET /api/topics: get the list of generated topics
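The endpoints above suggest an asynchronous workflow: submit data, receive a task ID, then poll the status endpoint. The sketch below shows that pattern with Python's standard library. The paths come from the list above, but the request bodies, the "task_id" response field and the "completed"/"failed" status values are assumptions (marked ASSUMED in the code), since the article does not document the payloads. The send parameter lets you swap in a fake transport for testing.

```python
import json
import time
import urllib.request
from typing import Any, Callable

BASE_URL = "http://localhost:5001"  # the back end started in step 2.2

# A transport takes (method, path, json_body) and returns the decoded JSON reply.
Transport = Callable[[str, str, Any], Any]


def http_json(method: str, path: str, body: Any = None, base_url: str = BASE_URL) -> Any:
    """Send a JSON request to the back end and decode the JSON response."""
    data = None if body is None else json.dumps(body).encode("utf-8")
    req = urllib.request.Request(base_url + path, data=data, method=method,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def process_and_wait(payload: Any, send: Transport = http_json,
                     poll_interval: float = 2.0, timeout: float = 600.0) -> dict:
    """Submit data to /api/process, then poll its status endpoint until it finishes."""
    task_id = send("POST", "/api/process", payload)["task_id"]  # ASSUMED field name
    deadline = time.monotonic() + timeout
    while True:
        status = send("GET", f"/api/process/status/{task_id}", None)
        if status.get("status") in {"completed", "failed"}:  # ASSUMED status values
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"task {task_id} not finished after {timeout} s")
        time.sleep(poll_interval)


def save_chat(chat: dict, send: Transport = http_json) -> Any:
    return send("POST", "/api/chats/save", chat)  # ASSUMED: body is the chat as JSON


def load_chat(chat_id: str, send: Transport = http_json) -> Any:
    return send("GET", f"/api/chats/load/{chat_id}", None)


def list_topics(send: Transport = http_json) -> Any:
    return send("GET", "/api/topics", None)
```

Check the actual response of /api/process on your installation before relying on the assumed field names; only the task_id key and the two terminal status values would need to change.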

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
