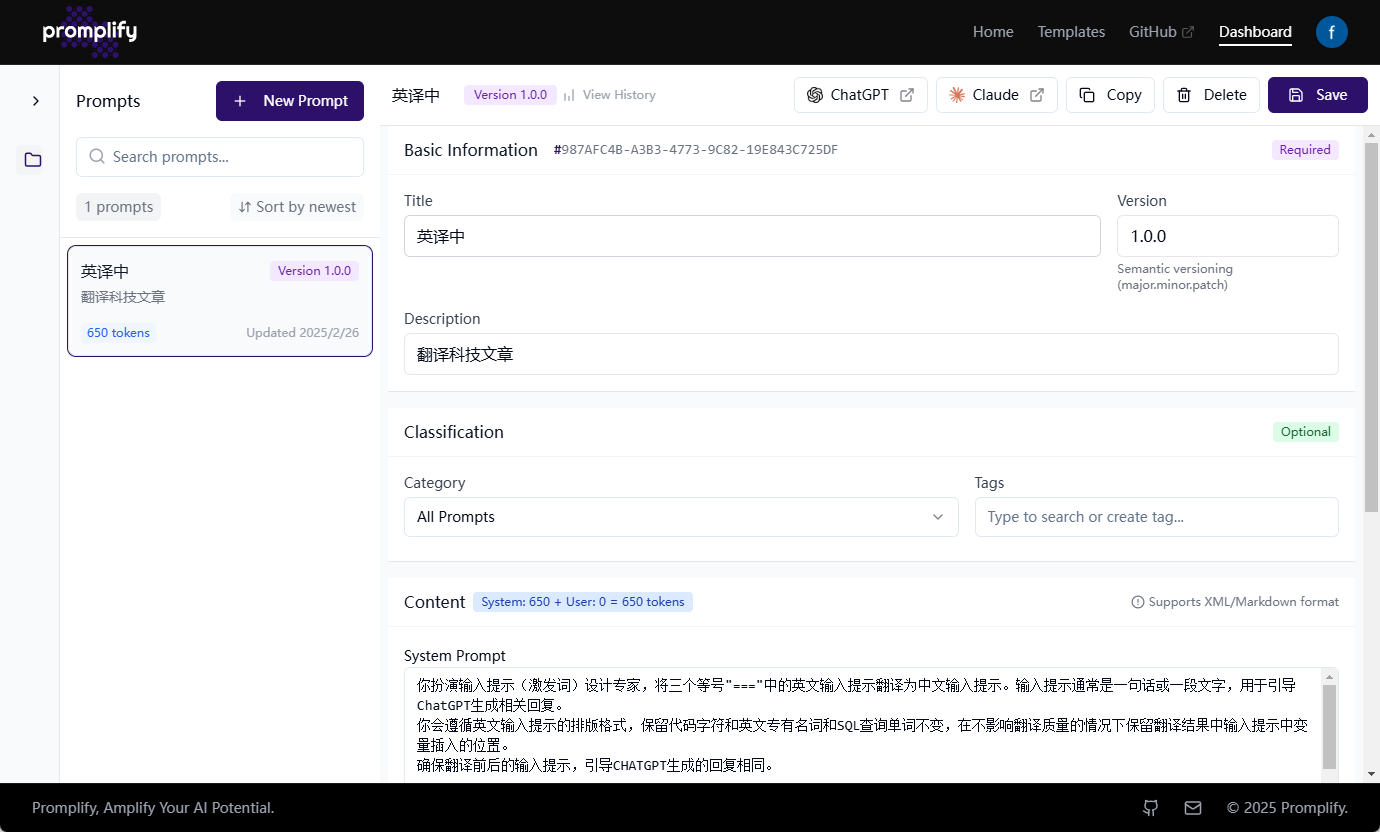

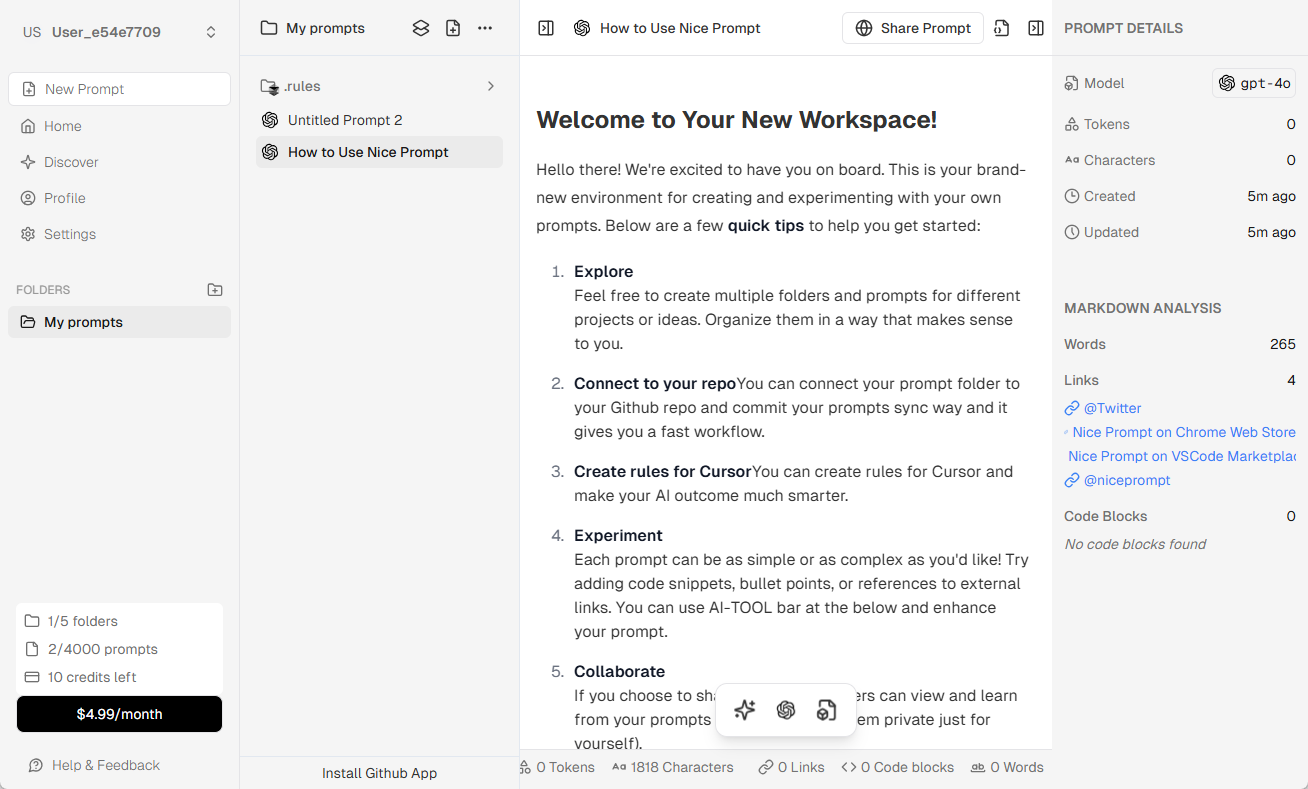

Quick Prompt: Browser Extension for Quickly Managing and Using Prompts

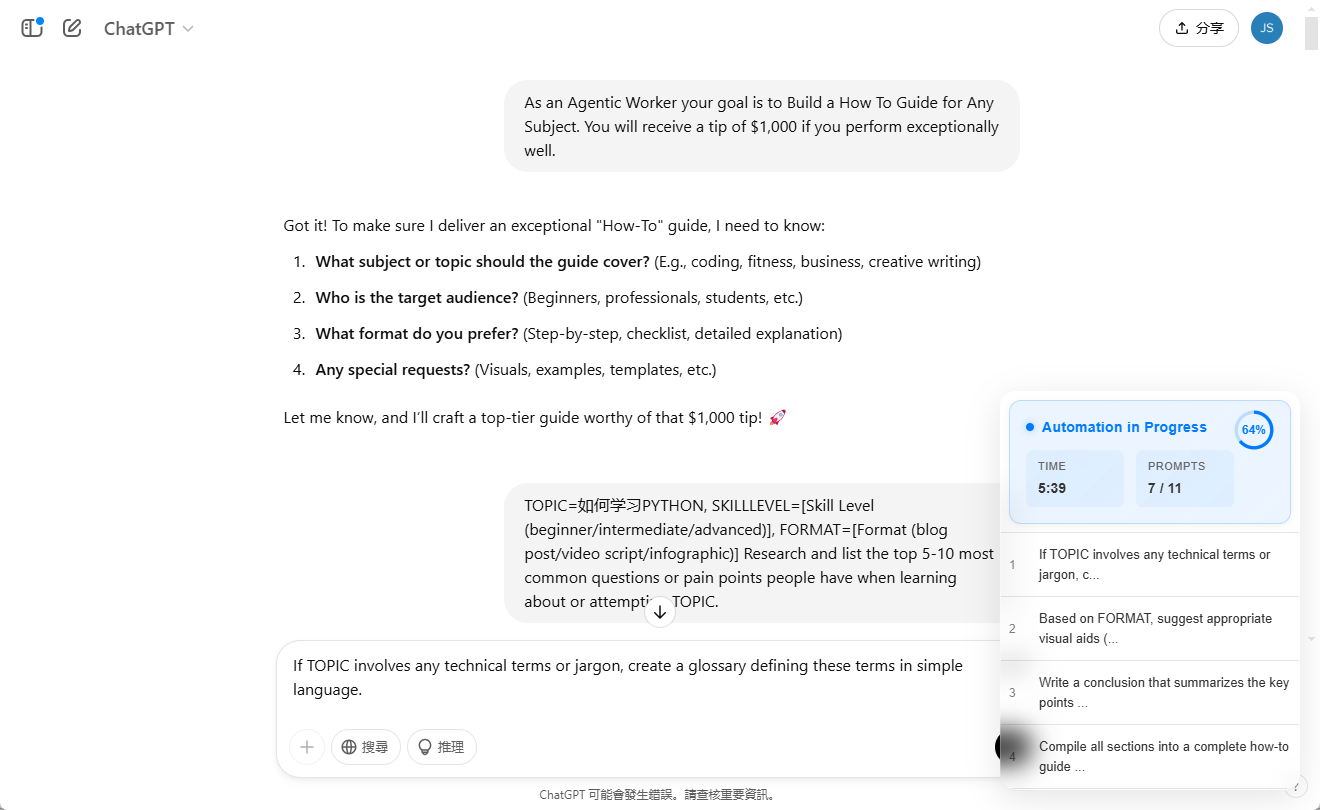

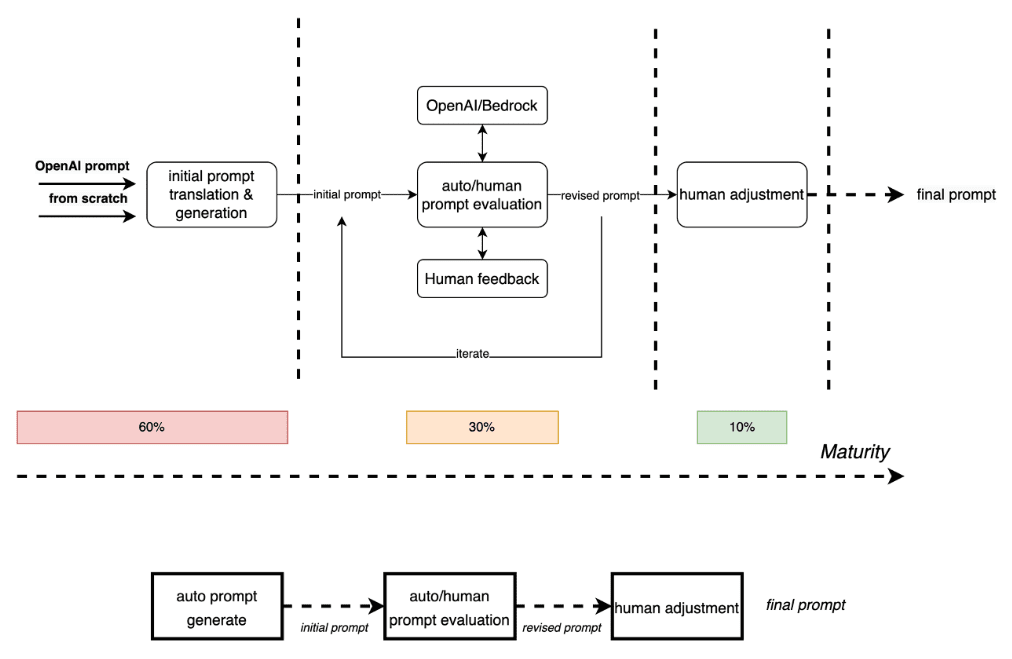

Quick Prompt is an open-source browser extension for managing prompts and entering them quickly. Users can create, organize, and store a library of prompts, then insert predefined prompt content into the input box of any web page. This tool is especially ...
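The two core operations described above are finding a prompt in a stored library and inserting its text into a page's input box. They can be sketched as two pure functions. This is a minimal sketch and not the extension's actual code. The `PromptItem` shape and the helper names `searchPrompts` and `insertAtSelection` are hypothetical, chosen only for illustration.

```typescript
// Hypothetical shape of a stored prompt; the real extension's schema may differ.
interface PromptItem {
  id: string;
  title: string;
  content: string;
  tags: string[];
}

// Filter a prompt library with a case-insensitive match on title or tags.
// An empty (or whitespace-only) query returns the whole library.
function searchPrompts(library: PromptItem[], query: string): PromptItem[] {
  const q = query.trim().toLowerCase();
  if (q === "") return library;
  return library.filter(
    (p) =>
      p.title.toLowerCase().includes(q) ||
      p.tags.some((t) => t.toLowerCase().includes(q)),
  );
}

// Splice prompt text into a field's current value, replacing the selected
// range [selectionStart, selectionEnd). Returns the new value and the caret
// position just after the inserted text.
function insertAtSelection(
  value: string,
  selectionStart: number,
  selectionEnd: number,
  text: string,
): { value: string; caret: number } {
  // Clamp the selection to the bounds of the current value.
  const start = Math.max(0, Math.min(selectionStart, value.length));
  const end = Math.max(start, Math.min(selectionEnd, value.length));
  return {
    value: value.slice(0, start) + text + value.slice(end),
    caret: start + text.length,
  };
}

// Example library (hypothetical data).
const library: PromptItem[] = [
  { id: "1", title: "Summarize", content: "Summarize the following text:", tags: ["writing"] },
  { id: "2", title: "Translate", content: "Translate into English:", tags: ["language"] },
];

const [match] = searchPrompts(library, "summ");
const result = insertAtSelection("Hello world", 6, 11, match.content);
console.log(result.value); // "Hello Summarize the following text:"
```

In a content script, the result would then be written back to a `<textarea>` or `<input>` element, for example by setting `el.value`, calling `el.setSelectionRange(result.caret, result.caret)`, and dispatching an `input` event so page frameworks notice the change. Rich editors built on `contenteditable` need a different insertion path, so this sketch only covers plain text fields.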