Story-Flicks: Enter a topic to automatically generate children's short story videos

General Introduction

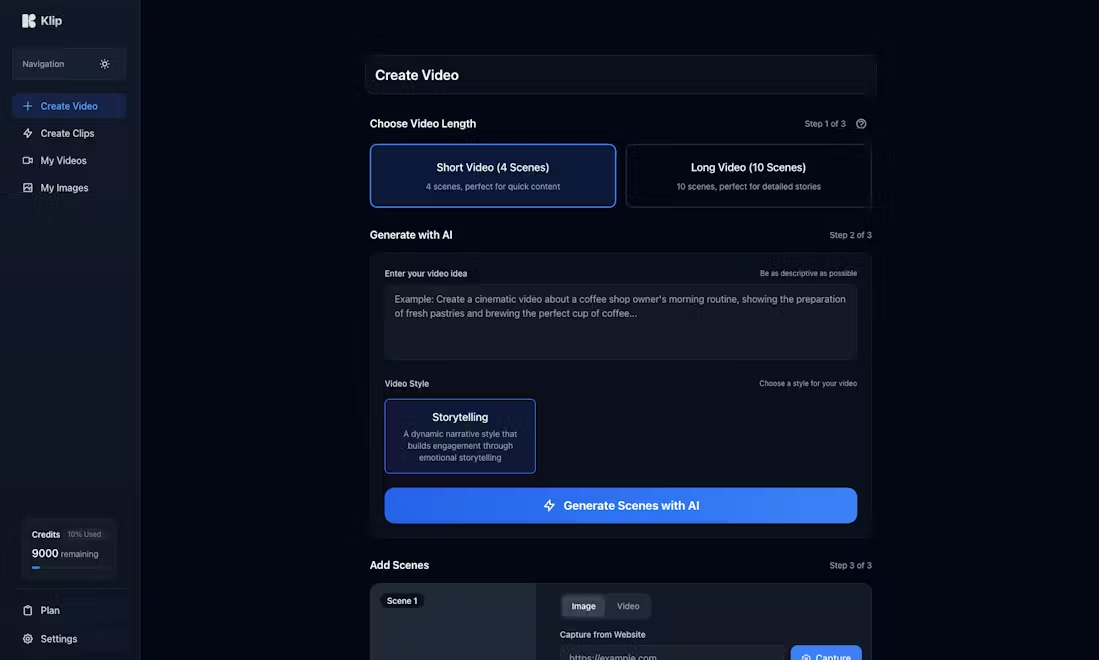

Story-Flicks is an open-source AI tool that helps users quickly generate HD story videos. Users only need to enter a story topic: the system generates the story content with a large language model, then combines it with AI-generated images, audio, and subtitles to produce a complete video. The back end is built with Python and the FastAPI framework, and the front end with React, Ant Design, and Vite. It supports OpenAI, Aliyun (Alibaba Cloud), DeepSeek, and other model providers, and users can freely choose the text and image generation models. Whether for children's stories, short animations, or teaching videos, Story-Flicks is well suited to developers, creators, and educators.

Function List

- Generate video with one click: Enter a story topic and automatically generate a video containing images, text, audio and subtitles.

- Multi-model support: Compatible with text and image models from OpenAI, Aliyun, DeepSeek, Ollama, and SiliconFlow.

- Segment customization: The user can specify the number of story paragraphs, and each paragraph generates a corresponding image.

- Multilingual output: Supports text and audio generation in multiple languages, suitable for users worldwide.

- Open-source deployment: Provides both manual installation and Docker deployment for easy local operation.

- Intuitive interface: The front-end page is easy to use and supports parameter selection and video preview.

Usage Guide

Installation process

Story-Flicks offers two installation methods: manual installation and Docker deployment. Below are the detailed steps to ensure that users can build the environment smoothly.

1. Manual installation

Step 1: Download the project

Clone the project locally by entering the following command in the terminal:

git clone https://github.com/alecm20/story-flicks.git

Step 2: Configure Model Information

Go to the backend directory and copy the environment configuration file:

cd backend

cp .env.example .env

Open the .env file and configure the text and image generation models. Example:

text_provider="openai" # Text generation provider: openai, aliyun, deepseek, etc.

image_provider="aliyun" # Image generation provider: openai, aliyun, etc.

openai_api_key="your-openai-api-key" # OpenAI API key

aliyun_api_key="your-aliyun-api-key" # Aliyun API key

text_llm_model="gpt-4o" # Text model, e.g. gpt-4o

image_llm_model="flux-dev" # Image model, e.g. flux-dev

- If you choose OpenAI, gpt-4o is recommended as the text model and dall-e-3 as the image model.

- If you choose Aliyun, qwen-plus or qwen-max is recommended as the text model and flux-dev as the image model (currently available as a free trial; see the Aliyun documentation for details).

- Save the file when the configuration is complete.
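
To make the file format concrete, here is a minimal sketch of how KEY="value" lines with trailing # comments, like those in the example above, could be read into a dictionary. The `parse_env` helper is hypothetical and written only for illustration; it is not how Story-Flicks itself loads its configuration.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse KEY="value" lines, skipping blank lines and # comment lines."""
    config: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        if value[:1] in ('"', "'"):
            # Quoted value: keep everything up to the matching closing quote.
            quote = value[0]
            end = value.find(quote, 1)
            value = value[1:end] if end != -1 else value[1:]
        else:
            # Unquoted value: drop a trailing inline comment, if any.
            value = value.split("#", 1)[0].strip()
        config[key.strip()] = value
    return config


# Sample content using the key names from the example above.
example = '''
text_provider="openai"    # text generation provider
image_provider="aliyun"   # image generation provider
text_llm_model="gpt-4o"
image_llm_model="flux-dev"
'''

config = parse_env(example)
print(config["text_provider"], config["text_llm_model"])  # openai gpt-4o
```

Reading the file this way is a quick check that every value is quoted correctly and that no inline comment has leaked into a setting.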

Step 3: Launch the backend

Go to the backend directory in the terminal, create the virtual environment and install the dependencies:

cd backend

conda create -n story-flicks python=3.10 # Create a Python 3.10 environment

conda activate story-flicks # Activate the environment

pip install -r requirements.txt # Install the dependencies

uvicorn main:app --reload # Start the back-end service

After successful startup, the terminal will display:

INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)

INFO: Application startup complete.

This indicates that the back-end service is running at http://127.0.0.1:8000.

Step 4: Launch the front end

Go to the front-end directory in a new terminal, install the dependencies and run it:

cd frontend

npm install # Install the front-end dependencies

npm run dev # Start the front-end service

After successful startup, the terminal displays:

VITE v6.0.7 ready in 199 ms

➜ Local: http://localhost:5173/

Open http://localhost:5173/ in a browser to see the front-end interface.
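
If the page does not load, it can help to confirm that both services are actually listening. The following is a minimal sketch, assuming the default ports shown in the startup logs above (8000 for the back end, 5173 for the front end). The helper names `is_port_open` and `report` are hypothetical and not part of the project.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host not resolvable.
        return False


# Default development addresses, taken from the startup logs above.
SERVICES = {"backend": ("127.0.0.1", 8000), "frontend": ("localhost", 5173)}


def report(services=SERVICES) -> dict[str, bool]:
    """Map each service name to whether its port accepts connections."""
    return {name: is_port_open(host, port) for name, (host, port) in services.items()}


if __name__ == "__main__":
    for name, up in report().items():
        print(f"{name}: {'reachable' if up else 'not reachable'}")
```

This only checks that something accepts TCP connections on each port; it does not verify that the service behind it is Story-Flicks or that it is working correctly.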

2. Docker deployment

Step 1: Prepare the environment

Make sure that Docker and Docker Compose are installed locally; if not, download them from the official website.

Step 2: Start the project

Run the following command in the project root directory:

docker-compose up --build

Docker automatically builds and starts the front-end and back-end services. When it is done, open http://localhost:5173/ to view the front-end page.

Usage

After installation, users can generate story videos through the front-end interface. The following is the specific operation flow:

1. Open the front-end interface

Enter http://localhost:5173/ in your browser. The Story-Flicks main page opens.

2. Set the generation parameters

The interface provides the following options:

- Text generation model provider: Select openai, aliyun, etc.

- Image generation model provider: Select openai, aliyun, etc.

- Text model: Enter the model name, e.g. gpt-4o or qwen-plus.

- Image model: Enter the model name, e.g. flux-dev or dall-e-3.

- Video language: Select a language, such as Chinese or English.

- Voice type: Select an audio style, such as male or female.

- Story topic: Enter a theme, e.g. "The Adventures of the Rabbit and the Fox".

- Number of story paragraphs: Enter a number (e.g., 3); each paragraph corresponds to one image.
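
To summarize the form fields in one place, the sketch below models them as a small Python dataclass with basic sanity checks. The class name `StoryRequest`, its field names, and the validation rules are all hypothetical. They mirror the options listed above for illustration only and are not the project's actual request schema.

```python
from dataclasses import dataclass


@dataclass
class StoryRequest:
    """Hypothetical container mirroring the form fields listed above."""
    text_provider: str
    image_provider: str
    text_model: str
    image_model: str
    language: str
    voice: str
    topic: str
    segments: int = 3

    def validate(self) -> list[str]:
        """Return a list of human-readable problems; empty means none found."""
        problems = []
        if not self.topic.strip():
            problems.append("story topic is empty")
        if self.segments < 1:
            problems.append("number of paragraphs must be at least 1")
        for name in ("text_provider", "image_provider", "text_model", "image_model"):
            if not getattr(self, name).strip():
                problems.append(f"{name} is empty")
        return problems


request = StoryRequest(
    text_provider="openai", image_provider="aliyun",
    text_model="gpt-4o", image_model="flux-dev",
    language="English", voice="female",
    topic="The Adventures of the Rabbit and the Fox", segments=3,
)
print(request.validate())  # [] means no problems were found
```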

3. Generate the video

After filling in the parameters, click the "Generate" button. The system generates the video according to the settings. Generation time depends on the number of paragraphs: the more paragraphs, the longer it takes. When generation finishes, the video is displayed on the page, where it can be played and downloaded.

Notes

- If generation fails, check that the API key in the .env file is correct, or verify that the network connection is working.

- When using Ollama, set ollama_api_key="ollama". qwen2.5:14b or a larger model is recommended; smaller models may not work as well.

- SiliconFlow's image models have so far only been tested with black-forest-labs/FLUX.1-dev, so make sure to select a compatible model.
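
The first two checks above can be sketched as a small script that inspects an already-loaded configuration dictionary. This is a rough illustration under one loud assumption: that every provider's key follows the `<provider>_api_key` pattern seen in `openai_api_key`, `aliyun_api_key`, and `ollama_api_key`. Key names for other providers are guesses, and the `diagnose` function is hypothetical rather than part of Story-Flicks.

```python
def diagnose(config: dict[str, str]) -> list[str]:
    """Return hints about likely configuration problems (empty if none)."""
    hints: list[str] = []

    def add(hint: str) -> None:
        # Avoid reporting the same hint twice when both roles share a provider.
        if hint not in hints:
            hints.append(hint)

    for role in ("text_provider", "image_provider"):
        provider = config.get(role, "")
        if not provider:
            add(f"{role} is not set")
        elif provider == "ollama":
            # Per the note above, the Ollama key is the literal string "ollama".
            if config.get("ollama_api_key") != "ollama":
                add('ollama_api_key should be set to "ollama"')
        elif not config.get(f"{provider}_api_key"):
            # Assumed naming pattern: <provider>_api_key.
            add(f"{provider}_api_key is missing or empty")
    return hints


config = {"text_provider": "ollama", "image_provider": "aliyun", "aliyun_api_key": ""}
for hint in diagnose(config):
    print(hint)
# ollama_api_key should be set to "ollama"
# aliyun_api_key is missing or empty
```

A script like this can only catch missing or malformed settings. An invalid but well-formed key, or a network problem, still has to be diagnosed from the back-end logs.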

Featured Functions

Generate full video with one click

In the interface, enter "The story of the wolf and the rabbit", set the number of paragraphs to 3, and click "Generate". After a few minutes, you will get a video with 3 images, a voiceover, and subtitles. For example, the official demo videos show the stories "The Rabbit and the Fox" and "The Wolf and the Rabbit".

Multi-language support

Want to generate a video in English? Set "Video Language" to "English" and the system will generate English text, audio, and subtitles. Switching to other languages works the same way.

Customized Segmentation

Need a longer story? Set the number of paragraphs to 5 or more. Each paragraph generates a new image and the story expands accordingly.

With these steps, users can easily install and use Story-Flicks to quickly create HD storytelling videos. Whether it's for personal entertainment or educational use, this tool can help you get creative!

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
