SPO: Self-monitoring prompt word optimization

summaries

Well-designed prompts are essential to enhance the reasoning capabilities of large language models (LLMs) while aligning their outputs with the task requirements of different domains. However, manually designing hints requires expertise and iterative experimentation. Existing hint optimization methods aim to automate this process, but they rely heavily on external references such as real answers or human feedback, which limits their application in real-world scenarios where these data are not available or are costly to obtain. To address this problem, we propose Self-Supervised Prompt Optimization (SPO), an efficient framework for discovering effective prompts for both closed and open-ended tasks without external references. Inspired by the observation that cue quality is directly reflected in LLM outputs and that LLMs can efficiently assess the degree of adherence to task requirements, we derive evaluation and optimization signals solely from output comparisons. Specifically, SPO selects superior cues through pairwise output comparisons evaluated by an LLM evaluator, and then aligns the outputs to task requirements through an LLM optimizer. Extensive experiments have shown that SPO outperforms existing state-of-the-art cue optimization methods while costing significantly less (e.g., only 1.11 TP3T to 5.61 TP3T of existing methods) and requiring fewer samples (e.g., only three samples). The code is available at https://github.com/geekan/MetaGPT获取.

Full demo code: https://github.com/geekan/MetaGPT/blob/main/examples/spo/README.md

1. Introduction

As Large Language Models (LLMs) continue to evolve, well-designed prompts are essential to maximize theirreasoning ability (Wei et al., 2022; Zheng et al., 2024; Deng et al., 2023) as well as ensuring consistency with the requirements of diverse missions (Hong et al., 2024b; Liu et al., 2024a; Zhang et al. crucial. However, creating effective cues usually requires extensive trial-and-error experiments and deep task-specific knowledge.

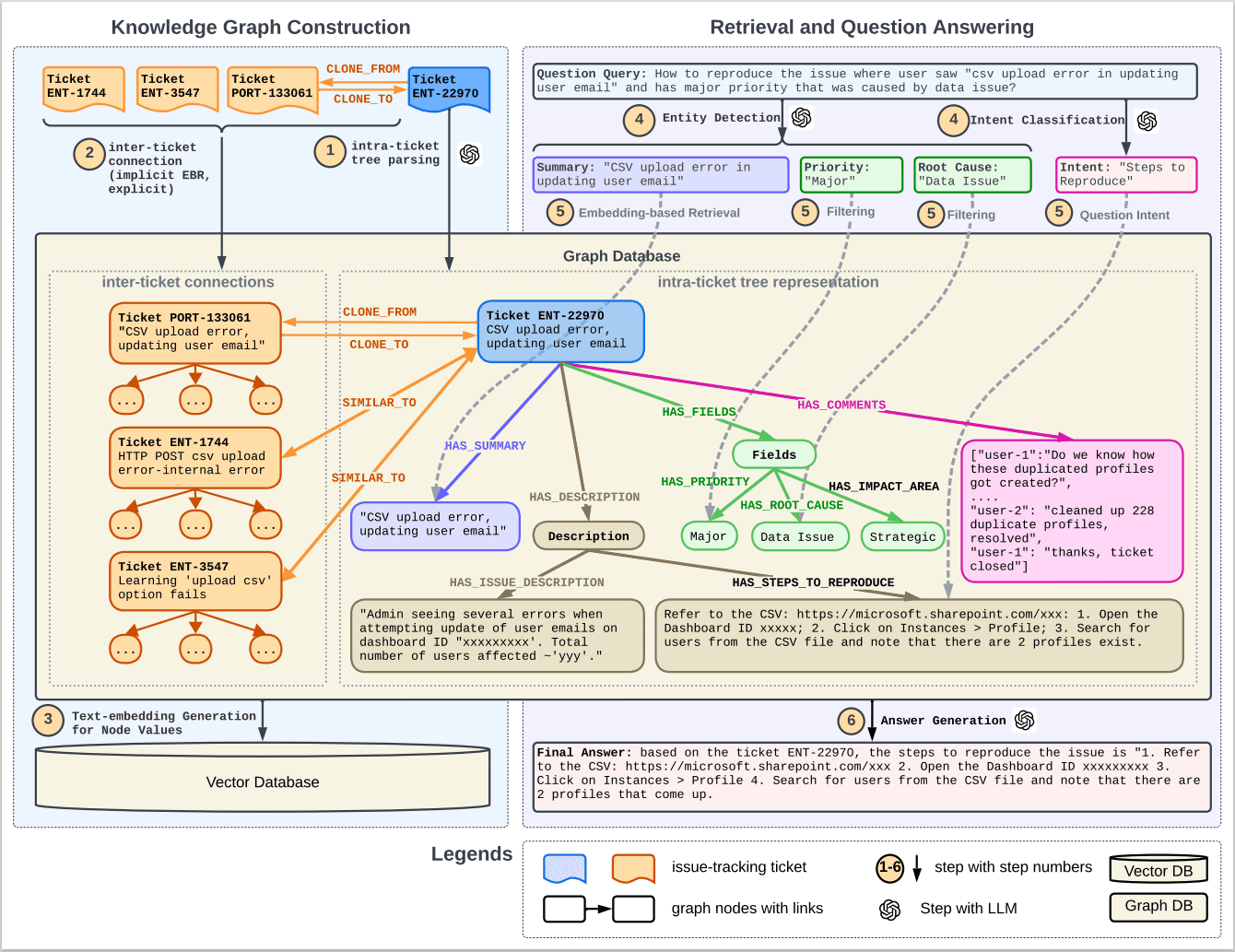

To address this challenge, researchers have explored prompt optimization (PO) methods that use LLMs' own capabilities to automatically improve prompts.PO goes beyond traditional manual prompt engineering to provide a more systematic and efficient approach to prompt design. As shown in Figure 1(a), these methods typically involve an iterative process of cue optimization, execution, and evaluation. The design choices of these components significantly affect the effectiveness and efficiency of the optimization. Existing methods have been developed in terms of numerical evaluation mechanisms (Wang et al., 2024e; Yang et al., 2024a; Fernando et al., 2024) and textual "gradient" optimization strategies (Wang et al., 2024c; Yuksekgonul et al., 2024), and have been developed in terms of numerical evaluation mechanisms (Wang et al., 2024e; Yang et al., 2024a; Fernando et al., 2024). al., 2024). Through these innovations, PO methods have improved task performance while reducing manual effort (Pryzant et al., 2023; Zhang et al., 2024a; Zhou et al., 2024).

Despite their potential, existing PO methods face significant challenges in real-world scenarios, as described below. First, current approachesThe law usually relies heavily on external references for its assessment.. Methods that use authentic answers for evaluation (Yang et al., 2024a; Fernando et al., 2024; Yuksekgonul et al., 2024; Pryzant et al., 2023) require a large amount of annotated data to assess cue quality, but in many real-world applications, especially in open-ended tasks, such standardized answers are often not available. Similarly, manual-dependent methods (Chen et al., 2024; Lin et al., 2024) require manual evaluation or manually designed rules to generate feedback, which is both time-consuming and contradictory to the goal of automation. Second, existing methods typically require evaluating a large number of samples to obtain reliable feedback, resulting in significant computational overhead (Wang et al., 2024e; Fernando et al., 2024).

At the heart of these challenges is the lack of reliable and efficient, reference-free methods for assessing cue quality. Analysis of LLM behavior reveals two key insights that inform our approach. First, cue quality is inherently reflected in model outputs, as demonstrated by how stepwise inference paths can demonstrate the success of chain-thinking cues (Wei et al., 2022; Deng et al., 2023). Second, extensive research on LLMs as judges has shown that they are effective in assessing the extent to which output follows task requirements (Zheng et al., 2023; Li et al., 2024b). These observations suggest that cue optimization without referencing is feasible by using LLMs to assess the inherent ability of outputs that naturally reflect cue quality.

Inspired by these insights, we propose an efficient framework that generates evaluation and optimization signals only from LLM outputs, similar to how self-supervised learning derives training signals from data. We refer to this approach as self-supervised prompt optimization (SPO). As shown in Figure 1, SPO introduces several innovative mechanisms based on the basic optimize-execute-evaluate cycle:

- Output as a reference for pairwise evaluation: At the heart of SPO is the use of a pairwise comparison methodology to assess the relative quality of the outputs of different cues. This evaluation mechanism leverages the inherent ability of the LLM to understand task requirements and validate the effectiveness of the optimization without an external reference.

- Output as an optimization guide: SPO optimizes cues through the LLM's understanding of a better solution to the current best output. This process naturally aligns cue modifications with the model's understanding of the best task solution, rather than relying on explicit optimization signals.

dedicate. Our main contributions are listed below:

- Self-supervised cue optimization framework. We introduce SPO, a novel framework that uses pairwise comparisons of LLM outputs to guide cue optimization without external references.

- Cost-effectiveness optimizationSPO optimizes prompts with minimal computational overhead ($0.15 per dataset) and sample requirements (3 samples), significantly reducing resource requirements.

- Extensive assessment. As shown in Fig. 2, SPO requires only 1.11 TP3T to 5.61 TP3T of the cost of existing methods while maintaining excellent performance in both closed and open tasks.

2. Preliminary

2.1 Definition of the problem

Prompt Optimization aims to automatically enhance the validity of a prompt for a given task. Formally, let T = (Q, Gt) denotes a task where Q denotes the input problem and Gt is an optional benchmark fact. The goal is to generate a task-specific prompt Pt* to maximize the performance on task T . This optimization objective can be formally expressed as:

Pt = arg maxPt∈ P* ET∼D[ϕeval(ϕexe(Q, Pt))], (1)

where P denotes the space of all possible tips. As shown in Fig. 1, this optimization process usually involves three basic functions: (1) the optimization function (ϕopt): generates modified prompts based on candidate prompts; (2) execution function (ϕexe): the modified hints are used with the LLM to generate the output O, including the inference path and the final answer; (3) the evaluation function (ϕeval): evaluate the quality of O and provide feedback F to guide further optimization, iteratively improving candidate cues.

Among these functions, the evaluation function plays a key role as its output (feedback F) guides the evaluation and improvement of the cue. We will discuss the evaluation framework for cue optimization in Section 2.2.

2.2 Evaluation frameworks in cue optimization

This section provides an overview of our cue optimization assessment framework, covering three key components: assessment sources, assessment methods, and feedback types, as shown in Figure 3. Finally, we present our choice of assessment framework for SPOs.

Assessment of sources. As shown in Figure 3(a), two main sources of assessment are available: the LLM-generated output and task-specific authentic answers. These sources provide the basis for evaluating prompt performance.

Assessment methodology.. The assessment methodology defines how the source of the assessment and the associated costs are evaluated. Three commonly used methods are (1) Benchmarking relies on predefined metrics (Suzgun et al., 2023; Rein et al., 2023) or rules (Chen et al., 2024). (2) LLM as a judge (Zheng et al., 2023) Utilizing LLM's ability to understand and evaluate outputs according to task requirements. (3) Manual feedback (Lin et al., 2024) provides the most comprehensive assessment by directly assessing the output manually.

While manual feedback provides the most thorough assessment by capturing human preferences and task-specific needs, it incurs higher costs than benchmarking or LLM-as-judge approaches, creating a trade-off between assessment quality and feasibility.

Type of feedback. Feedback generated by assessment methods typically takes three forms:(1) Numerical feedback provides quantitative performance metrics across the entire dataset. However, it requires a large number of samples to ensure stability of the evaluation and may overlook instance-specific details (Zhang et al., 2024a). (2) Textual feedback provides rich instance-specific guidance by analyzing and providing suggestions that directly generate optimization signals (Yuksekgonul et al., 2024). (3) Ranking or selection feedback (Liu et al., 2024b) establishes a relative quality ranking between outputs through full ranking or pairwise comparisons, providing explicit optimization direction without the need for absolute quality metrics.

Assessment framework. Building on the sources, methods, and types of feedback discussed earlier, assessment frameworks determine how sources are compared and assessed in the context of cue optimization. Specifically, we derive two assessment frameworks for generating cue-optimized feedback F :

(1) Output vs. true answer (OvG): by combining the output O with the true answer GT Make comparisons to generate feedback:

fOvG(Oi, Gi) = φeval(φexe(Qi, Tpi), G**i**)

Although this approach allows for direct quality assessment via external references, it requires clearly defined true answers and is therefore unsuitable for open-ended tasks where no true answers are available or where it is impractical to define true answers.

(2) Output vs. Output (OvO): when no true answer is available, we turn to direct output comparisons.The core idea of OvO is that comparing outputs generated from different hints can provide valuable signals about their relative quality even in the absence of perfect true answers. This approach eliminates the reliance on external references and is particularly useful for open-ended tasks with multiple valid answers. It can be formally expressed as:

fOvO(O1, ... , Ok) = φeval({φexe(Qi, Pti)}ki=1)

After introducing OvG and the OvO evaluation framework, we emphasize that OvO is a core method for self-supervised prompt optimization (SPO). By comparing outputs generated from different cues, OvO provides valuable feedback on their relative quality without relying on external references. This approach is consistent with our goal of generating feedback from the outputs themselves, thus facilitating iterative optimization for both closed and open tasks.

3. Self-monitoring tips optimization

In this section, we first outline our approach (Section 3.1) and then analyze its effectiveness (Section 3.2).

3.1 Overview of SPO

A central challenge in reference-free cued optimization is how to construct effective evaluation and optimization signals. We propose Self-Supervised Prompted Optimization (SPO), a simple yet effective framework for reference-free optimization that retains the basic optimize-execute-evaluate cycle while utilizing only the model outputs as an evaluation source and optimization guide.

As shown in Algorithm 1, SPO operates through three key components, and the corresponding hints are shown in Appendix A.1:

- Optimization Features (φopt): generates a new cue by analyzing the current best cue and its corresponding output.

- executive function (φexe): Apply the generated prompts to get the output.

- Evaluation Functions (φeval): use LLM to compare outputs and determine the better cue by pairwise comparison.

This iterative process begins with a basic prompt template (e.g., Chain Thinking (Wei et al., 2022)) and a small set of questions sampled from the dataset. In each iteration, SPO generates new prompts, executes them, and evaluates the outputs pairwise to assess how well they follow the task requirements.

The association of this cue with a better output is selected as the best candidate for the next iteration. The process continues until a predetermined maximum number of iterations is reached.

3.2 Understanding the effectiveness of SPO

The theoretical foundation of SPO rests on two key observations:

First, the output of LLMs is rich in qualitative information that directly reflects the validity of the cue, as demonstrated by how stepwise reasoning paths can demonstrate the success of chain-thinking cues (Wei et al., 2022). Second, LLMs exhibit human-like task comprehension, allowing them to assess answer quality and identify superior solutions based on task requirements. These complementary capabilities enable SPOs to perform cue evaluation and optimization without external references. These two aspects of leveraging model output work together to achieve effective prompt optimization:

output as an optimization guide. In the case of φopt In terms of design, unlike other methods that introduce explicit optimization of signals (Fernando et al., 2024; Yuksekgonul et al., 2024; Pryzant et al., 2023), φopt Optimization is based directly on the cues and their corresponding outputs. The optimization signals derive from the LLM's inherent ability to assess the quality of the output, while the optimization behavior is guided by its understanding of what constitutes a better solution. Thus, even in the absence of explicit optimization signals, SPO's optimization essentially directs the cues to the LLM's optimal understanding of the task.

output as a reference for pairwise evaluation. About φeval design, by allowing the assessment model to perform pairwise selection, we effectively utilize the assessment model's inherent preference understanding of the task. This internal signal can be obtained through simple pairwise comparisons of outputs, avoiding the need for a large number of samples to ensure scoring stability, which is often required for scoring feedback methods.

Although we mitigate potential biases through four rounds of randomized evaluations, these biases cannot be completely eliminated (Zhou et al., 2024). However, these biases do not affect the overall optimization trend, as the feedback from eval is only used as a reference for the next round of optimization. The overall optimization process is naturally consistent with the task understanding of the optimization model, and the eval mechanism is only used to validate the effectiveness of each iteration.

4. Experiments

4.1 Experimental set-up

data set. We evaluated SPO on a diverse set of tasks, including both closed and open tasks, to fully assess its effectiveness.

For the closed task, we used five established benchmarks:

- GPQA (Rein et al., 2023)

- AGIEval-MATH (Zhong et al., 2024)

- LIAR (Wang, 2017)

- WSC (Levesque et al., 2012)

- BBH-navigate (Suzgun et al., 2023)

For WSC, LIAR and BBH-Navigate, we follow Yan et al. (2024) and sample portions from the original dataset as the test set. For GPQA, we use the more challenging GPQA-Diamond subset as the test set, while for AGIEval-Math, we use level 5 problems as the test set. For open-ended tasks, we selected writing, role-playing, and humanities tasks from MT-Bench (Zheng et al., 2023). Given the limited size of the dataset, we manually constructed three validation sets for these tasks. A detailed description of the datasets and the procedures for constructing the validation and test sets can be found in Appendix A.3.

base line (in geodetic survey). We evaluated SPO on closed tasks for two classes of methods: (1) traditional prompting methods, including io (direct LLM calls), Chain Thinking (Wei et al., 2022), Reformulation (Deng et al., 2023), and Fallback Abstraction (Zheng et al., 2024); and (2) automatic prompting optimization methods, including APE ( Zhou et al., 2023), OPRO (Yang et al., 2024a), PromptAgent (Wang et al., 2024e), PromptBreeder (Fernando et al., 2024), and TextGrad (Yuksekgonul et al., 2024), whose evaluation is based on a set of algorithms, such as APE (Deng et al., 2023) and fallback abstraction (Zheng et al., 2024); and (2) automatic prompt optimization methods, including APE ( 2024), whose assessment framework settings are detailed in Table 2.

For the open-ended task in MT-Bench (Zheng et al., 2023), we used GPT-4o to compare the output generated by SPO with that generated directly by the model.

4.2. Experimental results and analysis

Key findings of the closed mandate. As shown in Table 1, SPO-optimized prompts outperform all traditional prompting methods on average, exceeding the optimal baseline by 1.9. At the same time, it performs comparably to prompt optimization methods relying on real answers on most datasets, and achieves optimal results on the GPQA and BBH-navigate datasets. Specifically, the average performance advantage of SPO over other optimization methods suggests that its pairwise evaluation method is capable of generating more efficient optimization signals than other methods that rely on external references. Furthermore, to validate the effectiveness of our method across different optimization models, we conducted experiments using GPT-4o as the optimization model and achieved an average performance of 66.3. Although slightly lower than the results using Claude-3-5-Sonnet as the optimization model, this is still the third best performance among all the compared methods.

cost analysis. We comprehensively compare the optimization cost and performance of SPO (using Claude-3.5-Sonnet and GPT-4o as optimization models) with other optimization methods in Table 1. While maintaining comparable performance with other hint optimization methods that rely on real answers, SPO only requires an optimization cost of 1.11 TP3T to 5.61 TP3T, with an average optimization cost per dataset of $0.15. This significant reduction in computational overhead, combined with its lack of dependence on real answers, makes SPO very attractive for real-world applications.

Table 3. Performance comparison of BBH-navigate: cueing methods (IO and COT) and SPO using different evaluation models (rows) and execution models (columns). The optimization model is set to Claude-3.5-Sonnet.

| GPT-4o-mini | Llama3-70B | Claude-3-Haiku | |

| IO | 91.3 | 82.7 | 62.2 |

| COT | 89.7 | 86.2 | 68 |

| Claude-3.5-Sonnet | 95 | 86.8 | 68.2 |

| Llama3-70B | 94.5 | 94.2 | 82.0 |

| GPT-4o-mini | 97.8 | 90.7 | 82.0 |

Ablation studies. To assess the transferability of SPO across different optimization, evaluation, and execution models, we performed ablation experiments on the BBH-Navigate dataset. The experimental results in Tables 3 and 4 show that SPO exhibits robust performance across different models. Notably, the best performance (97.8) is achieved when using GPT-4o-mini as the optimization, execution and evaluation model. In terms of execution, SPO effectively improves the performance of the weaker model by increasing Claude-3-Haiku from 62.2 to 89.7, which demonstrates SPO's ability to be applied to the weaker model and further extends its potential for realistic applications.

Table 4. Performance comparison of BBH-navigate on different optimization models (rows) and execution models (columns). The evaluation model is set to GPT-4o-mini.

| GPT-4o-mini | Llama3-70B | Claude-3-Haiku | |

| Claude-3.5-Sonnet | 97.2 | 86.7 | 89.7 |

| GPT-40 | 96.3 | 85.5 | 73.0 |

| GPT-4o-mini | 97.8 | 90.7 | 82.0 |

| DeepSeek-V3 | 94.7 | 83.7 | 77.2 |

We conducted an ablation study to investigate the effect of sample size on SPO performance using the BBH-Navigate dataset, as shown in Figure 5. The performance curves for all three optimization models show a similar pattern: performance initially improves as the sample size increases, but eventually levels off or decreases. This phenomenon can be attributed to two factors: insufficient samples lead to overfitting in cue optimization, while too many samples not only increase the computational cost, but also lead to a longer context for evaluating the model, which may reduce the quality of the evaluation. Based on extensive experiments, we determined that a sample size of 3 achieves the best balance between cost-effectiveness and performance.

Key findings of the open-ended mandateThe following are some examples of the types of tasks that SPOs can perform in open-ended tasks. To validate SPO's ability in open-ended tasks, we selected three categories from the MT-Bench to assess: "Writing", "Role Playing", and "Humanities ". We used Claude-3.5-Sonnet as the optimization model, Gpt-4o-mini as the evaluation model, and selected Claude-3.5-Sonnet, DeepSeek-V3 and GPT-4omini as the execution models for five iterations. Subsequently, following the evaluation method in (Zheng et al., 2023), we used GPT-4o to compare the outputs of Model A and Model B pairwise, as shown in Fig. 6. The experimental results, shown in Fig. 6, indicate that SPO significantly improves model performance under all model configurations. It is worth noting that smaller models using optimization cues (e.g., GPT-4omini) often outperform larger models in most cases.

4.3 Case studies

We show the optimization results for the additional open-ended task without the dataset as well as SPO's optimization trajectory in Appendix A.4. We also provide the optimal hints found by SPO on the five closed-ended tasks in the Supplementary Material. Given that real-world applications often face the problem of limited datasets, we evaluate SPO's performance on tasks without traditional benchmarks. The experimental results combined with the cost-effectiveness of SPO demonstrate its practical value in real-world scenarios. Specifically, we show the optimization results after 10 iterations using Claude-3.5-Sonnet as the optimization model, GPT-4o-mini as the evaluation model, and Llama3-8B as the execution model, covering four tasks: advertisement design, social media content, modern poetry writing, and conceptual explanation. In addition, we provide a comprehensive analysis of SPO's optimization trajectory on the BBH-navigate dataset in Appendix A.4.1, showing successful and unsuccessful examples to provide deeper insights into the optimization process.

5. Related work

5.1 Cue engineering

Research on effective prompting methods has been conducted along two main directions. The first direction focuses on task-independent prompting techniques that enhance the generalizability of LLMs. Notable examples include chain thinking (Wei et al., 2022; Kojima et al., 2022), which improves reasoning across a wide range of tasks; techniques to improve one-shot reasoning (Deng et al., 2023; Zheng et al., 2024; Wang et al., 2024d); and for output format specification methods (Zhang et al., 2024a; He et al., 2024; Tam et al., 2024). These techniques provide important optimization seeds for autocue optimization studies through human insight and extensive experimental development.

The second direction addresses domain-specific cues, and researchers have developed new approaches for code generation (Hong et al., 2024b; Ridnik et al., 2024; Shen et al., 2024a), data analysis (Hong et al., 2024a; Liu et al., 2024a; Li et al., 2024a), questioning ( Wu et al., 2024b; Zhu et al., 2024; Yang et al., 2024b), decision making (Zhang et al., 2024b; Wang et al., 2024a) and other areas (Guo et al., 2024b; Ye et al., 2024; Shen et al., 2024b). ) have developed specialized techniques. However, as the application of LLMs extends to increasingly complex real-world scenarios, it becomes impractical to manually create effective cues for each domain (Zhang et al., 2024a). This challenge has motivated research in cue optimization, which aims to systematically develop effective domain-specific cues rather than discovering general cueing principles.

5.2. prompt optimization

In prompt optimization (PO), the design of the evaluation framework is crucial because it determines the effectiveness and computational efficiency of the optimization.The evolution of evaluation mechanisms in PO has evolved from simple evaluation feedback collection to complex optimization signal generation (Chang et al., 2024). Existing PO methods can be categorized based on their evaluation sources and mechanisms.

The most common approach relies on authentic answers as a source of assessment, utilizing numerical assessments based on benchmarks (Zhou et al., 2023; Guo et al., 2024a; Yang et al., 2024a; Fernando et al., 2024; Wang et al., 2024e; Khattab et al. ). While these methods have been successful in specific tasks, they typically require a large number of iterations and samples to ensure evaluation stability, resulting in significant computational overhead.

To reduce sample requirements, some methods (Yan et al., 2024; Yuksekgonul et al., 2024; Wu et al., 2024a; Wang et al., 2024c; Pryzant et al., 2023; Li et al., 2025) use LLMs as judges (Zheng et al. ..., 2023) to generate detailed textual feedback. Although this approach provides richer evaluation signals and requires fewer samples, it still relies on real answer data, thus limiting its application to open-ended tasks where reference answers may not exist.

Alternative approaches focus on human preferences, through manually designed evaluation rules or direct human feedback (Chen et al., 2024; Lin et al., 2024). While these approaches can effectively handle open-ended tasks, their need for extensive human involvement contradicts the goal of automation. Meanwhile, some researchers have explored different evaluation criteria, such as Zhang et al.'s (2024c) proposal to assess cue validity through output consistency. However, this approach faces a fundamental challenge: the nonlinear relationship between consistency and validity usually leads to poor assessment signals.

Unlike these methods, SPO introduces a new assessment paradigm that eliminates the reliance on external references while maintaining efficiency. By utilizing model outputs only through pairwise comparisons, SPO achieves robust assessment without the need for real answers, human feedback, or extensive sampling, and is particularly well suited for real-world applications.

6. Conclusion

This paper addresses a fundamental challenge in cued optimization: the dependence on external references, which limits real-world applications. We introduce Self-Supervised Prompted Optimization (SPO), a framework that overcomes this dependency while implementing a framework in which each dataset is only $0.15 of significant cost-effectiveness.SPO draws inspiration from self-supervised learning to innovatively construct evaluation and optimization signals by pairwise comparisons of model outputs, enabling reference-free optimization without compromising effectiveness.

Our comprehensive evaluation shows that SPO outperforms existing state-of-the-art methods in both closed and open tasks, achieving state-of-the-art results at a cost of only 1.11 TP3T to 5.61 TP3T of existing methods. Success in standard benchmarks and diverse real-world applications validates SPO's effectiveness and generalizability. By significantly reducing resource requirements and operational complexity, SPO represents a significant advancement in making cueing optimization accessible and practical in real-world applications, with the potential to accelerate the adoption of LLM technologies across a wide range of domains.

Impact statement

SPO offers significant advances in the engineering of cues for LLMs, providing benefits such as democratized access, reduced costs, and improved performance on a variety of tasks. However, it also poses risks, including potential bias amplification, use of harmful content generation, and over-reliance on LLMs.

A. Appendix

A.1 Detailed tips for SPOs

In this section, we show meta-hints for iteration. Note that here we have used only the simplest and most straightforward hints. There is still room for improvement by optimizing the following meta-hints for specific domains.

Tips for Optimizing Functionality

This prompt template guides LLMs to iteratively improve existing prompts through structured XML analysis. It requires identifying weaknesses in the reference prompt output, suggesting changes, and generating optimized versions. The template emphasizes incremental improvements while maintaining consistency of requirements.

Tips for evaluating functions

The evaluation template uses comparative analysis to assess the quality of a response. It requires an XML-formatted reasoning analysis of the strengths and weaknesses of two responses (A/B), followed by a clear choice.

A.2. Detailed prompt template for the start of an iteration

This YAML file shows our initial configuration for iterating on the BBH-navigate task. By configuring a simple initial prompt and requirement, along with three specific questions, iterative optimization can be performed. It is important to note that the content shown here is the complete content of the file; the content in the answers section is not the actual answer, but rather serves as a reference for the thought process and the correct output format.

A.3 Details of the experiment

A.3.1 Task and data details

LIAR

LIAR (Wang, 2017) is an English-language fake news detection dataset containing 4000 statements, each accompanied by contextual information and lie labels. In our experiments, we follow the method of Yan et al. (2024) and sample portions from the original dataset as a test set.

BBH-Navigate

BBH-Navigate (Suzgun et al., 2023) is a task in the BIG-bench Hard dataset, a subset of the BIG Bench dataset. This task focuses on navigational reasoning and requires the model to determine whether an agent returns to its starting point after following a series of navigational steps. In our experiments, we used random sampling (seed = 42) to obtain 200/25/25 test/train/validate splits.

Table A1. dataset size and data partitioning

| Data set name | test (machinery etc) | Training & Validation |

|---|---|---|

| LIAR | 461 | 3681 |

| BBH-Navigate | 200 | 50 |

| WSC | 150 | 50 |

| AGIEval-MATH | 256 | 232 |

| GPQA | 198 | 250 |

| MT-bench | 80 | 0 |

WSC

The Winograd Schema Challenge (WSC) (Levesque et al., 2012) is a benchmark designed to evaluate a system's ability to perform commonsense reasoning by parsing pronoun references in context. In our experiments, we follow the approach of Yan et al. (2024) and sample portions from the original dataset as a test set.

AGIEval-MATH

AGIEval-MATH (Zhong et al., 2024) is a subset of the AGIEval benchmarks focusing on mathematical problem solving tasks. It includes a variety of mathematical problems designed to assess reasoning and computational skills. In our experiments, we use level 5 problems as the test set and level 4 problems as the training and validation set.

GPQA

GPQA (Rein et al., 2023) is a dataset designed to assess the performance of language models on graduate-level problems in a variety of disciplines, including biology, physics, and chemistry. In our experiments, we use the GPQA-Diamond subset as a test set while constructing our training and validation sets from problems that exist only in GPQA-main (i.e., those that exist in GPQA-main but not in GPQA-Diamond).

MT-bench

MT-bench (Zheng et al., 2023) is a multi-task benchmark designed to evaluate the generalization ability of language models on a variety of tasks including text categorization, summarization, and question and answer. In our experiments, we selected writing, role-playing, and humanities tasks from MT-Bench. These validation questions are provided in the Supplementary Material.

A.3.2 Configuration

In our experiments, we configured different optimization frameworks to keep their optimization costs as consistent as possible. These frameworks typically allow setting a number of parameters to tune the optimization cost, including the number of iterations and the number of hints generated per iteration.

APE

APE uses a three-round iterative optimization process to select the top 10% (ratio = 0.1) cues in the current pool as elite cues in each round. To maintain the diversity and size of the cue pool, variant sampling is used to mutate these elite cues to keep the total number of cues at 50. Following the setup in the original paper (Zhou et al., 2023), the optimization process does not include sample-specific execution results to guide LLM cue optimization. Instead, performance scores are obtained by evaluating the cues over the entire training set.

OPRO

OPRO uses a 10-round iterative optimization process that generates 10 candidate cues per round.OPRO evaluates cue performance on the complete training set and filters based on the evaluation scores.OPRO does not maintain a fixed-size pool of cues, but instead generates new candidates directly based on the current best cue in each round. The optimization direction is guided by performance evaluation on the complete training data.

PromptAgent

Except for the Liar dataset, where we sampled 150 data on both the training and validation sets, the other datasets follow the sizes specified in Table A1.PromptAgent uses the Monte Carlo Tree Search (MCTS) framework to optimize prompts. It starts with an initial cue and generates new candidates based on model error feedback. The process is guided by the use of benchmark evaluations on a sampled training set to identify high-return paths for improving task performance. Finally, we choose to test on the first 5 cues that perform best on the validation set and select the best one.Key parameters of MCTS include an expansion width of 3, a depth limit of 8, and 12 iterations.

PromptBreeder

In our implementation of PromptBreeder, we configured the system to be initialized with 5 variant cues and 5 thinking styles. The evolutionary process runs for 20 generations, with each generation performing 20 evaluations on a randomly sampled training example. The optimization model defaults to Claude-3.5-Sonnet and the execution model defaults to GPT-4o-mini.

TextGrad

All datasets follow the sizes specified in Table A1 except the Train&Validate set for the Liar dataset, which is reduced to 50 samples.TextGrad uses a three epoch optimization process, with three steps per epoch (epoch_{-3}, steps_{-3}), to perform stochastic gradient descent using a batch size of three. At each step, TextGrad generates gradients via feedback from the optimizer LLM (Claude-3.5-Sonnet) to update the system cues. The framework maintains a validation-based reduction mechanism - if the updated cue performs worse on the validation set than the previous iteration, the update is rejected and the cue is restored to its previous state. The optimization process is guided by evaluating the cues using Claude-3.5-Sonnet as the evaluation LLM, while the actual task execution uses GPT-4o-mini as the execution LLM.Our experimental configurations follow the cue optimization settings provided in the official TextGrad repository (Yuksekgonul et al., 2024).

SPO

SPO optimizes by performing 10 iterations per task, with 3 questions (without answers) randomly selected from the pre-partitioned Train&Validate dataset for each iteration. The optimization model defaults to Claude-3.5-Sonnet, the evaluation model defaults to GPT-4o-mini, and the execution model defaults to GPT-4o-mini.Notably, SPO validates its capability by achieving effective cued optimization using only questions without real answers.

A.3.3 Baseline prompts

In this section, we provide baseline cues for comparison purposes. Note that for all cue optimization efforts that require an initial iteration of cues, we always provide the COT cues shown below.

Make sure the response ends with the following format: answer.

A.3.4. Tips for SPO optimization

In this section, we show the optimization hints we obtained in our main experiments, where Claude-3.5-Sonnet serves as the optimization model and GPT-4o-mini serves as the evaluation and execution model.

GPQA Tips

Please follow the guidelines below to answer questions efficiently and effectively:

- Read the entire question carefully, identifying all relevant information and key concepts.

- Select the most appropriate problem-solving method based on the type of problem.

- Follow the steps below to solve the problem:

a. Statements of any relevant formulas, principles or assumptions

b. Show all necessary calculations or conceptual analyses

c. Evaluate all answer choices, explaining why the wrong choice is wrong (if relevant) - Organize your response according to the following structure:

[Analysis]

Brief statement of the main issue and key messages (2-3 sentences maximum)

[Solution]

- Step-by-step presentation of your work, including all relevant calculations and reasoning

- Provide clear, logical explanations of conceptual issues

[Conclusion]

State the final answer in one clear sentence

- Briefly explain why this answer is correct and the other answers are wrong (if applicable)

Adapt this structure to different problem types, prioritizing clarity and simplicity. Make sure your response addresses all aspects of the problem and demonstrates a clear process for solving it.

BBH-Navigate Tips

Follow the steps below to analyze the given instructions:

- State the initial conditions:

- Starting point: (0, 0)

- Initial direction: positive x-axis (unless otherwise noted)

- Use the coordinate system:

- x-axis: left (-) and right (+)

- y-axis: backward (-) and forward (+)

- Analyze each step:

- For vague instructions (e.g., "take X steps" without directions), assume forward movement

- Update coordinates after each move

- Brief explanation of any assumptions made

- After analyzing all the steps:

- Summarize the total movement in each direction

- Stating the final position

- Compare the final position with the starting point:

- Calculate the distance from (0, 0)

- Provide succinct reasoning labeled "Reasoning:"

- Explain key moves and their effect on position

- Provide reasons for your conclusions

- State your final answer, labeled "Final Answer:"

End your response with the following XML format: [Yes or No]

Ensure that your analytics are adapted to all problem types, dealing with both specific and vague instructions.

LIAR Tips

Carefully analyze the given statement(s) and follow the steps below for each question:

- Consider the statement, the speaker's background (if provided), and the context.

- Research and cite relevant facts and figures related to the statement.

- Assess the validity of the statement in the light of the available evidence.

- Consider the potential bias or motivation of the speaker.

For each statement, organize your analysis according to the following structure:

Fact-checking: [Key Fact 1 with sources] [Key Fact 2 with sources] [Add more as needed] Analysis: [Provide a brief analysis of the validity of the statement] Scoring: [Use the following scale]

Uncertainty: [low/medium/high - based on the quality and quantity of available evidence] Summary: [one-sentence conclusion on the validity of the statement]

If there is not enough information to make a decision, clearly state and explain what additional information is needed.

End each analysis with an XML tag: [Yes or No]

After analyzing all statements, provide an overall summary of your findings.

Make sure your response is objective, based on verifiable information, and cites sources for all key facts used in the analysis.

AGIEval-MATH Tips

- Quickly identify key information

- Designing the fastest solution strategy

- Perform the least amount of calculations

- Double-check your answers

Rules:

Use shortcuts and estimates wherever possible

Omit detailed explanations

Omit units in the final answer

- Always present the final answer in the following form:\boxed{}

Prioritize speed and accuracy. Manage time effectively. Strive for the shortest effective solution. Verify answer formatting before submitting. If you encounter difficulties, rather make an educated guess than take too much time.

A.4 Case studies

A.4.1 Optimization of trajectories

We show the cue optimization trajectory of GPT-4o-mini on the BBH-navigate dataset, where it serves as the optimization model, evaluation model, and execution model. This includes the success of each iteration relative to the best cue at the time, and the corresponding cue content.

Iteration 0 - Success

Please think step-by-step. Ensure that the response ends with the following format: [Yes or No].

Iteration 1 - Failure

Please analyze the following questions step-by-step to ensure that your reasoning is clear. At the end of your analysis, provide the final answer in the specified XML format: (Yes or No).

Question: [insert question here]

Iteration 2 - Failure

Please analyze the given instructions step-by-step and clearly summarize your reasoning. Make sure that the response ends in the following XML format: (yes or no).

Iteration 3 - Failure

Please thoroughly analyze the steps provided and record each action. Make sure your reasoning is clear and logical throughout. End with the following XML format: (Yes or No).

Iteration 4 - Success

Please approach the question with a clear, logical reasoning process that breaks it down into step-by-step steps. Before stating your final answer, please clearly summarize your findings, using the following XML format: [Yes or No]. For example, if the question is about whether or not to return to the starting point after a series of actions, describe each action in detail, update your position accordingly, and conclude whether or not you returned to the starting point.

Final answer: [Yes or No]

Iteration 5 - Success

Please analyze the problem thoroughly by breaking it down step-by-step. Clearly outline each movement or action, updating your position or status accordingly. Finally, summarize your findings to determine if you have returned to the starting point or achieved the stated outcome. Finally, clearly present the answer using the following XML format: [Yes or No]. For example, if asked if you returned to the starting point after a series of actions, clearly record each action, track your position, and specify whether you returned to the starting point.

Final answer: [Yes or No]

Iteration 6 - Success

Please analyze the problem methodically and break it down into clear, consecutive steps. Define the starting point and the direction of each action, updating the position after each step accordingly. Finally, summarize the journey and clearly indicate whether the final position returns to the starting point or achieves the desired result. Use the following XML format to clearly present the final answer: [yes or no]. For example, if asked if you returned to the starting point after a series of actions, clearly record each step, track your position, and specify whether you returned to the starting point.

Final answer: [Yes or No]

Iteration 7 - Failure

Please evaluate the problem step-by-step, clearly outlining each action and tracking the resulting position. At the end of your analysis, summarize the journey and indicate whether the final position returns to the starting point or meets the expected criteria. Ensure that the final answer is clearly presented in the following XML format: [Yes or No]. For example, if asked if you returned to the starting point after a series of actions, you should succinctly record each step, track your position, and indicate if you returned to the starting point.

Final answer: [Yes or No]

Iteration 8 - Success

Please analyze the problem methodically and break it down into concise actions. Start with a defined position and track the impact of each step on your position. After detailing each action, summarize the overall journey and clearly indicate whether the final position returns to the starting point or achieves the desired outcome. Use the following XML format to clearly present the final answer: yes or no. For example, if asked if you returned to the starting point after a series of actions, clearly document each step, accurately track your position, and specify the outcome.

Final answer: [Yes or No]

Iteration 9 - Failure

Please begin by systematically outlining your movements. Starting from a defined position, detail each step you take, including the direction and distance moved. Upon completion of the action, summarize the overall journey and clearly indicate whether your final position returns you to the starting point or achieves the desired result. Make sure to present your actions along with your final answer in the following XML format: yes or no. For example, if asked if you returned to the starting point after a series of movements, you should record each step, accurately track your position, and specify the outcome.

Final answer: [Yes or No]

Iteration 10 - Success

Please thoroughly analyze the provided movement instructions and clearly record each step. Begin by defining your initial position and systematically track your movements, updating your position after each step. Upon completion of the action, summarize your journey and determine if your final position aligns with your starting point or achieves the desired outcome. Use the following XML format to clearly indicate your final answer: yes or no. For example, if the question asks if a series of actions return to the starting point, you should methodically outline each step, accurately track your position, and arrive at a result.

Final answer: [Yes or No]

The success/failure box shows SPO's process of optimizing the BBH-navigation cue through 10 iterations. Successful versions (green) progressively improve the cue to elicit a better thought process, while failed attempts (red) may lead to oversimplified or redundant thinking due to sub-optimal cues. This trajectory shows the trial-and-error improvement mechanism of the system.

Tips for Optimizing Functionality

请逐步思考。

确保响应以以下 XML 格式结束:<answer>[是或否]</answer>。

concern

如果您遵循这些指示,您是否返回起点?始终面向前方。

- 向右走1步。

- 向左走3步。

- 向右走2步。

选项:

- 是

- 否

solution

让我们逐一步分析这些步骤:

1. **起点**:我们从起点开始,面向前方。

2. **第1步**:向右走1步。现在我们位于起点右侧1步的位置。

3. **第2步**:向左走3步。从我们当前的位置(1步右侧),向左移动3步意味着:

- 1步向左使我们回到起点。

- 2步向左使我们位于起点左侧1步的位置。

- 3步向左使我们位于起点左侧2步的位置。

4. **第3步**:向右走2步。从我们当前的位置(2步左侧),向右移动2步意味着:

- 1步向右使我们回到起点左侧1步的位置。

- 2步向右使我们回到起点。

After completing all the steps, we ended up back at the starting point.

Therefore, the answer is:

be

通过比较迭代0的初始提示和迭代10的最终提示的输出结果,“思考过程的迭代优化”变得显而易见。在这种情况下,模型从最初使用文本描述来推理位置演变为最终展示出使用坐标的能力,即使提示中并未明确强调使用坐标。

**A.4.2. 现实应用**

在本节中,我们展示了使用 Claud-3.5-Sonnet 作为优化模型,GPT-4o-mini 作为评估模型,Llama-3-8B 作为执行模型,经过10次迭代后的优化结果,涵盖四个任务:广告设计、社交媒体内容、现代诗歌写作和概念解释。此外,我们还提供了迭代过程中使用的 YAML 配置文件以及从过程中获得的优化提示。

**广告设计**

**优化前的性能**

**提示** = """分析给定的广告任务并创建引人注目的文案。"""

**问题** = "为黑色星期五科技促销活动写电子邮件主题行"

**答案** = """1. **黑色星期五警报:店内有不可错过的科技优惠!**

2. **科技盛宴:黑色星期五顶级品牌高达50%的折扣!**

3. **别错过!为您准备的独家黑色星期五科技优惠**"""

**优化后的性能**

**提示** = """分析给定的广告任务,并根据指定的平台(s)和行业创建引人注目的文案。考虑当前趋势、竞争对手策略以及目标受众的人口统计和心理特征。提供至少3种不同的创意选项,每个选项都有独特的角度或方法。包括您选择的原因,并解释它们如何与品牌声音和营销目标保持一致。整合行业最佳实践和特定平台的格式指南。建议如何为不同的渠道或格式调整文案,包括对语气、风格和结构的特定说明。此外,提供有关视觉元素或多媒体内容增强广告文案效果的建议。"""

**问题** = "为黑色星期五科技促销活动写电子邮件主题行"

**答案** = """**广告任务:** 创建引人注目的电子邮件主题行,针对的是对最新科技产品和优惠感兴趣的技术爱好者。

**目标受众:**

* 人口统计:25-45岁,男女皆可

* 心理特征:科技爱好者、早期采用者和讨价还价猎人

* 兴趣:科技、产品创新和节省开支

**竞争对手策略:**

* 强调折扣和促销

* 突出新产品发布和限时优惠

* 使用紧迫性策略,例如倒计时计时器和稀缺性消息传递

**当前趋势:**

* 移动优先方法

* 个性化和细分

* 讲故事和情感联系

**创意选项:**

**选项1:** "解锁未来:高达50%的顶级科技优惠"

* 角度:专注于获得最新科技的兴奋感和刺激感

* 理由:这个主题行营造了一种 FOMO(害怕错过)的氛围,并强调了销售的价值

* 品牌声音:有趣、充满活力和顽皮

* 营销目标:推动销售和提高品牌知名度

**选项2:** "您的黑色星期五科技盛宴等着您"

* 角度:强调等待被发现的科技优惠宝藏的想法

* 理由:这个主题行营造了一种期待感和好奇心,鼓励读者打开电子邮件

* 品牌声音:友好、亲切和邀请

* 营销目标:推动参与和转化

**选项3:** "别错过:独家黑色星期五优惠给科技爱好者"

* 角度:突出销售独家性和限时性

* 理由:这个主题行营造了一种紧迫感和重要性,让读者感觉自己是特殊群体的一部分

* 品牌声音:自信、权威和独家

* 营销目标:推动转化和建立品牌忠诚度

Pre-optimized ad headlines are generic, such as "Black Friday Alert: Can't Miss Tech Deals in Store!" that lacked specificity and creative depth and failed to highlight the target audience's characteristics or brand personality. Optimized headlines, such as "Unlock the Future: Top Tech Deals Up to 50%," are more engaging and use "unlock the future" to emphasize technological innovation, while combining discounts to inspire curiosity and highlight value, which is more in line with The use of "Unlock the Future" to emphasize technological innovation combined with the discount message not only stimulates curiosity but also highlights the value, which is more in line with the psychological needs of technology lovers.

Iterating templates on the BBH-navigate dataset

Social Media Content

Performance before optimization

draw attention to sth. = """Create compelling tweets.""""

solution = """Tweets. "Good morning, coffee lovers! We're giving away a free voucher for a cup of our specialty coffee to the first 10 people who share their favorite way to drink coffee in the comments.# Coffee # Coffee Addiction # Morning Power"

This tweet is designed to engage with your audience in several ways:

- call to action: The tweets asked followers to share how they like their coffee best, encouraging them to engage and interact with the brand.

- incentives: The promise of free coffee is a great incentive for people to engage with the tweets and share ideas.

- tab (of a window) (computing): The use of related hashtags such as # Coffee, # Coffee Addiction and # Morning Power helps to reach a wider audience and attract new followers.

- Personalized contact: The tweet starts with a friendly "good morning" and refers to the audience as "coffee lovers," making it feel more personal and intimate.

By responding to this tweet, coffee brands can start a conversation with their followers, gather feedback, and build a sense of community around the brand.""""

Optimized performance

draw attention to sth. = """Create compelling social media content based on a given topic or brand. Follow the guidelines below:

- Identify the target audience and platform (if specified).

- State the primary goal of the content (e.g., visibility, engagement, promotion).

| 3. Select the appropriate content format (e.g., text, images, video). | |

| 4. Develop a unique angle or concept related to current trends. | |

| 5. Create a catchy headline or opening statement (maximum 10 words). | |

| 6. Develop concise, platform-appropriate content (50-100 words). | |

| 7. Include 2-3 relevant tags. | |

| 8. Add a clear call to action. | |

| 9. Suggest a visual element or enhancement. | |

| Provide a piece of social media content according to these guidelines and adapt it to a given topic or brand. | |

| Focus on creating authentic, relevant content that resonates with your target audience and aligns with current social media trends. If specific requirements are not given, please make reasonable assumptions and explain your choices."""" | |

| Question = "Create an engagement tweet for a coffee brand" | |

| Answer = | |

| **Target audience:** Coffee lovers, young people (18-35 years old) | |

| **Platform:** Twitter | |

| **Primary objective:** Participation | |

| **Format of content:** Text | |

| **Unique Perspective:** Sharing a touching moment that coffee lovers can identify with | |

| **Catchy Headline:** "When Coffee is the Only Adult in the Room" | |

| **Content:** "Who hasn't had mornings where coffee is the only thing keeping everything in order? Share your most chaotic morning story and let's lament # Coffee Saves the Day # Morning Power" | |

| **Tagged:** # Coffee Saves the Day # Morning Motivation | |

| **Call to Action:** Share your own messy morning stories in the comments! | |

| **VISUAL ELEMENTS:** A fun mod or GIF of a person surrounded by chaos with a cup of coffee in the center. | |

| This tweet was designed to engage with coffee lovers by sharing a touching moment that many can identify with. The use of humor and a lighthearted tone made it easy to connect with the audience. The hashtags # Coffee Saves the Day and # Morning Power were both popular and relevant, increasing the visibility of the tweet. | |

| The call to action encourages users to share their own stories, which fosters a sense of community and encourages participation. | |

| II | |

Pre-optimized tweets use freebies as bait with straightforward content that lacks emotional resonance, such as "Good morning, coffee lovers! We're giving away a free cup of..." . The optimized tweets were made more relevant and interactive through the humorous expression "when coffee is the only adult in the room", subtly integrating coffee into everyday life scenarios, e.g. "Who hasn't had one of those mornings when coffee is the only thing that keeps things going? " It succeeds in inspiring user engagement and emotional connection.

Iteration template on the BBH-navigate dataset:

Before performance optimization

draw attention to sth. = """Create poetry that conforms to the style and format of the request.""""

concern = "Write a modern sonnet about climate change"

solution = """In the blazing skies, we see warnings of rising temperatures, an ancient legend of the Earth, a victim of our unbridled control, as carbon emissions spread like a plague.""""

Polar ice, a fragile throne melting Oceans, rising, threaten to atone for human greed, a debt we cannot repay With climate change, a crisis is overturned

But still we hesitate, addicted to the whirlpool of denial While scientists cry and pray in anguish To act quickly, before it's too late To save the planet from our reckless ways

Yet we still hope that in love and human strength the tide will be turned and a new light brought."""

Performance Optimized

draw attention to sth. = """Create poetry that pushes traditional boundaries, breaks conventional expectations, and follows the following enhanced guidelines:

- Deconstruct and re-imagine the requested poetic form, pushing its structural limits while maintaining its essence.

- Approach the subject matter from a completely unexpected perspective, for example from the point of view of an inanimate object, an abstract concept, or a microscopic entity.

- Surreal, edgy imagery is used to challenge the reader's perception of reality while still conveying the emotional truth at the heart of the subject matter.

- Integrate unconventional elements such as mathematical symbols, scientific notation, or programming languages to create a unique linguistic landscape.

- Experiment with typography, line breaks, and white space to create visual poetry that enhances thematic exploration.

- Combining a flux-like sensory experience that blends the different senses in an amazing way to evoke a strong emotional response.

- Ending with an ending that is simultaneously disorienting and enlightening leaves the reader with a profound sense of transformation.

Before writing, imagine the most radical way to approach the subject matter and poetic form. The goal is to create a piece that challenges the definition of poetry while still resonating emotionally on a deep level.

Poetry is provided only in your response and does not contain any additional explanation or analysis.""""

concern = "Write a modern sonnet about climate change"

solution = """In fractal whispers, the earth's pulse beats slowly As dark stains of carbon seep into the snow A calculus of despair, equations grow In exponential decay, the future's sadness

The thermodynamic dance of life unfolds as the cold hand of entropy seizes the planet's heart The once blue sky is now bound, a heavy mass of particulate haze, blocking the sunlight

In this eerie twilight, shadows linger The ghost of a glacier whispers, "This is home" A requiem for ice, memories are stored In frozen echoes, explore the past

However, in this desolate landscape, a spark remained

A quantum leap of hope that love sustains"

The pre-optimized sonnet, although neatly structured, lacks novelty in its use of traditional imagery such as "rising temperature" and "melting ice". The optimized poem introduces scientific concepts such as "fractal whispers" and "thermodynamic entropy," such as "In fractal whispers, the earth's pulse beats slowly" and "The thermodynamic entropy of life". "The thermodynamic dance of life unfolds," using interdisciplinary metaphors to give the climate theme a deeper philosophical reflection that enhances the poem's artistic and intellectual depth.

Iteration template on the BBH-navigate dataset:

Conceptual explanations

Before performance optimization

draw attention to sth. = """Explain complex concepts in layman's terms.""""

concern = "Explaining mRNA Vaccines with Cooking Analogies."

solution ="""Imagine you are a master chef and you are preparing a delicious dish for your guests. You have all the ingredients, but you need to make sure they are in the right order and combination to create the perfect flavor and texture.

In the world of mRNA vaccines, the "dish" is the immune system's response to a particular virus or disease. The "ingredients" are genetic instructions (mRNA) that tell the body how to make specific proteins, which are then used to trigger an immune response.

It works as follows:

Recipe Selection: mRNA vaccines are like choosing a recipe book. You choose a specific mRNA sequence that corresponds to the protein you want to make, which is the key to triggering an immune response against a particular virus or disease.

2. Ingredients Preparation: The mRNA sequence is like a set of instructions that tell the body how to make a specific protein. mRNA is prepared in the lab, just like measuring out the correct amount of each ingredient (amino acid) to make a protein.

3. deliveries: mRNA is delivered to the body, just as you would add ingredients to a dish. In this case, the mRNA is packaged in a tiny particle called a lipid nanoparticle (LNP), which helps it reach the cells in the body.

4. Cellular Cooking: Once the mRNA reaches the cell, it is like adding ingredients to a pot. The cell reads the mRNA instructions and begins to make a specific protein. This protein is then displayed on the surface of the cell like a flag to warn the immune system of the presence of a foreign invader.

- immune response: The immune system reacts to proteins by producing antibodies and activating immune cells, just as a chef reacts to a delicious aroma to add the final touches to a dish. The immune system then remembers how to react to that particular protein, so if it encounters it again in the future, it can quickly mount a defense.

In short, mRNA vaccines are like recipes for the immune system. They provide the body with genetic instructions to make specific proteins, which trigger an immune response and help protect against disease.""""

Performance Optimized

draw attention to sth. = """Explain complex concepts in layman's terms.""""

concern = "Explaining mRNA Vaccines with Cooking Analogies."

solution = """define: mRNA vaccines are vaccines that use a type of genetic material called messenger RNA (mRNA) to teach our cells how to fight disease.

analogies: Imagine you're a master chef and you want to create a new recipe for a delicious dish. You wouldn't send your head sous chef to the store to buy all the ingredients and then leave them to figure out how to assemble the dish on their own. Instead, you would give them a recipe card that outlines the exact steps to follow. Similarly, mRNA vaccines work by providing our cells with a recipe card - mRNA - that contains instructions for making specific proteins that help our immune system fight disease.

Key components::

- mRNA: A recipe card containing instructions for making a specific protein. Think of it as a blueprint for your cells to follow.

- cytoplasm: The sous chefs in our bodies, they receive the mRNA recipe card and use it to make proteins.

- carbohydrate: Our cells produce the ultimate dish, which helps our immune system recognize and fight disease.

recount: When we get an mRNA vaccine, our cells pick up the recipe card (mRNA) and use it to make a specific protein. This protein then triggers an immune response that teaches our body how to recognize and fight disease. It's like our cells are following a recipe card to create a delicious dish that helps us stay healthy!

Interactive elements: Can you think of a time when you received a recipe from someone and had to follow it to create a dish? How did it make you feel? Imagine your cells receiving a recipe card from an mRNA vaccine and using it to make proteins that help fight disease. How would that make you feel?

visual representation: A simple diagram showing the mRNA molecule, the cell receiving the mRNA, and the protein being produced.

limitations: mRNA vaccines are still a relatively new technology, and more research is needed to understand their long-term effects and potential side effects.

reach a verdict: mRNA vaccines are a game changer in the fight against disease. By providing our cells with a recipe card, we can teach our immune system how to recognize and fight disease in a more efficient way. What do you think the future holds for mRNA vaccines?"""

Pre-optimized explanations, while using culinary analogies, are lengthy and lack focus, e.g., "Imagine you are a master chef..." . The optimized version simplifies complex concepts into easy-to-understand steps by using core metaphors such as "recipe cards", e.g., "mRNA sequences are like a set of instructions that tell the body how to make a specific protein", and by using The use of "chef-sous-chef" character interactions makes the explanations more vivid and intuitive, helping readers quickly grasp the key points.

Iteration template in BBH-navigate dataset Hint: | Explain complex concepts in layman's terms.

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related posts

No comments...