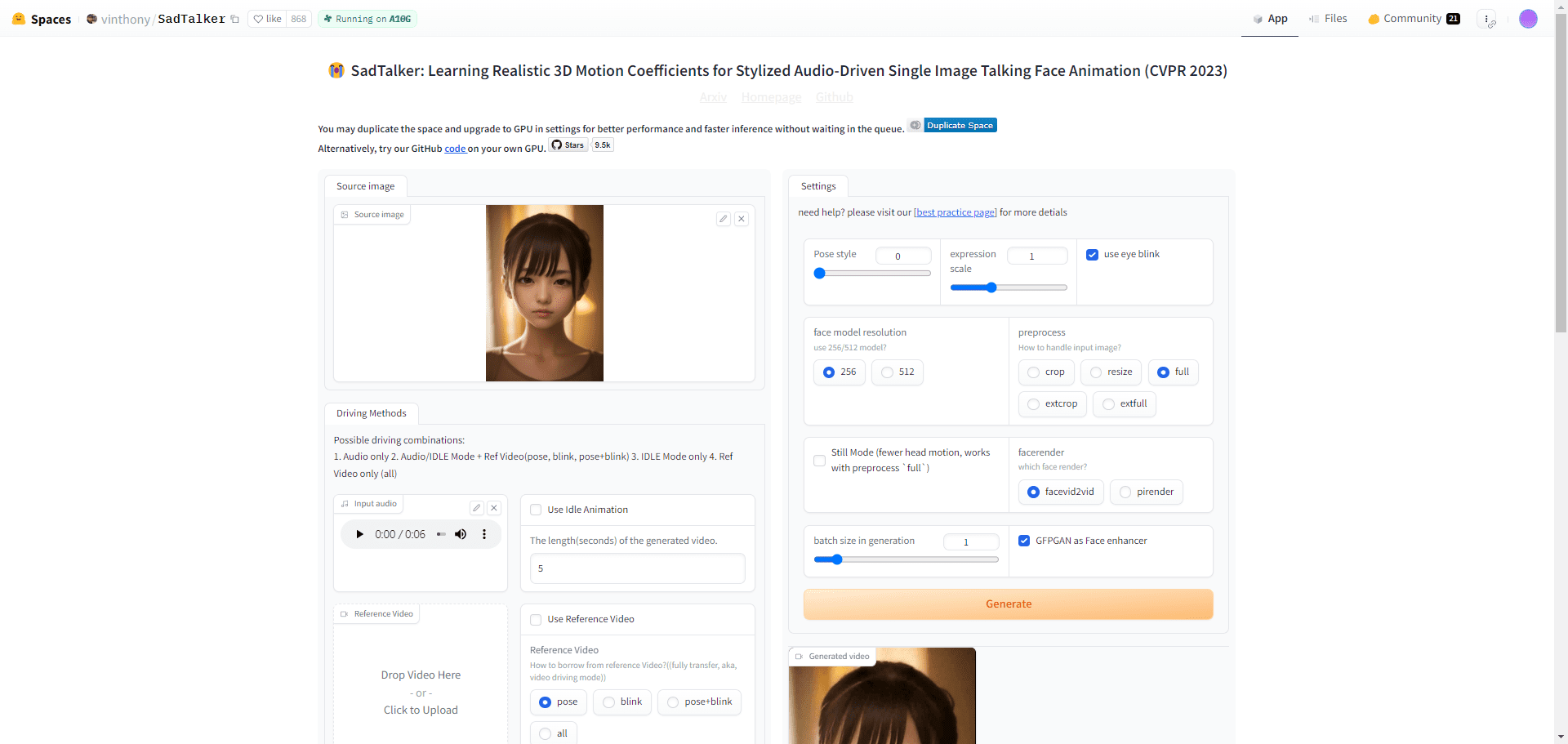

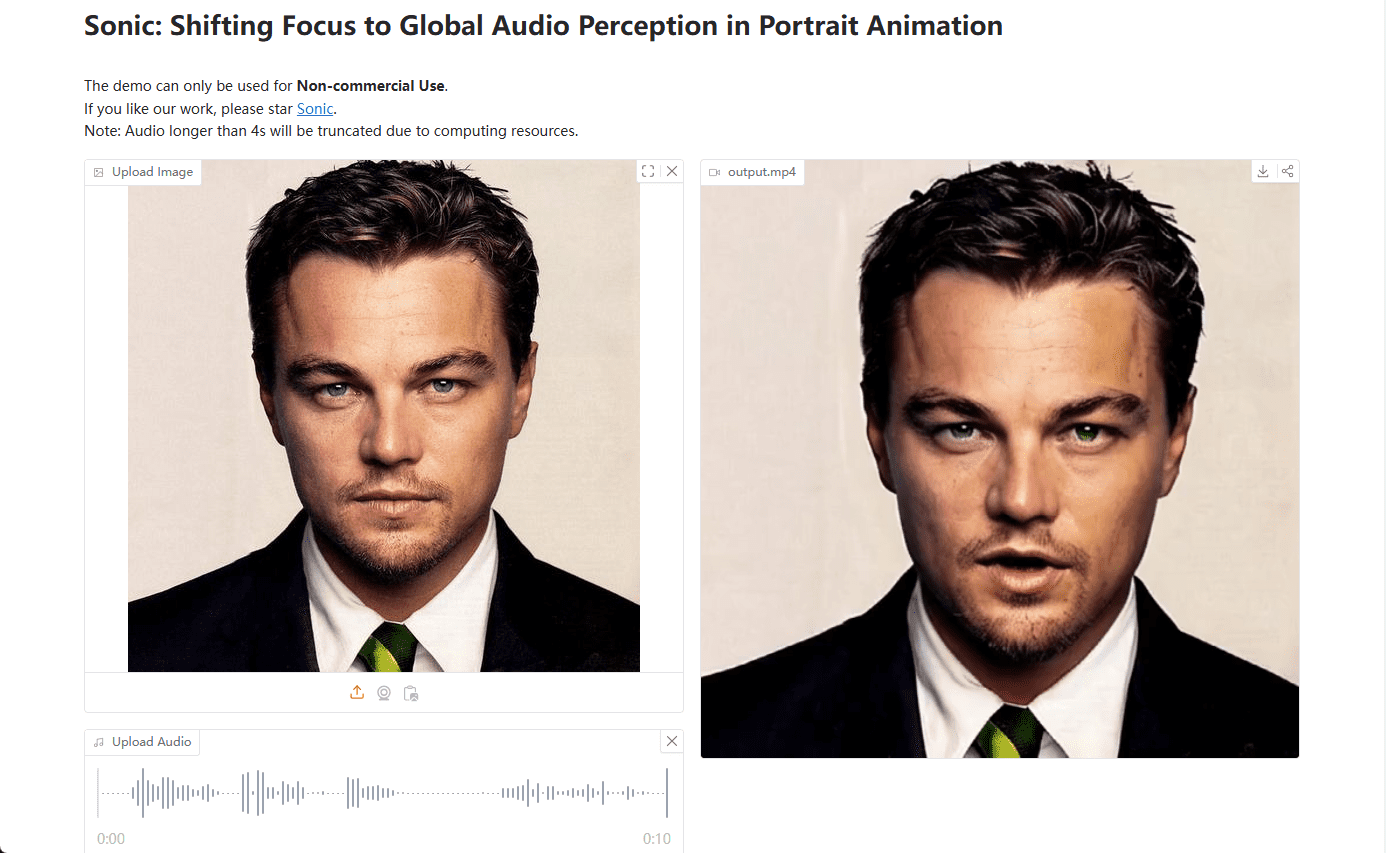

Sonic: Audio-driven portrait animation that turns portrait images into digital-human videos with vivid facial expressions

General Introduction

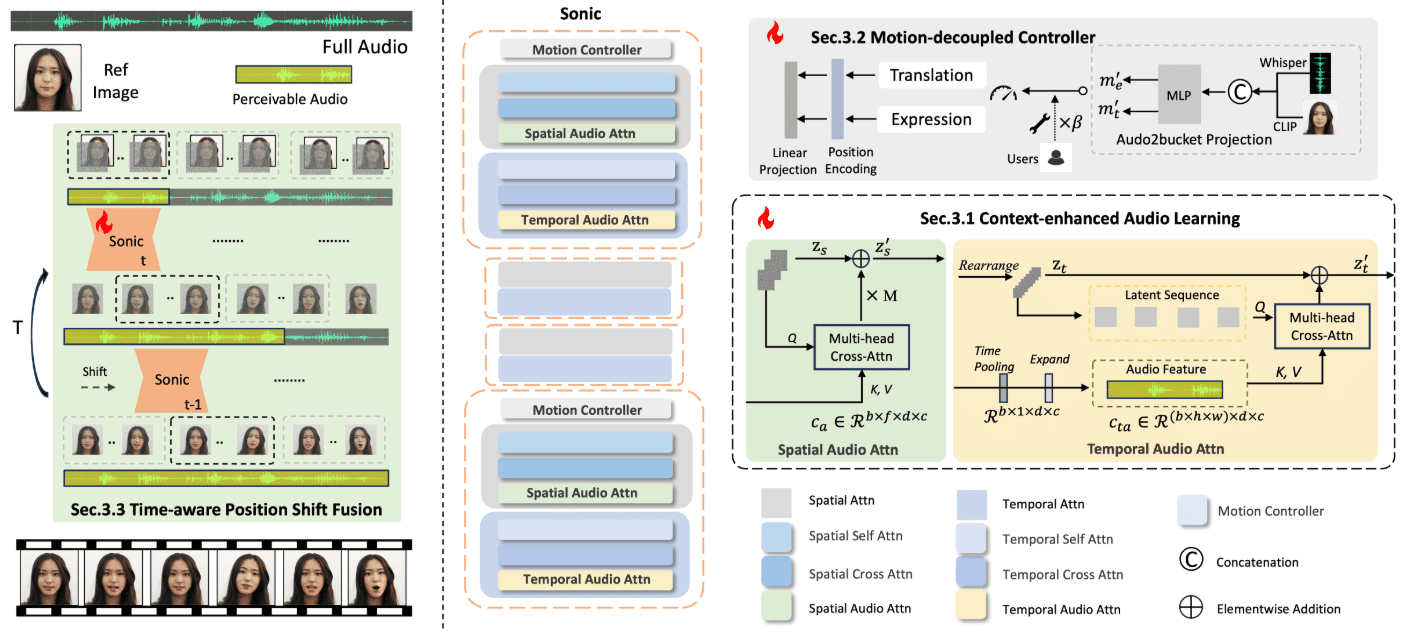

Sonic is an audio-driven portrait animation platform built around global audio perception: it generates vivid portrait animations driven by audio. Developed by researchers from Tencent and Zhejiang University, it uses audio information to control facial expressions and head movements, producing natural, smooth animated videos. Sonic's core techniques are context-enhanced audio learning, a motion-decoupled controller, and a time-aware position shift fusion module. Together, these let Sonic generate long, stable, realistic videos from images in different styles and from many types of audio input.

The code and weights for this project were to be released after passing an internal open-source review; they have since been released.

Demo: https://huggingface.co/spaces/xiaozhongji/Sonic

Function List

- Context-enhanced audio learning: Extracts audio knowledge from long temporal segments, providing prior information for facial expressions and lip movements.

- Motion-decoupled controller: Controls head movement and expression movement independently for more natural animation.

- Time-aware position shift fusion: Fuses global audio information to generate long, stable videos.

- Versatile video generation: Supports images in different styles and multiple output resolutions.

- Comparison with open- and closed-source methods: Demonstrates Sonic's advantages in expressiveness and natural head movement.
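To make "extracting audio knowledge from long time segments" more concrete, here is a minimal sketch. It is not Sonic's code: it assumes per-frame audio feature vectors have already been extracted (for example, by a speech encoder) and shows only the windowing step, in which each video frame is paired with the features of its neighbors within a hypothetical `radius`. The model therefore sees audio before and after the current frame instead of a single aligned slice. The function name `context_windows` is illustrative.

```python
from typing import List, Sequence

Feature = List[float]


def context_windows(features: Sequence[Feature], radius: int) -> List[List[Feature]]:
    """For each frame i, collect the features of frames i - radius .. i + radius.

    Hypothetical helper illustrating long-context audio conditioning.
    Indices outside the clip are clamped to the first/last frame (edge padding).
    """
    if radius < 0:
        raise ValueError("radius must be non-negative")
    n = len(features)
    windows: List[List[Feature]] = []
    for i in range(n):
        window = []
        for j in range(i - radius, i + radius + 1):
            clamped = min(max(j, 0), n - 1)  # repeat edge frames as padding
            window.append(list(features[clamped]))
        windows.append(window)
    return windows


if __name__ == "__main__":
    feats = [[0.0], [1.0], [2.0], [3.0]]
    print(context_windows(feats, radius=1)[0])  # [[0.0], [0.0], [1.0]]
```

A larger `radius` gives each frame more audio context at the cost of larger model inputs.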

How to Use

Installation process

The code and weights were to be uploaded to GitHub once Sonic completed its internal open-source review. Users can then install and use Sonic by following these steps:

- Visit Sonic's GitHub page: https://github.com/jixiaozhong/Sonic

- Clone the repository and enter its directory: `git clone https://github.com/jixiaozhong/Sonic.git`, then `cd Sonic`

- Install the dependencies: `pip install -r requirements.txt`

- Download the pre-trained model weights and place them in the directory specified by the repository.
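The command-line steps above can also be scripted. The Python sketch below is a convenience wrapper of our own, not part of Sonic: it builds the `git clone` and `pip install -r requirements.txt` commands from the list and runs them with `subprocess.run(..., check=True)` only when `dry_run=False`. It calls pip through `sys.executable -m pip`, so packages are installed into the interpreter that runs the script. The weight download is left out because its target directory is defined by the repository, not by this article. `install_commands` and `run_install` are hypothetical names.

```python
import subprocess
import sys
from typing import List

# Repository URL taken from the installation steps above.
REPO_URL = "https://github.com/jixiaozhong/Sonic.git"


def install_commands(target_dir: str = "Sonic") -> List[List[str]]:
    """Return the clone and dependency-install commands as argument lists."""
    return [
        ["git", "clone", REPO_URL, target_dir],
        # Use the current interpreter's pip so packages land in the active environment.
        [sys.executable, "-m", "pip", "install", "-r", f"{target_dir}/requirements.txt"],
    ]


def run_install(target_dir: str = "Sonic", dry_run: bool = True) -> None:
    """Print each command; unless dry_run is True, also run it and stop on the first failure."""
    for cmd in install_commands(target_dir):
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_install()  # dry run: only prints the commands
```

Calling `run_install(dry_run=False)` executes the commands; because `check=True` raises `CalledProcessError` on a non-zero exit code, a failed clone stops the script before pip runs.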

Usage Process

- Prepare the input data: Collect the portrait images and audio files you want to turn into animations.

- Run the generation script: Start generation with the provided script, for example: `python generate.py --image input.jpg --audio input.wav`

- Adjust parameters: Tune the parameters of the generation script as needed to get the best results.

- View the output: The generated video is saved to the specified output directory.
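When there are several clips to animate, a small driver script can pair inputs and build the commands. The sketch below assumes each portrait image sits next to a `.wav` file with the same stem (for example `alice.jpg` and `alice.wav`). The script name `generate.py` and the `--image`/`--audio` flags are copied from the example above and may not match the released repository, so check its README first. `wav_duration_seconds`, `build_command`, `pair_inputs`, and `run_batch` are hypothetical helpers; the audio check uses only Python's standard `wave` module, which reads uncompressed PCM WAV files.

```python
import subprocess
import sys
import wave
from pathlib import Path
from typing import List, Tuple

# Assumed accepted image types; adjust to what the generation script supports.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def wav_duration_seconds(path: Path) -> float:
    """Return the duration of a PCM WAV file, read from its header."""
    with wave.open(str(path), "rb") as wf:
        return wf.getnframes() / wf.getframerate()


def build_command(image: Path, audio: Path) -> List[str]:
    """Build the generation command from the article's example (script name and flags assumed)."""
    if image.suffix.lower() not in IMAGE_SUFFIXES:
        raise ValueError(f"unsupported image type: {image}")
    if audio.suffix.lower() != ".wav":
        raise ValueError(f"expected a .wav file: {audio}")
    return [sys.executable, "generate.py", "--image", str(image), "--audio", str(audio)]


def pair_inputs(folder: Path) -> List[Tuple[Path, Path]]:
    """Match each image in `folder` with the .wav file that shares its stem."""
    pairs = []
    for image in sorted(folder.iterdir()):
        audio = image.with_suffix(".wav")
        if image.suffix.lower() in IMAGE_SUFFIXES and audio.exists():
            pairs.append((image, audio))
    return pairs


def run_batch(folder: Path) -> None:
    """Report each clip's audio length, then run the (assumed) generation command for it."""
    for image, audio in pair_inputs(folder):
        print(f"{image.name}: {wav_duration_seconds(audio):.1f} s of audio")
        subprocess.run(build_command(image, audio), check=True)
```

Run it from the repository root, for example `run_batch(Path("inputs"))`. Images without a matching `.wav` file are skipped rather than reported as errors.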

Feature Details

- Context-enhanced audio learning: By learning from long stretches of audio, Sonic captures subtle changes in the audio and produces more natural facial expressions and lip movements.

- Motion-decoupled controller: The controller handles head motion and expression motion separately, which makes the generated animation more realistic. Users can refine the animation by adjusting the controller parameters.

- Time-aware position shift fusion: By fusing global audio information, this module keeps the generated video stable over long durations. Users can control the smoothness and stability of the video by adjusting the time-window parameters.

- Versatile video generation: Sonic supports images in different styles (e.g., cartoon, realistic) and multiple output resolutions. Users can choose image and audio inputs to suit their needs and obtain the video they expect.
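The description of time-aware position shift fusion leaves the mechanism abstract. One way to picture it, under simplifying assumptions: a long video is denoised in fixed-length clip windows. If the window boundaries stayed in the same place at every denoising step, frames on either side of a boundary would never be processed together, a common cause of visible seams. Shifting where the windows start at each step moves those boundaries around. The sketch below only computes the per-step window layout; it is not Sonic's fusion code. `window` and `shift` are hypothetical stand-ins for the time-window parameters mentioned above, and a real model would need to pad the short leading and trailing windows.

```python
from typing import List, Tuple


def shifted_windows(num_frames: int, window: int, shift: int, step: int) -> List[List[int]]:
    """Split frame indices into clip windows whose start position moves with the step.

    Hypothetical sketch: at denoising step `step`, windows start at
    offset = (step * shift) % window. Frames before the offset form a short leading
    window, and the remainder may form a short trailing one. Each frame lands in
    exactly one window per step.
    """
    if num_frames <= 0 or window <= 0:
        raise ValueError("num_frames and window must be positive")
    offset = (step * shift) % window
    starts = list(range(offset, num_frames, window))
    if offset > 0:
        starts.insert(0, 0)  # leading partial window [0, offset)
    starts.append(num_frames)
    return [list(range(a, b)) for a, b in zip(starts, starts[1:])]


def boundaries(windows: List[List[int]]) -> List[Tuple[int, int]]:
    """Adjacent frame pairs that fall in different windows (the potential seams)."""
    return [(left[-1], right[0]) for left, right in zip(windows, windows[1:])]


if __name__ == "__main__":
    for step in range(2):
        layout = shifted_windows(num_frames=8, window=4, shift=2, step=step)
        print(step, layout, boundaries(layout))
```

With 8 frames, 4-frame windows, and `shift=2`, step 0 has a seam between frames 3 and 4. At step 1 those two frames share the window `[2, 3, 4, 5]`, and the seams have moved to (1, 2) and (5, 6), so no boundary stays fixed across the whole denoising process.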

Sonic One-Click Installer

Baidu Netdisk: https://pan.baidu.com/share/init?surl=iCR4l4ClSRZswm1E2K_NNA&pwd=8520

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
