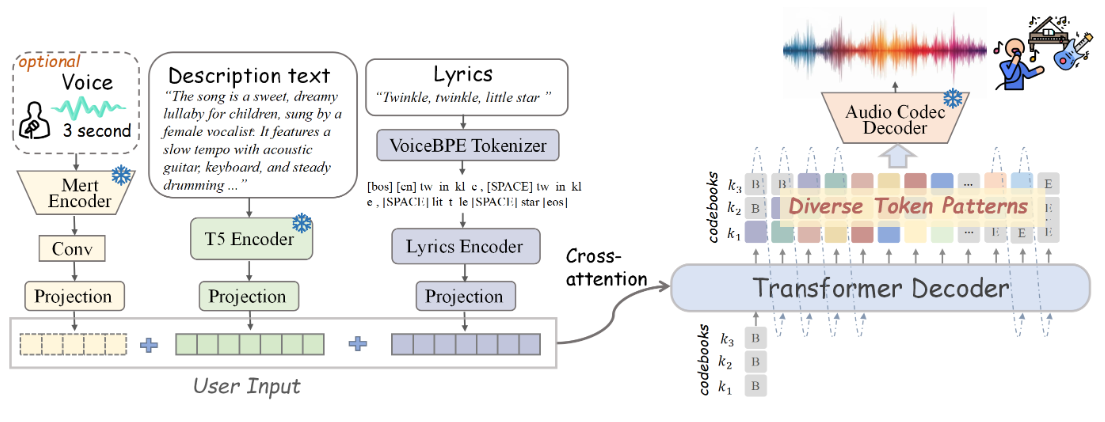

SongGen: A Single-Stage Autoregressive Transformer for Automatic Song Generation

General Introduction

SongGen is an open-source, single-stage autoregressive Transformer model for text-to-song generation. From text input, it generates songs that contain both vocals and accompaniment. SongGen offers fine-grained control over a wide range of musical attributes, including lyrics, instrument descriptions, musical style, mood, and timbre. Users can also supply an optional three-second reference audio clip for voice cloning. SongGen supports two output modes: mixed mode generates a single track with vocals and accompaniment mixed together, while dual-track mode generates separate vocal and accompaniment tracks for downstream use. The project also provides an automated data preprocessing pipeline with an efficient quality-control mechanism, intended to encourage community engagement and future research.

Function List

- Text-to-Song Generation

- Supports fine-grained control of lyrics, instrument descriptions, musical style, mood and timbre

- Voice cloning from a three-second reference audio clip

- Mixed-mode and dual-track-mode output

- Automated data preprocessing pipeline

- Open source model weights, training code, annotated data and processing pipeline
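SongGen takes the musical attributes as a free-form text description passed alongside the lyrics. As a minimal sketch, the hypothetical helper `build_description` below assembles such a description from individual attributes; the sentence template is an assumption for illustration, not a prompt format that SongGen prescribes.

```python
from typing import Optional, Sequence


def _join(items: Sequence[str]) -> str:
    """Join items as 'a', 'a and b', or 'a, b and c'."""
    items = list(items)
    if len(items) <= 1:
        return "".join(items)
    return ", ".join(items[:-1]) + " and " + items[-1]


def build_description(
    style: Optional[str] = None,
    mood: Optional[str] = None,
    instruments: Sequence[str] = (),
    timbre: Optional[str] = None,
) -> str:
    """Compose a natural-language music description (hypothetical helper).

    Only the attributes that are provided end up in the text.
    """
    parts = [f"A {style} song" if style else "A song"]
    if mood:
        parts.append(f"with a {mood} mood")
    if instruments:
        parts.append("featuring " + _join(instruments))
    description = " ".join(parts) + "."
    if timbre:
        description += f" The vocals have a {timbre} timbre."
    return description


if __name__ == "__main__":
    print(build_description(
        style="pop",
        mood="melancholic",
        instruments=["piano", "acoustic guitar"],
        timbre="soft female",
    ))
```

The resulting string would be passed as the `text` argument in the inference examples, with the lyrics supplied separately.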

Usage Guide


Installation process

- Clone the project repository:

```shell
git clone https://github.com/LiuZH-19/SongGen.git
cd SongGen
```

- Create and activate a new Conda environment:

```shell
conda create -n songgen python=3.9.18
conda activate songgen
```

- Install PyTorch with CUDA 11.8 and related dependencies:

```shell
conda install pytorch torchvision torchaudio cudatoolkit=11.8 -c pytorch -c nvidia
```

- To use SongGen for inference only, install the package:

```shell
pip install .
```
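After installation, a quick check can confirm that the environment matches the steps above. This is a minimal sketch using only the standard library; the expected Python version comes from the `conda create` command, the package names are taken from the imports used later in this guide, and the helper `check_environment` is hypothetical.

```python
import importlib.util
import sys

EXPECTED_PYTHON = (3, 9)
REQUIRED_PACKAGES = ("torch", "torchaudio", "soundfile", "songgen")


def check_environment(packages=REQUIRED_PACKAGES):
    """Report the Python version and which packages cannot be found."""
    report = {
        "python_ok": sys.version_info[:2] == EXPECTED_PYTHON,
        "missing": [p for p in packages if importlib.util.find_spec(p) is None],
    }
    # Query CUDA only when torch was requested and is installed.
    if "torch" in packages and "torch" not in report["missing"]:
        import torch
        report["cuda_available"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    print(check_environment())
```

An empty `missing` list and `cuda_available` set to `True` suggest the GPU setup is ready; on a CPU-only machine the inference examples fall back to `"cpu"`.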

Download Checkpoints

Download the pre-trained model checkpoints for both xcodec and SongGen before running inference.
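Because the loading code later passes `ckpt_path` to `from_pretrained`, it can help to fail early when the path is wrong. The sketch below assumes a Hugging Face-style checkpoint directory containing a `config.json`; that file name is an assumption based on the `from_pretrained` convention, not something this guide states, and both `resolve_ckpt_path` and the `SONGGEN_CKPT` environment variable are hypothetical.

```python
import os
from pathlib import Path
from typing import Optional


def resolve_ckpt_path(ckpt_path: Optional[str] = None, env_var: str = "SONGGEN_CKPT") -> Path:
    """Return a validated checkpoint directory (hypothetical helper).

    Falls back to the hypothetical SONGGEN_CKPT environment variable when no
    path is given, and assumes a Hugging Face-style layout with config.json.
    """
    raw = ckpt_path or os.environ.get(env_var)
    if not raw:
        raise ValueError(f"No checkpoint path given and ${env_var} is not set")
    path = Path(raw).expanduser()
    if not path.is_dir():
        raise FileNotFoundError(f"Checkpoint directory not found: {path}")
    if not (path / "config.json").is_file():
        raise FileNotFoundError(f"No config.json in {path}; is this the right checkpoint?")
    return path
```

Calling `ckpt_path = str(resolve_ckpt_path("..."))` before `from_pretrained` would surface a mistyped path as a clear error instead of a failure deep inside model loading.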

Running Inference
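SongGen uses one model class per output mode: `SongGenMixedForConditionalGeneration` for mixed mode and `SongGenDualTrackForConditionalGeneration` for dual-track mode, and both are loaded the same way. The hypothetical helper `load_songgen` below wraps that choice as a sketch; it imports `songgen` lazily and relies only on the calls shown in this guide (`from_pretrained` with `attn_implementation='sdpa'` and `SongGenProcessor(ckpt_path, device)`).

```python
import importlib
from typing import Optional

# Map each output mode to the SongGen class used for it in this guide.
MODEL_CLASSES = {
    "mixed": "SongGenMixedForConditionalGeneration",
    "dual": "SongGenDualTrackForConditionalGeneration",
}


def model_class_name(mode: str) -> str:
    """Return the SongGen class name for an output mode ('mixed' or 'dual')."""
    try:
        return MODEL_CLASSES[mode]
    except KeyError:
        raise ValueError(f"Unknown mode {mode!r}; expected one of {sorted(MODEL_CLASSES)}") from None


def load_songgen(ckpt_path: str, mode: str = "mixed", device: Optional[str] = None):
    """Load a SongGen model and processor for the given mode (hypothetical helper)."""
    import torch  # imported lazily so the mapping above works without torch

    songgen = importlib.import_module("songgen")
    if device is None:
        device = "cuda:0" if torch.cuda.is_available() else "cpu"
    model_cls = getattr(songgen, model_class_name(mode))
    model = model_cls.from_pretrained(ckpt_path, attn_implementation="sdpa").to(device)
    processor = songgen.SongGenProcessor(ckpt_path, device)
    return model, processor
```

With this, `model, processor = load_songgen(ckpt_path, mode="dual")` would replace the separate loading steps; the generation and saving code still differs between the two modes.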

Mixed Mode

- Import the necessary libraries:

```python
import torch
import os
from songgen import (
    VoiceBpeTokenizer,
    SongGenMixedForConditionalGeneration,
    SongGenProcessor,
)
import soundfile as sf
```

- Load the pre-trained model:

```python
ckpt_path = "..."  # path to the pre-trained model checkpoint
device = "cuda:0" if torch.cuda.is_available() else "cpu"
model = SongGenMixedForConditionalGeneration.from_pretrained(
    ckpt_path,
    attn_implementation='sdpa').to(device)
processor = SongGenProcessor(ckpt_path, device)
```

- Define the input text and lyrics:

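```python
lyrics = "..."  # lyrics text
text = "..."  # text description of the music
ref_voice_path = 'path/to/your/reference_audio.wav'  # path to the reference audio (optional)
separate = True  # whether to separate the vocal track from the reference audio
```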

- Generate songs:

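```python
# Pass the `separate` flag defined above instead of hard-coding it
model_inputs = processor(text=text, lyrics=lyrics, ref_voice_path=ref_voice_path, separate=separate)
generation = model.generate(**model_inputs, do_sample=True)
# Move the generated waveform to the CPU as a 1-D NumPy array
audio_arr = generation.cpu().numpy().squeeze()
sf.write("songgen_out.wav", audio_arr, model.config.sampling_rate)
```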
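The reference clip is optional. A minimal sketch of how the processor arguments might be assembled so that a missing or mistyped reference path fails before generation starts; the helper name is hypothetical, and passing `ref_voice_path=None` when no reference is used is an assumption to verify against the SongGen repository.

```python
import os
from typing import Optional


def build_processor_kwargs(
    text: str,
    lyrics: str,
    ref_voice_path: Optional[str] = None,
    separate: bool = True,
) -> dict:
    """Collect keyword arguments for SongGenProcessor (hypothetical helper).

    Assumes the processor accepts ref_voice_path=None when no reference
    audio is used; `separate` presumably matters only with a reference.
    """
    if ref_voice_path is not None and not os.path.isfile(ref_voice_path):
        raise FileNotFoundError(f"Reference audio not found: {ref_voice_path}")
    return {
        "text": text,
        "lyrics": lyrics,
        "ref_voice_path": ref_voice_path,
        "separate": separate,
    }
```

Usage with the variables above: `model_inputs = processor(**build_processor_kwargs(text, lyrics, ref_voice_path, separate))`.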

Dual-Track Mode

- Import the necessary libraries:

```python
import torch
import os
from songgen import (
    VoiceBpeTokenizer,
    SongGenDualTrackForConditionalGeneration,
    SongGenProcessor,
)
import soundfile as sf
```

- Load the pre-trained model:

```python
ckpt_path = "..."  # path to the pre-trained model checkpoint
device = "cuda:0" if torch.cuda.is_available() else "cpu"
model = SongGenDualTrackForConditionalGeneration.from_pretrained(
    ckpt_path,
    attn_implementation='sdpa').to(device)
processor = SongGenProcessor(ckpt_path, device)
```

- Define the input text and lyrics:

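```python
lyrics = "..."  # lyrics text
text = "..."  # text description of the music
ref_voice_path = 'path/to/your/reference_audio.wav'  # path to the reference audio (optional)
separate = True  # whether to separate the vocal track from the reference audio
```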

- Generate songs:

```python
# Pass the `separate` flag defined above instead of hard-coding it
model_inputs = processor(text=text, lyrics=lyrics, ref_voice_path=ref_voice_path, separate=separate)
generation = model.generate(**model_inputs, do_sample=True)
# Take the first sample of each track, cut to its valid length
vocal_array = generation.vocal_sequences[0, :generation.vocal_audios_length[0]].cpu().numpy()
acc_array = generation.acc_sequences[0, :generation.acc_audios_length[0]].cpu().numpy()
# Trim both tracks to the same length, then sum them into one mix
min_len = min(vocal_array.shape[0], acc_array.shape[0])
vocal_array = vocal_array[:min_len]
acc_array = acc_array[:min_len]
audio_arr = vocal_array + acc_array
sf.write("songgen_out.wav", audio_arr, model.config.sampling_rate)
```
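Summing the two tracks directly can push samples outside the [-1, 1] range, which clips when written to a WAV file. One common remedy, sketched here with NumPy, is to apply optional per-track gains and scale the mix down only when its peak exceeds a limit; the helper `mix_tracks` and its defaults are illustrative assumptions, not part of SongGen.

```python
import numpy as np


def mix_tracks(vocal, acc, vocal_gain=1.0, acc_gain=1.0, peak_limit=0.99):
    """Mix vocal and accompaniment arrays while avoiding clipping (illustrative).

    Both inputs are 1-D float arrays at the same sampling rate. They are
    trimmed to the shorter length, weighted, summed, and rescaled only if
    the resulting peak exceeds peak_limit.
    """
    vocal = np.asarray(vocal, dtype=np.float32)
    acc = np.asarray(acc, dtype=np.float32)
    n = min(vocal.shape[0], acc.shape[0])
    mix = vocal_gain * vocal[:n] + acc_gain * acc[:n]
    peak = float(np.max(np.abs(mix))) if n else 0.0
    if peak > peak_limit:
        mix = mix * (peak_limit / peak)
    return mix
```

With the variables from the dual-track example, `audio_arr = mix_tracks(vocal_array, acc_array)` replaces the manual trimming and summing, and `sf.write` can still save `vocal_array` and `acc_array` as separate tracks for later editing.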

Feature Details

- Text-to-song generation: Provide lyrics and a text description of the music, and the model generates the corresponding song audio.

- Fine-grained control: Describing the desired instrumentation, style, mood, and other attributes in the text lets users control those properties of the generated song.

- Voice cloning: Given a three-second reference audio clip, the model can imitate that voice when generating the song.

- Output mode selection: Choose mixed mode for a single mixed track, or dual-track mode for separate vocal and accompaniment tracks, depending on the use case.

- Data preprocessing pipeline: Automated data preprocessing with quality control, intended to produce high-quality training data.
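Voice cloning uses a three-second reference clip. If only a longer recording is available, a clip can be cut with Python's standard `wave` module, as in this minimal sketch. It handles uncompressed PCM WAV only; the helper name `trim_reference` and its `start` parameter are hypothetical, and whether SongGen trims longer references on its own is not stated here.

```python
import wave


def trim_reference(src_path, dst_path, seconds=3.0, start=0.0):
    """Copy `seconds` of audio from a PCM WAV file, beginning at `start` seconds.

    Returns the number of frames written. Raises ValueError if the source
    is shorter than the requested segment.
    """
    with wave.open(src_path, "rb") as src:
        params = src.getparams()
        rate = src.getframerate()
        start_frame = int(start * rate)
        n_frames = int(seconds * rate)
        if start_frame + n_frames > src.getnframes():
            raise ValueError("source audio is shorter than the requested segment")
        src.setpos(start_frame)
        frames = src.readframes(n_frames)
    with wave.open(dst_path, "wb") as dst:
        dst.setparams(params)  # same channels, sample width and sample rate
        dst.writeframes(frames)  # frame count in the header is patched on close
    return n_frames
```

The trimmed file can then be passed as `ref_voice_path` in either inference mode.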

© Copyright notice

Article copyright AI Sharing Circle. All rights reserved; please do not reproduce without permission.
