SiteMCP: Crawling website content and turning it into MCP services

General Introduction

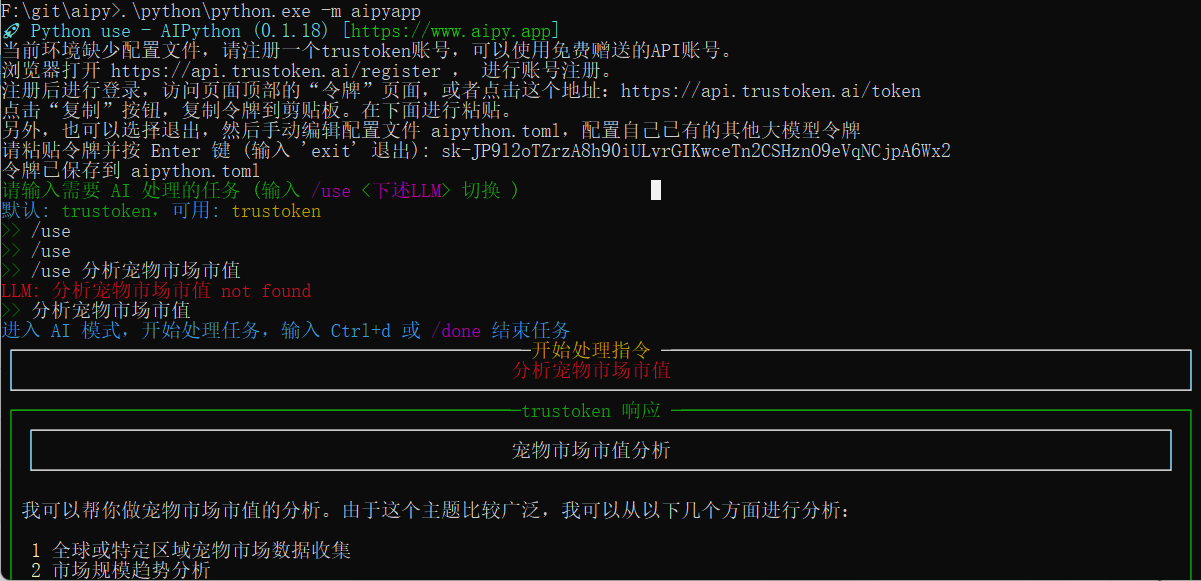

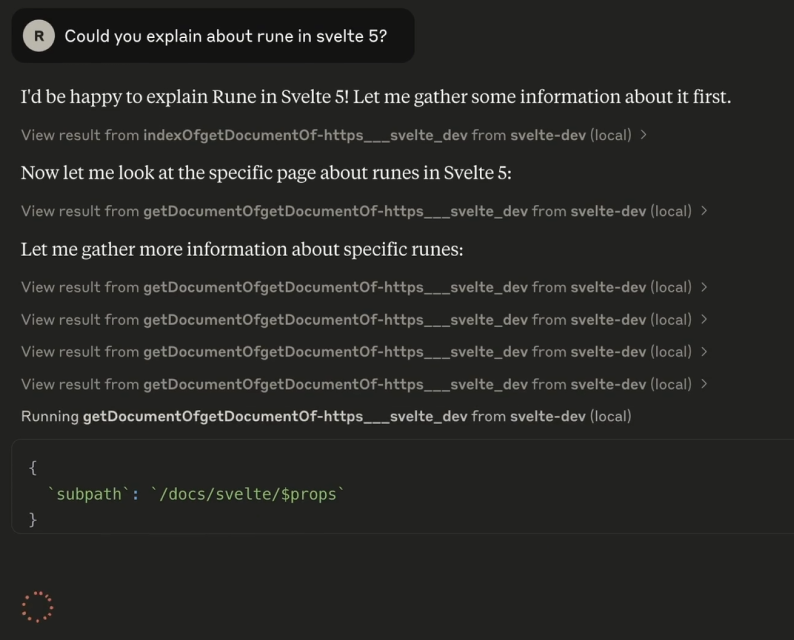

SiteMCP is an open-source tool whose core function is to crawl an entire website's content and turn it into an MCP (Model Context Protocol) server, allowing AI assistants (such as Claude Desktop) to access website data directly. It was developed by ryoppippi, is hosted on GitHub, and was inspired by another tool, sitefetch. The purpose of SiteMCP is to make it easier for AI to access external information. SiteMCP was released on npm on April 7, 2025, and lets users quickly cache a site's pages and launch a local server by simply typing in a website address. The whole process is simple and efficient, and suits developers, tech enthusiasts, and casual users.

Function List

- Crawls all pages or parts of a specified website and caches them locally.

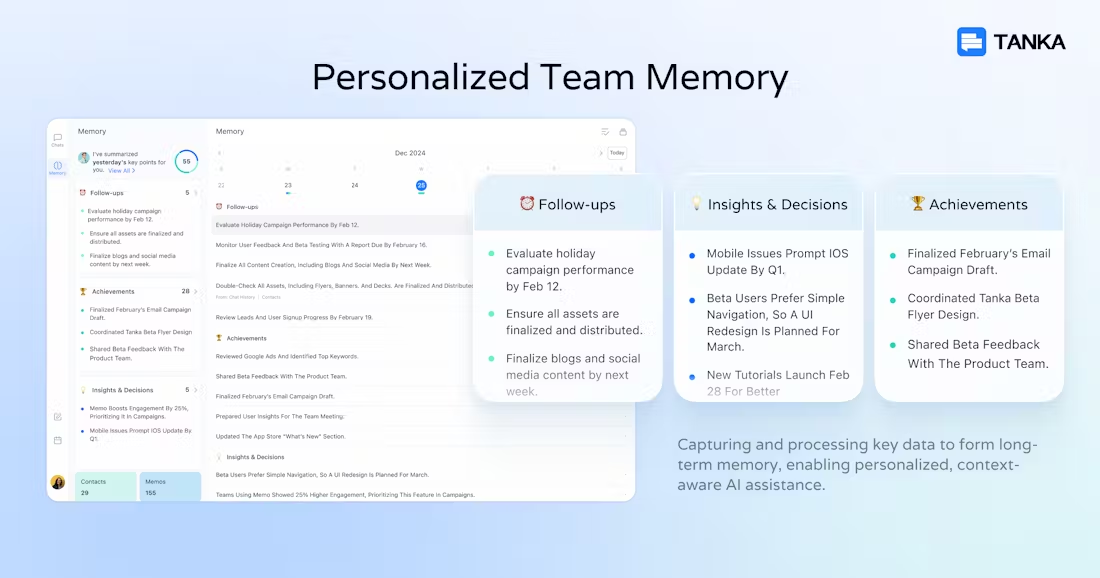

- Converts the crawled website data into an MCP server for AI access.

- Supports setting the crawl concurrency from the command line (e.g. --concurrency) to improve crawl speed.

- Provides the -m parameter, which matches specific page paths (e.g. /blog/**).

- Supports the --content-selector parameter to extract the content of a specified area of the web page.

- Caches pages to ~/.cache/sitemcp by default, and supports customizing the cache path or disabling the cache.

- Integrates seamlessly with clients that support the MCP protocol, such as Claude Desktop.

Usage Guide

SiteMCP is easy to install and use, so you can get started quickly. The following is a detailed description of how to install, operate, and use the features.

Installation process

SiteMCP runs on Node.js and can be used without manually downloading the source code. Here are the steps:

- Confirm the Node.js environment

Open a terminal and type node -v. If the command is not found, download and install Node.js from the Node.js website.

- Single use (no installation required)

Enter any of the following commands in the terminal, replacing https://example.com with the site you want to crawl:

npx sitemcp https://example.com

bunx sitemcp https://example.com

pnpx sitemcp https://example.com

These commands will automatically download SiteMCP and run it, starting the MCP server when the crawl is complete.

- Global installation (optional)

If you use it a lot, you can install it globally:

npm i -g sitemcp

bun i -g sitemcp

pnpm i -g sitemcp

After installation, run the sitemcp command directly, for example:

sitemcp https://example.com

Basic operation

After running the command, SiteMCP crawls the website content and caches it to the default path ~/.cache/sitemcp. The terminal will display output similar to:

Fetching https://example.com...

Server running at http://localhost:3000

At this point, the MCP server is up and the AI assistant can access the data via http://localhost:3000.

Featured Function Operation

SiteMCP provides several parameters that make crawling more flexible. Here is how to use them:

- Improve crawl speed

The default concurrency is limited. If the site has many pages, add the --concurrency parameter. Example:

npx sitemcp https://daisyui.com --concurrency 10

This will crawl 10 pages at the same time, which is much faster.
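To illustrate what a concurrency limit means in practice, here is a minimal Python sketch that caps the number of pages fetched at the same time with a semaphore. It is not SiteMCP's implementation: the crawl function, the fake_fetch stand-in (which only sleeps instead of making a network request), and the example URLs are all hypothetical.

```python
import asyncio

async def crawl(urls, concurrency, fetch):
    """Fetch every URL, with at most `concurrency` fetches in flight."""
    semaphore = asyncio.Semaphore(concurrency)
    results = {}

    async def worker(url):
        # Wait here while `concurrency` other workers are already fetching.
        async with semaphore:
            results[url] = await fetch(url)

    await asyncio.gather(*(worker(u) for u in urls))
    return results

# Hypothetical stand-in for a real HTTP request; it also records
# how many fetches were running at once.
active = 0
peak = 0

async def fake_fetch(url):
    global active, peak
    active += 1
    peak = max(peak, active)
    await asyncio.sleep(0.01)  # simulated network latency
    active -= 1
    return f"<html>{url}</html>"

urls = [f"https://example.com/page/{i}" for i in range(25)]
pages = asyncio.run(crawl(urls, concurrency=10, fetch=fake_fetch))
print(len(pages), "pages fetched, peak fetches in flight:", peak)
```

A higher limit finishes sooner on large sites but sends more simultaneous requests to the server, which is why lowering it can help when a site restricts crawling.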

- Match specific pages

Use the -m or --match parameter to specify the paths to crawl. It can be given multiple times. Example:

npx sitemcp https://vite.dev -m "/blog/**" -m "/guide/**"

This will only crawl the blog and guide pages of vite.dev. Path matching is based on micromatch and supports wildcards (e.g. ** matches all subpaths).
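The effect of patterns such as /blog/** can be sketched in a few lines of Python. This is a simplified approximation of glob matching, not micromatch itself: it only handles * (within one path segment) and ** (across segments), and micromatch's edge cases may differ. The helper names and the sample paths are made up for illustration.

```python
import re

def glob_to_regex(pattern):
    """Translate a simplified glob: ** spans slashes, * stays in one segment."""
    parts = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("**", i):
            parts.append(".*")
            i += 2
        elif pattern[i] == "*":
            parts.append("[^/]*")
            i += 1
        else:
            parts.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(parts))

def matches_any(path, patterns):
    """True if the path fully matches at least one pattern."""
    return any(glob_to_regex(p).fullmatch(path) is not None for p in patterns)

# Hypothetical page paths discovered during a crawl.
paths = ["/blog/announcing-vite", "/guide/features/css", "/team", "/blog/2024/recap"]
selected = [p for p in paths if matches_any(p, ["/blog/**", "/guide/**"])]
print(selected)
```

Passing two patterns mirrors using -m twice: a page is kept if it matches either one.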

- Extract specific content

Use the --content-selector parameter to specify a CSS selector. For example:

npx sitemcp https://vite.dev --content-selector ".content"

This extracts only the element with class="content" on each page, avoiding extraneous information. By default, SiteMCP uses mozilla/readability to extract readable content, but a selector can be more precise.
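As a rough illustration of what selecting by class achieves, the Python sketch below keeps only the text inside the first element whose class list contains a given name and ignores navigation and footer text. It is an assumption-laden toy, not how SiteMCP or a real CSS selector engine works: the ClassTextExtractor class is hypothetical, it supports a single class name only, and the sample HTML is invented.

```python
from html.parser import HTMLParser

# Tags that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"br", "img", "hr", "input", "meta", "link"}

class ClassTextExtractor(HTMLParser):
    """Collect text found inside an element carrying the given class name."""

    def __init__(self, class_name):
        super().__init__()
        self.class_name = class_name
        self.depth = 0      # greater than 0 while inside the selected element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.depth > 0:
            self.depth += 1          # nested element inside the selection
        elif self.class_name in classes:
            self.depth = 1           # entering the selected element

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.depth > 0:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth > 0 and data.strip():
            self.chunks.append(data.strip())

html = (
    "<html><body><nav>Home | Docs</nav>"
    '<div class="content"><h1>Guide</h1><p>Vite is fast.</p></div>'
    "<footer>Copyright 2025</footer></body></html>"
)
extractor = ClassTextExtractor("content")
extractor.feed(html)
print(extractor.chunks)
```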

- Customize the cache path or disable caching

Pages are cached to ~/.cache/sitemcp by default. Use --cache-dir to change the path:

npx sitemcp https://example.com --cache-dir ./my-cache

If you don't want to cache, add --no-cache:

npx sitemcp https://example.com --no-cache

- Integration with Claude Desktop

To configure the SiteMCP server in Claude Desktop, proceed as follows:

- Find the Claude Desktop configuration file (usually in JSON format) and add the following:

{
  "mcpServers": {
    "daisy-ui": {
      "command": "npx",
      "args": ["sitemcp", "https://daisyui.com", "-m", "/components/**"]
    }
  }
}

- Save and restart Claude Desktop. After that, Claude can access the component page data through "daisy-ui".

- If the site has many pages, it is recommended to run the command to cache the data first:

npx sitemcp https://daisyui.com -m "/components/**"
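If the configuration file already lists other servers, the new entry has to be merged in rather than pasted over the whole file. The Python sketch below shows one way to do that on an in-memory dictionary. The add_mcp_server helper and the pre-existing "other" server are hypothetical, and reading from or writing to the actual configuration file is left out because its location depends on your system.

```python
import json

def add_mcp_server(config, name, command, args):
    """Return a copy of config with one more entry under "mcpServers"."""
    updated = dict(config)
    servers = dict(updated.get("mcpServers", {}))
    servers[name] = {"command": command, "args": list(args)}
    updated["mcpServers"] = servers
    return updated

# Hypothetical existing configuration with one unrelated server.
existing = {"mcpServers": {"other": {"command": "node", "args": ["server.js"]}}}

merged = add_mcp_server(
    existing,
    "daisy-ui",
    "npx",
    ["sitemcp", "https://daisyui.com", "-m", "/components/**"],
)
print(json.dumps(merged, indent=2))
```

The printed JSON can be compared with the current file before saving, so existing entries are not lost.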

Caveats

- The first time you run npx, it downloads the dependencies, which may take a few seconds on a slow network.

- If the site has an anti-crawl mechanism, the crawl may fail. In that case, reduce the concurrency or contact the webmaster.

- The size of the cache depends on the size of the site. Clean ~/.cache/sitemcp regularly.
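Before cleaning the cache, it can help to see how much space it actually uses. The following Python sketch sums the file sizes under the default cache path mentioned above. The directory_size_bytes helper is hypothetical, and the layout of files inside the cache is not assumed, only that it is an ordinary directory tree.

```python
from pathlib import Path

def directory_size_bytes(root):
    """Total size of all regular files under root (0 if root is missing)."""
    if not root.exists():
        return 0
    return sum(p.stat().st_size for p in root.rglob("*") if p.is_file())

cache_dir = Path.home() / ".cache" / "sitemcp"
size_mib = directory_size_bytes(cache_dir) / (1024 * 1024)
print(f"{cache_dir}: {size_mib:.1f} MiB")
```

If you use --cache-dir, point cache_dir at that path instead.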

With these operations, SiteMCP can turn a website into an AI-ready data source, which is especially useful for users who need quick access to documentation or content.

Application scenarios

- Developer debugging code

Developers crawl technical documentation sites (e.g., Vite's guide pages) and let AI answer usage questions.

For example, after running npx sitemcp https://vite.dev -m "/guide/**", the AI can access the content of the guide directly.

- Blog content organizer

Bloggers crawl their own sites (e.g. https://myblog.com) and let AI analyze articles or generate summaries.

Running npx sitemcp https://myblog.com -m "/posts/**" is all it takes.

- Learning a new framework

Students crawl the official website of a framework (e.g., DaisyUI's component pages) and use AI to explain the functionality.

Run npx sitemcp https://daisyui.com -m "/components/**" to make learning more efficient.

QA

- Which clients does SiteMCP support?

Any client that supports the MCP protocol will work, such as Claude Desktop. Other tools need to be checked for compatibility.

- What if the crawl fails?

Check the network, or use -m to narrow the crawl scope. If the site restricts crawling, lower the --concurrency value.

- Does the cache take up much space?

Small sites take a few megabytes; large sites can take hundreds of megabytes. Use --cache-dir to customize the path, and clean it up regularly.

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
