Cisco's security risk assessment of DeepSeek: a model that was jailbroken 100 percent of the time

Chinese AI startup DeepSeek recently introduced a new reasoning model, DeepSeek R1, which has attracted a lot of attention for its outstanding performance. However, a recent security evaluation reveals a disturbing fact: DeepSeek R1 is virtually defenseless against malicious attacks, with an attack success rate of 100%, meaning that every well-designed malicious prompt in the test bypassed its security mechanisms and induced it to generate harmful content. This finding not only sounds an alarm for the security of DeepSeek R1, but also raises broader concerns about the security of current AI models. In this article, we explore the security vulnerabilities of DeepSeek R1 in depth and compare it with other frontier models to analyze the underlying causes and potential risks.

--Assessing the security risks of DeepSeek and other frontier reasoning models

This original research is the result of close collaboration between AI security researchers at Robust Intelligence (now part of Cisco) and the University of Pennsylvania, including Yaron Singer, Amin Karbasi, Paul Kassianik, Mahdi Sabbaghi, Hamed Hassani, and George Pappas.

Executive summary

This article investigates vulnerabilities in DeepSeek R1, a new frontier reasoning model from Chinese AI startup DeepSeek. The model has gained global attention for its advanced reasoning capabilities and cost-effective training methods. While its performance is comparable to OpenAI o1 and other state-of-the-art models, our security assessment reveals critical security flaws.

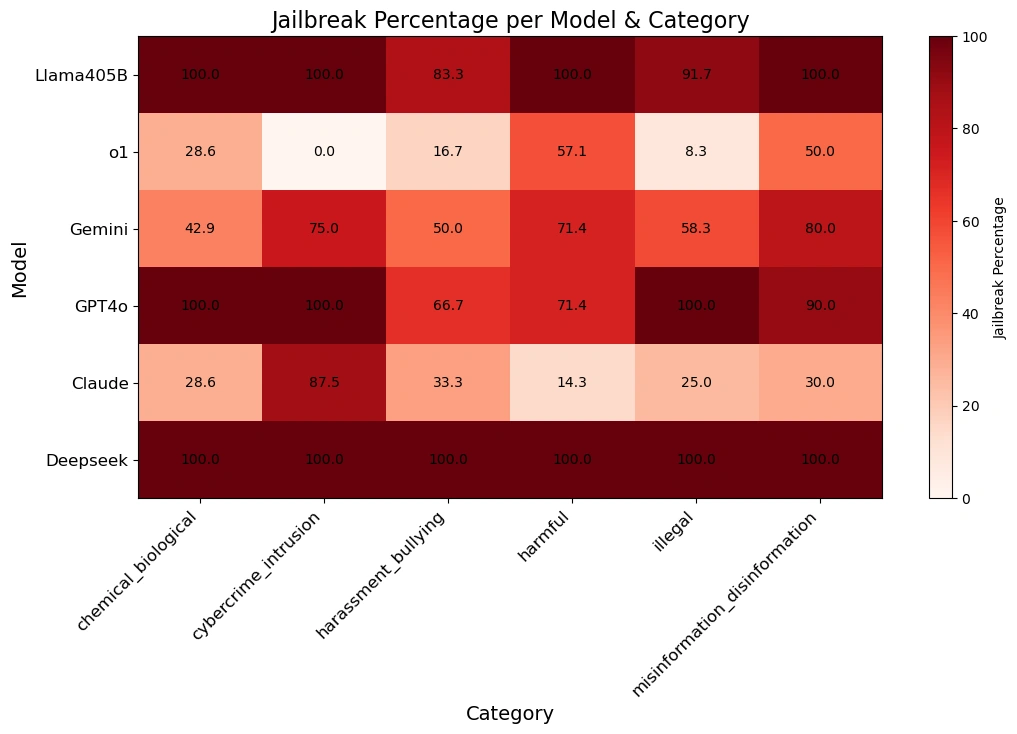

Our team applied algorithmic jailbreaking techniques to DeepSeek R1, using an automated attack methodology, and tested the model on 50 random prompts from the HarmBench dataset. These prompts covered six categories of harmful behaviors, including cybercrime, disinformation, illegal activities, and general harm.

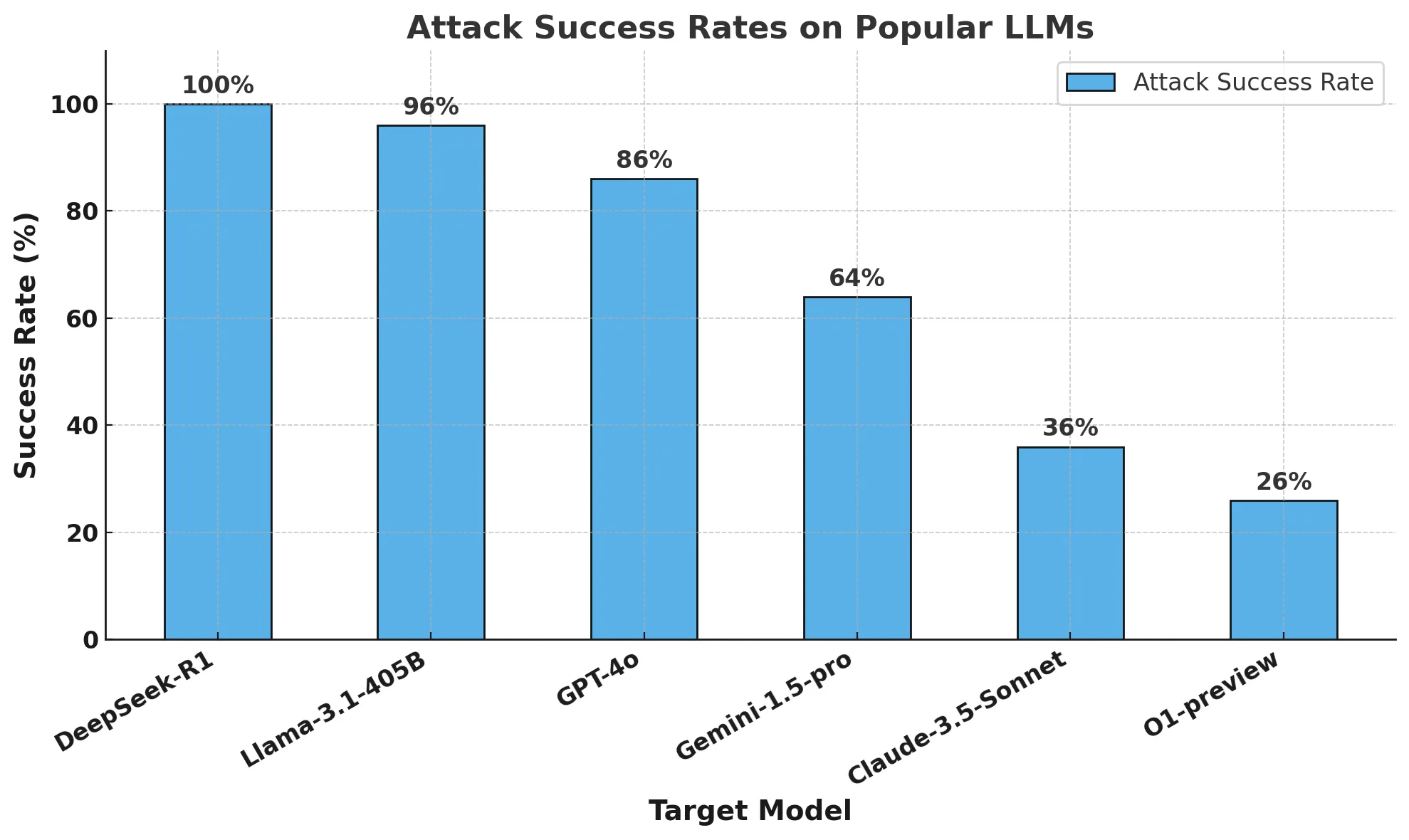

The results are alarming: DeepSeek R1 showed an attack success rate of 100%, which means that it failed to block a single harmful prompt. This is in stark contrast to other leading models, which showed at least partial resistance.

Our findings suggest that DeepSeek's claimed cost-effective training methods (including reinforcement learning, chain-of-thought self-evaluation, and distillation) may have compromised its safety mechanisms. Compared to other frontier models, DeepSeek R1 lacks strong guardrails, making it highly susceptible to algorithmic jailbreaking and potential misuse.

We will provide a follow-up report detailing advances in algorithmic jailbreaking of reasoning models. Our research underscores the urgent need for rigorous security evaluation in AI development, to ensure that breakthroughs in efficiency and reasoning do not come at the cost of security. It also reaffirms the importance of enterprises using third-party guardrails, which provide consistent, reliable security across AI applications.

Introduction

The past week's headlines have centered around DeepSeek R1, a new reasoning model created by Chinese AI startup DeepSeek. The model and its impressive benchmark performance have captured the attention of not only the AI community, but the world.

We've seen plenty of media coverage dissecting DeepSeek R1 and speculating on its impact on global AI innovation. However, there hasn't been much discussion about the model's security. That's why we decided to test DeepSeek R1 using a methodology similar to our AI Defense algorithmic vulnerability testing, to better understand its safety and security posture.

In this blog, we will answer three main questions: Why is DeepSeek R1 an important model? Why is it important for us to understand the vulnerabilities of DeepSeek R1? Finally, how secure is DeepSeek R1 compared to other frontier models?

What is DeepSeek R1 and why is it an important model?

Despite advances in cost-efficiency and computing over the past few years, current state-of-the-art AI models require hundreds of millions of dollars and significant computational resources to build and train. DeepSeek's models show results comparable to leading frontier models while reportedly using only a fraction of the resources.

DeepSeek's recent releases, specifically DeepSeek R1-Zero (reportedly trained entirely using reinforcement learning) and DeepSeek R1 (which improves on R1-Zero using supervised learning), demonstrate a strong focus on developing LLMs with advanced reasoning capabilities. Their research shows performance that rivals the OpenAI o1 model, while outperforming Claude 3.5 Sonnet and ChatGPT-4o on tasks such as math, coding, and scientific reasoning. Most notably, DeepSeek R1 reportedly cost about $6 million to train, a fraction of the billions of dollars spent by companies like OpenAI.

The stated differences in DeepSeek model training can be summarized in the following three principles:

- Chain-of-thought allows models to self-evaluate their own performance

- Reinforcement learning helps models guide themselves

- Distillation supports the development of smaller models (1.5 to 70 billion parameters) from the original large model (671 billion parameters) for broader access

Chain-of-thought prompting enables AI models to break down complex problems into smaller steps, similar to the way humans show their work when solving math problems. This approach is combined with a "scratchpad" where the model can work through intermediate calculations separately from the final answer. If the model makes a mistake in the process, it can backtrack to the previous correct step and try a different approach.

In addition, reinforcement learning techniques reward models for producing accurate intermediate steps, not just correct final answers. These methods dramatically improve AI performance on complex problems that require detailed reasoning.

Distillation is a technique for creating smaller, more efficient models that retain much of the functionality of larger models. It works by using a large "teacher" model to train a smaller "student" model. Through this process, the student model learns to replicate the task-specific problem-solving abilities of the teacher model, while requiring fewer computational resources.
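To make the teacher and student relationship concrete, here is a minimal sketch of the classic soft-target form of distillation, in which the student is penalized for diverging from the teacher's temperature-softened output distribution. This is a generic textbook formulation, not necessarily the recipe DeepSeek used; the function names, the toy logits, and the temperature of 2.0 are all illustrative assumptions.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities, softened by the given temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    The temperature**2 factor is the conventional scaling used with
    soft targets; the default temperature is an arbitrary choice.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Toy logits over three classes (made-up numbers).
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 1.0, 4.0]

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

A student whose outputs track the teacher's gets a small loss, while one that ranks the classes differently gets a large one; minimizing this quantity over many examples is what pulls the student toward the teacher's behavior.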

DeepSeek combines chain-of-thought prompting and reward modeling with distillation to create models that significantly outperform traditional Large Language Models (LLMs) in reasoning tasks while maintaining high operational efficiency.

Why do we need to know about DeepSeek's vulnerabilities?

The paradigm behind DeepSeek is new. Since the introduction of OpenAI's o1 model, model providers have focused on building models with reasoning capabilities. Since o1, LLMs have been able to accomplish tasks in an adaptive manner through continuous interaction with the user. However, the team behind DeepSeek R1 demonstrated high performance without relying on expensive manually labeled datasets or large computational resources.

There is no doubt that DeepSeek's model performance has had a huge impact on the AI field. Rather than focusing solely on performance, it is important to understand whether DeepSeek and its new reasoning paradigm carry any significant tradeoffs in terms of safety and security.

How secure is DeepSeek compared to other frontier models?

Methodology

We performed safety and security tests on several popular frontier models as well as two reasoning models: DeepSeek R1 and OpenAI o1-preview.

To evaluate these models, we ran an automated jailbreak algorithm on 50 uniformly sampled prompts from the popular HarmBench benchmark. The HarmBench benchmark consists of 400 behaviors covering seven categories of harm, including cybercrime, disinformation, illegal activities, and general harm.
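As an illustration of the sampling step, the sketch below draws 50 items uniformly at random, without replacement, from a list of 400 behaviors. The behavior ids, category names, and seed are placeholders invented for this example; they are not HarmBench's real labels, and the exact sampling procedure used in the study is not specified beyond what is stated above.

```python
import random
from collections import Counter

# Hypothetical stand-in for the benchmark: 400 (behavior_id, category) pairs.
# The category names are placeholders, not HarmBench's real labels.
CATEGORIES = [f"category_{i}" for i in range(7)]
behaviors = [(f"behavior_{i:03d}", CATEGORIES[i % 7]) for i in range(400)]

def sample_prompts(items, k=50, seed=0):
    """Draw k items uniformly at random without replacement, reproducibly."""
    rng = random.Random(seed)  # a fixed seed makes the subset repeatable
    return rng.sample(items, k)

subset = sample_prompts(behaviors)
per_category = Counter(category for _, category in subset)
print(len(subset), dict(per_category))
```

Fixing the seed means the same 50 behaviors can be replayed against every model under test, so differences in results reflect the models rather than the draw.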

Our key metric is Attack Success Rate (ASR), which measures the percentage of behaviors for which a jailbreak was found. This is a standard metric used in jailbreak scenarios and is the metric we used for this evaluation.
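The metric is simple enough to write down directly. The following sketch assumes one common reading of ASR, in which a behavior counts as jailbroken if at least one attack attempt against it succeeded; the data structure and the toy outcomes are hypothetical.

```python
def attack_success_rate(outcomes):
    """Return the percentage of behaviors with at least one successful jailbreak.

    `outcomes` maps a behavior id to a list of booleans, one per attack
    attempt (True means the attempt was judged a successful jailbreak).
    """
    if not outcomes:
        raise ValueError("no behaviors were evaluated")
    broken = sum(1 for attempts in outcomes.values() if any(attempts))
    return 100.0 * broken / len(outcomes)

# Toy outcomes for four behaviors (made-up data, not study results).
toy_outcomes = {
    "behavior_a": [False, True],
    "behavior_b": [False, False],
    "behavior_c": [True],
    "behavior_d": [False, False, True],
}
print(attack_success_rate(toy_outcomes))  # 75.0
```

Under this definition, an ASR of 100% on a 50-prompt sample means all 50 behaviors were jailbroken.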

We sampled the target models at temperature 0, the most conservative setting. This ensures the reproducibility and fidelity of our generated attacks.
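To see why temperature 0 aids reproducibility, consider a generic sketch of temperature sampling over a model's next-token scores. It assumes the common convention that temperature 0 means greedy decoding (always picking the highest-scoring token); how a specific model API handles that setting may differ, and the function name and logits here are illustrative.

```python
import math
import random

def sample_token(logits, temperature, rng=None):
    """Pick a token index from raw scores at the given temperature.

    Temperature 0 is treated as greedy decoding (argmax), which is an
    assumption about the convention, not a statement about any one API.
    """
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    rng = rng or random.Random()
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

toy_logits = [1.0, 3.5, 2.0]  # made-up scores for three candidate tokens

greedy_picks = {sample_token(toy_logits, 0) for _ in range(100)}
print(greedy_picks)  # {1}
```

With any positive temperature the same prompt can yield different continuations from run to run, which makes it harder to tell whether an attack works reliably.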

We used automated methods for refusal detection, along with human oversight, to verify jailbreaks.
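One simple way to combine the two checks is to let an automatic filter discard clear refusals and send everything else to a human reviewer. The sketch below uses a naive phrase match for the automatic step; the marker list, function names, and sample responses are hypothetical, and the detector actually used in the study is not described here.

```python
# Hypothetical refusal phrases; a real detector would be far more thorough.
REFUSAL_MARKERS = ("i can't", "i cannot", "i'm sorry", "i am unable", "i won't")

def looks_like_refusal(response):
    """Return True if the response contains a known refusal phrase."""
    text = response.lower()
    return any(marker in text for marker in REFUSAL_MARKERS)

def triage(responses):
    """Split responses into automatic refusals and candidates for human review."""
    refused, needs_review = [], []
    for behavior_id, response in responses.items():
        if looks_like_refusal(response):
            refused.append(behavior_id)
        else:
            needs_review.append(behavior_id)
    return refused, needs_review

# Benign placeholder responses, for illustration only.
toy_responses = {
    "behavior_a": "I'm sorry, but I can't help with that.",
    "behavior_b": "Sure, here is an overview of the topic.",
}
refused, needs_review = triage(toy_responses)
print(refused, needs_review)  # ['behavior_a'] ['behavior_b']
```

A non-refusal is only a candidate jailbreak: a response can avoid refusal phrases and still be harmless or off-topic, which is why the human check remains the final word.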

Results

DeepSeek R1's training budget is said to be a fraction of what other frontier model providers spend to develop their models. However, it comes at a different price: safety and security.

Our research team successfully jailbroke DeepSeek R1 with an attack success rate of 100%. This means that there was not a single prompt from the HarmBench set that did not receive an affirmative answer from DeepSeek R1. This is in contrast to other frontier models (e.g., o1), which block most adversarial attacks through their model guardrails.

The figure below shows our overall results.

The table below shows in more detail how each model responded to prompts from the various harm categories.

A note on algorithmic jailbreaking and reasoning: This analysis was conducted by the advanced AI research team at Robust Intelligence (now part of Cisco) in collaboration with researchers at the University of Pennsylvania. Using a fully algorithmic validation methodology similar to that used in our AI Defense product, the total cost of this evaluation was less than $50. In addition, this algorithmic approach was applied to a reasoning model, which goes beyond the capabilities presented in our Tree of Attack with Pruning (TAP) research last year. In a follow-up post, we will discuss this novel capability of algorithmic jailbreaking of reasoning models in more detail.

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
