Deploying the Open-Source DeepSeek-R1 Model Online with Free GPU Computing Power

Thanks to Tencent Cloud's Cloud Studio, and to DeepSeek for DeepSeek-R1.

With the rapid development of AI and large-model technology, more and more developers and researchers want hands-on experience running and fine-tuning large models so they can better understand and apply them. However, the high cost of GPU computing power is often the bottleneck that stops people from exploring. Fortunately, Tencent Cloud's Cloud Studio offers free GPU resources, and combined with DeepSeek's DeepSeek-R1 models, we can deploy and run these powerful models in the cloud without spending a dime.

This article explains how to use the free GPU resources of Tencent Cloud's Cloud Studio to deploy and interact with the DeepSeek-R1 large model. Starting with Cloud Studio, we walk step by step through installing and configuring Ollama, deploying DeepSeek-R1 and chatting with it, and you will end up with a complete, free AI programming setup built on DeepSeek-R1 and Roo Code.

1. Cloud Studio

1.1 Introduction to Cloud Studio

Tencent Cloud's Cloud Studio is a cloud-based integrated development environment (IDE) that provides a wealth of development tools and resources to help developers code, debug, and deploy more efficiently. Cloud Studio recently began offering free GPU computing resources: each user gets 10,000 minutes per month on a GPU server with 16 GB of video memory, 32 GB of RAM, and an 8-core CPU. This is a real boon for developers who need high-performance computing. (Remember to shut the machine down after use and start it again the next time you need it; the environment is saved automatically, and 10,000 minutes a month goes a long way as long as the machine is not left running.)
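As a rough check on the quota, assuming a 30-day month, 10,000 minutes works out to:

$$
\frac{10\,000\ \text{min}}{60\ \text{min/h}} \approx 166.7\ \text{h} \approx 6.9\ \text{days of continuous running},
\qquad
\frac{166.7\ \text{h}}{30\ \text{days}} \approx 5.6\ \text{h per day}
$$

So the quota comfortably covers a few hours of use per day, but a machine left running around the clock would exhaust it in about a week, which is why shutting it down after each session matters.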

1.2 Registration and Login

To use Cloud Studio, first register a Tencent Cloud account. After registering, log in to Cloud Studio and you will see a clean interface offering a variety of development templates, covering scenarios from basic Python development to complex large-model deployment. Remember to switch to the Pro version.

1.3 Selecting the Ollama template

Since our goal is to deploy the DeepSeek-R1 large model, we can simply choose the Ollama template. Ollama is a tool for managing and running large models that simplifies downloading, installing, and running them. When you select the Ollama template, Cloud Studio configures the Ollama environment automatically, so no manual installation is needed.

Wait for the instance to start, then click to enter it. Ollama is already deployed in the environment, so you can go straight to running the command that installs the model.

2. Ollama

2.1 Introduction to Ollama

Ollama is an open-source tool for managing and running large models. It supports a variety of model formats and handles model dependencies automatically, which makes deploying and running models very simple. The Ollama website offers a rich library of models, so users can choose, download, and run whichever suits their needs.

2.2 Model parameters and selection

On the Ollama website, each model lists its available parameter counts, such as 7B, 13B, or 70B. The "B" stands for billion and indicates the number of parameters in the model. The more parameters a model has, the more complex and capable it is, but the more computing resources it consumes.

With the free GPU resources provided by Cloud Studio (16 GB of video memory, 32 GB of RAM, 8-core CPU), we can deploy models of roughly 8B to 14B parameters. If you have stronger hardware, you can try models with more parameters for better results.
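The parameter count translates fairly directly into memory: each weight occupies bits/8 bytes. The sketch below estimates which DeepSeek-R1 tag fits a given amount of video memory. The tag list comes from the Ollama library page for deepseek-r1; the ~4.5 bits per weight (roughly what 4-bit quantized builds average) and the 20% overhead allowance are assumptions for illustration, not figures published by Ollama.

```python
from typing import Optional

# Parameter counts (in billions) for DeepSeek-R1 tags listed in the Ollama library.
DEEPSEEK_R1_TAGS = {
    "1.5b": 1.5, "7b": 7, "8b": 8, "14b": 14, "32b": 32, "70b": 70, "671b": 671,
}

# Assumptions for illustration only, not published Ollama figures:
BITS_PER_WEIGHT = 4.5  # roughly what 4-bit quantized weights average out to
OVERHEAD = 1.2         # extra 20% for KV cache, activations and runtime buffers


def estimate_weight_gb(params_billion: float, bits_per_weight: float) -> float:
    """Memory for the weights alone: parameters x bits per weight / 8, in GB (10^9 bytes)."""
    return params_billion * bits_per_weight / 8


def largest_fitting_tag(vram_gb: float, bits_per_weight: float = BITS_PER_WEIGHT,
                        overhead: float = OVERHEAD) -> Optional[str]:
    """Return the biggest tag whose estimated footprint fits in vram_gb, or None."""
    fitting = [
        (params, tag)
        for tag, params in DEEPSEEK_R1_TAGS.items()
        if estimate_weight_gb(params, bits_per_weight) * overhead <= vram_gb
    ]
    return max(fitting)[1] if fitting else None


if __name__ == "__main__":
    for tag, params in DEEPSEEK_R1_TAGS.items():
        need = estimate_weight_gb(params, BITS_PER_WEIGHT) * OVERHEAD
        print(f"deepseek-r1:{tag:<5} ~{need:6.1f} GB")
    print("Largest tag for a 16 GB GPU:", largest_fitting_tag(16))
```

Under these assumptions a 16 GB card tops out at deepseek-r1:14b (about 9.5 GB), while 32b would need about 21.6 GB. Treat the numbers as a rough guide; actual usage also depends on the quantization and the context length.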

2.3 Installing Ollama

After you select the Ollama template, Cloud Studio installs Ollama automatically. If you are using Ollama in another environment, such as your own Linux machine, you can install it with the following command:

curl -fsSL https://ollama.com/install.sh | sh

Once the installation is complete, you can verify that Ollama was installed successfully with the following command:

ollama --version

The next step is to deploy DeepSeek-R1 with Ollama.

3. Deploying DeepSeek-R1 for Free

3.1 Introduction to DeepSeek-R1

DeepSeek-R1 is a high-performance large model from DeepSeek that excels at many natural language processing tasks, especially text generation, dialogue systems, and question answering. On Ollama it is available in distilled versions from 1.5B to 70B parameters, plus the full 671B model, so users can pick the size that matches their hardware.

3.2 Download and Deployment

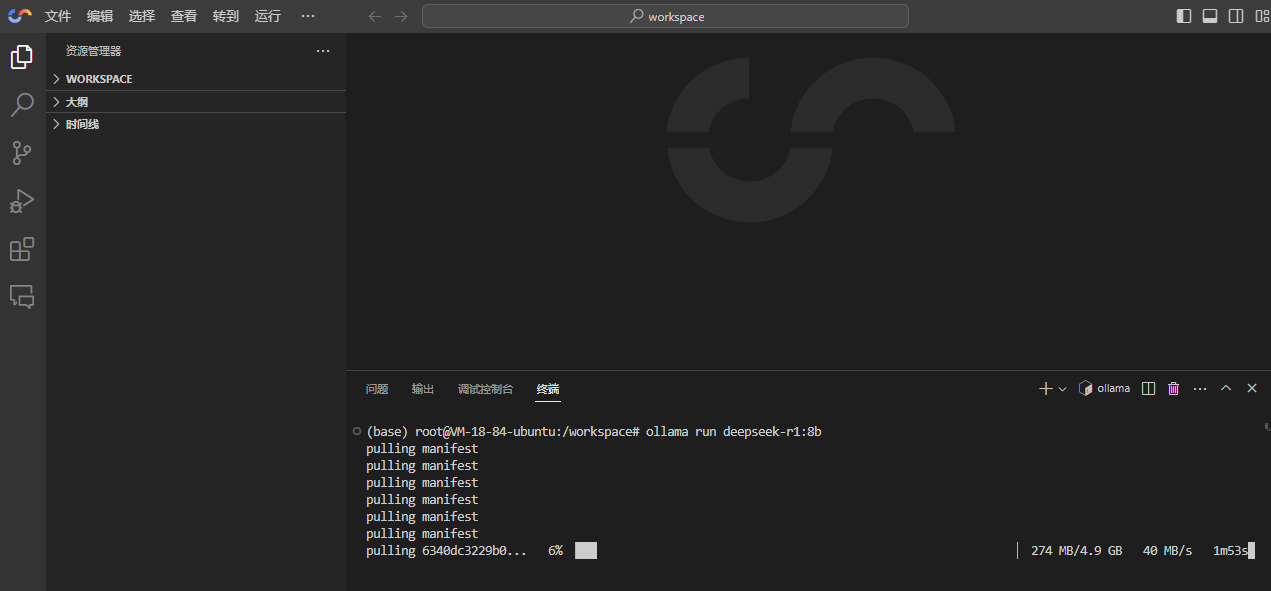

Since Cloud Studio has already installed Ollama, deploying DeepSeek-R1 is very simple. We just need to run the following command:

ollama run deepseek-r1:8b

All available DeepSeek-R1 models are listed at https://ollama.com/library/deepseek-r1. Recommended: ollama run deepseek-r1:14b (this one is distilled from a Qwen model).

3.3 Model runs

After waiting for the model to finish downloading, Ollama will automatically launch DeepSeek-R1 and enter interactive mode. At this point, you can enter questions or commands directly into the terminal to talk to DeepSeek-R1.

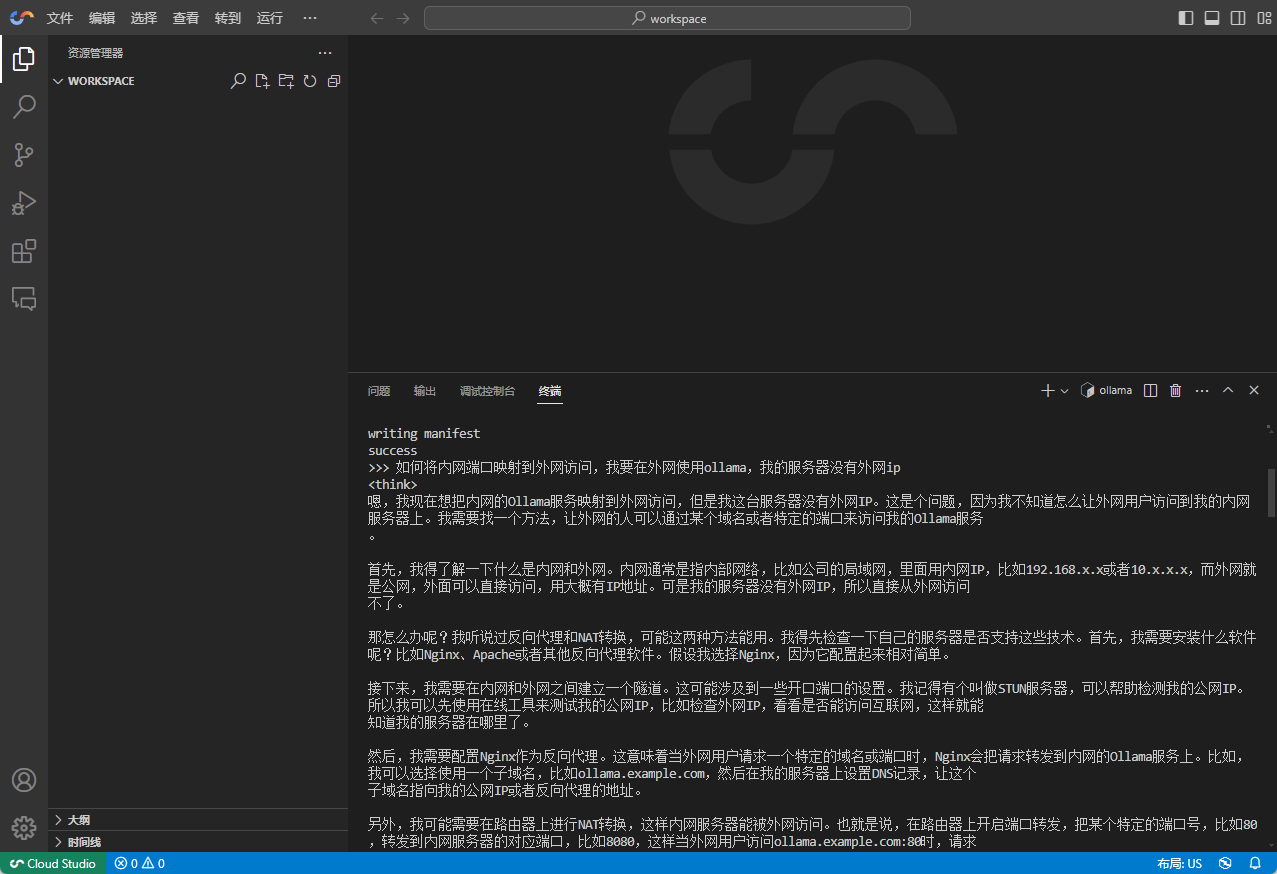
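Besides watching the terminal, you can confirm the download finished by asking Ollama for its local model list: ollama list does this on the command line, and the minimal sketch below does the same over Ollama's HTTP API (the /api/tags endpoint). It assumes Ollama's default address, 127.0.0.1:11434; the Cloud Studio template may listen on a different port (this article later assumes 6399), so adjust OLLAMA_URL if needed.

```python
import json
import urllib.request

# Assumption: Ollama's default address. The Cloud Studio template may listen elsewhere
# (this article later assumes port 6399), so adjust as needed.
OLLAMA_URL = "http://127.0.0.1:11434"


def model_names(tags_response: dict) -> list[str]:
    """Pull the model names out of a parsed /api/tags response."""
    return [m.get("name", "") for m in tags_response.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask the local Ollama server which models have been downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))
```

Once the download has finished, "deepseek-r1:8b" in list_local_models() should evaluate to True.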

4. Start chatting with DeepSeek-R1

4.1 Basic Dialogue

Talking to DeepSeek-R1 is easy: type your question or instruction in the terminal and the model generates a reply.

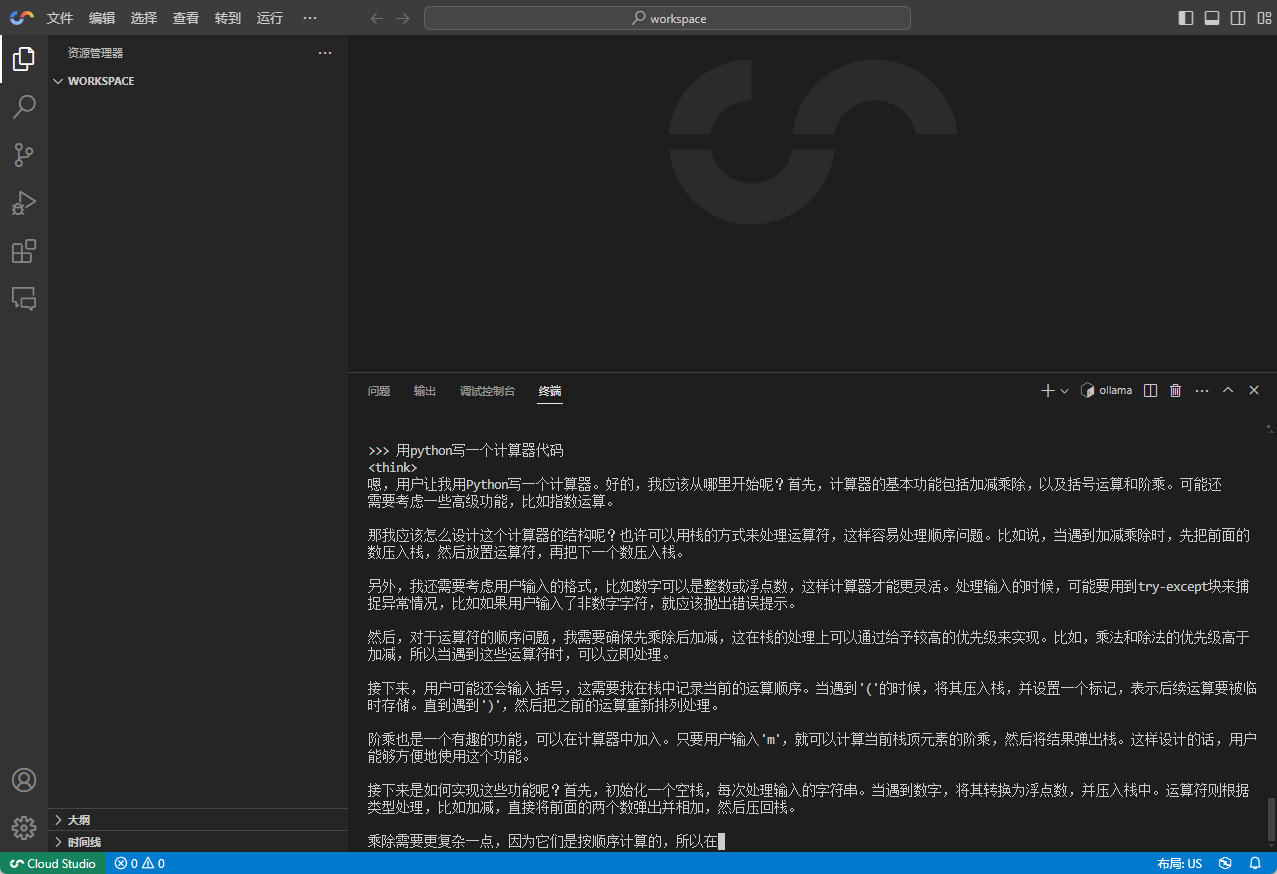
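The interactive terminal is backed by Ollama's HTTP API, so the same conversation can also be scripted. Below is a minimal sketch using only the Python standard library and Ollama's /api/chat endpoint with streaming turned off, so the whole reply arrives as one JSON object. It assumes the server is at the default 127.0.0.1:11434 and that deepseek-r1:8b has been pulled; the generous timeout is an arbitrary choice, since the model can spend a while reasoning before it answers.

```python
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # assumption: Ollama's default address


def build_chat_request(model: str, prompt: str) -> dict:
    """Request body for Ollama's /api/chat endpoint, asking for a single non-streamed reply."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }


def chat(prompt: str, model: str = "deepseek-r1:8b",
         base_url: str = OLLAMA_URL, timeout: float = 300.0) -> str:
    """Send one user message and return the assistant's reply text."""
    body = json.dumps(build_chat_request(model, prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["message"]["content"]
```

For example, print(chat("Explain in one sentence what a GPU is.")) prints the model's answer. To keep a multi-turn conversation, append each reply to the messages list and send the whole history again.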

4.2 Complex tasks

- For example, you can ask DeepSeek-R1 to generate code.
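When DeepSeek-R1 is used for code generation, the useful part of a reply is usually the code itself. The sketch below pulls fenced code blocks out of a reply, assuming the model formats code as Markdown fences and, as DeepSeek-R1 typically does, places its reasoning inside <think>...</think> tags before the answer; the reasoning is dropped first so that code sketched while "thinking" is not picked up. The function names are hypothetical helpers, not part of Ollama.

```python
import re

FENCE = "`" * 3  # a Markdown code fence, built this way to keep this listing readable

# A fenced block: the optional language tag, the rest of the opening line, then the body.
CODE_BLOCK = re.compile(FENCE + r"([\w+-]*)[^\n]*\n(.*?)" + FENCE, re.DOTALL)


def strip_think(reply: str) -> str:
    """Drop any <think>...</think> reasoning section and surrounding whitespace."""
    return re.sub(r"<think>.*?</think>", "", reply, flags=re.DOTALL).strip()


def extract_code_blocks(reply: str) -> list[tuple[str, str]]:
    """Return (language, code) pairs for every fenced block in the final answer."""
    return [
        (lang, body.rstrip("\n"))
        for lang, body in CODE_BLOCK.findall(strip_think(reply))
    ]
```

Pass it any reply text, for example one copied from the terminal, and save the returned code to a file or feed it to a test.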

4.3 Model fine-tuning

If you are not satisfied with DeepSeek-R1's performance, or want the model to do better on specific tasks, you can try fine-tuning it. Fine-tuning usually means preparing a domain-specific dataset and further training the model on it. Ollama itself does not train models, but it can import a fine-tuned model or LoRA adapter through a Modelfile (the FROM and ADAPTER instructions) and build a customized model from it with ollama create; it also lets you adjust behavior without any retraining through SYSTEM prompts and PARAMETER settings.

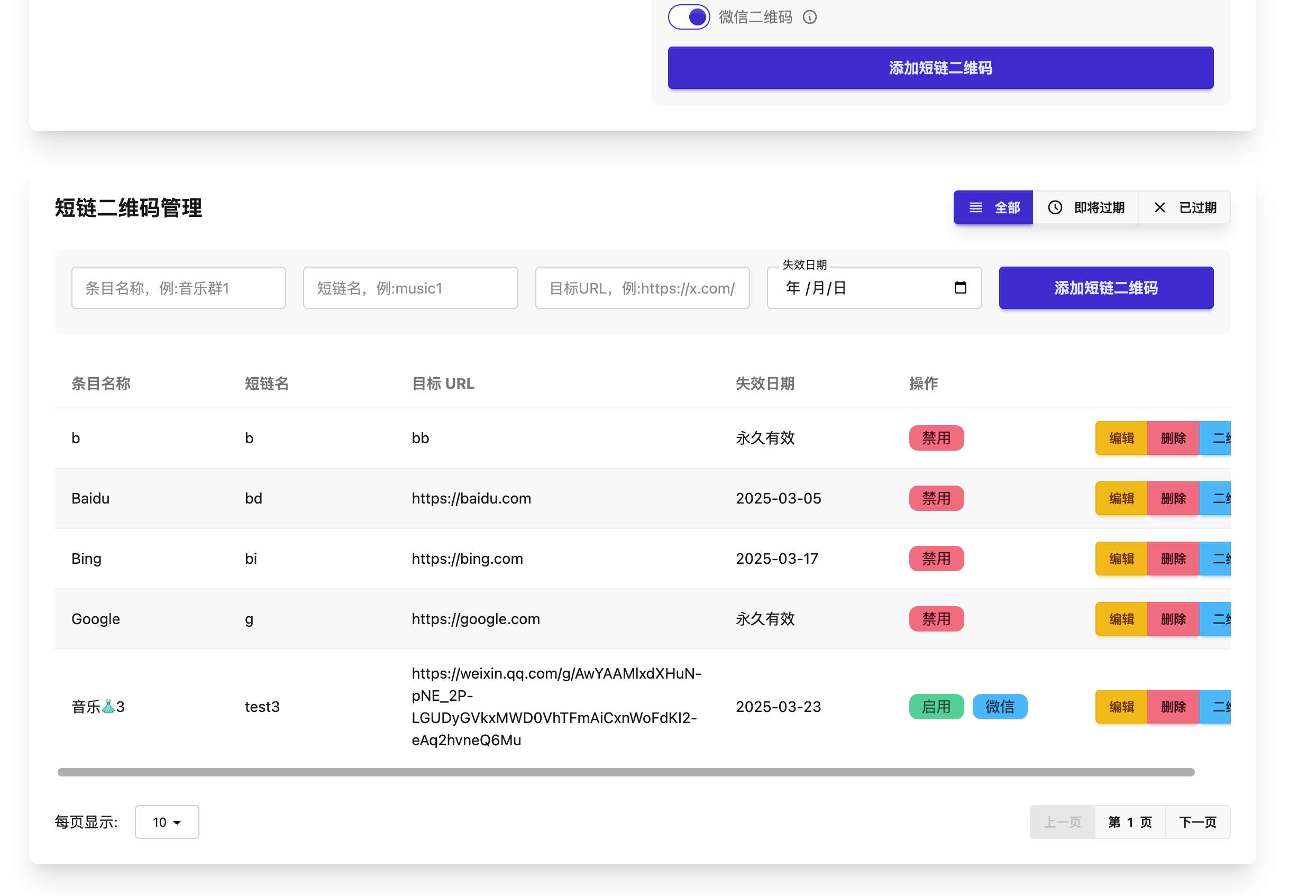
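A Modelfile is a short text file of instructions. The sketch below assembles one from Python so the pieces are easy to see: FROM names the base model, ADAPTER points at a LoRA adapter trained elsewhere, PARAMETER sets runtime options, and SYSTEM sets a system prompt. The adapter path, system prompt, and parameter values are hypothetical examples, not recommendations.

```python
from typing import Optional


def build_modelfile(base: str, system: Optional[str] = None,
                    adapter: Optional[str] = None,
                    params: Optional[dict] = None) -> str:
    """Assemble Modelfile text: FROM, optional ADAPTER, PARAMETER lines, SYSTEM prompt."""
    lines = [f"FROM {base}"]
    if adapter:
        # Path to a fine-tuned LoRA adapter trained elsewhere (Ollama does not train it)
        lines.append(f"ADAPTER {adapter}")
    for key, value in (params or {}).items():
        lines.append(f"PARAMETER {key} {value}")
    if system:
        # Triple quotes let the system prompt span several lines
        lines.append(f'SYSTEM """{system}"""')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_modelfile(
        "deepseek-r1:8b",
        system="You are a concise assistant for Python questions.",
        params={"temperature": 0.6, "num_ctx": 8192},
    ))
```

Saving the output as a file named Modelfile and running ollama create my-r1 -f Modelfile would register the customized model (my-r1 is a hypothetical name), after which ollama run my-r1 starts it like any other model.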

4.4 Use in AI Programming Tools

Using the model from the terminal is not the main scenario, of course; to make it practical, we need to use the service from other chat tools or AI programming tools. Cloud Studio does not provide an external access address, so a tunneling tool such as ngrok or Cpolar is a good solution.

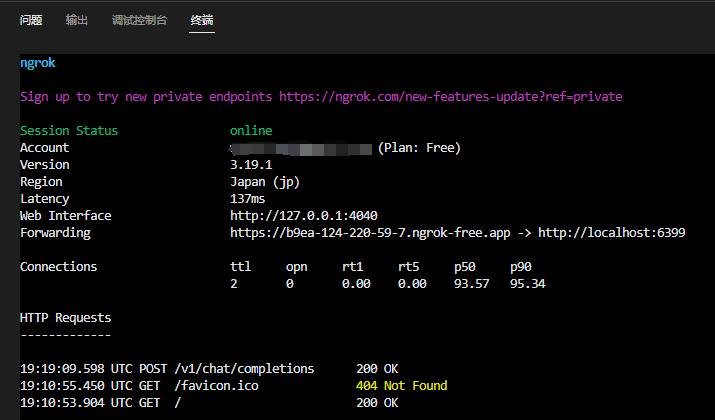

Only the general approach is given here, not a detailed walkthrough: first, find the port Ollama is listening on inside the environment; second, install ngrok; third, map the Ollama port through ngrok. Once the tunnel starts, you get an address like the following:

Note: assuming your ollama serve process is already running on port 6399, the following command starts ngrok and maps that port to the public internet:

ngrok http 6399

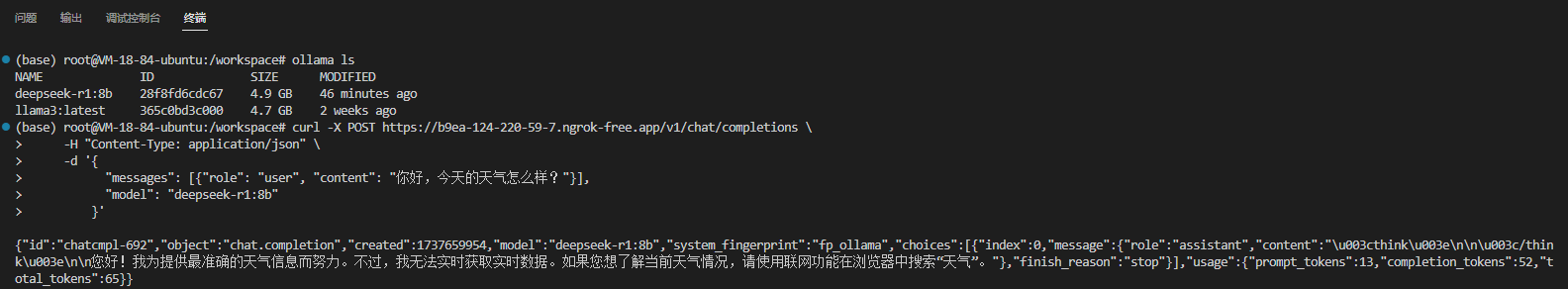
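For the first step, finding Ollama's port, one option is to probe likely ports for Ollama's /api/version endpoint, which is the HTTP counterpart of ollama --version. The candidate list below is an assumption: 11434 is Ollama's default port and 6399 is the port this article assumes; add any others you suspect.

```python
import http.client
import json
import urllib.request
from typing import Optional

# 11434 is Ollama's default port; 6399 is the port this article assumes for Cloud Studio.
CANDIDATE_PORTS = (11434, 6399)


def parse_version(body: bytes) -> Optional[str]:
    """Return the "version" field of an /api/version response, or None if it is not one."""
    try:
        data = json.loads(body)
    except ValueError:  # also covers bytes that cannot be decoded
        return None
    return data.get("version") if isinstance(data, dict) else None


def find_ollama(host: str = "127.0.0.1", ports=CANDIDATE_PORTS,
                timeout: float = 2.0) -> dict:
    """Map every port that answers like an Ollama server to the version it reports."""
    found = {}
    for port in ports:
        url = f"http://{host}:{port}/api/version"
        try:
            with urllib.request.urlopen(url, timeout=timeout) as resp:
                version = parse_version(resp.read())
        except (OSError, http.client.HTTPException):
            continue  # nothing listening, timed out, HTTP error, or not speaking HTTP
        if version:
            found[port] = version
    return found
```

Calling find_ollama() inside Cloud Studio returns a dictionary keyed by each responding port; that port is the one to pass to ngrok http.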

Verify that the public address works (use the full address):

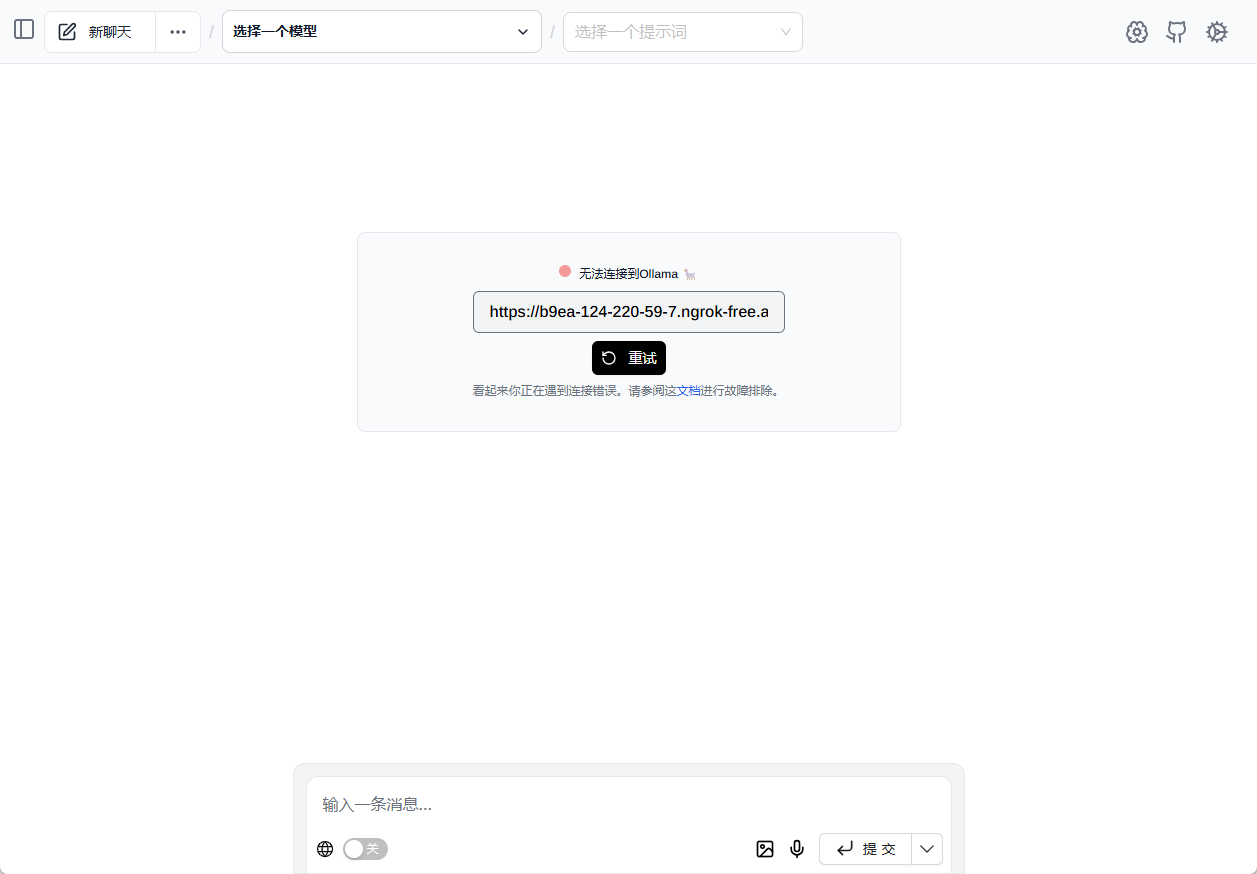
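The same check can be scripted. An Ollama server answers its root path with the plain text "Ollama is running", and API paths such as /api/tags can be appended to the public base address. In the sketch below, https://xxx.ngrok-free.app/ is the same placeholder the article uses; substitute the address ngrok printed for you.

```python
import urllib.request
from urllib.parse import urljoin

# Placeholder, as in the article: replace with the address ngrok prints.
PUBLIC_URL = "https://xxx.ngrok-free.app/"


def full_url(base: str, path: str) -> str:
    """Join the public base address and an API path without doubling the slash."""
    return urljoin(base if base.endswith("/") else base + "/", path.lstrip("/"))


def check_public(base: str = PUBLIC_URL, timeout: float = 10.0) -> str:
    """Fetch the root path through the tunnel and return the response text."""
    with urllib.request.urlopen(full_url(base, "/"), timeout=timeout) as resp:
        return resp.read().decode("utf-8", "replace")
```

If check_public() returns "Ollama is running", the tunnel reaches the server; full_url(PUBLIC_URL, "/api/tags") gives the address that lists the available models.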

We recommend the Page Assist browser extension for trying out Ollama-related features: enter https://xxx.ngrok-free.app/ directly and it automatically loads all your Ollama models.

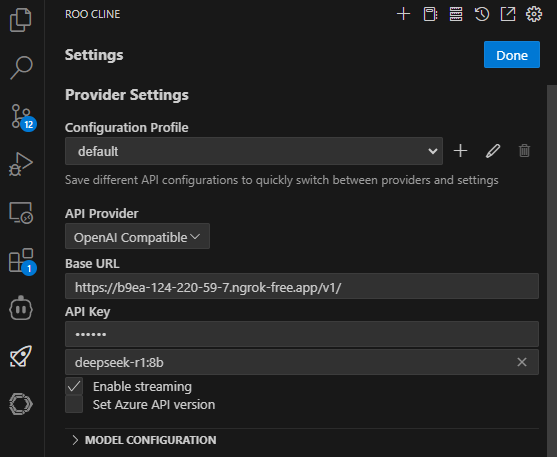

Configure the API in the Roo Code (Roo Cline) client (Roo Code is an excellent AI programming plugin):

Note that v1 must be appended to the original URL; the API key is optional.

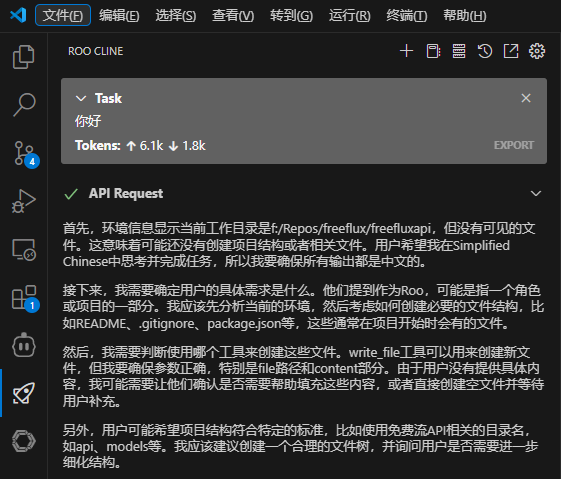

Save the settings, then send a test message in the chat to confirm the model replies.
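The v1 suffix points clients at Ollama's OpenAI-compatible API, which lives under /v1, and Ollama ignores the API key, which is why it is optional. The sketch below tests that endpoint directly, using the OpenAI-style /chat/completions request and response shape. The ngrok address is the article's placeholder and the model name assumes deepseek-r1:8b has been pulled.

```python
import json
import urllib.request
from typing import Optional

PUBLIC_URL = "https://xxx.ngrok-free.app"  # placeholder: use the address ngrok prints


def openai_base_url(url: str) -> str:
    """Append /v1 (Ollama's OpenAI-compatible prefix) unless it is already there."""
    url = url.rstrip("/")
    return url if url.endswith("/v1") else url + "/v1"


def chat_completion(prompt: str, model: str = "deepseek-r1:8b",
                    base: str = PUBLIC_URL, api_key: Optional[str] = None,
                    timeout: float = 300.0) -> str:
    """Send one OpenAI-style chat request and return the first choice's text."""
    headers = {"Content-Type": "application/json"}
    if api_key:  # Ollama ignores the key; some clients insist on sending one anyway
        headers["Authorization"] = f"Bearer {api_key}"
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    req = urllib.request.Request(f"{openai_base_url(base)}/chat/completions",
                                 data=body, headers=headers, method="POST")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["choices"][0]["message"]["content"]
```

If chat_completion("Say hello.") returns text, any OpenAI-compatible client, Roo Code included, should work with the same base URL.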

5. Summary

With the free GPU resources of Tencent Cloud's Cloud Studio, combined with Ollama and DeepSeek-R1, we can easily deploy and run large models in the cloud without worrying about high hardware costs. Whether for simple conversations or complex tasks, DeepSeek-R1 provides strong support. We hope this article helps you deploy DeepSeek-R1 smoothly and start your journey exploring large models.

© Copyright notice

The copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
