n8n Self-hosted AI Starter Kit: an open source template for quickly building a local AI environment

General Introduction

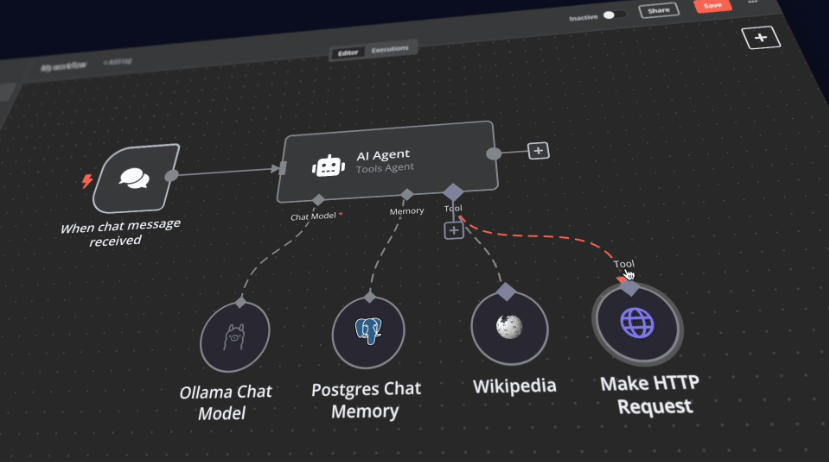

The n8n Self-Hosted AI Starter Kit is an open source Docker Compose template that quickly initializes a comprehensive local AI and low-code development environment. Curated by the n8n team, the kit combines the self-hosted n8n platform with a set of compatible AI products and components so that users can build self-hosted AI workflows quickly. It includes the n8n low-code platform, Ollama for running local LLMs across platforms, the Qdrant high-performance vector store, and a PostgreSQL database. It suits AI use cases such as intelligent agents, document summarization, intelligent chatbots, and private financial document analysis.

Function List

- n8n low-code platform: Provides over 400 integrations and advanced AI components for building workflows quickly.

- Ollama Platform: Cross-platform LLM platform for installing and running the latest local LLMs.

- Qdrant Vector Storage: Open source high-performance vector storage with a comprehensive API.

- PostgreSQL database: A reliable database for handling large amounts of data.

- Intelligent Agents: AI agents for scheduling meetings and tasks.

- Document summarization: Summarize company PDF documents securely, without data leaks.

- Intelligent Chatbots: Intelligent Slack bots that enhance company communications and IT operations.

- Private financial document analysis: Analyze financial documents privately at minimal cost.

Usage Guide

Installation process

- Clone the repository:

git clone https://github.com/n8n-io/self-hosted-ai-starter-kit.git

cd self-hosted-ai-starter-kit

- Running n8n with Docker Compose:

- For Nvidia GPU users:

docker compose --profile gpu-nvidia up

Note: If you have not used an Nvidia GPU with Docker before, follow the instructions for Ollama Docker.

- For Mac/Apple Silicon users:

- Option 1: Run entirely on the CPU:

docker compose --profile cpu up

- Option 2: Run Ollama on your Mac and connect it to the n8n instance:

docker compose up

Then change the Ollama credentials to use http://host.docker.internal:11434/ as the host.

- For other users:

docker compose --profile cpu up
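The per-platform choices above could be wrapped in a small helper. The following is a minimal sketch and not part of the starter kit: the function name `compose_up_command` and the platform labels (`nvidia`, `mac-cpu`, `mac-local-ollama`, `other`) are hypothetical, and only the printed `docker compose` commands come from the instructions above.

```shell
#!/bin/sh
# Hypothetical helper: print the Docker Compose command for a platform label.
# The labels are illustrative; the commands are the ones listed in this guide.
compose_up_command() {
  case "${1:-}" in
    nvidia)
      echo "docker compose --profile gpu-nvidia up" ;;
    mac-cpu|other)
      echo "docker compose --profile cpu up" ;;
    mac-local-ollama)
      # Ollama runs on the Mac itself, so no profile is selected.
      echo "docker compose up" ;;
    *)
      echo "unknown platform: ${1:-}" >&2
      return 1 ;;
  esac
}
```

For example, `compose_up_command nvidia` prints the GPU command, which you can then run from the repository directory.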

Guidelines for use

- Start n8n:

- Visit http://localhost:5678 to open the n8n interface.

- Log in with your existing account, or create one if this is your first visit.

- Creating workflows:

- In the n8n interface, click "New Workflow".

- Drag the desired node from the left menu to the workspace.

- Configure the parameters and connections for each node.

- Running a workflow:

- Once the configuration is complete, click the "Run" button to execute the workflow.

- View workflow execution results and logs.

Quick start and use

At the heart of the Self-Hosted AI Starter Kit is a Docker Compose file with pre-configured network and storage settings, reducing the need for additional installation. Once you have completed the installation steps, simply follow the steps below to get started:

- Open http://localhost:5678/ to set up n8n. You only need to do this once.

- Open the included workflow: http://localhost:5678/workflow/srOnR8PAY3u4RSwb.

- Select Test Workflow to start running the workflow.

- If this is your first time running this workflow, you may need to wait until the Ollama download of Llama 3.2 is complete. You can check the docker console logs for progress.
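Because the containers can take a while to come up on first start, it may help to wait until n8n answers on its port before opening the browser. The sketch below is one possible way to do that, assuming `curl` is installed; the function name `wait_for_url` and its default attempt count and delay are hypothetical choices, not something the starter kit provides.

```shell
#!/bin/sh
# Hypothetical helper: poll a URL until it responds or the attempts run out.
# Usage: wait_for_url URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_url() {
  url=$1
  attempts=${2:-30}
  delay=${3:-2}
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -f: treat HTTP errors as failure; -s: silent; -o /dev/null: discard body
    if curl -fs -o /dev/null --max-time 5 "$url"; then
      echo "ready after $i attempt(s): $url"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "gave up after $attempts attempt(s): $url" >&2
  return 1
}
```

A typical call would be `wait_for_url http://localhost:5678/ && echo "n8n is up"`. The function only checks that the address responds; it does not tell you whether the Llama 3.2 download has finished.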

You can open n8n at any time by visiting http://localhost:5678/.

With your n8n instance, you have access to over 400 integrations as well as a set of basic and advanced AI nodes, such as the AI agent, text classifier, and information extractor nodes. To keep everything local, use the Ollama node as your language model and Qdrant as your vector store.

Note: This starter kit is designed to help you get started with self-hosted AI workflows. While it is not fully optimized for production environments, it combines robust components that work well together, which makes it a good fit for proof-of-concept projects. You can customize it to suit your needs.

Upgrade Instructions

For Nvidia GPU setups:

docker compose --profile gpu-nvidia pull

docker compose create && docker compose --profile gpu-nvidia up

For Mac / Apple Silicon users:

docker compose pull

docker compose create && docker compose up

For non-GPU setups:

docker compose --profile cpu pull

docker compose create && docker compose --profile cpu up© Copyright notes

Article copyright: AI Sharing Circle. Please do not reproduce without permission.
