Sana: Fast High-Resolution Image Generation with an Ultra-Small 0.6B Model That Runs on Laptop GPUs

General Introduction

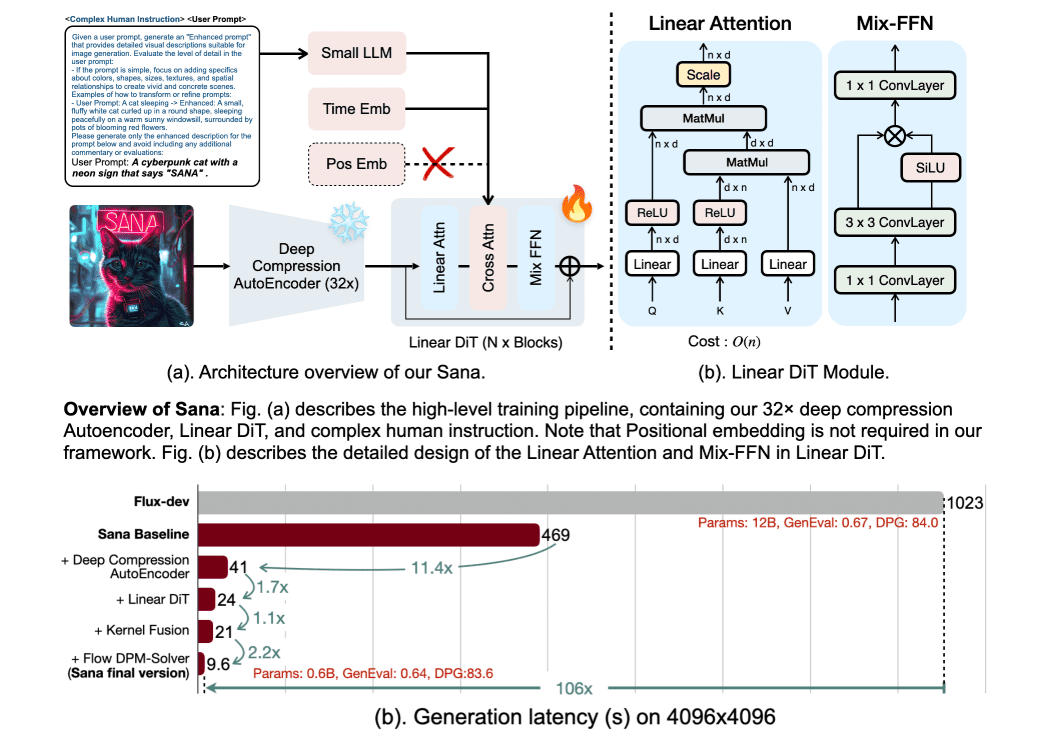

Sana is an efficient high-resolution image generation framework developed by NVIDIA Labs that generates images at resolutions up to 4096 × 4096 in seconds. It combines a linear diffusion transformer with a deep compression auto-encoder to speed up image generation and improve quality while reducing the compute required. The framework can run on ordinary laptop GPUs, which keeps the cost of content creation low.
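To make the "linear" part of the linear diffusion transformer concrete, here is a minimal pure-Python sketch of kernel-based linear attention next to its quadratic reference form. It is an illustration only, not Sana's implementation: the ReLU feature map, the `eps` term, and all function names are assumptions made for this example.

```python
def relu(vec):
    return [max(0.0, x) for x in vec]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def quadratic_attention(queries, keys, values, eps=1e-6):
    """Reference form: computes all N x N similarity weights explicitly."""
    dim_v = len(values[0])
    out = []
    for q in queries:
        fq = relu(q)
        weights = [dot(fq, relu(k)) for k in keys]
        norm = sum(weights) + eps
        out.append([sum(w * v[c] for w, v in zip(weights, values)) / norm
                    for c in range(dim_v)])
    return out


def linear_attention(queries, keys, values, eps=1e-6):
    """Same result, but keys and values are summarized once, so cost grows linearly with N."""
    d, dim_v = len(keys[0]), len(values[0])
    kv = [[0.0] * dim_v for _ in range(d)]  # sum over tokens of relu(k)^T v
    k_sum = [0.0] * d                       # sum over tokens of relu(k)
    for k, v in zip(keys, values):
        fk = relu(k)
        for r in range(d):
            k_sum[r] += fk[r]
            for c in range(dim_v):
                kv[r][c] += fk[r] * v[c]
    out = []
    for q in queries:
        fq = relu(q)
        norm = dot(fq, k_sum) + eps
        out.append([sum(fq[r] * kv[r][c] for r in range(d)) / norm
                    for c in range(dim_v)])
    return out
```

Both functions return the same values up to floating-point rounding. The difference is that `linear_attention` never builds the N × N weight matrix, which is what keeps the cost manageable when a high-resolution image produces many tokens.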

Online demo: https://nv-sana.mit.edu/

Feature List

- High resolution image generation: Supports generation of images up to 4096 × 4096 resolution.

- Linear diffusion transformer: Uses a linear attention mechanism to make high-resolution image generation more efficient.

- Deep compression auto-encoder: Compresses images by up to 32×, reducing the number of latent tokens and improving training and generation efficiency.

- Text-to-image generation: Uses a decoder-only text encoder to improve text-image alignment.

- Efficient training and sampling: Flow-DPM-Solver is used to reduce sampling steps and accelerate convergence.

- Low-cost deployment: Supports running on 16GB laptop GPUs and generates 1024 × 1024 resolution images in less than 1 second.
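The effect of 32× compression on token count can be worked out with simple arithmetic. The sketch below assumes square images and, for comparison, a conventional 8× auto-encoder followed by 2 × 2 patching; that baseline and the function name are assumptions for illustration and do not come from this article.

```python
def latent_tokens(height, width, downsample, patch_size=1):
    """Number of tokens the transformer processes for one image."""
    stride = downsample * patch_size
    if height % stride or width % stride:
        raise ValueError("image size must be divisible by downsample * patch_size")
    return (height // stride) * (width // stride)


for side in (1024, 4096):
    baseline = latent_tokens(side, side, downsample=8, patch_size=2)  # assumed baseline
    compressed = latent_tokens(side, side, downsample=32)             # 32x auto-encoder
    print(f"{side}x{side}: baseline {baseline} tokens, 32x compression {compressed} tokens")
```

Under these assumptions the 32× auto-encoder yields a quarter as many tokens at every resolution, which is where the training and sampling savings come from.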

Usage Guide

Installation

- Make sure Python >= 3.10.0 is installed; Anaconda or Miniconda is recommended.

- Install PyTorch >= 2.0.1+cu12.1.

- Clone the Sana repository:

      git clone https://github.com/NVlabs/Sana.git
      cd Sana

- Run the environment setup script:

      ./environment_setup.sh sana

  Alternatively, install each component step by step as described in environment_setup.sh.
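Before running the setup script, it can help to confirm that the interpreter and PyTorch meet the stated minimums. The following is a small sketch of such a check; the helper names are hypothetical, and the version parsing is deliberately simple (it ignores build suffixes such as `+cu121`).

```python
import re
import sys


def parse_version(text):
    """Turn '2.0.1+cu121' into (2, 0, 1), ignoring any local build suffix."""
    core = text.split("+", 1)[0]
    return tuple(int(part) for part in re.findall(r"\d+", core)[:3])


def meets_minimum(found, minimum):
    return parse_version(found) >= parse_version(minimum)


def check_environment():
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    python_version = ".".join(str(n) for n in sys.version_info[:3])
    if not meets_minimum(python_version, "3.10.0"):
        problems.append(f"Python {python_version} is older than 3.10.0")
    try:
        import torch
    except ImportError:
        problems.append("PyTorch is not installed")
    else:
        if not meets_minimum(torch.__version__, "2.0.1"):
            problems.append(f"PyTorch {torch.__version__} is older than 2.0.1")
    return problems


if __name__ == "__main__":
    for line in check_environment() or ["Environment meets the stated minimums"]:
        print(line)
```

The check only compares version numbers; it does not verify that the installed PyTorch build was compiled against CUDA 12.1.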

Usage

Hardware requirements

- The 0.6B model requires 9GB of VRAM and the 1.6B model requires 12GB of VRAM. The quantized version requires less than 8GB of VRAM for inference.
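As a quick way to apply these figures, the sketch below maps an amount of GPU memory to the largest variant that should fit. The function name is hypothetical, and treating "less than 8GB" as an 8 GB ceiling for the quantized version is an assumption; the text does not say which model size the quantized figure refers to.

```python
# VRAM figures quoted in the hardware requirements, in GB.
REQUIRED_VRAM_GB = {"Sana 0.6B": 9, "Sana 1.6B": 12}
# Assumption: "less than 8GB" is treated as an 8 GB ceiling.
QUANTIZED_VRAM_GB = 8


def recommend_variant(vram_gb):
    """Return the largest variant that fits in vram_gb, or None if nothing fits."""
    fitting = [name for name, need in REQUIRED_VRAM_GB.items() if vram_gb >= need]
    if fitting:
        return max(fitting, key=REQUIRED_VRAM_GB.get)
    if vram_gb >= QUANTIZED_VRAM_GB:
        return "quantized version"
    return None


if __name__ == "__main__":
    for vram in (16, 10, 8, 4):
        print(f"{vram} GB -> {recommend_variant(vram)}")
```

GPUs typically report slightly less usable memory than their nominal size, so a card sitting exactly on a threshold may need the next smaller option.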

Quick Start

- Launch the official online demo using Gradio:

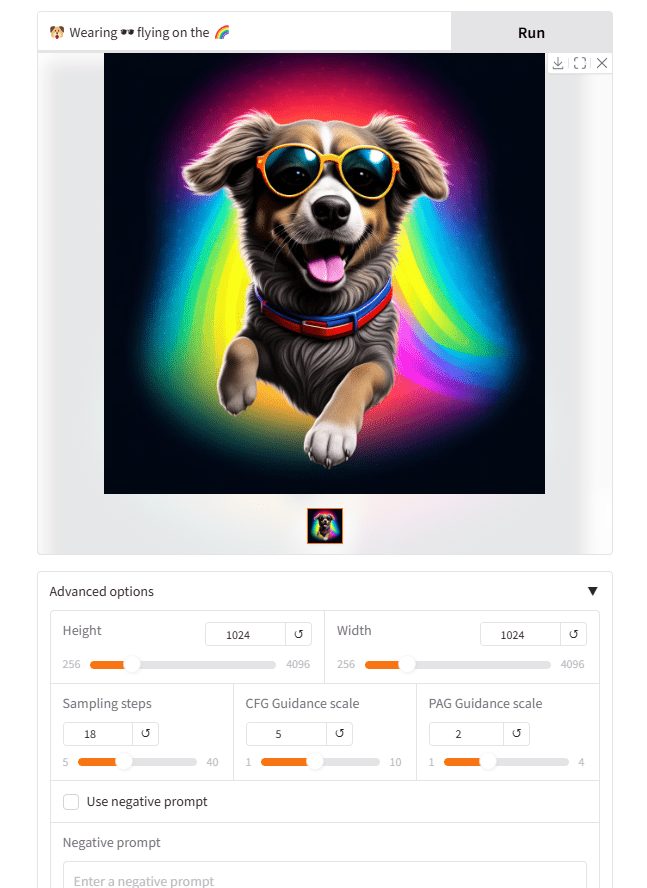

      DEMO_PORT=15432 \
      python app/app_sana.py \
          --config=configs/sana_config/1024ms/Sana_1600M_img1024.yaml \
          --model_path=hf://Efficient-Large-Model/Sana_1600M_1024px/checkpoints/Sana_1600M_1024px.pth

- Run the inference code to generate an image:

      import os

      import torch
      from app.sana_pipeline import SanaPipeline
      from torchvision.utils import save_image

      # Use the first CUDA device if available; fix the seed for reproducible output.
      device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
      generator = torch.Generator(device=device).manual_seed(42)

      # Build the pipeline from its config, then load the pretrained checkpoint.
      sana = SanaPipeline("configs/sana_config/1024ms/Sana_1600M_img1024.yaml")
      sana.from_pretrained("hf://Efficient-Large-Model/Sana_1600M_1024px/checkpoints/Sana_1600M_1024px.pth")
      prompt = 'a cyberpunk cat with a neon sign that says "Sana"'

      image = sana(
          prompt=prompt,
          height=1024,
          width=1024,
          guidance_scale=5.0,
          pag_guidance_scale=2.0,
          num_inference_steps=18,
          generator=generator,
      )

      # Make sure the output directory exists before saving.
      os.makedirs("output", exist_ok=True)
      save_image(image, 'output/sana.png', nrow=1, normalize=True, value_range=(-1, 1))
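The `normalize=True, value_range=(-1, 1)` arguments passed to `save_image` tell torchvision that pixel values lie in [-1, 1] and should be rescaled before writing the file. The scalar sketch below illustrates that mapping as I understand it (clamp, rescale to [0, 1], then convert to an 8-bit value); `to_pixel` is a hypothetical helper for illustration, not a torchvision function.

```python
def to_pixel(value, low=-1.0, high=1.0):
    """Map one model output value in [low, high] to an 8-bit pixel value."""
    clamped = min(max(value, low), high)             # out-of-range values are clipped
    unit = (clamped - low) / max(high - low, 1e-5)   # rescale to [0, 1]
    return int(min(max(unit * 255 + 0.5, 0), 255))   # round and convert to 0..255


if __name__ == "__main__":
    for sample in (-1.0, 0.0, 0.5, 1.0, 2.0):
        print(sample, "->", to_pixel(sample))
```

If the range passed to `save_image` does not match the pipeline's actual output range, the saved image will look washed out or clipped, so the two need to agree.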

Training the model

- Prepare the dataset in the following format:

      asset/example_data
      ├── AAA.txt
      ├── AAA.png
      ├── BCC.txt
      ├── BCC.png
      └── CCC.txt

- Initiate training:

      bash train_scripts/train.sh \
          configs/sana_config/512ms/Sana_600M_img512.yaml \
          --data.data_dir="asset/example_data" \
          --data.type=SanaImgDataset \
          --model.multi_scale=false \
          --train.train_batch_size=32
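The dataset layout pairs each image with a same-named caption file, so it is worth checking for missing partners before starting a long training run. The sketch below is one way to do that; the function name is hypothetical, and the assumption that every `.png` needs a matching `.txt` (and vice versa) is inferred from the example layout rather than stated by the Sana documentation.

```python
from pathlib import Path


def find_unpaired(data_dir, image_ext=".png", caption_ext=".txt"):
    """Return (images without captions, captions without images) as sorted name lists."""
    root = Path(data_dir)
    images = {path.stem for path in root.glob(f"*{image_ext}")}
    captions = {path.stem for path in root.glob(f"*{caption_ext}")}
    return sorted(images - captions), sorted(captions - images)


if __name__ == "__main__":
    missing_captions, missing_images = find_unpaired("asset/example_data")
    for name in missing_captions:
        print(f"{name}.png has no caption file")
    for name in missing_images:
        print(f"{name}.txt has no image file")
```

Only the top level of the directory is scanned, matching the flat layout shown in the example.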

© Copyright notice

This article is copyrighted by AI Sharing Circle; please do not reproduce it without permission.
