Sam Altman: OpenAI Confirms Release of AI Agents to Revolutionize Enterprise Efficiency

Yesterday, OpenAI CEO and co-founder Sam Altman posted his latest in-depth article, Reflections, on his personal blog.

The article mainly looks back over the nine years since OpenAI was founded: from its early days, when the outside world paid it little attention, to the release of ChatGPT in 2022, which set off a global AI revolution and sent the user count soaring past 300 million, and then to his sudden dismissal, which threw the whole of OpenAI into chaos.

This also made him realize that his management had been quite a failure. Fortunately, many people helped him during the recovery, and the OpenAI board was subsequently revamped to make its governance more diverse.

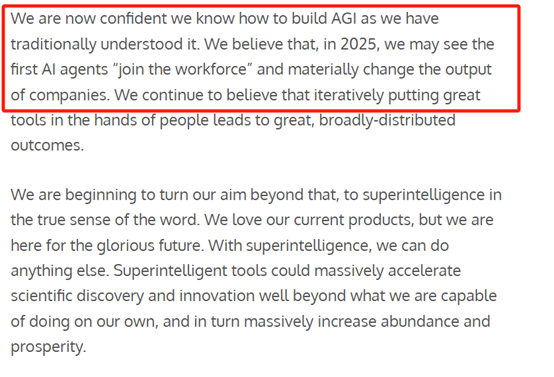

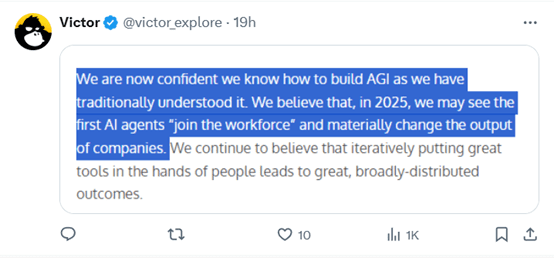

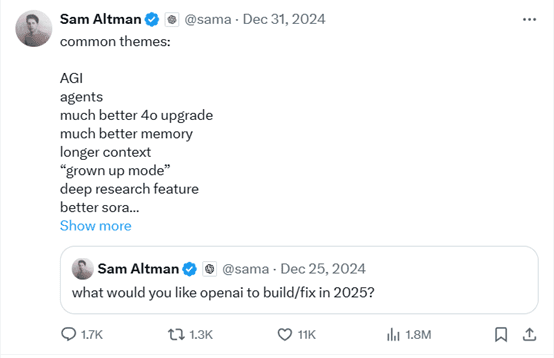

In terms of the technology outlook, Sam specifically writes: "We are now confident that we can build AGI as traditionally understood. In 2025, OpenAI will bring online the first AI Agents to join the 'workforce' and materially change the efficiency of companies' outputs. We firmly believe that continually putting powerful tools in people's hands leads to great, widely distributed results."

Agents are one of the most important vehicles for OpenAI to develop and apply AGI, making it easy to automate many repetitive, complex, and cumbersome business processes end to end.

This is because the core purpose of AGI is to automate different business processes, freeing people from boring and meaningless work so that they can spend their time and energy on work with higher business value.

Many readers of this reflective article were also greatly interested in the AI Agents it mentions, which are expected to have a huge impact on the workforce in 2025. Their reactions included the following.

People don't realize that large models cannot be artificial general intelligence (AGI). They simply cannot be; they are about probability and mathematical operations. Logically, they cannot have the ability to perceive. That would require stochasticity and the ability to be an individual, and those are not things that can be achieved from historical data.

AI Agents might be a presence that enables AGI, but how is something that is just calculating the most likely next word in a reply going to be able to act independently and translate that into actual action?

Currently, AGI is defined as a system of Agents capable of producing at least as much economic output on a computer as any human. No one mentions the ability to perceive (sentience), as that is somewhat irrelevant in this context.

I also circled the technology outlook part of the article.

An amazing reflection. I am struck by the mere fact that we are talking about, mentioning and discussing "superintelligence" and even aiming for it in early 2025 - which means that artificial general intelligence (AGI) has already been realized.

I can't wait to see AI Agents join the workforce, automate heavy work, and continue to evolve.

So OpenAI will release AI Agents and AGI this year, right?

In addition, when Sam laid out OpenAI's outlook for 2025, he deliberately placed Agents right after artificial general intelligence (AGI), which shows the importance he attaches to them.

Original text of Reflections

ChatGPT's second birthday was just over a month ago, and now we have moved into a new phase of models capable of complex reasoning. As the New Year always leads to contemplation, I'd like to share some personal thoughts on how things have gone so far and some of the things I've learned along the way.

As we get closer to AGI (artificial general intelligence), it's especially important to look at the company's progress. There's still a lot to understand, a lot we don't know, and it's still in the early stages. But we know a lot more than we did when we first started.

We founded OpenAI about 9 years ago because we believed that AGI was possible and that it could be the most impactful technology in human history. We wanted to figure out how to build it and make it broadly beneficial to humanity; we were eager to leave our mark on history. Our ambitions were extremely high, as was our conviction that this work might benefit society in extraordinary ways.

At the time, hardly anyone cared, and if anyone did, it was mostly because they didn't think we had a chance of success.

In 2022, OpenAI was still a quiet research lab working on a project tentatively called "Chatting with GPT-3.5" (we're much better at research than we are at naming). We had been watching people use the playground feature of our API and knew that developers really enjoyed talking to the models. We thought that building a demo product around this experience would show people something important about the future and help us improve the model and make it safer.

In the end, thankfully, we named it ChatGPT and launched it on November 30, 2022.

We had always known in the abstract that at some point we would reach a tipping point and the AI revolution would kick off. But we didn't know when that moment would be. To our surprise, this turned out to be it.

The launch of ChatGPT has unleashed a growth curve like we've never seen before - in our company, in our industry, and in the world at large. We're finally seeing the great benefits we've always hoped for from AI, and we can see more coming soon.

It hasn't been easy. The road hasn't been smooth and the right choices haven't been obvious.

Over the past two years, we've built an entire company around this new technology almost from scratch. There's no way to train employees except by doing, and no one can tell you exactly what to do when the technology category is brand new.

Building a company at high speed with such a lack of training can be a confusing process. It's usually two steps forward and one step back (sometimes even one step forward and two steps back). Mistakes are corrected along the way, but there really is no manual or signposts to follow when doing original work. Moving quickly through uncharted waters is an incredible experience, but it is also extremely stressful for all involved. Conflict and misunderstandings abound.

These years have been by far the most rewarding, interesting, awesome, fun, exhausting, stressful, and, particularly for the last two, unpleasant years of my life. The overwhelming feeling is gratitude; I know that one day I'll retire on our ranch, watch the plants grow, get a little bored, and then remember how cool it was to have the job I dreamed of doing since I was a kid. I try to remember that on any given Friday when seven things go horribly wrong by 1pm.

One Friday a little over a year ago, the main thing that went wrong that day was that I was abruptly fired from my job during a video call, and then the board posted a blog post about it right after we hung up. I was in a hotel room in Las Vegas. It felt, almost unexplainably, like a dream gone wrong.

Getting fired in public without warning set off a crazy few hours and a pretty crazy few days. The "fog of war" was the strangest part. None of us could get a satisfactory answer as to what had happened or why.

In my view, the whole affair was a major failure of governance by people of goodwill, including myself. Looking back, I certainly wish I had done things differently then, and I would like to believe that I am a better and more thoughtful leader now than I was a year ago.

I also recognize the importance of a board with diverse perspectives and broad experience in managing a complex set of challenges. Good governance requires a great deal of trust and credibility. I applaud the fact that so many people are working together to build a stronger governance system for OpenAI that will allow us to pursue our mission of ensuring that AGI benefits all of humanity.

My biggest takeaway is how grateful I am and how many people I am grateful to: to everyone who works at OpenAI and chooses to spend their time and energy pursuing this dream, to our friends who have helped us through times of crisis, to our partners and customers who have supported us and entrusted us to help them succeed, and to the people in my life who have shown me that they care about me.

We all got back to work in a much more cohesive and positive way, and I'm very proud of how focused we've been since then. We've conducted some of arguably the best research we've ever done. We've grown our weekly active users from about 100 million to over 300 million. Most importantly, we continue to put technology out into the world that people really love and that solves real problems.

Nine years ago, we really didn't know what we'd end up with; even now, we only have a general idea. The development of artificial intelligence has taken many twists and turns, and we expect there will be more in the future.

Some of the twists and turns have been enjoyable; others have been tough. It's been fun to watch a series of research miracles unfold, and many of the naysayers have become true believers. We've also seen some colleagues leave and become competitors. Turnover is common as teams get bigger, and OpenAI is growing very fast. I think this is somewhat inevitable - startups typically have a lot of turnover at each new major level of scale, and at OpenAI, the numbers go up by orders of magnitude every few months.

The last two years have been like ten years in an average company. When any company grows and evolves so quickly, interests naturally diverge. And any company that is a leader in an important industry gets attacked by many people for a variety of reasons, especially when they are trying to compete with it.

Our vision will not change; our strategy will continue to evolve. For example, in the beginning we didn't know we had to build a product company; we thought we were just going to do great research. We also didn't know we would need such huge amounts of money. There are new things now that we didn't understand a few years ago but must now build, and there will be new things in the future that we can barely imagine now.

We are proud of our track record in research and deployment to date and are committed to continuing to advance our thinking on safety and benefit sharing. We continue to believe that the best way to make AI systems safe is to release them into the world iteratively and incrementally, giving society time to adapt and evolve with the technology, learn from experience, and continue to make the technology safer. We believe in the importance of being a world leader in safety and alignment research, and guiding that research with feedback from real-world applications.

We are now confident that we know how to build AGI as traditionally understood, and we believe that in 2025 we may see the first AI Agents "join the workforce" and materially change the efficiency of a company's output. We firmly believe that putting powerful tools into people's hands will lead to great, widely distributed results.

We're starting to turn our sights further afield, to superintelligence in the true sense of the word. We love our current products, but we're in it for the glorious future. With superintelligence, we can do anything else. Superintelligent tools could dramatically accelerate scientific discovery and innovation far beyond what we can do on our own, and in turn dramatically increase abundance and prosperity.

This sounds like science fiction right now, and even talking about it is a little crazy. That's okay - we've been through this before and have no problem being in this situation again. We're pretty confident that over the next few years everyone will see what we see, and that proceeding with great caution while still maximizing broad benefits and empowerment is so important. Given the possibilities of our work, OpenAI can't be an ordinary company.

How fortunate and humbling it is to be able to play a role in this work.

Thanks to Josh Tyrangiel for prompting me to write this. I wish I had more time.

There were a lot of people who did an incredible amount of work to help OpenAI and me personally during those days, but two people stood out.

Ron Conway and Brian Chesky have gone so far beyond the call of duty that I don't even know how to describe it. I've certainly heard about Ron's abilities and tenacity over the years, and I've spent a lot of time with Brian over the last couple years and gotten tons of help and advice.

But there's nothing like working side-by-side with people to see what they're really capable of. I have reason to believe that OpenAI might have fallen apart without their help; they worked around the clock for days on end until things were done.

Although they worked exceptionally hard, they always stayed calm, thought clearly about strategy, and gave excellent advice. They prevented me from making several mistakes without making a single one themselves. They drew on their extensive networks for everything that was needed and were able to deal with many complex situations. And I'm sure they did a lot of things I didn't know about.

However, what I will remember most is their concern, compassion and support.

I originally thought I knew what it was like to support a founder as well as a company, and to some small degree I do. But I'd never seen, or even heard of, what people like them do before, and now I understand more fully why they have legendary status. They are different, but both fully deserve their truly unique reputations, and they are similar in their exceptional problem-solving and ability to help others, as well as their unwavering commitment in difficult times. The tech industry is far better off with them both in it.

There are others like them; it's something very special about our industry and they do more than people realize to help make everything run smoothly. I look forward to giving back to others.

On a personal note, a special thanks to Ollie for that weekend and for his continued support; he was incredible in every way and no one could have asked for a better companion.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
