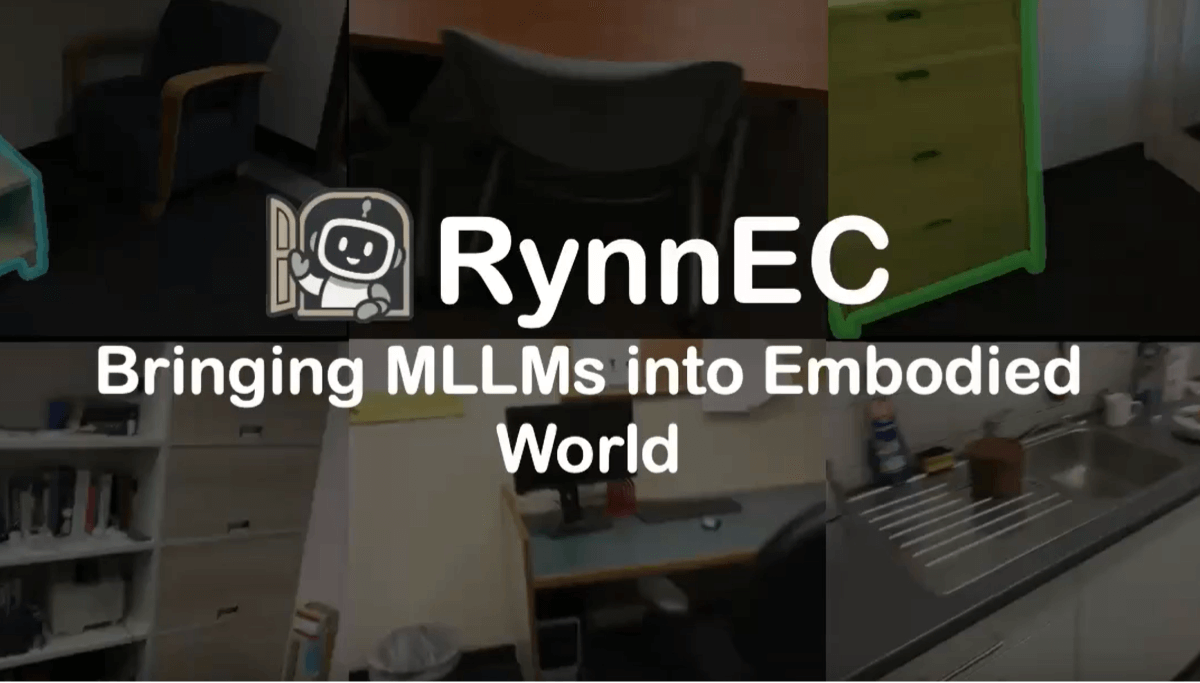

RynnEC: Alibaba DAMO Academy's open-source world understanding model

What is RynnEC?

RynnEC is a world understanding model released by Alibaba DAMO Academy for embodied intelligence tasks. Built on multimodal fusion of video data and natural language, it can analyze objects in a scene along multiple dimensions and supports object understanding, spatial perception, and video object segmentation. RynnEC does not require a 3D model: it builds continuous spatial perception from video sequences alone and can carry out tasks from natural language instructions. Potential applications include home service robots, industrial automation, intelligent security, medical assistance, and education and training, where it gives robots and intelligent systems strong semantic understanding to help them make sense of the physical world.

Features of RynnEC

- Multidimensional object understanding: Analyzes objects in a scene along 11 dimensions, such as location, function, and quantity, and accurately recognizes object attributes.

- Strong spatial perception: Builds continuous spatial perception and understands the spatial relationships between objects from video sequences alone, without 3D models.

- Video object segmentation: Accurately segments target objects or regions in a video according to natural language instructions, handling complex scenes.

- Flexible interaction: Supports natural language interaction, so users can issue commands to the model in real time and adjust its behavior dynamically.

- Multimodal fusion: Combines video data with natural language text, so RynnEC can process visual and linguistic information together to improve scene understanding.

- Efficient training and optimization: Uses large-scale annotated data and a staged training strategy to progressively improve multimodal understanding and generation, and supports LoRA for further performance gains.
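The article does not describe how RynnEC applies LoRA. As a general illustration of the technique itself: LoRA (low-rank adaptation) keeps a pretrained weight matrix W frozen and trains only a small low-rank update B·A, scaled by alpha / r, so a layer with d_out × d_in weights needs just r × (d_in + d_out) trainable parameters. The minimal pure-Python sketch below follows that standard formulation; the function names matmul, lora_weight, and count_params are hypothetical and are not part of RynnEC's code.

```python
# Generic LoRA sketch (hypothetical helper names, not RynnEC's implementation).
# A frozen weight W (d_out x d_in) is adapted by a low-rank update B @ A,
# where A is (r x d_in), B is (d_out x r), and the update is scaled by alpha / r.

def matmul(x, y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def lora_weight(w, a, b, alpha):
    """Return the effective weight W + (alpha / r) * (B @ A); W itself is unchanged."""
    r = len(a)  # the rank r equals the number of rows in A
    scale = alpha / r
    delta = matmul(b, a)  # low-rank update with the same shape as W
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def count_params(d_out, d_in, r):
    """Trainable parameters for full fine-tuning vs. LoRA with rank r."""
    return d_out * d_in, r * (d_in + d_out)
```

For example, for a 4096 × 4096 layer with rank r = 8, count_params(4096, 4096, 8) returns (16777216, 65536), so LoRA trains about 0.4% of that layer's parameters while the original weights stay frozen.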

RynnEC's core strengths

- Spatial perception without 3D modeling: Builds continuous spatial perception from video sequences alone, without additional 3D models, which reduces application cost and complexity.

- Multidimensional semantic understanding: Analyzes objects in a scene along multiple dimensions, providing richer semantic information and better understanding of complex scenes.

- Command-driven flexibility: Supports interaction through natural language commands, letting users adjust the model's behavior in real time to meet changing task requirements.

- Efficient training and optimization techniques: Uses a staged training strategy and LoRA to optimize model performance quickly and adapt to different application scenarios.

- Wide applicability: Suited to home, industrial, security, medical, education, and many other domains, with strong versatility and extensibility.

- Real-time and dynamic: Processes video data in real time and responds dynamically to user commands, making it suitable for scenarios that require fast responses.

- High-precision object segmentation: Uses text-driven video object segmentation to accurately recognize and segment targets in a video, improving the accuracy of task execution.

What is RynnEC's official website?

- GitHub repository: https://github.com/alibaba-damo-academy/RynnEC/

Who RynnEC is for

- Robotics R&D engineers: Multidimensional object understanding and spatial perception help engineers build smarter robots that accomplish complex tasks accurately.

- Artificial intelligence researchers: The open-source code and cutting-edge techniques give researchers rich experimental material for advancing research on multimodal fusion and embodied intelligence.

- Smart security system developers: Text-driven object segmentation and real-time spatial awareness enable rapid identification and tracking of targets, helping upgrade security systems.

- Industrial automation engineers: Multidimensional object understanding and precise operation can raise the automation level of industrial robots on complex production lines.

- Educators: The video object segmentation feature can help teach and visualize complex concepts, improving students' learning experience and comprehension.

© Copyright notice

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
